Vision is a complex problem on its own, and once you add the requirements and restrictions of a multirotor it becomes very hard to find a suitable algorithm.

- Requirements of system
  - Capable of dealing with outdoor conditions during the day
    - Global and local illumination changes
      - Caused by clouds, shadows (from both the copter and objects), changes in time of day and so on. This can really ruin color-based approaches if they make assumptions about the background (which they tend to do).
    - High amounts of IR
      - The sun is a big ole ball of IR, so IR-based approaches don't tend to work during the day without some heavy assumptions or very powerful IR LEDs.
    - Cluttered environment
      - Required so that no assumptions are made about the operating environment. Clutter can make model-based searches fail if similar objects exist in the scene.
    - Arbitrary backgrounds
      - Once again, this removes assumptions about the operating environment. Color-based approaches can fail here if they make assumptions about the background (which they tend to do).
  - Capable of dealing with the operating conditions of a multirotor UAV
    - Partial occlusion
      - The copter pitches and rolls in order to translate, which can move the target partially out of the image.
    - Heavy skew
      - As above, the pitching and rolling also skews the target in the image.
    - Blur
      - Depending on a large number of factors such as exposure, sensor quality, vibrations and speed of movement, there can be some blurring in the image.
    - High variability in scale
      - The vision system has to cope with the copter at as many heights as possible, from 20 cm to 20 m away from the target.
    - Arbitrary changes in orientation
      - The camera and copter can be oriented arbitrarily relative to the target, so the system has to be capable of dealing with that.
  - Needs to operate quickly enough to run in real time (5 Hz minimum)
    - If it's too slow, the control loop will not be able to deal with perturbations and compensate for error.
  - Limited processing power
    - Due to the time constraints and the onboard computer, expensive visual operations are not suitable.
  - Needs a high detection rate and a low false detection rate
    - Can't have the copter trying to land somewhere that's not the target.
  - Needs a way of determining the confidence of the detection
    - This is useful for data fusion, such as in a Kalman filter, and for determining whether there are false detections.
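As a rough illustration of how a detection confidence could feed a Kalman filter, here is a minimal one-dimensional sketch. It assumes the detector reports a confidence in (0, 1] and that the measurement variance is simply inflated as the confidence drops. The function name, the `base_var` value and that scaling rule are illustrative assumptions, not something tuned on the copter.

```python
def kalman_update(x, p, z, confidence, base_var=0.05):
    """One scalar Kalman measurement update.

    x, p       : prior state estimate and its variance
    z          : position measured by the vision system
    confidence : detection confidence in (0, 1] (hypothetical detector output)
    base_var   : measurement variance at full confidence (assumed value)
    """
    if not 0.0 < confidence <= 1.0:
        raise ValueError("confidence must be in (0, 1]")
    r = base_var / confidence   # low confidence -> noisier measurement
    k = p / (p + r)             # Kalman gain
    x_new = x + k * (z - x)     # pull the estimate towards the measurement
    p_new = (1.0 - k) * p       # the update always shrinks the variance
    return x_new, p_new


prior_x, prior_p = 0.0, 1.0
confident = kalman_update(prior_x, prior_p, z=1.0, confidence=0.9)
doubtful = kalman_update(prior_x, prior_p, z=1.0, confidence=0.1)
print(confident, doubtful)
```

With the same measurement, the low-confidence detection moves the estimate less than the high-confidence one, which is the behaviour you want when a detection might be false.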

Approaches tried and some results / notes

- Approaches tried

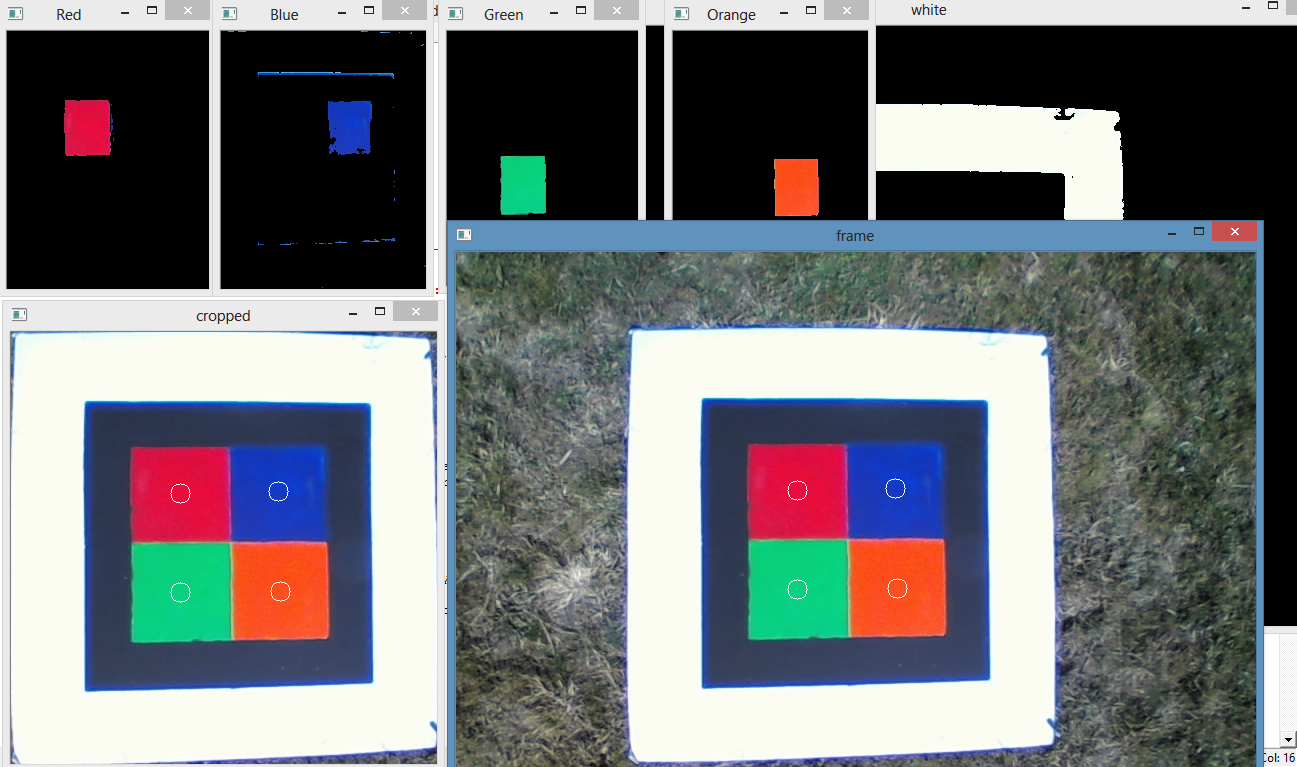

- Color blob detection of the centers, using the color to solve the correspondence problem and PnP for pose estimation

- Color blob detection with cropping (based on looking for the white outline) and PnP for pose estimation

These approaches ran at about 3 Hz, or about 15 Hz with the cropping approach when most of the image is cropped out, but PnP is very sensitive to the pixel coordinates of the detected colors. Color-based approaches are also sensitive to changes in illumination, and this approach doesn't tolerate any occlusion of the target.
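To give a feel for why pixel accuracy matters so much, here is a back-of-the-envelope sketch that uses a plain pinhole camera model rather than full PnP. The focal length (600 px) and the spacing between two blob centers (0.5 m) are made-up values for illustration, not the real camera or target.

```python
def range_from_pixels(focal_px, spacing_m, spacing_px):
    """Pinhole model: distance to a target whose two markers are
    spacing_m apart and appear spacing_px apart in the image."""
    return focal_px * spacing_m / spacing_px


FOCAL_PX = 600.0   # assumed focal length in pixels
SPACING_M = 0.5    # assumed distance between two blob centers


def range_error_for_one_pixel(true_range_m):
    """Range error when the detected spacing is one pixel too short."""
    true_px = FOCAL_PX * SPACING_M / true_range_m
    estimate = range_from_pixels(FOCAL_PX, SPACING_M, true_px - 1.0)
    return estimate - true_range_m


for height in (2.0, 10.0, 20.0):
    error = range_error_for_one_pixel(height)
    print(f"{height:5.1f} m -> {error:.2f} m error")
```

Under these assumptions the error from a single-pixel mistake grows roughly with the square of the distance, so a blob center that is slightly off barely matters near the ground but matters a lot at height.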

Example of working blob detection (circles are detected centers)

Example of breakdown of blob detection

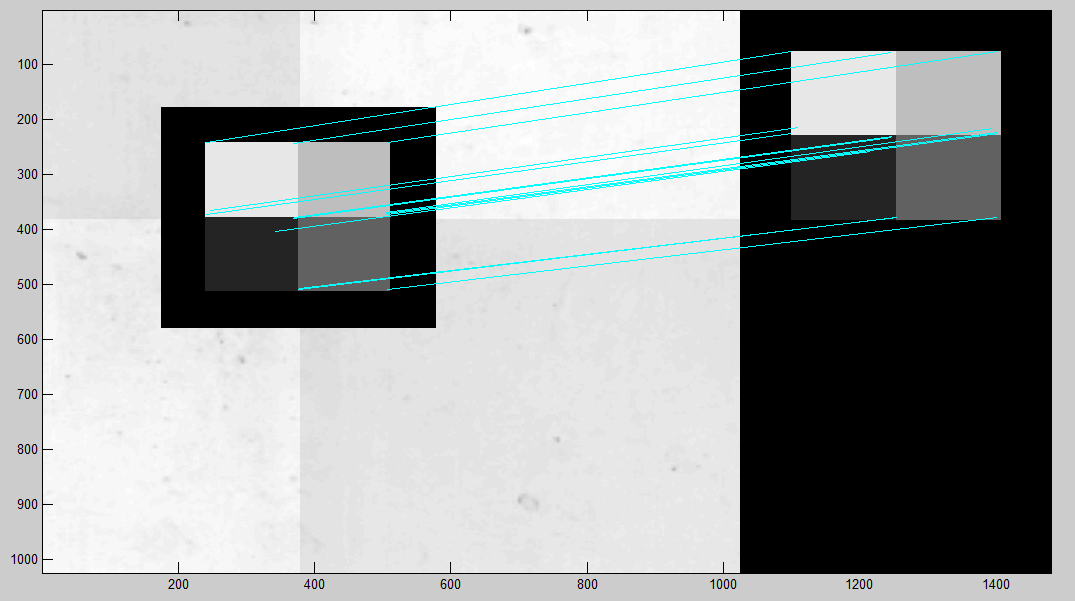

- SIFT / SURF / BRIEF / ORB based template search using RANSAC and RPP

Example of working output from the image-feature-based approach

Example of the image-feature-based approach breaking down
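For readers who have not met RANSAC, the sketch below shows the idea on matched keypoints using only the Python standard library. To keep it short it fits a 2D similarity transform (scale, rotation, translation), which is a simplification and not the model used on the copter. The function names, tolerance and iteration count are illustrative assumptions.

```python
import random


def fit_similarity(p1, p2, q1, q2):
    """Similarity transform q = a*p + b from two point pairs.
    Points are complex numbers x + 1j*y."""
    a = (q2 - q1) / (p2 - p1)
    b = q1 - a * p1
    return a, b


def ransac_similarity(template_pts, image_pts, iters=200, tol=2.0, seed=0):
    """Return (a, b, inlier_indices) for the best model found.

    template_pts, image_pts : matched keypoints as complex numbers
    tol                     : inlier threshold in pixels (assumed value)
    """
    rng = random.Random(seed)
    best = (None, None, [])
    indices = range(len(template_pts))
    for _ in range(iters):
        i, j = rng.sample(indices, 2)          # minimal sample: two matches
        if template_pts[i] == template_pts[j]:
            continue                           # degenerate sample, skip it
        a, b = fit_similarity(template_pts[i], template_pts[j],
                              image_pts[i], image_pts[j])
        inliers = [k for k in indices
                   if abs(a * template_pts[k] + b - image_pts[k]) < tol]
        if len(inliers) > len(best[2]):        # keep the largest consensus
            best = (a, b, inliers)
    return best


# Synthetic demo: 8 correct matches followed by 3 bad ones.
template = [complex(x, y) for x, y in
            [(0, 0), (10, 0), (0, 10), (10, 10), (5, 5), (2, 7), (8, 3), (4, 1)]]
true_a, true_b = complex(0, 2), complex(100, 50)   # 2x scale, 90 degree turn
image = [true_a * p + true_b for p in template]
template += [complex(1, 1), complex(9, 9), complex(3, 6)]
image += [complex(300, 20), complex(-40, 75), complex(12, 200)]   # outliers

a, b, inliers = ransac_similarity(template, image)
print(abs(a), inliers)
```

The wrong matches never gather a large consensus, so they are dropped before any pose is estimated. The size of the inlier set is also a cheap candidate for a detection confidence.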

- CMT

Consensus-based Matching and Tracking of Keypoints (CMT) is a new algorithm from a conference this year. It works by tracking image features (keypoints) between frames and comparing keypoints based on their descriptors. For CMT I altered the landing target to have a checkerboard in the center, which gives a higher number of keypoints to detect at lower heights.

Examples of CMT working at 2 meters and 15 meters above the ground, from the copter's downward-facing camera. The blue squares are the detected markers and the dots are the matched keypoints.

Output from CMT outdoors with hexacopter 2 meters above the ground

Output from CMT outdoors with hexacopter 15 meters above the ground
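One ingredient of the "consensus" idea, as described in the CMT paper, is estimating the scale change of the target from the median ratio of pairwise keypoint distances, so that a few bad matches do little harm. The code below is a simplified standard-library take on that single step, not the CMT implementation. The function name and sample points are hypothetical.

```python
from itertools import combinations
from math import dist
from statistics import median


def estimate_scale(template_pts, frame_pts):
    """Median ratio of pairwise distances between matched keypoints.

    template_pts, frame_pts : lists of (x, y) tuples, matched by index
    """
    ratios = []
    for i, j in combinations(range(len(template_pts)), 2):
        d_template = dist(template_pts[i], template_pts[j])
        if d_template > 0:
            ratios.append(dist(frame_pts[i], frame_pts[j]) / d_template)
    if not ratios:
        raise ValueError("need at least two distinct matched keypoints")
    return median(ratios)


# Four keypoints seen at half size, plus one badly matched keypoint.
template = [(0, 0), (4, 0), (0, 3), (4, 3), (2, 1)]
frame = [(10, 10), (12, 10), (10, 11.5), (12, 11.5), (40, 40)]
print(estimate_scale(template, frame))
```

Because the median ignores the handful of ratios that involve the bad match, the estimate stays at the true half-size scale. The same trick, applied to pairwise angles, would give a rotation estimate.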

Giovanni Durso
