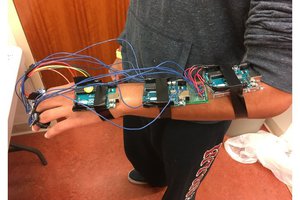

Last year I bought an EEG headset (the Mindwave Mobile) to play with my Raspberry Pi and then ended up putting it down for a while. Luckily, this semester I started doing some more machine learning and decided to pick it back up. I thought it might be possible to have it recognize when you dislike a song and then skip that song on Pandora for you. This would be great for when you are working on something or moving around away from your computer.

So using the EEG headset, a Raspberry Pi, and a Bluetooth module, I set to work on recording some data. I recorded while listening to a couple of songs I liked and then a couple of songs I didn't like, labeling the data accordingly. The Mindwave gives you the delta, theta, low alpha, high alpha, low beta, high beta, low gamma, and mid gamma brainwaves. It also approximates your attention level and meditation level using the FFT (Fast Fourier Transform) and gives you a skin contact signal level (with 0 being the best and 200 being the worst).

Since I know very little about brainwaves, I can't make an educated decision on what changes to look at to detect this; that's where machine learning comes in. I can use Bayesian Estimation to construct two multivariate Gaussian models, one that represents good music and one that represents bad music.

----TECHNICAL DETAILS BELOW----

We construct the model using the parameters below (where μ is the mean vector of the data and Σ is the covariance matrix of the data):
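For reference, the textbook multivariate Gaussian density and the usual sample estimates of its parameters are written out here. This is the standard form, stated as an assumption about what the model looks like rather than a copy of the original formula; the symbols d (number of features), N_i (number of training samples for class ω_i), and x_k (the k-th training sample) are notation introduced for this sketch.

```latex
p(\mathbf{x} \mid \omega_i) =
  \frac{1}{(2\pi)^{d/2}\,|\Sigma_i|^{1/2}}
  \exp\!\left( -\tfrac{1}{2} (\mathbf{x} - \mu_i)^{\top} \Sigma_i^{-1} (\mathbf{x} - \mu_i) \right)

\hat{\mu}_i = \frac{1}{N_i} \sum_{k=1}^{N_i} \mathbf{x}_k,
\qquad
\hat{\Sigma}_i = \frac{1}{N_i} \sum_{k=1}^{N_i}
  (\mathbf{x}_k - \hat{\mu}_i)(\mathbf{x}_k - \hat{\mu}_i)^{\top}
```

One such pair of parameters is estimated from the good-music samples and another from the bad-music samples.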

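Now that we have the model above for both good music and bad music, we can use a decision boundary to detect what kind of music you are listening to at each data point. Each sample is scored against both models, weighted by how likely each class is to begin with, and labeled with whichever class scores higher; the boundary is the set of points where the two scores are equal.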
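Written out, one standard form of this rule uses a log discriminant function for each class. This is the textbook quadratic discriminant, given as an assumption about the rule rather than a copy of the original formulas; P(ω_i) is the prior probability of class ω_i, a symbol introduced for this sketch.

```latex
g_i(\mathbf{x}) =
  -\tfrac{1}{2} (\mathbf{x} - \mu_i)^{\top} \Sigma_i^{-1} (\mathbf{x} - \mu_i)
  - \tfrac{1}{2} \ln |\Sigma_i|
  + \ln P(\omega_i)

\text{decide ``bad'' if } g_{\text{bad}}(\mathbf{x}) > g_{\text{good}}(\mathbf{x}),
\quad \text{otherwise ``good''}
```

The decision boundary is the set of points where g_good(x) = g_bad(x). Because each g_i is quadratic in x, so is the boundary.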

The boundary will be some sort of quadratic surface (a hyperellipsoid, a hyperparaboloid, etc.), though ours is a 10-dimensional function, so it can't be drawn directly.
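The fit-and-classify steps described in this section can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not the code from the repository: the function names, the equal priors, and the synthetic 3-feature data are all assumptions made for the example (the real feature vectors would have 10 entries).

```python
import numpy as np

def fit_gaussian(samples):
    """Estimate (mean vector, covariance matrix) from rows of `samples`."""
    samples = np.asarray(samples, dtype=float)
    mu = samples.mean(axis=0)
    # np.cov divides by N - 1 by default; for large N this is close to
    # the 1/N maximum-likelihood estimate.
    sigma = np.cov(samples, rowvar=False)
    return mu, sigma

def log_discriminant(x, mu, sigma, prior=0.5):
    """Log of prior times Gaussian density, minus the shared constant term."""
    diff = np.asarray(x, dtype=float) - mu
    _, logdet = np.linalg.slogdet(sigma)
    mahalanobis_sq = diff @ np.linalg.solve(sigma, diff)
    return -0.5 * mahalanobis_sq - 0.5 * logdet + np.log(prior)

def classify(x, good_model, bad_model):
    """Label one sample by comparing the two class discriminants."""
    g_good = log_discriminant(x, *good_model)
    g_bad = log_discriminant(x, *bad_model)
    return "good" if g_good >= g_bad else "bad"

# Synthetic stand-in data (assumption): two well-separated clusters.
rng = np.random.default_rng(0)
good_samples = rng.normal(loc=0.0, scale=1.0, size=(200, 3))
bad_samples = rng.normal(loc=3.0, scale=1.0, size=(200, 3))

good_model = fit_gaussian(good_samples)
bad_model = fit_gaussian(bad_samples)

print(classify(np.zeros(3), good_model, bad_model))
print(classify(np.full(3, 3.0), good_model, bad_model))
```

Real EEG features overlap far more than these synthetic clusters, so per-sample accuracy on real data will be much lower than this toy example suggests.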

----END TECHNICAL DETAILS----

The result is an algorithm that is accurate about 70% of the time on any single data point, which isn't reliable enough on its own. However, the data arrives as a time series, so we can use that: instead of acting on one estimate, we wait until we get 4 bad music estimations in a row, and only then skip the song.
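The four-in-a-row rule can be sketched as a small counter. This is an illustrative version, not the code from the repository; the class name `SkipDetector` and the "good"/"bad" string labels are assumptions made for the example.

```python
class SkipDetector:
    """Signal a skip after `threshold` consecutive "bad" estimates."""

    def __init__(self, threshold=4):
        self.threshold = threshold
        self.streak = 0

    def update(self, label):
        """Feed one per-sample estimate; return True when a skip is due."""
        if label == "bad":
            self.streak += 1
        else:
            # Any "good" estimate breaks the run.
            self.streak = 0
        if self.streak >= self.threshold:
            # Start counting afresh for the next song.
            self.streak = 0
            return True
        return False


detector = SkipDetector(threshold=4)
stream = ["bad", "bad", "good", "bad", "bad", "bad", "bad", "bad"]
decisions = [detector.update(label) for label in stream]
print(decisions)
```

As a rough guide to why this helps: if the per-sample errors were independent, a liked song would produce four wrong "bad" estimates in a row with probability 0.3^4, or about 0.8%. Consecutive EEG samples are probably correlated, so the real false-skip rate is likely higher than that.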

I've created a short video (don't worry, I skip around so you don't have to watch me listen to music forever) as a proof of concept. The end result is a way to control what song is playing with only your brainwaves.

This is an extremely experimental system. It only works because there are just two classes to choose between, and even then the accuracy is not close to good. I just thought it was cool. I'm curious to see whether a model trained on my brainwaves will work for other people as well, but I haven't tested it yet. There is a lot still to refine, but it's cool to have a proof of concept. You can't buy one of these off the shelf and expect it to change your life. It's uncomfortable and not as accurate as an expensive EEG, but it is fun to play with. Now I need to attach one to Google Glass.

NOTE: This was done as a toy example, for fun. You probably aren't going to see EEG-controlled headphones in the next couple of years. Eventually maybe, but not due to work like this.

How to get it working

HERE IS THE SOURCE CODE

I use pianobar to stream Pandora, along with a modified version of the control-pianobar.sh control script, which I have put in the GitHub repository below.

I have put the code on GitHub here, but first you need to make sure you have python >= 3.0, bluez, pybluez, and pianobar installed to use it. You will also need to change the home directory information, copy the control-pianobar.sh script to /usr/bin, and change the MAC address (mindwaveMobileAddress) in mindwavemobile/MindwaveMobileRawReader.py to the MAC address of your Mindwave Mobile device (I got that Python code from here). Then run sudo python setup.py install.

I start pianobar with control-pianobar.sh p, then I start the EEG program with python control_music.py. It will tell you in real time whether it thinks the current song is good or bad, and it will skip the song if it detects 4 bad signals in a row. It will also print a low signal warning if the headset is not making good enough contact.

Thanks to Dr. Aaron...

Steven Hickson

Charlie Smith

Lars Knudsen

Awesome! Perfect!

Could it be applied to detect which brain area is more active now?