Neural networks can be used to enhance and restore low-resolution images. In this post I explore deep-learning image super-resolution in the context of virtual reality.

Because I'm waiting to snipe a good deal on a GPU (which runs CNNs roughly 20x faster than a CPU), a friend has blessed me with access to a remote Arch Linux box: an i5-4670K @ 4GHz with a GTX 770 and CUDA installed. Thanks, gb.

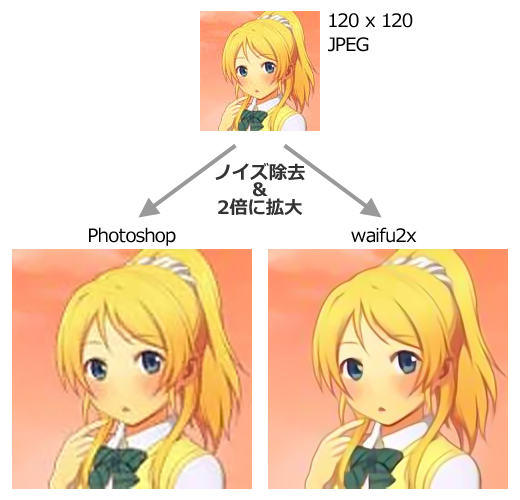

In these experiments, I use deep learning to take low-resolution images as input and produce high-resolution output. The code I'm using is waifu2x, a convolutional neural network trained on anime-style art.

Installing it was relatively straightforward from an Arch system:

```shell
# Update the system
sudo pacman -Syu

# Create a directory for all project files
mkdir dream && cd dream

# Install the neural network framework, compiled with CUDA
git clone https://github.com/BVLC/caffe.git
yaourt -S caffe-git

# Get waifu2x and follow the instructions in its README to set it up
# (waifu2x runs on Torch7, through the `th` command)
git clone https://github.com/nagadomi/waifu2x.git
```

Arch Linux is not a noob-friendly distro, but I'll provide better instructions when I test on my own box. When you're ready to test, the command to scale the image looks like this:

```shell
# Noise reduction and 2x upscaling
th waifu2x.lua -m noise_scale -noise_level 2 -i file.png -o out.png

# Backup command in case of error
th waifu2x.lua -m noise -scale 2 -i file.png -o out.png
```

Original resolution of 256x172 to a final resolution of 4096x2752 (16x resolution).
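Each `noise_scale` run doubles width and height, so a 16x result means feeding each output back in for four passes (256x172 to 512x344, 1024x688, 2048x1376 and finally 4096x2752). Below is a minimal sketch of that loop. It assumes every pass uses the same `noise_scale` command as above and that it runs inside the waifu2x checkout. The function name `upscale_passes` and the `_xN.png` file naming are hypothetical, not part of waifu2x.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of waifu2x): chain 2x waifu2x passes.
# Assumes it runs inside the waifu2x checkout so `th waifu2x.lua` works.
set -euo pipefail

# upscale_passes INPUT.png PASSES
# Runs PASSES rounds of noise reduction + 2x upscaling, feeding each
# output into the next round, then prints the final file name.
upscale_passes() {
  local input=$1 passes=$2
  local current=$input out i
  for ((i = 1; i <= passes; i++)); do
    # Name each result by its total scale: file_x2.png, file_x4.png, ...
    out="${input%.png}_x$((2 ** i)).png"
    # Send waifu2x's own output to stderr so stdout carries only the file name.
    th waifu2x.lua -m noise_scale -noise_level 2 -i "$current" -o "$out" >&2
    current=$out
  done
  printf '%s\n' "$current"
}
```

For example, `upscale_passes file.png 4` leaves the 16x image in `file_x16.png`, keeping every intermediate size so each step can be compared.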

The convolutional network up-scaling the original 256x144 to 2048x1152.

Thumbnails from the BigDog Beach'n video, magnified 8x.

Intel Core i5-4670K CPU @ 4GHz, GeForce GTX 770 (794 frames, 26 seconds long):

| Step | Time per frame | Total time |
|---|---|---|
| 256x144 -> 512x288 | 0.755 s | 10 min |
| 512x288 -> 1024x576 | 1.668 s | 22 min |
| 1024x576 -> 2048x1152 | 6.15 s | 80 min |
| 2048x1152 -> 4096x2304 | 8 s | 94 min |
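The post doesn't say how the 794 frames were pulled out of the video and put back together. One common way, assumed here, is ffmpeg: split the clip into numbered PNGs, run the same `noise_scale` command on each frame, and re-encode at the source frame rate (794 frames over 26 seconds is roughly 30 fps). `upscale_video` is a hypothetical helper, and the libx264/yuv420p encoding settings are an assumption, not settings from the original experiment.

```shell
#!/usr/bin/env bash
# Sketch: upscale a video 2x by running waifu2x on every frame.
# Assumptions: ffmpeg is installed and this runs inside the waifu2x checkout.
set -euo pipefail

# upscale_video INPUT_VIDEO FPS OUTPUT_VIDEO  (hypothetical helper)
upscale_video() {
  local video=$1 fps=$2 output=$3
  local work frame
  work=$(mktemp -d)
  mkdir -p "$work/in" "$work/out"

  # 1. Split the video into numbered PNG frames: 00001.png, 00002.png, ...
  ffmpeg -loglevel error -i "$video" "$work/in/%05d.png"

  # 2. Noise reduction + 2x upscaling, one frame at a time.
  for frame in "$work"/in/*.png; do
    th waifu2x.lua -m noise_scale -noise_level 2 \
      -i "$frame" -o "$work/out/$(basename "$frame")"
  done

  # 3. Re-encode the upscaled frames at the original frame rate.
  ffmpeg -loglevel error -framerate "$fps" -i "$work/out/%05d.png" \
    -c:v libx264 -pix_fmt yuv420p "$output"

  rm -rf "$work"
}
```

Running it again on its own output gives the next step in the table; at the per-frame times above, the last two steps each take well over an hour.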

Here's the 4K (16x resolution) video; links to the videos at the other resolutions are on the Vimeo page.

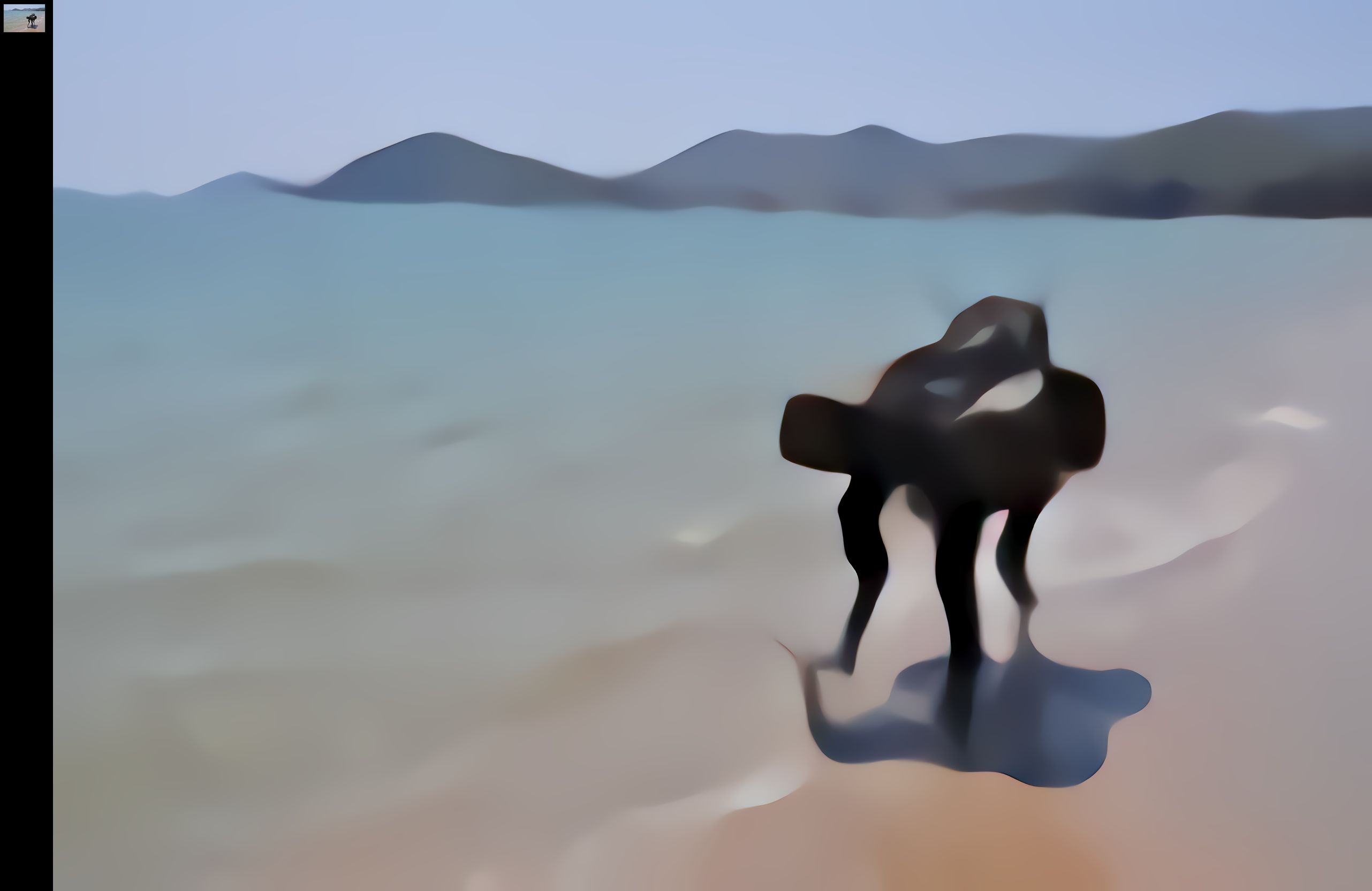

For the next experiment, I tested with a tiny 77x52 image, and the results were very interesting.

- 154x104 492ms

- 308x208 594ms

- 616x416 1.12s

- 1232x832 3.16s

- 2464x1664 10.56s
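Each entry in the list above is one more 2x pass, so N passes multiply each side by 2^N and the pixel count by 4^N. Five passes take 77x52 to 2464x1664: 32x per side, or 1,024 times as many pixels. A tiny shell function (the name `pass_sizes` is hypothetical) that prints the size after each pass:

```shell
#!/usr/bin/env bash
# Print the resolution after each 2x pass (pure arithmetic, no waifu2x needed).
set -euo pipefail

# pass_sizes WIDTH HEIGHT PASSES
pass_sizes() {
  local w=$1 h=$2 passes=$3 i
  for ((i = 1; i <= passes; i++)); do
    # Each waifu2x noise_scale pass doubles both dimensions.
    w=$((w * 2))
    h=$((h * 2))
    printf '%dx%d\n' "$w" "$h"
  done
}
```

`pass_sizes 77 52 5` prints the five sizes in the list, from 154x104 up to 2464x1664.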

Because the resolution is higher than most monitors can display, I am creating a VR world so that the difference can be better understood. It will be online soon and accessible through JanusVR, a 3D browser; more info in the next post.

The image was enhanced 32x. The result tells me that waifu2x can paint the base, while Deep Dream can fill in the details. ;)

The neural network that's been trained on anime gives the photo a very smooth, painted look. It's quite beautiful and reminds me of something out of A Scanner Darkly or Waking Life. The neural network restores quality to the photo!

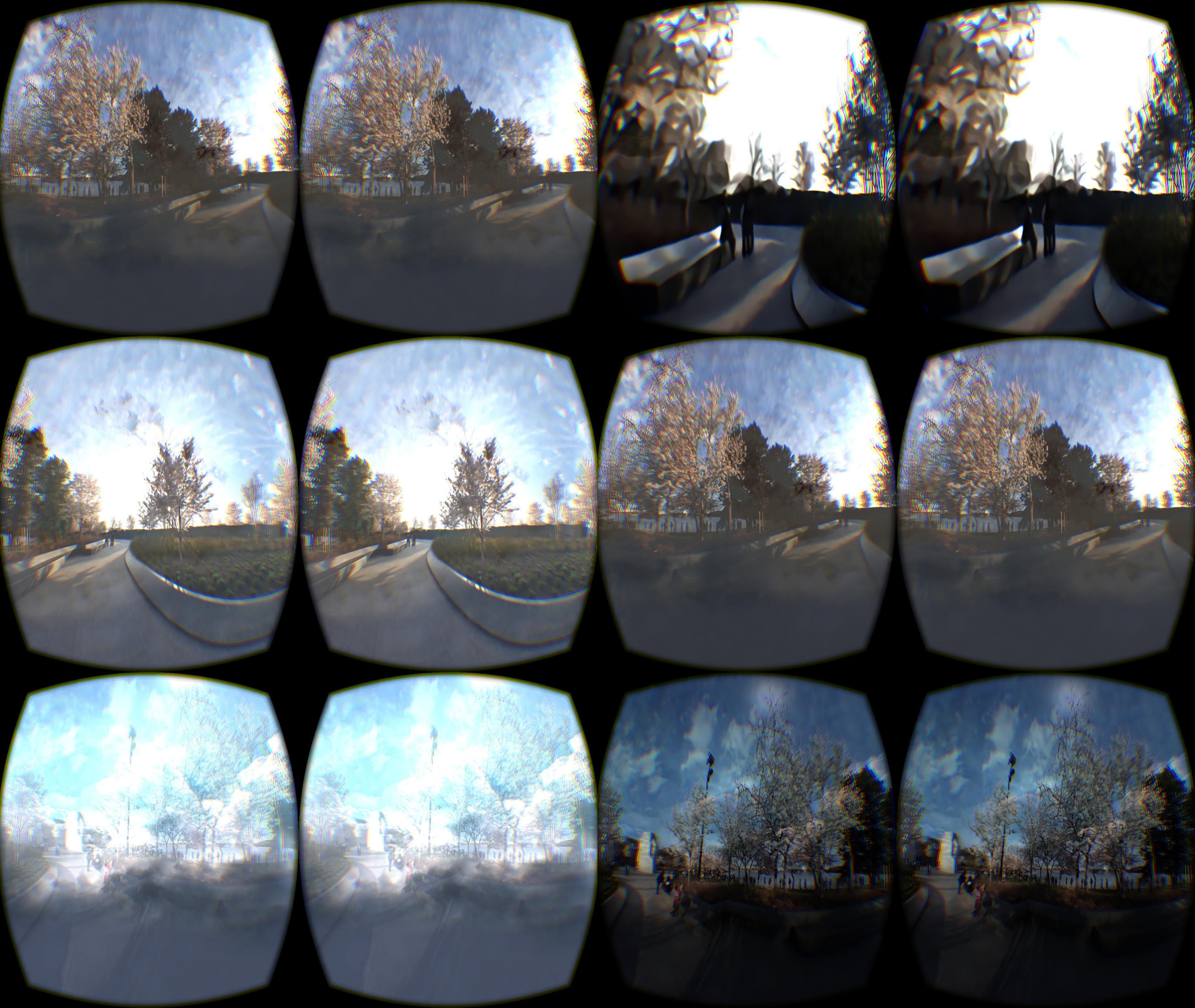

I took this method into VR, quickly mocking up a room that anybody can visit by downloading the JanusVR client. After logging in, press Tab to open the address bar, paste the URL below, and enter through the portal by clicking it.

https://gitlab.com/alusion/paprika/raw/master/dcdream.html

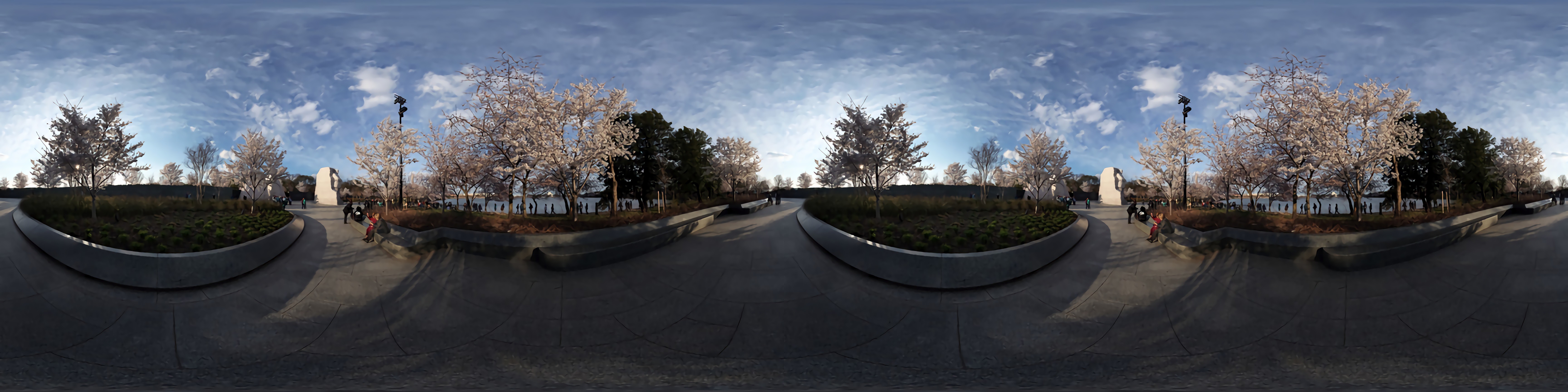

The original photograph is by Jon Brack, a photographer I've met in DC who shoots awesome 360 panoramas. For this example I used a 360 image of the MLK Memorial in Washington, DC. First, I resized the 360 image to 20% of its original size (864x432) and fed it into waifu2x, up-scaling it to 3456x1728 (4x per side). Then I converted the image to side-by-side stereo format so that it can be viewed in 3D on an HMD.
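The post doesn't name the tools used for resizing and stereo formatting. Here is a minimal sketch of the same pipeline, assuming ImageMagick's `convert` for both steps, the `noise_scale` command from above for the two 2x passes (864x432 to 1728x864 to 3456x1728), and a monoscopic panorama, so each eye simply gets the same image. True stereo would need separate left and right views. `panorama_sbs` and the file names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of the 360 panorama pipeline. Assumes ImageMagick (`convert`)
# is installed and the script runs inside the waifu2x checkout.
set -euo pipefail

# panorama_sbs INPUT OUTPUT  (hypothetical helper)
panorama_sbs() {
  local input=$1 output=$2 work
  work=$(mktemp -d)

  # 1. Shrink the equirectangular image to 20% (e.g. 4320x2160 -> 864x432).
  convert "$input" -resize 20% "$work/small.png"

  # 2. Two 2x waifu2x passes: 864x432 -> 1728x864 -> 3456x1728.
  th waifu2x.lua -m noise_scale -noise_level 2 -i "$work/small.png" -o "$work/x2.png"
  th waifu2x.lua -m noise_scale -noise_level 2 -i "$work/x2.png" -o "$work/x4.png"

  # 3. Full-width side-by-side stereo: the same image for left and right eye.
  #    +append joins images horizontally (here: 6912x1728).
  convert "$work/x4.png" "$work/x4.png" +append "$output"

  rm -rf "$work"
}
```

Some viewers expect half-width side-by-side instead, where each eye's image is squeezed to half its width; that only changes the last step.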

Enhance.

The convolutional neural networks create a beautifully rendered ephemeral world, up-scaling the image exponentially.

It was like remembering a dream.

to be continued

alusion
