One foundational challenge for a lot of hobby robotics is navigation and localisation. We can access cheap sensors in the form of IR and sonar, which give distances to solid objects. We can use IMU's and wheel encoders to estimate distance and direction travelled.

It can be difficult to combine all this information to build a map of our environment. Especially if we want to use the map to correct for small errors like wheel slip, which add up over time. There is an excellent book that covers this: "Probabilistic Robotics". The problem is that it's written for engineers, so the notation and examples can be hard for beginners to approach. An alternative to doing it yourself, is to use a robotics package such as ROS. While ROS is amazing for having the entire kitchen sink ready to use, it has a lot of overheads for low power hardware, and adds a lot of complexity to a project even for implementing simple tasks.

Hopefully, I can break down the complexities of programming an intelligent robot over a these project logs. I will be implementing my autonomous rover software using Python and the Arduino IDE. While I am using an Orange Pi Mini 2 (which is a cheap, nasty, powerful clone of the raspberry pi board), I will also give performance figures for an original Raspberry Pi to show that it is possible to get something running relatively well even on low powered hardware and in Python.

Grid Maps

First off, let's talk about making maps. The bread-and-butter of simple and useful robot navigation is the grid-map. This is used in many robot vacuum cleaners, and it's what I'm going to use for navigation first. Basically, the idea is that we have a huge 2D grid of values indicating whether the ground at that part of the map is blocked or not. This grid can be set up at different resolutions, depending on the memory and processing power available to us.

Another consideration is how precise our sensors are - it won't improve anything to measure at higher resolutions than our sensors can sense. I'm using sonar sensors for my project, and I have decided to use 2cm squares as my grid cells. This means I need to store about 1 million values for a 20 meter square (i.e. 40sqm) map, or around 4-8mb. This is pretty small, but we will probably want bigger and irregularly shaped maps later on.

To support large maps, I'm going to use a sparse matrix representation. Basically, I will store the grid in chunks of, say, 5 by 5 meters. New chunks will only be initialised as the robot moves into them. Later on if RAM becomes an issue, we can write far away map chunks to disk. This allows us to only initialise the memory we need. My implementation is pretty incomplete, but it supports the things that I need it to. Here is the code for my sparse grid map (please forgive the lack of comments): [GitHub]

Sensor Updates

Now we need to update the map using sensor readings. It turns out that with some funky math, the updates from sensor readings boil down to adding and subtracting from the grid map values (if you really want to know how, get the book I mentioned). There are two values we need to consider: the amount to decrement the value of a cell by if the sensor indicates it is empty, and the amount to increment a cell by if the sensor indicates it is occupied. After this, we can simply threshold the map at some value to find obstacles. There are equations for working out exactly what the values to add and subract are (based on sensor noise measurements), but I'm going to go with adding 3.0 when an obstacle is hit, and subtracting 0.3 where an obstacle is not hit. This will be good enough in practice.

Usually when this type of mapping is done, lasers are used. Lasers are very easy to program updates for, because you just update cells in a straight line. Scanning lasers are a little expensive though. For IR and sonar, it's necessary to update cells in an arc. My colleagues who work on this technology for commercial autonomous vehicles normally just treat an arc as multiple "laser rays". This can get pretty computationally expensive, and won't fit with my goals of running on a Raspberry Pi in Python. Instead I'm going to look to the computer graphics world for inspiration, and rasterize an approximate arc!

Bresenham Arc-filling

Small arcs are nearly triangles. So if I can divide my arc into enough triangles, I'll have only a small error. Using some simple math, the error turns out to be:

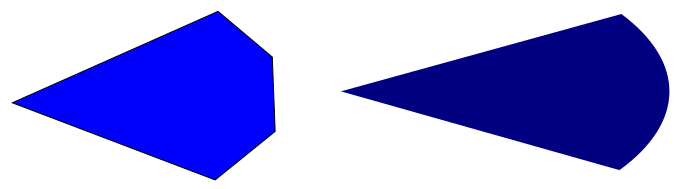

The maximum distance of my sensors is 5 meters, and the original arc angle is 15 degrees. To make my maximum error less than my 2cm cell size, I need to divide my arc into 3 triangles with an arc-angle of 5 degrees each. The figure below shows my approximation and final solution (very rough, not to scale!):

To implement arc-filling, I used Bresenham line-drawing techniques to generate polygon sides, and then raster filled the polygons. There is some room for improvement, but the results appear to work well enough. The code for the raster scanning is available Here [GitHub]. After implementing the raster scans, all I do is add the "non-hit" value to everything inside the area of the arc polygon for each sensor, and then draw a line at the arc edge of the polygon, adding "hit" values to it.

Performance

The idea of the grid-map algorithm is to update the map every time we get a reading from the sensors, and slowly over time it builds up an estimate of where obstacles and clear space are. This lets a little autonomous rover navigate around the piles of clothes on the floor. The following video shows a test-run for a simulated vehicle with 4 sensors at 90 degrees to each other - just to check that the basic idea works (the robot is the red triangle). The results look ok, so now I have to start working on getting my vehicle measuring its movement. After that, I can do some real-world testing!

This code updates a sensor in about 3.6ms on my laptop, and 286.5ms on my Raspberry Pi (at stock speed). The Pi is a bit slow, but for demonstrating how grid-maps work on hobby robots it's probably fast enough. Using pypy or turning the code into a Cython/C module would make it very quick. Maybe when my robot has a lot more going on I'll try this.

If anybody wants to try the code, you can get it here (run gridmap.py): [GitHub]

It's designed to be minimal and easy to fit into a bigger project. All you need for sonar is the robot's location and forward angle, and the sensor's offset from the robot's forward direction.

JosiahWalker

JosiahWalker

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.