The second component of Firefly's base algorithmic layer deals with avoiding collisions with obstacles. Most existing algorithms for mapping and obstacle avoidance rely on sophisticated sensors, like depth cameras or laser scanners. The problem with these sensors is that they're large, heavy, expensive, and draw a lot of power. Mapping with a single camera (monocular mapping) is a good alternative, but it's a much harder problem because you're trying to use fundamentally two-dimensional information to understand a three-dimensional environment. While a few algorithms exist for this task, they're either limited to simple, structured environments or are computationally intensive and difficult to run in real time. Because of these limitations, I had to develop my own for Firefly.
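To make the "two-dimensional information" problem concrete, here's a minimal sketch of why a single image can't tell you depth on its own. This is not Firefly's algorithm; it assumes an idealized pinhole camera, and the `project` function, the focal length, and the point coordinates are all made up for illustration.

```python
def project(point, focal_length=1.0):
    """Pinhole projection of a 3D point (x, y, z), given in camera
    coordinates with z pointing forward, onto the image plane."""
    x, y, z = point
    return (focal_length * x / z, focal_length * y / z)


# Two hypothetical obstacle points lying along the same viewing ray:
near_point = (0.5, 0.25, 1.0)  # 1 unit in front of the camera
far_point = (2.0, 1.0, 4.0)    # same direction, 4x farther away

# Both land on the same image coordinates, so one frame alone
# cannot distinguish a small nearby obstacle from a large distant one.
print(project(near_point))
print(project(far_point))
```

Any monocular approach has to recover the missing depth from something else, such as how the image changes as the camera moves.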

Approach

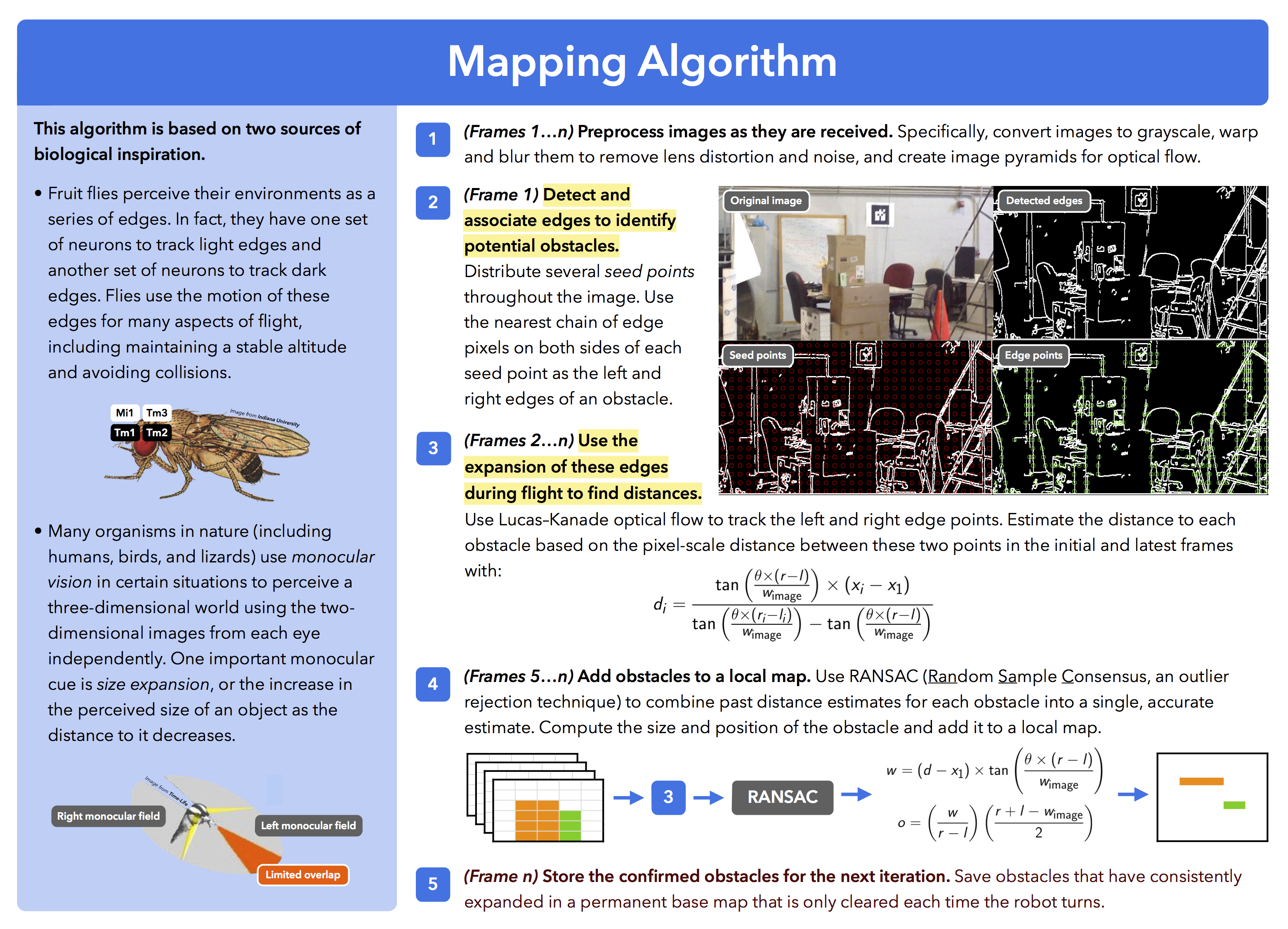

Here's a graphic illustrating how my monocular mapping algorithm works:

This algorithm proved able to avoid obstacles of different sizes, shapes, colors, and textures (more on this in a future log!). Until then, if you really want to see it in action, my TEDxTeen talk (linked in the sidebar) has a video showing some of my results.

Mihir Garimella
