-

Why Beaglebone?

05/24/2016 at 16:39

Jason Kridner was kind enough to top up our stock of Beaglebone Blacks for development at MakerFaire this weekend, and in exchange he asked me to talk a bit about why we chose the Beaglebone.

Driving an autonomous boat around the world is a hard problem. The ocean is a very harsh environment, and even basic navigation and propulsion present major challenges. We realized early on that we needed more processing grunt than we could get out of an Arduino for making high-level navigation decisions. In particular, obstacle avoidance and weather routing require substantial amounts of memory and processing resources that Arduinos largely lack. At the same time, every watt is precious on a boat powered by solar cells or the wind, so we needed to keep the power requirements low.

This led us to choose a federated architecture, with an Arduino handling the low-level real-time control and an embedded Linux computer handling the higher-level functions. The intent is for the Linux box to spend most of its time asleep and wake up only every five to ten minutes to scan the horizon, parse incoming weather data, make path-planning decisions, and then go back to sleep. The Arduino handles steering a course, propulsion management, and vehicle health monitoring. If the Linux box does not wake up on time, the Arduino reboots it. This sleep/wake cycle is fast enough for the open ocean. The fastest ships encountered there typically travel at 30 knots or less, which means they cover at most about 2.5 to 5 nautical miles (roughly 15,000 to 30,000 feet) between cycles... and they can be seen from more than ten miles away. The ocean is a big place, so things happen relatively slowly compared to other autonomous vehicle domains.

When we were making our initial hardware choices a couple of years back, there were really only three cheap embedded Linux boards we knew of: the Arduino Yun, the Raspberry Pi, and the Beaglebone Black (BBB). Although the Yun appears to combine the two things we wanted, we ruled it out immediately: it's dreadfully slow, and its onboard storage and RAM are tiny. That left the Raspberry Pi B versus the Beaglebone. At that time, getting a BBB was a crapshoot, so our Beaglebone candidate was the Beaglebone Whites (BBW) I had on hand from an earlier project.

The Raspberry Pi B had one major advantage: it had 512 MB of RAM versus the BBW's 256 MB. However, the BBW spanked it on just about every other metric, and we knew we would get the extra RAM as soon as we were able to score a BBB. We didn't know about the PRUs (the Beaglebone's programmable real-time units) yet, so it came down to two big advantages and one initially small one.

First, the Beaglebone Black is over 40% faster, at 1 GHz (versus 700 MHz for the RasPi B). This matters because it lets us wake the board for shorter periods to do the same work. Since the Beaglebone also draws slightly less power, less time awake translates directly into less energy per wake cycle, which is a big advantage for the Beaglebone.

Second, it has several times as much I/O as the RasPi, which meant we had room to add future vehicle health monitoring and more subsystems without changing platforms or doing much rework. This was important enough that when we built the brain box, we broke out every pin to an empty header so we could quickly and easily add more stuff. As you can see, we wasted no time at all exploiting this.

![Hackerboat Brain Surgery]()

Third, the Beaglebone is a substantially more open platform than the RasPi in practice. In particular, you can order key parts in small quantities rather than having to deal with massive minimum orders. This was a minor consideration at the time, but the announcement of the Beaglebone Blue reinforced it as a significant long-term advantage. Notably, the RasPi does not have any special-purpose variants, and I believe that is because it is, functionally, a much more closed hardware platform.

Our current brain box design mates the Beaglebone, an Arduino Mega, an Adafruit 9-axis IMU, and an Adafruit Ultimate GPS breakout. We stuffed it all in an off-the-shelf IP68 case. The connectors are M8 and M12 circular connectors, a standard IP68-rated connector system. Mouser, Automation Direct, and McMaster-Carr all carry the connectors and cable sets.

As soon as the Beaglebone Blue comes out, we're going to move over to it. Our current brain box includes a 9-axis IMU and a WiFi interface... both of which the Blue has out of the box. That's a really solid win in cost and complexity. Its Bluetooth Low Energy (BLE) support also gives us interesting options for networking remote sensors and actuators at minimal power cost.

-

Back from Makerfaire

05/24/2016 at 03:46

Makerfaire Bay Area was awesome and exhausting, as always. Alex and Heather drove the boat down and back. Here's the main boat, now painted, with our new sailboat sitting next to it.

![]()

-

Blogging about our design

04/16/2016 at 06:11

I just wrote a blog entry about our hardware and software design... the first in what's likely to be a long series. We're going to be painting the boat tomorrow so it looks a bit less hoopty.

-

Makerfaire Bay Area

04/01/2016 at 03:13

We're going to be at Makerfaire Bay Area as an exhibitor. Come on by and see the boat!

-

Software Work

03/14/2016 at 19:30

The last few months have been lots of core software work, which doesn't make for many pretty pictures to hang an update on. I think we'll be ready to get back in the water on the first weekend in April. Here's what we have accomplished so far:

- Preliminary control webpage and backing JavaScript. This is about as far as it can go until the underlying control code is running.

- Wrote the core REST interface, main control, and navigation code for the Beaglebone. Some of it even compiles. We're still working on the interprocess communication to bring it all together. We also added unit tests using the gtest (Google Test) framework. Wim has been doing an excellent job of getting this stuff to compile and adding unit tests... and he's been remarkably unsnarky about my C++ skills. I have become a significantly better C++ programmer during this process.

- Switched the core code to C++. I realized I was writing objects in C, so I decided to enlist the compiler's help.

- Wrote the Arduino code. This is currently in the debug phase; I'm having trouble getting aREST to play nicely with variables and functions.

- Fixed the GPS. It turns out the GPS logger shield we hacked in was dead; replacing it with the intended GPS breakout resulted in a happy GPS. Along the way, I had to learn a bit about how the new device-tree-based pinmux works. Once I got past the initial table-pounding phase, it wasn't too horrid.

So, it's all coming together, and we're currently in the debug-and-integrate phase of the project, which will likely last a while. Check out the GitHub repository for our latest progress. We've also got a pile of hardware minutiae to address, but that's lower on the priority list at the moment.

-

Software Refactoring Progress

12/07/2015 at 04:36

The big takeaway from our launch was that our software needs a major overhaul. Since we won't be getting back in the water for the next couple of months, this is what we're working on now.

Software Refactoring

I've taken the first cut at refactoring the Arduino code and defining a new REST API for the boat. The results can be seen in the doc/systems folder of the main repository.

One of the things I did was move all of the configuration and operational code into an Arduino library. This is largely to make it easier to write unit test code, since Arduino lacks any other reasonable way to compile multiple sketches from a single code base.

The way aREST handles variables and functions forced me to modify my original vision for the API a bit, but nothing too major. The state and REST tables reflect the changes, but the top-level system description does not yet. I had to modify aREST along the way, adding the ability to turn direct pin access on and off. I also modified the handle() method to return a boolean indicating whether it had found a valid message. Once I've tested these changes adequately, I'll submit a pull request.

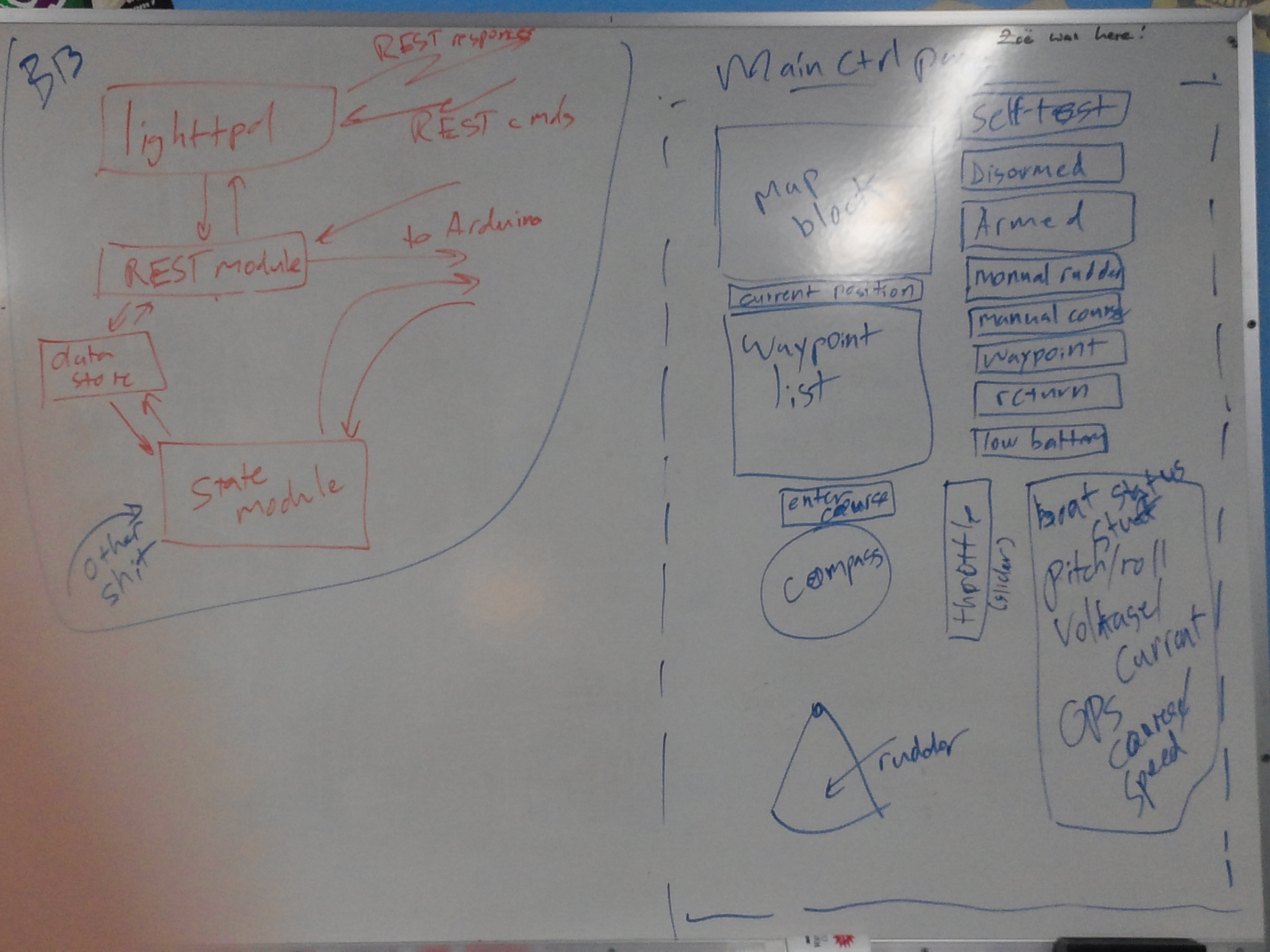

Last night, Jeremy, Wim, and I knocked around some ideas for software organization on the Beaglebone and some initial thoughts on what the UI is going to look like. Here's a shot of the whiteboard before we went home:

![]()

The map block will show the current location of the boat and the waypoints. Map data will come from Google Maps or a local store. The current position and waypoint list blocks should be self-explanatory. The buttons to the right of the map block correspond to the different boat states: the current state will be lit, and the operator presses one to request a change. Below the waypoint list is a block for entering the desired course of the boat, with a compass rose showing the current heading, the current target course, and a draggable pointer the user can use to set the course. The rudder block shows the current position of the rudder and, in the right boat state, allows the user to set it. The block to the right of that displays a bunch of boat health/state stuff. I listed some things that ought to be there, but that's not an exhaustive list.

The Beaglebone side is deceptively simple, but we sure talked about it a lot. Lighttpd should be self-explanatory: it's a popular lightweight web server for embedded applications. Having a running web server makes it straightforward to serve back log files, camera images, and the like without a bunch of new UI. It also gives us access to existing authentication and SSL tools. Lighttpd passes incoming requests to a REST handler, which can read from and write to the Arduino over a serial port. The REST handler can also read and write a data store that it shares with the state machine module. The state machine module maintains the Beaglebone's state and the waypoint list, and does the navigation.

Jeremy suggested something called microhttpd (GNU libmicrohttpd) instead, which is a library designed to be embedded in a program to provide HTTP server functionality. I wasn't hugely into it at the time, because I like the idea of being able to serve static stuff like log files directly. A separate web server also splits the functions into independently testable pieces. However, given the complexity required to make the shared data store work, squishing it all into a single program sounds better and better. We can still run a regular httpd for everything else.

There's also an "other shit" box feeding into the state machine; that's other navigation data, which I'll get to in a bit.

A couple of things came out of this discussion...

- We're going to need some help on the JavaScript front to do the UI in a way we can live with. Our thought so far is that it will use jQuery plus some JavaScript widget library yet to be identified.

- The data store block in the Beaglebone code diagram to the left probably wants to be a database if we maintain separate programs.

Obstacle Avoidance

There is good open-source digital map data... as long as we have a hard rule to remain a sufficient distance from shore, we should be fine. Some interesting local areas are pretty congested, with distances between adjacent islands as small as 50-60 meters. This will require some thought, and perhaps on-the-ground measurements of tight areas to assure ourselves that the data is in fact good. The other problem is GPS accuracy: GPS alone is not good enough to stay off the rocks in some areas.

A key element of any autonomous operation in inshore waters is some way to avoid other traffic. Bigger ships (ferries, container ships, fishing vessels, some yachts) are kind enough to transmit their identity, location, speed, and heading via AIS. Dedicated AIS receivers run from $200 on up, but a little digging shows that you can receive AIS with an RTL-SDR dongle, and those cost about $20. Conveniently, the ships we most want to stay far away from, like ferries (aka imperial entanglements), are also the most rigorous about having functional, accurate AIS gear onboard. Since AIS users report positions from their own local GPS receivers, systematic errors are likely to be roughly constant between different users in the same area. Rocks, kayakers, and most smaller pleasure craft do not have AIS, however.

My intuition is that the right way to sense obstacles is the way I do it: stereoscopic vision. Invitingly, OpenCV has functions that do exactly this, and WiFi cameras are inexpensive. We have a baseline of a meter or so in the form of the forward solar panel. If we assume a 60 degree field of view and a horizontal resolution of 640 pixels, one pixel of disparity corresponds to a theoretical maximum range of roughly 550 m, and two pixels to roughly 280 m, so a realistic ceiling is around 300 m... and somewhat less in practice due to noise, calibration error, etc.

Jeremy attended a talk on autonomous sailboats at UW a few months back and noted that they were trying a similar approach and having issues with it. In particular, they had trouble discriminating between actual obstacles and the water. I think we can avoid some of this by removing the water from the scene: determine where the horizon currently sits relative to the boat, and simply cut off everything below it. We already have pitch and roll sensors, so this ought to be straightforward. We'll see how it works in practice.

-

Hackerboat launch, 18 Oct 2015

11/23/2015 at 07:07

We got the boat in the water for a short test on Oct 18. You can read more about it on the Hackerbot blog.
