My lab is a disaster, and I'm shooting for a tech-fix instead of actually getting organized. What I'd like to have is a barcode scanner that reads DigiKey packages and allows me to catalog and track my parts inventory. I think this would also be a great addition to the AR workbench. So, I started prototyping a scanner and will document it here as I develop it.

DigiKey uses DataMatrix 2D barcodes on their packaging. I know they at least encode DigiKey's part number and the manufacturer's part number, plus some other information which I haven't been able to map out yet. Either one of the part numbers would be sufficient to identify and catalog the part. I can imagine scanning bags as they arrive, then tagging the records with a location where the parts are stored, plus a count of how many are available. When I use a part, I scan the bag again, then enter the number used. That's enough to track inventory.

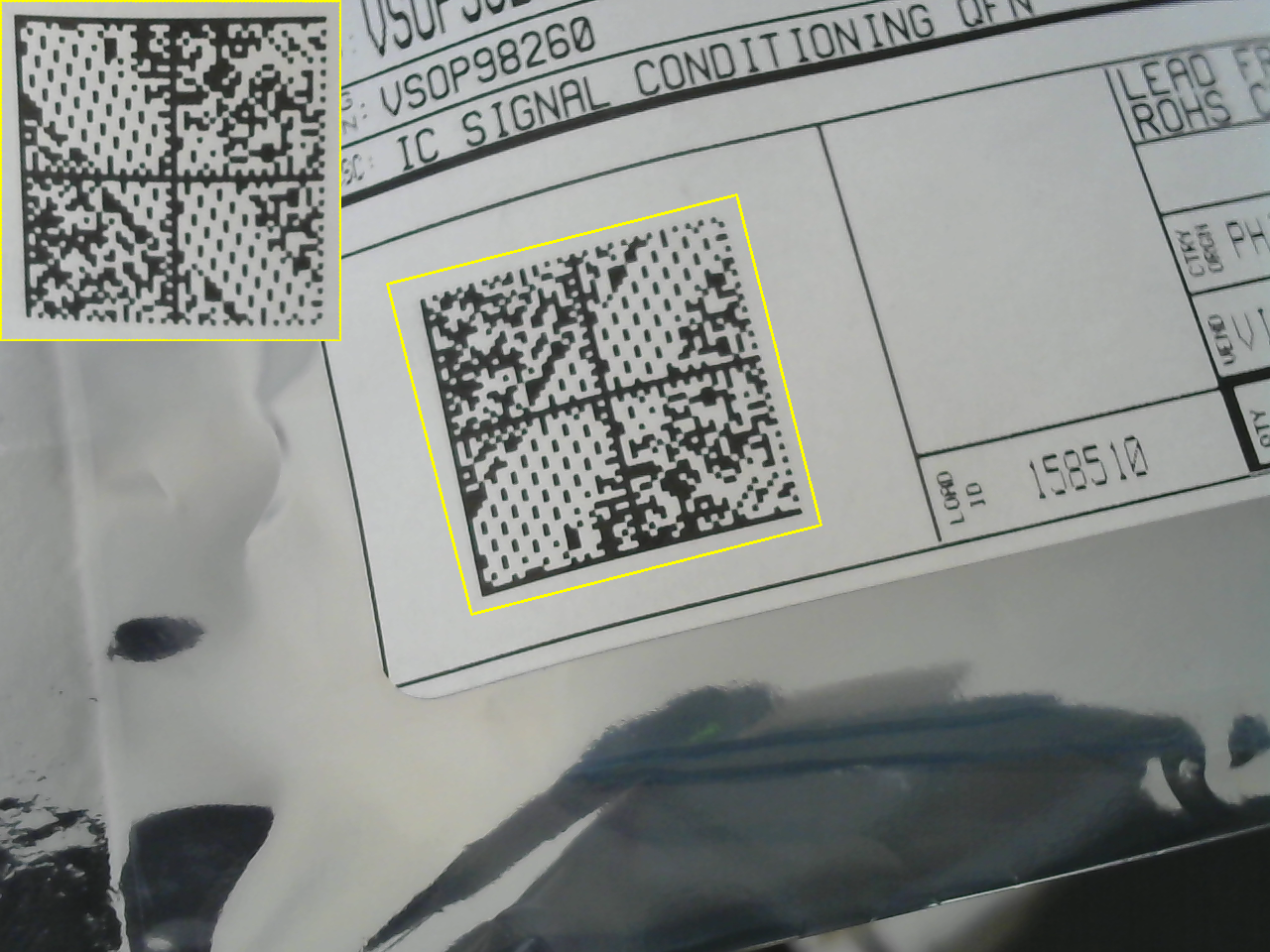

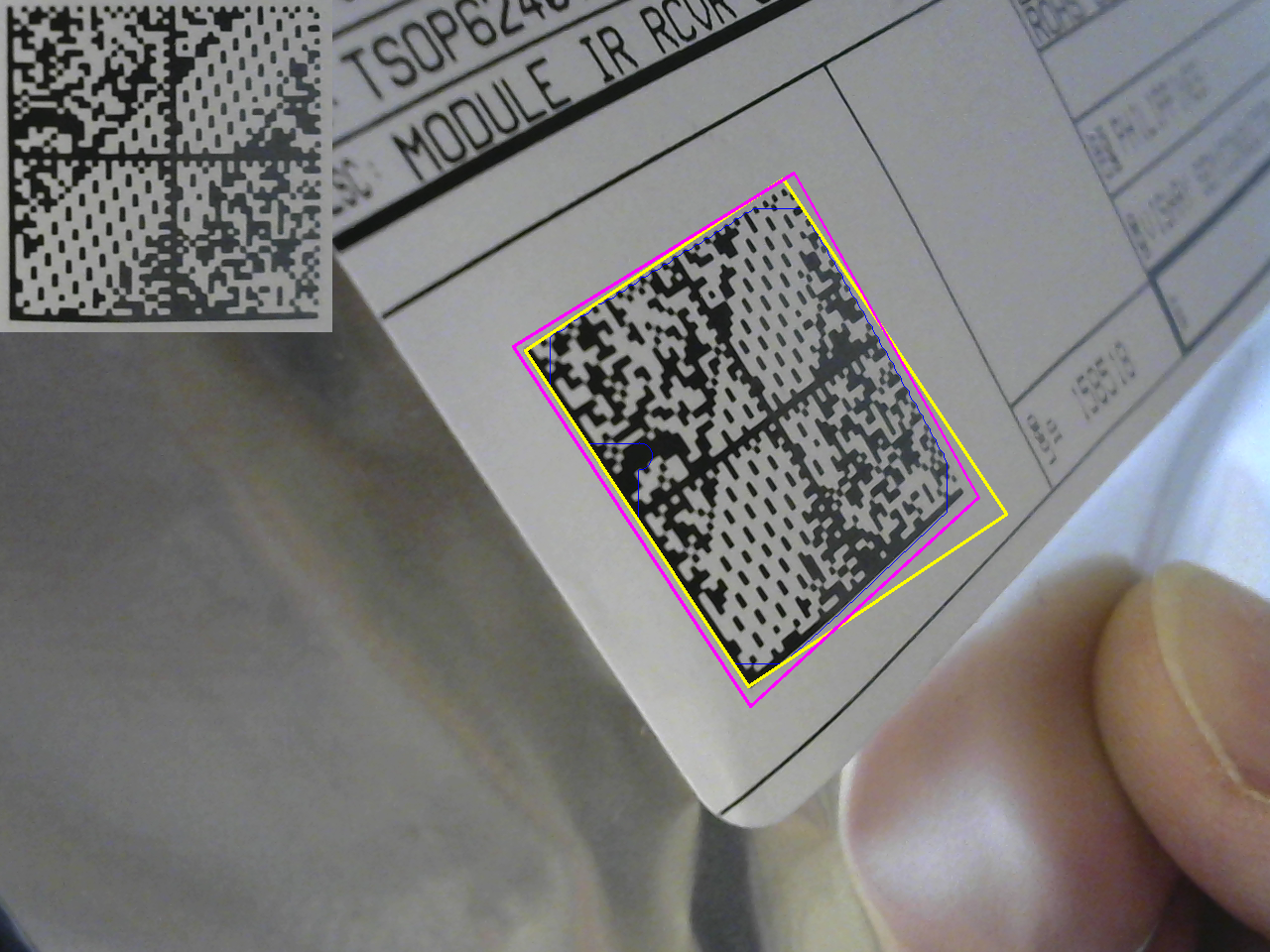

So far, I can automatically locate the barcode in an image and crop it out, undoing some (but not all yet) perspective distortion, as shown above. There are a handful of parameters which need fine-tuning, but the performance seems reasonable. If it beeped when a code was successfully recognized, it would probably be usable as is.

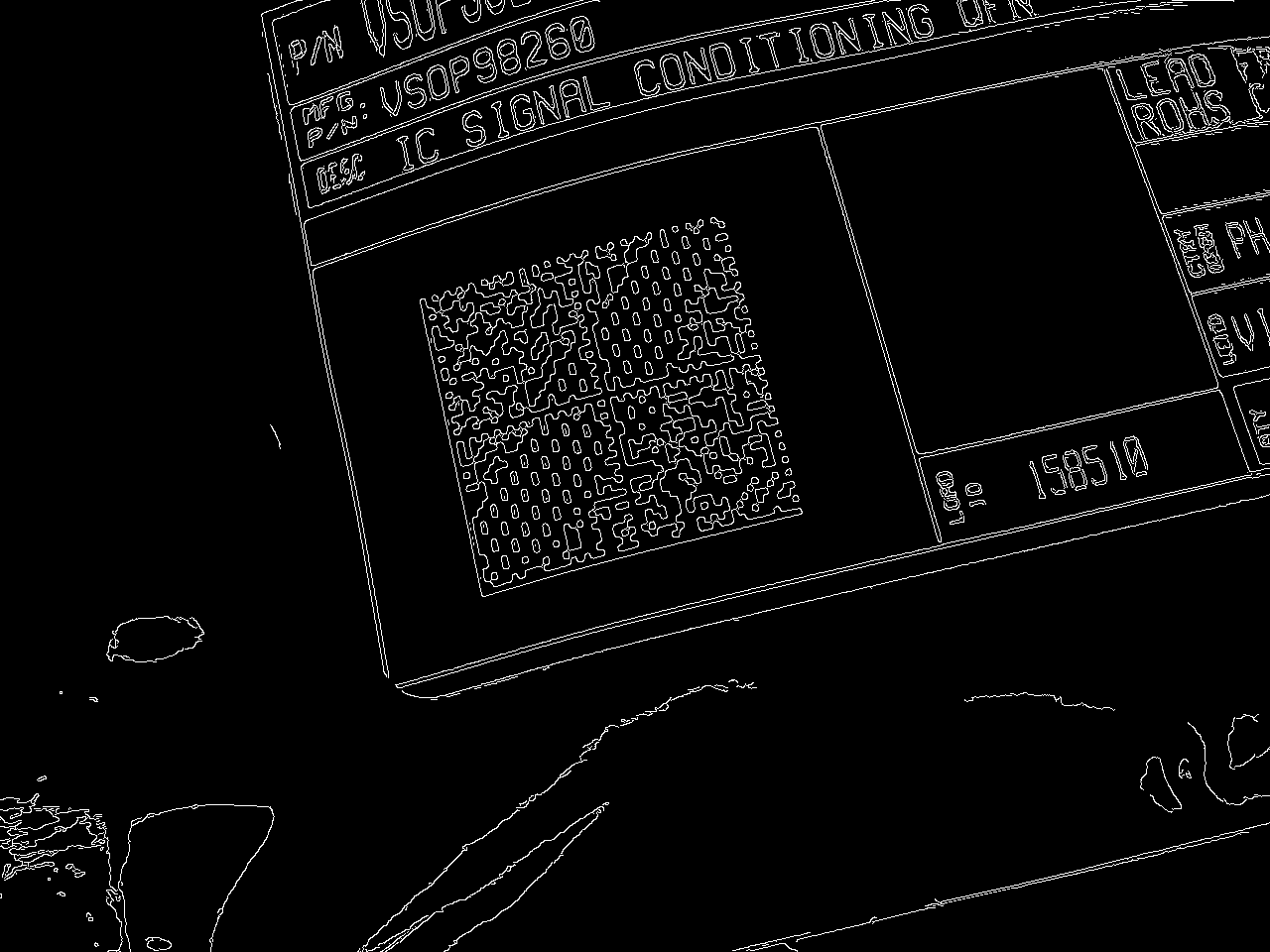

The algorithm is really simple. First, I convert the input image to grayscale and find edges:

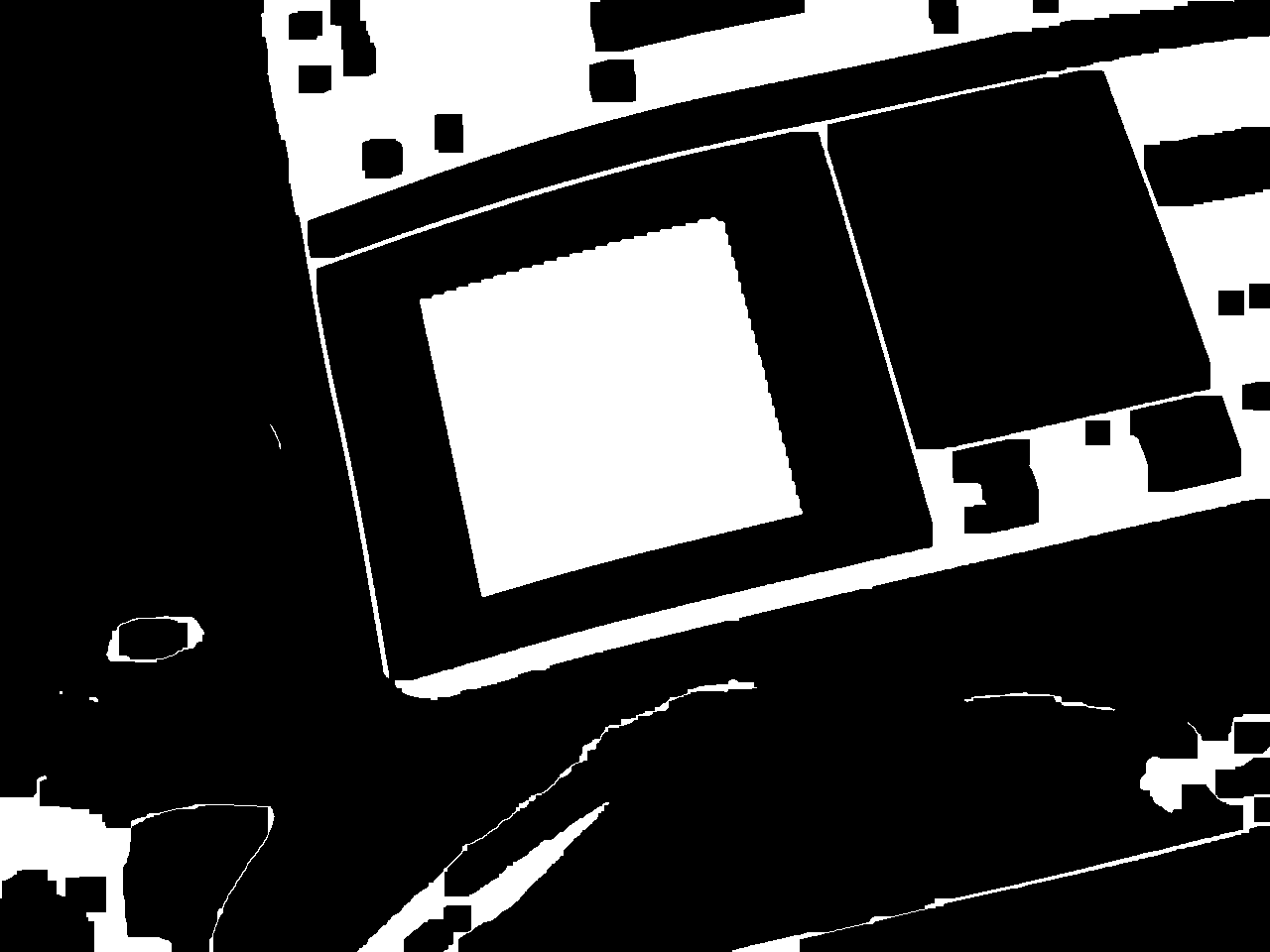

I found this approach is suggested in a blog post about finding 1D barcodes. The codes contain a high density of edges. The next step is to use morphological operators to detect the codes. I use a morphological closing (dilation followed by erosion) to connect the code into a solid mass:

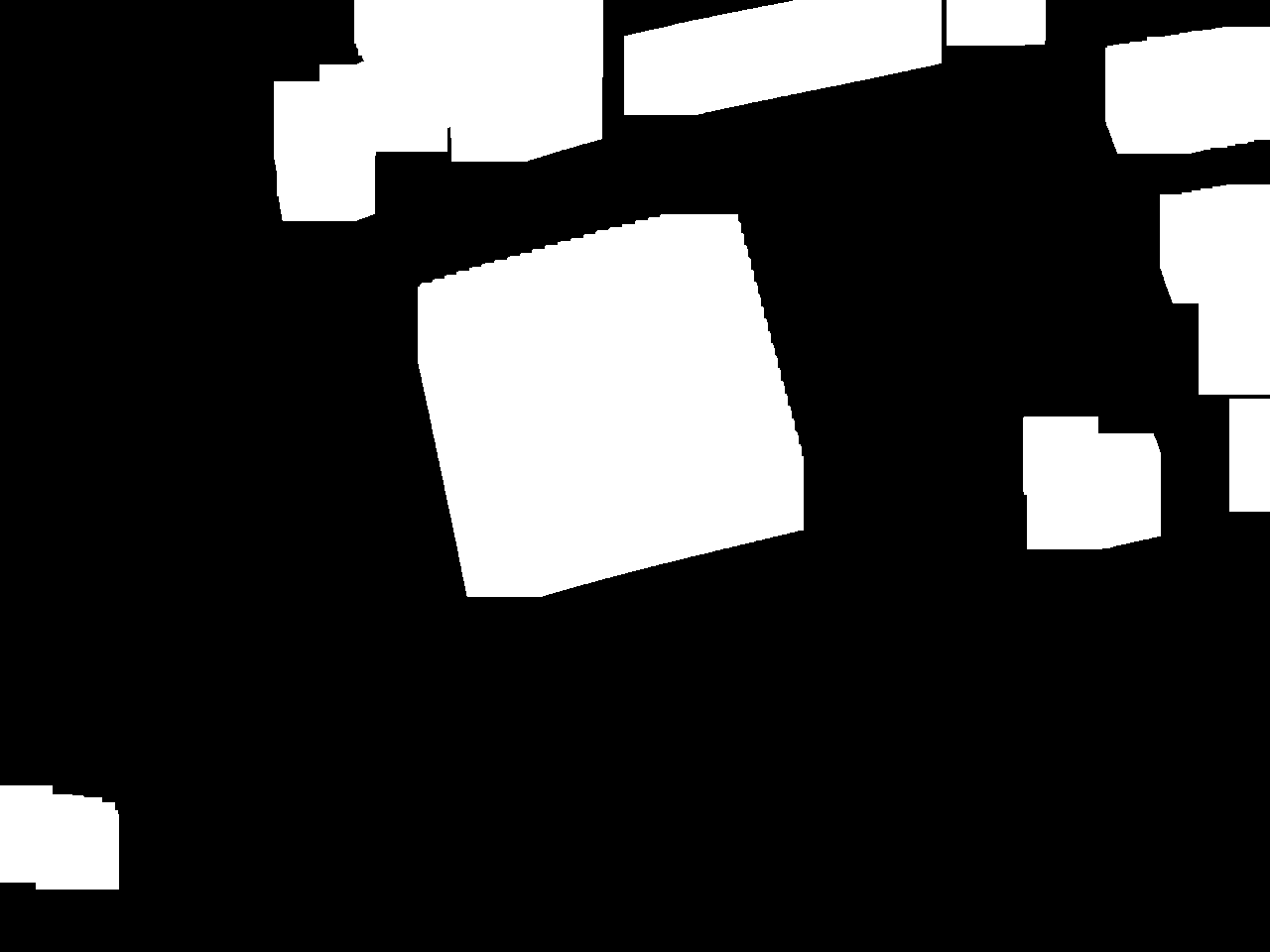

Then use a morphological opening (erosion followed by dilation) to remove small areas:

Finally, I apply a connected components analysis and use size-pass filtering to detect the barcode. I use the OpenCV function minAreaRect() to find the minimum area rectangle enclosing the region, then calculate a perspective transform to warp this rectangle into a canonical square. In general, the image of the bar code is not a rectangle, but simply a quadrilateral, so this approach does not remove all perspective distortion (you can see this in the processed image above). It's a start, though, and I can refine it as I go.

So far, there are a few issues. The extracted image can currently be in one of four orientations. I need to write some code to figure this out; I think the wide empty stripe in the code probably creates a preferred orientation if you do principal components analysis, for example. Or, maybe a line detector could find the outer edges easily.

I think a second round of processing on the extracted image chip to fix remaining distortions would also be helpful in recognition. Removing the lens distortion from the camera would also help a great deal.

I'll update this log as I go.

Update: libdmtx

I found the libdmtx library for decoding the barcodes. It compiled fine, and I got a first pass at decoding going combined with my OpenCV code. So far the end-to-end system is a little finicky, but I did get it to read and decode the barcodes at least sometimes. So far, I get:

[)>

06

PVSOP98260CT-ND

1PVSOP98260

K

1K54152345

10K61950195

11K1

4L

Q10

11ZPICK

12Z3504686

13Z158510

20Z000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000

"VSOP98260CT-ND" is the DigiKey part number.

"VSOP98260" is the manufacturer part number.

"54152345" is the sales order number.

"61950195" is the invoice number.

"Q10" indicates quantity 10.

"158150" is the "load ID" also printed on the label. Purpose unknown.

The other fields are a mystery so far.

Update: Perspective Correction

I had to write a bunch of code for this, but it seems to work pretty well. There isn't a RANSAC line-finder in OpenCV for some reason, so I had to make one. It works by randomly selecting two points from a noisy set (containing some lines), then accepting points close to the line connecting the two chosen points. The line is then least-squares fit to the set of selected (inlier) points. I use this to find a quadrilateral region enclosing the barcode. Mapping this quadrilateral to a square with a planar homography removes the perspective distortion. You can see it here, where the enclosing rectangle (yellow) really doesn't capture the shape of the code, while the fit lines (magenta) do.

The image chip in the top left is the extracted and corrected barcode. There's still some distortion caused by the code not being flat, but that's probably best handled after the chip has been extracted. I'm thinking of ways to deal with this.

So far, the experiments with libdmtx have been mixed. It seems *very* slow, and doesn't recognize the codes very often at all.

I think the vision code is getting good enough that I can take a crack at interpreting the barcode myself. I found this document which explains much of the code. If I limit myself to the DigiKey version (size, shape, etc), I can probably write a decently robust algorithm.

Next Steps

I need to clean this prototype code up, refine it a bit, and put it up on the githubs.

Digikey has a published API and client (C#, anyway). You have to register with them to use it, but this might be the easiest way to collect information about each part as it is scanned.

Alternatively, the data could be scraped from the DigiKey web site, although that sounds like a pain. And it could be fragile.

Ted Yapo

Ted Yapo

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.

I'm late to the party, but here's what's in that code. There are two ASCII control characters, hex 1d and 1e, the Group Separator (␝) and Record Separator (␞) respectively.

- [)>␞ : Indicates the data is compliant to ISO/IEC 15434.

- 06 : From ISO 15434, that the data is using ASC MH 10 data identifiers

After there are fields separated with the Group Separator. Each fields starts with an optional number and a mandatory single letter that defines the data type. These codes are defined in MH10.8. After the code is the data value. The data fields in the above code are:

- P: Item Identification Code assigned by Customer. (VSOP98260CT-ND)

- 1P: Item Identification Code assigned by Supplier. (VSOP98260)

- K: Order Number assigned by Customer to identify a Purchasing Transaction (empty)

- 1K: Order Number assigned by Supplier to identify a Purchasing Transaction (54152345)

- 10K: Invoice Number. (61950195)

- 11K: Packing List Number. (1)

- 4L: Country of Origin, two-character ISO 3166 country code. (empty)

- Q: Quantity, Number of Pieces, or Amount (10)

- 11Z: Structured Free Text (PICK)

- 12Z: Structured Free Text (3504686)

- 13Z: Structured Free Text (158510)

- 20Z: Structured Free Text (0000...)

Are you sure? yes | no

This label encoding is MH10.8.2. You are missing the special characters (\x1d \x1e) in the datamatrix dump. My scanner outputs "[)>\x1e06\x1dPVSOP98260CT-ND\x1d1...", which looks nicer to decode.

Are you sure? yes | no

Interestingly, the characters are actually there verbatim. You can see them, for example, if you copy and paste the string into an editor that supports displaying non-printable characters, for example into Visual Studio Code.

Are you sure? yes | no