Introduction:

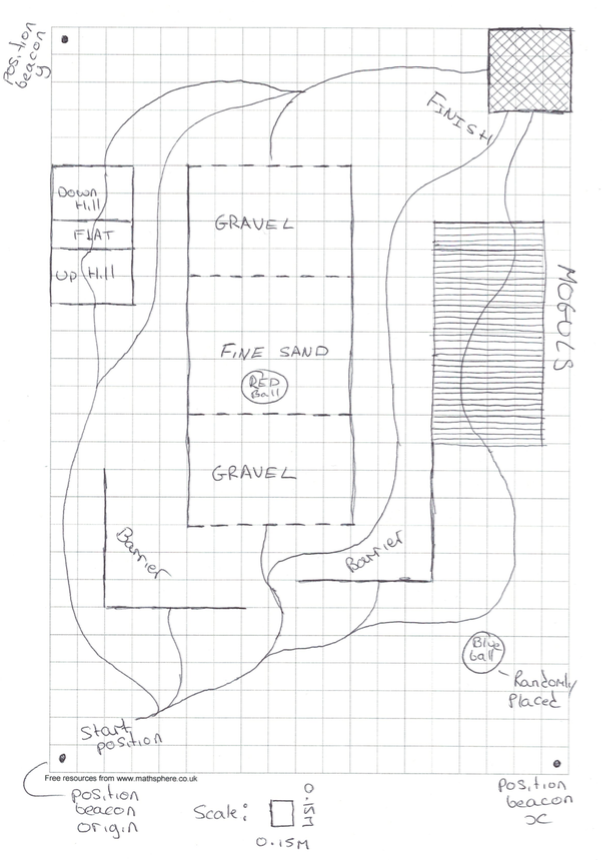

The robotics competition is organized by the Robotics Society at the University of Manchester. The main requirement is to build an autonomous robot, carrying out every stage from design through manufacturing to programming. The robot must operate with a high degree of autonomy so that it can travel from point A to point B while negotiating challenging, randomly placed obstacles. This project integrates two important aspects of modern electronics, namely computer engineering and control systems engineering. The competition will be monitored by a panel of judges, who will decide the winner against a number of criteria.

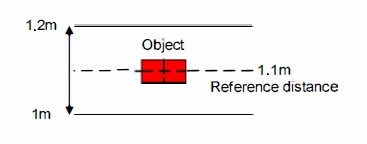

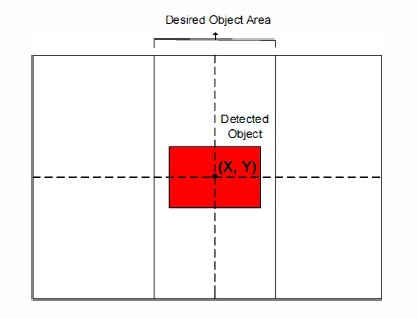

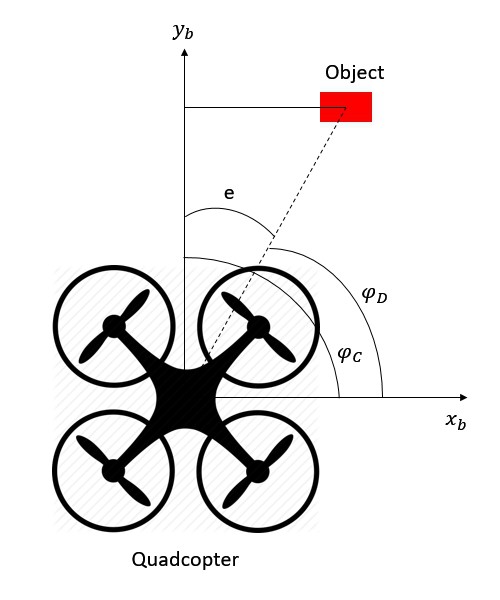

Two randomly placed balls, one red and one blue, will be on the course, and a robot that successfully locates them gains extra marks from the judges. Our aim is therefore to engineer a quadcopter that autonomously scans the course for a red or blue ball. Once it detects either ball, it will hover over it, descend, touch it, and then scan the rest of the course for the other ball.
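The intended behaviour can be sketched as a simple loop over scan results. This is only an illustration of the sequence described above, not the actual flight code: the function name `run_mission`, the action strings, and the idea of feeding in one detection per scan step are all assumptions made for the example.

```python
from typing import Iterable, List, Optional

BALLS = ("red", "blue")


def run_mission(sightings: Iterable[Optional[str]]) -> List[str]:
    """Return the ordered list of actions for a sequence of scan results.

    Each item in `sightings` is the colour reported by one scan step
    ("red" or "blue"), or None when nothing is detected. (Hypothetical
    interface, chosen only for this sketch.)
    """
    touched: List[str] = []
    actions: List[str] = []
    for seen in sightings:
        if seen not in BALLS or seen in touched:
            actions.append("scan")  # nothing new in view, keep searching
            continue
        # A new ball was found: hover over it, descend, and touch it.
        actions += [f"hover:{seen}", f"descend:{seen}", f"touch:{seen}"]
        touched.append(seen)
        if len(touched) == len(BALLS):
            actions.append("done")  # both balls have been touched
            break
    return actions


if __name__ == "__main__":
    print(run_mission([None, "red", "red", None, "blue"]))
```

Note that a ball that has already been touched is ignored, so the quadcopter keeps scanning until it finds the other one.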

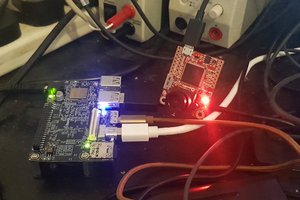

I am responsible for developing the image processing system that detects the red and blue balls and outputs their locations as coordinates. Francesco Fumagalli is programming the quadcopter itself. You can follow his blog here.
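To give a rough idea of what "detecting a ball and outputting its coordinates" can mean, here is a minimal sketch in plain Python. It assumes a frame is a grid of RGB pixels and treats a pixel as part of a ball when its red (or blue) channel clearly dominates the other two. The names `is_colour`, `locate_ball`, `make_test_frame` and the threshold values `margin` and `min_pixels` are illustrative assumptions. A real system would more likely use a vision library and a more robust colour space, so this is not the project's actual method.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]     # rows of pixels, indexed as image[y][x]


def is_colour(pixel: Pixel, colour: str, margin: int = 60) -> bool:
    """Return True if the requested channel dominates the pixel.

    `margin` is an assumed threshold: the target channel must exceed
    both other channels by at least this much.
    """
    r, g, b = pixel
    if colour == "red":
        return r - max(g, b) >= margin
    if colour == "blue":
        return b - max(r, g) >= margin
    raise ValueError(f"unsupported colour: {colour}")


def locate_ball(image: Image, colour: str,
                min_pixels: int = 4) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of matching pixels, or None if too few match."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if is_colour(pixel, colour):
                sum_x += x
                sum_y += y
                count += 1
    if count < min_pixels:
        return None  # not enough evidence of a ball in this frame
    return (sum_x / count, sum_y / count)


def make_test_frame() -> Image:
    """Build a 10x8 grey frame with a 2x2 red patch and a 2x2 blue patch."""
    frame = [[(120, 120, 120) for _ in range(10)] for _ in range(8)]
    for y in (1, 2):
        for x in (2, 3):
            frame[y][x] = (220, 30, 30)
    for y in (5, 6):
        for x in (7, 8):
            frame[y][x] = (20, 40, 210)
    return frame


if __name__ == "__main__":
    frame = make_test_frame()
    print("red:", locate_ball(frame, "red"))
    print("blue:", locate_ball(frame, "blue"))
```

The centroid is given in pixel coordinates of the frame. Converting it to a position on the course would need the camera geometry and the quadcopter's altitude, which are outside this sketch.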

P.S. The majority of the components used in this project are supplied by the UoM Robotics Society.

The course of the competition:

Figure 2: Image showing a detected object within the desired area

Adam Taylor

kutluhan_aktar

Eugene

Hi Canberk Gurel.

I have experience building drones, and I find this project very interesting. I think that if you use OpenCV on a Raspberry Pi 2, you can process images at about 6 fps, which may be enough to control the drone at low speeds. You have my support for your project.

But my English is very basic...