Hack Chat Transcript, Part 2

01/19/2022 at 21:09

with ultrasound you probably wouldn't be measuring the nerve activity you'd be measuring the actual contraction of the muscle, but it might fill in the gaps..

Didn't know you could use ultrasound for that, that's fascinating

@anfractuosity Focused ultrasound was first used for surgery and cancers, I think. Getting to the level of sensitivity and care to sense and stimulate individual nerves took time and a lot of data. Most of the raw data went into human neural nets, so none of it got shared. If your group shares the raw data, there are groups world wide who can work on algorithms and clearly defined tasks and tests.

It's literally "The machine that goes 'PING!'"

@RichardCollins intriguing, not sure i knew it was used for cancers. I recall touching an ultrasonic fogger thing, and it seemed to hurt a bit

what kind of pain? heat? :)

with array of transducers you can steer the beam/focal point. lots of videos on youtube.

yeah hard to explain heh, i guess it felt like heat

that's interesting you're using gnuradio companion for some stuff :)

The concept of using the existing muscles is powerful. Exoskeletons and robotic assistance are costly and clumsy. Taking the "intention" signals and sending them to a body controller that knows the 3D structure and properties, then determines the muscles needed and their response signals, is possible. Big data, external computer needed for prototypes, but wireless sensors and stimulation. Look at TENS stimulators, they are high-voltage, cheap circuits, but timing and strength can stimulate the right nerve. About 650 muscles in the body.

that's good for assistive tech/medical tech. but the force doesn't scale for exoskeleton use. different domain, though, but could also work for physical telepresence.

@anfractuosity Yes, I found that this is the best data plotter for my purposes, although it feels like it hasn't exactly been designed for my use case :D

The software defined radio groups are expanding and improving every day. They are into 10s of gigasamples per second at 12 bits. If you have the money you can get most anything you want. But the 10 Msps (megasamples per second) 10 bit low cost devices give you FFTs to work with in a community where some of the hard parts are done for you. Then focus on algorithms and applications.

biosignals should work with way lower samplerates.

the highest useful sampling rate for surface EMG is around 4kHz, though 500Hz gives you most of the signal already

You should be able to use an SDR with a tiny bit of electrical fiddling to get high sampling rate FFTs. The radio signals are microvolts. The USB oscilloscope groups are also going faster - using the same technologies. They just don't have FFT and neural net software plugged in. They can handle millivolt signals, with differential amplifiers more and more built into the oscilloscope programmable amplifier front ends.

does anybody know good dry electrodes that one could use for EMG? I'm still using very suboptimal ones

using an SDR for processing biosignals sure sounds interesting, haven't thought of that

I'd imagine the dry EEG electrodes (either gold or Ag/AgCl) would work, although I've never tried

will try them out

thank you @Preston

if you have any more questions after this session, you can come to PsyLink's Matrix chatroom any time: https://matrix.to/#/#psylink:matrix.org

@hut we have some limited success with conductive fabric - but can't say I'm satisfied with it, looking for better options

@hut You know I'm using brass or silver-plated corkboard pins or copper shimmies from laptop CPUs since they're both cheap, but I'm yet to have a good comparison between them (https://github.com/PerlinWarp/pyomyo/wiki/The-basics-of-EMG-design ). Anyone know if these are horrible ideas? ahah

doesn't copper / silver tarnish though?

older prototypes used snap buttons that are typically found in jackets or other clothing

Electron paramagnetic resonance spectroscopic microscopy, and NMR imaging spectroscopic microscopy and other groups are trying to measure (non invasive) nerve activity. The microscopic is mostly cubic millimeter voxels, but people go larger. Imaging ultrasound can modulate electrical signals so groups are combining EEG MEG (magneto) and other non contact electromagnetic measurements with ultrasound. All for tracking nerve activity.

And just like that, our time is up! Really good discussion, great way to kick off 2022! I want to thank hut for his time today, and all of you for the great questions and insights. Feel free to keep the discussion going here, you're welcome to stay as long as you like. Thanks all!

Thank you Dan for inviting me!

You're welcome! And everyone make sure you come back for next week's chat:

Thanks @hut

https://hackaday.io/event/183427-compliant-mechanisms-hack-chat

that was really cool! look forward to reading more about your project :)

the link isn't valid

Thanks everyone :)

The stakes are high. Many people become paralyzed from accidents and diseases, but also drugs and stroke. So tens of millions need devices to measure "intention" by nerve activity or small muscle movements - and translate that to muscle stimulation or to machine controls. The intentional use by anyone means driving vehicles and operating complex equipment.

sorry, now it's good

@Nicolas Tremblay - Sorry about that, it was still private. I changed it to public

https://hackaday.io/event/183427-compliant-mechanisms-hack-chat

Thanks @hut !

@anfractuosity For more news, you can subscribe to the RSS feed of the development blog [https://psylink.me/index.xml] or follow PsyLink on Mastodon: https://fosstodon.org/@psylink

oh cheers, i like rss too

thank you @hut and everyone else for a 'stimulating' conversation.

my pleasure, thank you all too!

Thanks everyone, especially @hut and @Dan Maloney !

Hack Chat Transcript, Part 1

01/19/2022 at 21:08

OK, welcome back to the Hack Chat! We've been on break since before Christmas, so I'm going to have to remember all the right buttons to push. Anyways, let's get started. I'm Dan, I'll be the mod today along with Dusan as we welcome @hut to the Hack Chat to talk about electromyography.

Hi Dan, can electromyography be beneficial for building an exoskeleton?

Hi Dan

Welcome everyone!

Hi @hut -- care to give us a little detail on how you got into EMG?

Hi Dule! Been a while, eh?

Indeed, long time!

Hi Dan :) I got into EMG because I've been wanting to build BCIs for a long time but always kept pushing it off because it just seems too difficult, especially if you want to implant electrodes. But then I figured, EMG is the next best thing :)

Do you mind explaining what Electromyography actually is?

How does it worksy?

Hi Marc! I'll let hut take that one...

@curiousmarc when the brain wants to activate muscles, it sends electric impulses through the spinal cord to activate these. This triggers a complex pattern of electricity in the muscles, which you can record with electrodes

Can you compare the different types of EMG electrodes (stainless steel, Ag/AgCl (dry and gelled), and gold) and what their respective use cases may be

@Preston I'm not an expert on different materials, but I can tell you that dry electrodes are more useful for non-medical, consumer applications, since they don't require gel to be applied on each use, whereas the gelled ones (wet electrodes) result in much better signals, which can be used better in a medical setting

Have you attempted to record/decode the movements of individual fingers? (Such as what appears to be shown in CTRL Labs demos)

So you "just" pick up an electrical signal from the skin? Where do you place the electrodes?

I'd almost imagine that dry electrodes would help decrease the background noise from other signals in the body, like ECG or just plain EMI noise coupled from the environment.

@anfractuosity yes, I placed electrodes on the Flexor digitorum superficialis muscle and recorded the patterns that arise when I press individual fingers onto the table, and I found distinct patterns in the resulting EMG signal from which you can deduce which finger was used

@curiousmarc for some implementation details on EMG, I'd recommend reading this: https://www.delsys.com/downloads/TUTORIAL/semg-detection-and-recording.pdf You get a potential difference from points on the skin and then amplify it, commonly using an instrumentation amplifier. The psylink blog also has more great details

@perlinwarp Thanks, I'm off to the wizard, er, link.

@anfractuosity if you look at the video, the electrode module is actually sitting on the flexor digitorum superficialis muscle.

What kind of algorithms do you need to interpret the signals?

@hut neat :) would i be right in thinking you'd need a large number of electrodes to monitor each finger simultaneously? they seem to use lots of electrodes unless i'm mistaken?

Guess you've got to know your anatomy pretty well. Seems like most people think the muscles for the fingers are actually in the fingers, not in the forearm like they are.

I also tried typing on a keyboard while training the AI, and hoped that I can later type on an invisible keyboard to reproduce the same keys, but unfortunately this worked only when I restricted myself to a small number of keys (2 or 3)

@Dan Maloney yes, I spend a lot of time exploring the forearm :D

@anfractuosity that depends on level of detail you want to extract. 5 fingers rough flexion/extension can be done with 5 electrodes (did that myself), but you won't get very high reliability this way

@hut Sounds super interesting! Did you just use the raw EMG for training the network or did you find some features that work better?

it looked to me like they used 20+ i think

but didn't count properly

@Pete Warden when I started out, I used FFT and various methods of averaging, rolling window sampling, but before digging into signal processing algorithms further, I decided to give it a try to just throw the raw signals into a neural network and let it make sense of it.... and it just works. It's a pretty simple neural network too, with 6 layers and <1000 neurons
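For readers unfamiliar with the rolling-window averaging mentioned above, here is a minimal Python sketch of one common variant, a sliding-window RMS envelope over a single channel. The function name, window length, and sample values are assumptions for illustration only; nothing here is taken from PsyLink's code, which, per the message above, ended up feeding raw signals to the network instead.

```python
import math
from collections import deque


def rolling_rms(samples, window=50):
    """Yield the RMS of the most recent `window` samples after each new sample.

    `window` is a hypothetical choice; at 500 Hz, 50 samples would span 100 ms.
    """
    buf = deque(maxlen=window)  # old samples fall out automatically
    for x in samples:
        buf.append(x)
        yield math.sqrt(sum(v * v for v in buf) / len(buf))


# Made-up signal: quiet, a short burst of activity, quiet again.
signal = [0, 0, 3, -4, 0, 0]
envelope = list(rolling_rms(signal, window=2))
print(envelope)
```

The envelope rises during the burst and falls back to zero afterwards, which is the kind of hand-made feature a classifier could use in place of the raw waveform.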

@anfractuosity 8 has been proven to be enough by using the Myo, although some papers suggest you can get away with 4 using some clever ML, e.g. this one: https://dl.acm.org/doi/abs/10.1145/3442381.3449890 , It's also been implemented using the Thalmic Labs Myo to control VR here: https://github.com/PerlinWarp/NeuroGloves

@hut I designed a 10 finger EMG keyboard in high school, 1024 codes and it is not too hard to learn binary. No motion. One hand, 5 fingers, is 32 symbols, and you can use arm or other places for shift or a three bit modifier. 2^8 is 256 characters. Faster than moving fingers.
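The arithmetic in the binary keyboard message can be checked with a short Python sketch: each finger contributes one bit, so 10 fingers give 2^10 = 1024 codes, 5 fingers give 32, and 5 finger bits plus a 3-bit modifier give 2^8 = 256. The function names and the bit order (first finger as the least significant bit) are assumptions for illustration; the original design was not published.

```python
def code_space(n_fingers, modifier_bits=0):
    """Number of distinct chords with one bit per finger plus modifier bits."""
    return 2 ** (n_fingers + modifier_bits)


def chord_to_code(fingers, modifier=0, modifier_bits=0):
    """Pack per-finger on/off flags and an optional modifier into one integer.

    fingers: sequence of booleans, index 0 is the least significant bit
             (an assumed ordering).
    modifier: value from other muscle sites, stored above the finger bits.
    """
    if not 0 <= modifier < 2 ** modifier_bits:
        raise ValueError("modifier does not fit in modifier_bits")
    code = 0
    for i, active in enumerate(fingers):
        if active:
            code |= 1 << i
    return code | (modifier << len(fingers))


print(code_space(10))     # 1024 codes for ten fingers
print(code_space(5))      # 32 symbols for one hand
print(code_space(5, 3))   # 256 characters with a three-bit modifier
print(chord_to_code([True, False, True, False, False]))  # fingers 0 and 2 -> 5
```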

@hut great thanks! What kind of force feedback did you use for measuring force in flexion and extension? I'm not sure about how sensitive the load cells should be to record the single finger activity..

@hut neat! I work on TensorFlow Lite Micro, so I'm very interested in neural net approaches

@perlinwarp , thanks, will have a look!

@RichardCollins that sounds fascinating, do you have a link?

That was 55 years ago, we still used paper and pencil. I had computers the next few years, but not ones with digital media I could keep.

@Agnese Grison there is no force feedback, PsyLink is only reading signals

@hut Love the project! It's very inspirational, as I'm trying to develop a workout tracker device that records movements to skip having to enter your rep count and such into a workout tracker.

@AaronA thank you :) you can probably do workout tracking with purely an IMU with gyroscope/accelerometer though

@perlinwarp thanks for the image and the extra information :)

@hut I was just indicating that one hand dedicated to generating the bulk of the signal was sufficient, then convenient muscle sites on the neck, face, arms, legs, toes give you enough to communicate - at speed. When you learn to read fast, the first thing is to not subvocalize. The way that works for fingers and typing is to measure any small signal of the "intention" to move the fingers, not the movement itself.

I started with that, but feel like recording the muscle contractions is more accurate.

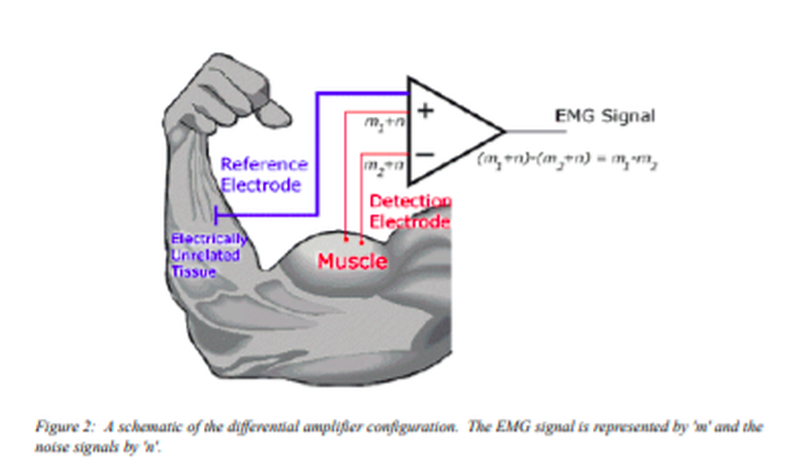

The ElectroMyoGram signals are voltages. The currents are small so differential amplifiers and high amplifications are needed. But "instrumentation" amplifier chips and systems are widespread. Most don't tell you the signal characteristics.

@hut You're welcome! Thanks for inspiring the community with such an awesome project, especially for publishing the schematics and code as open-source!

I was about to ask that. if differential signaling is still required with the machine learning.

@Daren Schwenke not necessarily. My first approaches were actually with plugging the electrode straight into the ADC of the microcontroller (arduino nano 33 ble sense), and while the readout is rather noisy, the signal is still detectable. But you get better results with differential amplification

@Daren Schwenke I have rather promising results with single reference and 4 channel electrodes (still running them through buffer opamps, but not instrumentation ones)

@RichardCollins I also want to get to the point where one can detect signals without visible movement. If you did that in high school, then I'm hopeful I'll get there eventually :)

(actually PsyLink might also be able to do this. I haven't tested that recently.)

@hut lots of interesting research on EMG based detection of subvocalization

of speech?

yep

yeah, that looks really neat too

A neural net can take noisy input. If you have 15,000 samples per second that is more than enough data to discriminate 1024 codes. I did not see the sampling rate mentioned, nor the bit size, nor any recorded data samples for testing and creating algorithms. An open group shares data and algorithms, in near real time.

that sounds like a military contract waiting to happen.

@Preston -- Where would the electrodes go for subvocalization? On the neck I'd imagine.

@Dan Maloney neck and face, look into the 'alter ego' work from the MIT media lab and its derivatives for a promising system

@RichardCollins the sampling rate of psylink is 500Hz @ 8 bit, 8 channels. But indeed there's no recorded data published. I can add that to the website

(it could go up to 12 bit, but currently bandwidth over BLE is a concern)
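To put the figures quoted above in perspective, here is a small Python sketch of the raw payload rate they imply. It assumes samples are tightly packed and ignores BLE packet overhead, connection intervals, and any compression, so it is a lower bound on the required throughput rather than a description of PsyLink's actual link budget. The function name is hypothetical.

```python
def raw_data_rate(sample_rate_hz, channels, bits_per_sample):
    """Return (bits per second, bytes per second) of raw sample payload."""
    bits_per_second = sample_rate_hz * channels * bits_per_sample
    return bits_per_second, bits_per_second / 8


print(raw_data_rate(500, 8, 8))    # current setup: (32000, 4000.0)
print(raw_data_rate(500, 8, 12))   # at 12 bit: (48000, 6000.0)
```

Going from 8 to 12 bits raises the payload by half, which is why the resolution is traded off against BLE bandwidth here.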

(it could go up to 12 bit, but currently bandwidth over BLE is a concern)![]() would wifi use too much power compared to BLE?

would wifi use too much power compared to BLE?![]() I chose BLE specifically because you can use it to emulate a human input device (keyboard or mouse) and connect to e.g. a phone to control it

I chose BLE specifically because you can use it to emulate a human input device (keyboard or mouse) and connect to e.g. a phone to control it![]() this has not been implemented yet though

this has not been implemented yet though![]() ah sorry, gotcha

ah sorry, gotcha![]() @anfractuosity wifi has high peak consumption, you'll need to put more effort in isolating analog schematics from its influence. Not impossible, but not easy as well

@anfractuosity wifi has high peak consumption, you'll need to put more effort in isolating analog schematics from its influence. Not impossible, but not easy as well![]() ahh

ahh![]() have you experienced any issues with the radio broadcasts affecting the signals that you have read?

have you experienced any issues with the radio broadcasts affecting the signals that you have read?![]() It helps to break up into "projects". When you try to do all things with all ways of doing those things, almost nothing gets done. And, when I search on topics like EMG there are tens of thousands of groups out there - all mostly trying the same things, and mostly not talking to each other.

It helps to break up into "projects". When you try to do all things with all ways of doing those things, almost nothing gets done. And, when I search on topics like EMG there are tens of thousands of groups out there - all mostly trying the same things, and mostly not talking to each other.![]() what about multichannel EMG? with devices like this https://hackaday.io/project/166893-freeeeg32-alpha15 and this https://hackaday.io/project/181521-freeeeg128-alpha

what about multichannel EMG? with devices like this https://hackaday.io/project/166893-freeeeg32-alpha15 and this https://hackaday.io/project/181521-freeeeg128-alpha![]() And the 33 BLE Sense supports TensorFlow for Micros so hopefully you can process some of the data on the board and reduce what needs sending, but still doesn't solve the bottlenecks for when training or gathering data for ML

And the 33 BLE Sense supports TensorFlow for Micros so hopefully you can process some of the data on the board and reduce what needs sending, but still doesn't solve the bottlenecks for when training or gathering data for ML![]() @Daren Schwenke not yet, but if you shine a bright lamp on the wires that connect the electrode module to the arduino, the noise goes crazy

@Daren Schwenke not yet, but if you shine a bright lamp on the wires that connect the electrode module to the arduino, the noise goes crazy![]() the difference between Wi-Fi and BLE I imagine is a an order of magnitude of power.

the difference between Wi-Fi and BLE I imagine is a an order of magnitude of power.![]() interesting!

interesting!![]() Am i right in thinking looking at the schematic you're amplifying the difference between two electrodes, on each of your PCBs?

Am i right in thinking looking at the schematic you're amplifying the difference between two electrodes, on each of your PCBs?![]() @RichardCollins communication between groups is indeed an issue, wish I knew how to bridge the groups

there are 32 differential inputs on FreeEEG32 and 128 differential inputs on FreeEEG128

there is a possibility, re wifi, to know when it will broadcast and sandwich the packets between the measurements.
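A rough sketch of the "sandwich the packets between the measurements" idea, assuming a fixed sampling period in which the measurement occupies the start of each period and the radio may only transmit in the quiet gap that follows. The function `plan_slots`, the guard interval, and all timing numbers are hypothetical.

```python
def plan_slots(period_us, measure_us, transmit_us, guard_us=0):
    """Return (tx_start, tx_end) offsets within one period, or None.

    Hypothetical helper: the transmit slot starts after the measurement
    plus a guard interval, and must end a guard interval before the
    next measurement begins.
    """
    tx_start = measure_us + guard_us
    tx_end = tx_start + transmit_us
    if tx_end + guard_us > period_us:
        return None  # packet does not fit between two measurements
    return (tx_start, tx_end)


# A 2000 us period with a 500 us measurement leaves room for a 1000 us packet.
print(plan_slots(2000, 500, 1000, guard_us=100))
# A 1000 us period does not leave room for a 600 us packet.
print(plan_slots(1000, 500, 600))
```

Whether a given radio stack exposes enough timing control to do this is a separate question that the sketch does not address.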

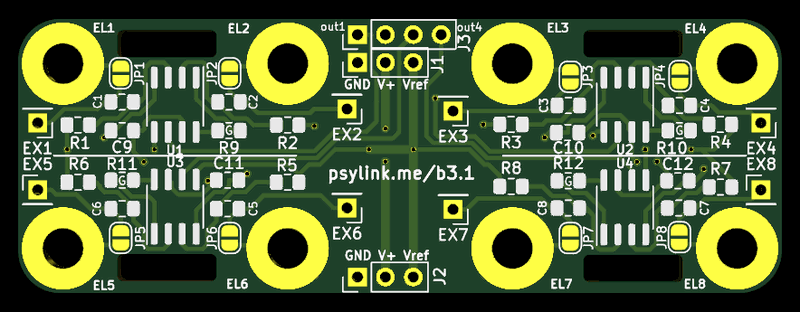

@anfractuosity the current electrode module ( https://psylink.me/b3.1/ ) supports 8 electrodes (the big yellow circles) and 4 differential amplifier chips calculate the difference between 2 electrodes each
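A small sketch of what "4 differential amplifier chips calculate the difference between 2 electrodes each" means numerically. The pairing of electrodes (0 with 1, 2 with 3, and so on) and the sample values are assumptions for illustration; the real board does this in analog hardware, not in software.

```python
def differential_channels(electrodes, pairs=((0, 1), (2, 3), (4, 5), (6, 7))):
    """Return one difference value per electrode pair.

    The default pairing is an assumption, not the documented wiring.
    """
    if len(electrodes) != 8:
        raise ValueError("expected 8 electrode readings")
    return [electrodes[a] - electrodes[b] for a, b in pairs]


sample = [1.0, 0.5, 2.0, 2.0, 0.25, 1.25, 3.0, 1.0]
print(differential_channels(sample))

# Interference that hits every electrode equally cancels in the difference.
noisy = [v + 10.0 for v in sample]
print(differential_channels(noisy) == differential_channels(sample))
```

The second print shows why differential measurement is attractive here: a common offset on all electrodes leaves the four output channels unchanged.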

all with simultaneous sampling, used ad7771 and ads131m08

cool , the pcb images are v. helpful

any thoughts on possibly enhancing your data using something like ultrasound?

We should have pictures of the things you mentioned floating on these screens. So we can just point to things, and keep adding details. Taking text words and associating them, and going back to earlier mentions is time consuming. @hut A friend of mine's husband broke his neck at C4 and I spent all of October Nov Dec finding all the groups doing 3D imaging of nerve activity, and stimulation of muscles by many methods. Stimulated muscles can substitute for brain control. So take the "intention" and convert directly to the suite and timing of muscles needed to move the body using existing muscles. The weak muscles get put through training to maintain tone and strength. Same works for micro-g environments in space, mars, moon.

@Dmitry Sukhoruchkin those links sound very interesting, will research that project after the chat :) perhaps we can collaborate

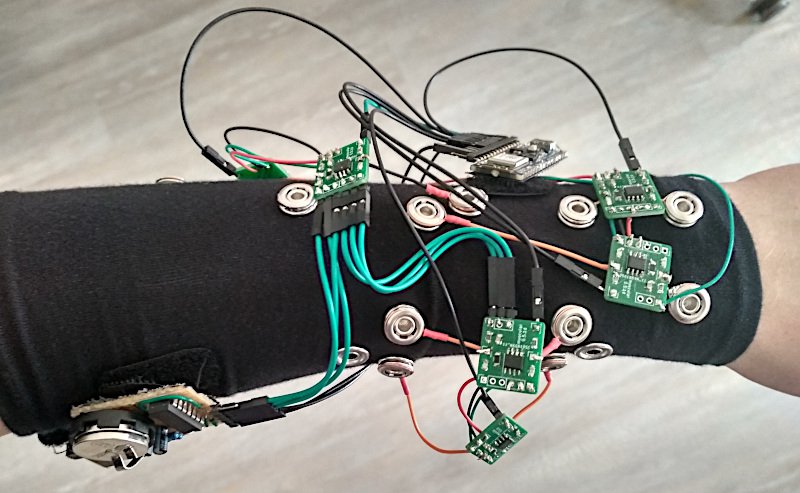

@RichardCollins you're right, here's a current image of psylink

this is the electrode module I linked before

FYI, I'll be posting a transcript right after the chat. In case anyone needs to refer back to links, etc.

Ultrasound nerve activity monitoring is fairly advanced. DARPA awarded a contract to implant a bypass device for the spinal cord that measured above and stimulated below, and measured below and stimulated above - the break. "Focused ultrasound stimulation" is well advanced. An offshoot of array focusing methods from ultrasound, radar and other imaging array methods.

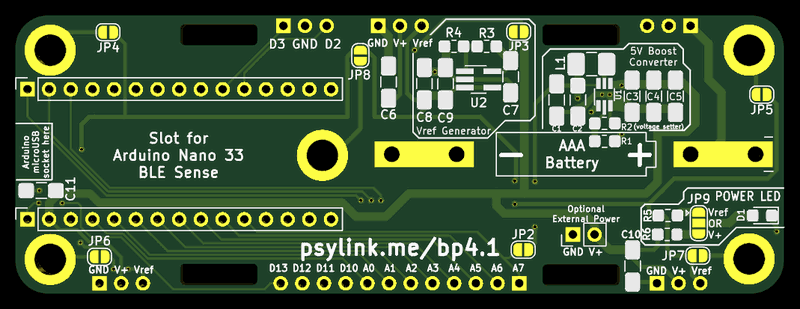

and the power module ( https://psylink.me/bp4.1/ ) for plugging in the AAA battery and the Arduino Nano 33 BLE Sense

With ultrasound, would you have to be pretty careful about power intensity too, to avoid damage?

ultrasound can be also used for bladeless neurosurgery. put a neurotoxin in the blood stream, selectively disrupt blood-brain barrier. https://www.sciencedaily.com/releases/2021/12/211203095804.htm
