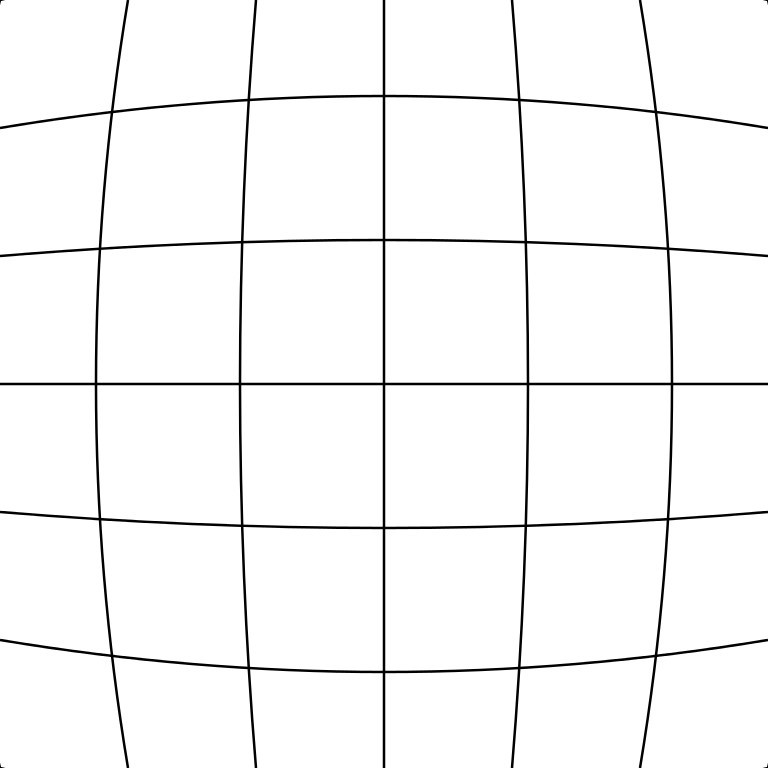

Certain types of camera lenses (such as the one in the webcam used in this project) distort images such that objects near the optical axis of the lens occupy disproportionately large areas of the image, while objects near the periphery occupy smaller areas. The following figure illustrates this effect:

This so-called barrel distortion means that distances in the real world are not represented proportionally in the camera image, i.e. distance relations in the camera image are non-linear.

However, in this project, linear distance relations are required to estimate the servo motor angles from the camera images. Hence, lens distortions have to be corrected by remapping the camera images to a rectilinear representation. This procedure is also called unwarping. To correct for lens distortions in the camera images I made use of OpenCV's camera calibration tool.

Estimation of lens parameters using OpenCV

For unwarping images, OpenCV takes radial and tangential distortion into account. Radial distortion is what leads to the barrel or fisheye effect described above. Tangential distortion, in contrast, arises when the lens is not aligned perfectly parallel to the image plane, so that the optical axis is decentered relative to it.

To correct for radial distortion the following formulas can be used:
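In the notation of OpenCV's camera calibration documentation, with radial coefficients $k_1, k_2, k_3$ and $r^2 = x^2 + y^2$, the radial correction reads as follows (the symbol names are taken from that documentation):

```latex
\begin{aligned}
x_{corrected} &= x \, (1 + k_1 r^2 + k_2 r^4 + k_3 r^6) \\
y_{corrected} &= y \, (1 + k_1 r^2 + k_2 r^4 + k_3 r^6)
\end{aligned}
```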

So a pixel position (x, y) in the original image will be remapped to the pixel position (x_{corrected}, y_{corrected}) in the new image.

Tangential distortion can be corrected via the formulas:
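Following OpenCV's camera calibration documentation, with tangential coefficients $p_1, p_2$ and $r^2 = x^2 + y^2$ (symbol names taken from that documentation), the tangential correction can be written as:

```latex
\begin{aligned}
x_{corrected} &= x + \left[\, 2 p_1 x y + p_2 (r^2 + 2 x^2) \,\right] \\
y_{corrected} &= y + \left[\, p_1 (r^2 + 2 y^2) + 2 p_2 x y \,\right]
\end{aligned}
```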

Hence there are five distortion parameters, which OpenCV represents as a single-row matrix with five columns:
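In the ordering used by OpenCV, with $k_i$ the radial and $p_i$ the tangential coefficients, this row reads:

```latex
\text{distortion coefficients} = \begin{pmatrix} k_1 & k_2 & p_1 & p_2 & k_3 \end{pmatrix}
```

Note that $k_3$ comes last, after the two tangential terms. The five numbers printed by the calibration script follow the same order.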

For the unit conversion OpenCV uses the following formula:
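As stated in OpenCV's camera calibration documentation, the conversion is a matrix product in homogeneous coordinates, where $(X, Y, Z)$ is a point in camera coordinates and the pixel position is $(x / w,\; y / w)$:

```latex
\begin{bmatrix} x \\ y \\ w \end{bmatrix}
=
\begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix}
```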

The parameters are f_x and f_y (the camera focal lengths) and (c_x, c_y), the optical center expressed in pixel coordinates. The matrix containing these four parameters is referred to as the camera matrix.
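To make the roles of the five distortion coefficients and the camera matrix concrete, here is a minimal NumPy sketch that evaluates the radial and tangential terms and then converts the result to pixel units. The function names `distort_normalized` and `to_pixels` are hypothetical, and the sketch assumes that (x, y) are normalized coordinates, i.e. already divided by the depth Z.

```python
import numpy as np


def distort_normalized(x, y, d):
    """Evaluate the radial and tangential terms for normalized coordinates.

    d is the five-element coefficient row (k1, k2, p1, p2, k3).
    """
    k1, k2, p1, p2, k3 = d
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    x_out = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_out = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_out, y_out


def to_pixels(x, y, K):
    """Convert normalized coordinates to pixel units with camera matrix K."""
    u = K[0, 0] * x + K[0, 2]
    v = K[1, 1] * y + K[1, 2]
    return u, v


# Illustrative values only: a camera matrix and a pure barrel term (k1 < 0)
K = np.array([[600.0, 0.0, 320.0],
              [0.0, 600.0, 240.0],
              [0.0, 0.0, 1.0]])
d = np.array([-0.3, 0.0, 0.0, 0.0, 0.0])

x_d, y_d = distort_normalized(0.5, 0.0, d)
u, v = to_pixels(x_d, y_d, K)
```

A point on the optical axis, (0, 0), is left unchanged by the distortion terms and lands on (c_x, c_y). With a negative k_1, points away from the axis are pulled towards the center.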

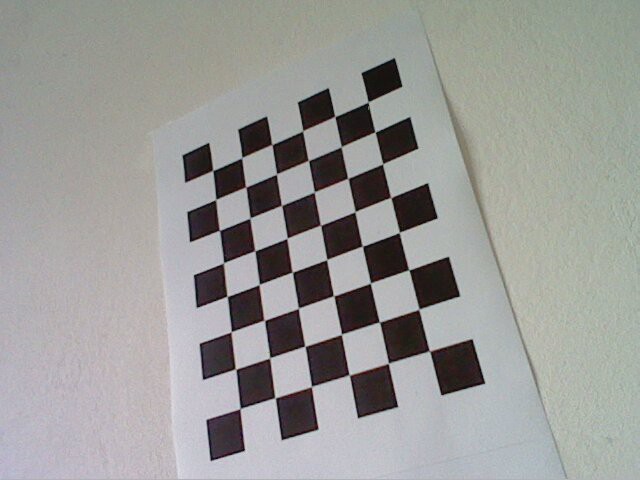

The camera matrix and the vector of distortion coefficients can be obtained with OpenCV's camera calibration toolbox. OpenCV estimates the constants in these two matrices by solving geometric equations over several camera snapshots of a calibration object. For calibration I used snapshots of a black-and-white chessboard pattern with known dimensions, taken with my webcam.

Running the calibration script on these snapshots:

```
python calibrate.py "calibration_samples/image_*.jpg"
```

yields the calibration parameters for the camera, namely the root-mean-square (RMS) error of the parameter estimation, the camera matrix, and the distortion coefficients:

```
RMS: 0.171988082483
camera matrix:
[[ 611.18384754    0.          515.31108992]
 [   0.          611.06728767  402.07541332]
 [   0.            0.            1.        ]]
distortion coefficients: [-0.36824145  0.2848545   0.00079123  0.00064924 -0.16345661]
```

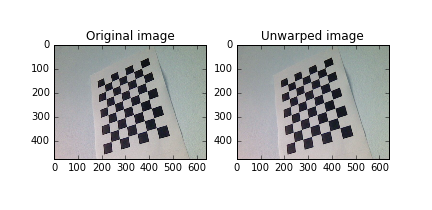
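For readers who want to see what such a chessboard calibration involves, the following is a rough sketch built on OpenCV's `findChessboardCorners` and `calibrateCamera`. It is not the script used above. The helper names, the board size of 9 x 6 inner corners, and the square size are all assumptions for illustration.

```python
import glob

import numpy as np


def chessboard_object_points(board_size, square_size):
    """3-D coordinates of the inner corners of a flat chessboard (z = 0).

    board_size is (corners per row, corners per column).
    """
    cols, rows = board_size
    objp = np.zeros((rows * cols, 3), np.float32)
    objp[:, :2] = np.mgrid[0:cols, 0:rows].T.reshape(-1, 2) * square_size
    return objp


def calibrate_from_images(pattern, board_size=(9, 6), square_size=1.0):
    """Estimate RMS error, camera matrix and distortion coefficients.

    board_size and square_size are assumed values; they must match the
    printed pattern that was photographed.
    """
    import cv2  # imported here so the helper above works without OpenCV

    objp = chessboard_object_points(board_size, square_size)
    obj_points, img_points = [], []
    image_size = None
    for path in sorted(glob.glob(pattern)):
        gray = cv2.imread(path, cv2.IMREAD_GRAYSCALE)
        if gray is None:
            continue  # unreadable file
        found, corners = cv2.findChessboardCorners(gray, board_size)
        if not found:
            continue  # pattern not visible in this snapshot
        image_size = gray.shape[::-1]  # (width, height)
        obj_points.append(objp)
        img_points.append(corners)
    if not obj_points:
        raise RuntimeError("no chessboard pattern found in any image")
    rms, K, d, _rvecs, _tvecs = cv2.calibrateCamera(
        obj_points, img_points, image_size, None, None)
    return rms, K, d.ravel()
```

Calling `calibrate_from_images("calibration_samples/image_*.jpg")` would return the same three quantities that the script prints, provided the assumed board geometry matches the real pattern.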

Unwarping the images

To unwarp an image and hence correct for lens distortions, I used the calibration parameters and OpenCV's remap function, as shown in the example code below:

```python
import cv2
import numpy as np
from matplotlib import pyplot as plt

# Define camera matrix K
K = np.array([[673.9683892, 0., 343.68638231],
              [0., 676.08466459, 245.31865398],
              [0., 0., 1.]])

# Define distortion coefficients d (k1, k2, p1, p2, k3)
d = np.array([5.44787247e-02, 1.23043244e-01, -4.52559581e-04,
              5.47011732e-03, -6.83110234e-01])

# Read an example image and acquire its size
img = cv2.imread("calibration_samples/2016-07-13-124020.jpg")
if img is None:
    raise IOError("Could not read the example image")
h, w = img.shape[:2]

# Generate new camera matrix from parameters
# (alpha = 0 keeps only valid pixels in the undistorted image)
newcameramatrix, roi = cv2.getOptimalNewCameraMatrix(K, d, (w, h), 0)

# Generate look-up tables for remapping the camera image
# (cv2.CV_32FC1 requests 32-bit floating-point maps)
mapx, mapy = cv2.initUndistortRectifyMap(K, d, None, newcameramatrix,
                                         (w, h), cv2.CV_32FC1)

# Remap the original image to a new image
newimg = cv2.remap(img, mapx, mapy, cv2.INTER_LINEAR)

# Display old and new image
# (OpenCV loads images as BGR, whereas matplotlib expects RGB)
fig, (oldimg_ax, newimg_ax) = plt.subplots(1, 2)
oldimg_ax.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
oldimg_ax.set_title('Original image')
newimg_ax.imshow(cv2.cvtColor(newimg, cv2.COLOR_BGR2RGB))
newimg_ax.set_title('Unwarped image')
plt.show()
```

Here, after generating an optimized camera matrix by passing the distortion coefficients d and the camera matrix K into OpenCV's getOptimalNewCameraMatrix function, I generate the look-up tables (LUTs) mapx and mapy, which remap pixel values from the original camera image into an undistorted image, using the initUndistortRectifyMap function.
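To give an idea of what these look-up tables contain, here is a simplified NumPy sketch that builds comparable maps by hand. It assumes no rectification rotation and the five-coefficient model only. The function name `build_undistort_maps` is hypothetical, and this is not OpenCV's implementation. For each pixel of the undistorted image it computes normalized coordinates via the new camera matrix, applies the distortion model, and converts back to pixel units with K.

```python
import numpy as np


def build_undistort_maps(K, d, new_K, size):
    """Return (mapx, mapy), each of shape (h, w), for size = (w, h)."""
    w, h = size
    k1, k2, p1, p2, k3 = d
    # n indexes columns and m indexes rows of the undistorted image
    n, m = np.meshgrid(np.arange(w, dtype=np.float64),
                       np.arange(h, dtype=np.float64))
    # Undistorted pixel positions -> normalized coordinates
    x = (n - new_K[0, 2]) / new_K[0, 0]
    y = (m - new_K[1, 2]) / new_K[1, 1]
    # Apply the radial and tangential distortion model
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    # Distorted normalized coordinates -> pixel positions in the original
    mapx = K[0, 0] * x_d + K[0, 2]
    mapy = K[1, 1] * y_d + K[1, 2]
    return mapx.astype(np.float32), mapy.astype(np.float32)
```

With all coefficients set to zero and `new_K` equal to `K`, the maps reduce to the identity: `mapx[m, n]` is n and `mapy[m, n]` is m.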

Each of the two LUTs is a two-dimensional matrix LUT_{m,n}, where m and n are the pixel positions in the undistorted image. At position (m,n), mapx contains the pixel coordinate x and mapy the pixel coordinate y in the original image. Hence, I construct the undistorted image from the original image by filling in the pixel value at position (m,n) in the undistorted image with the pixel value at position (x,y) in the original image. This is performed by OpenCV's remap function. As the pixel positions (x,y) specified by the LUTs are not necessarily integers, one has to choose an algorithm for interpolating a pixel value at non-integer positions in the original image. Here I use simple bilinear interpolation, selected by the argument INTER_LINEAR passed to remap.
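The look-up and interpolation step can be illustrated with a small NumPy sketch. The function `remap_bilinear` is a hypothetical stand-in for illustration, not OpenCV's implementation. Unlike `cv2.remap` with its default border handling, it clamps positions outside the image to the nearest edge pixel, and it returns floating-point values.

```python
import numpy as np


def remap_bilinear(img, mapx, mapy):
    """Sample img at the (x, y) positions given by mapx and mapy."""
    h, w = img.shape[:2]
    src = img.astype(np.float64)
    # Integer pixel to the upper left of each sampling position
    x0 = np.floor(mapx).astype(int)
    y0 = np.floor(mapy).astype(int)
    # Fractional offsets act as interpolation weights
    wx = mapx - x0
    wy = mapy - y0
    # Clamp the four neighbours to the image bounds
    x0c, x1c = np.clip(x0, 0, w - 1), np.clip(x0 + 1, 0, w - 1)
    y0c, y1c = np.clip(y0, 0, h - 1), np.clip(y0 + 1, 0, h - 1)
    if src.ndim == 3:
        # Broadcast the weights over the colour channels
        wx, wy = wx[..., None], wy[..., None]
    top = src[y0c, x0c] * (1 - wx) + src[y0c, x1c] * wx
    bottom = src[y1c, x0c] * (1 - wx) + src[y1c, x1c] * wx
    return top * (1 - wy) + bottom * wy
```

For example, sampling a 2 x 2 image exactly between its four pixels returns their mean, while integer positions return the original pixel values unchanged.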

The Unwarping class

In order to unwarp the images obtained from the webcam, I have written a Python class which performs the computations described above (see the GitHub repository). As in the class for controlling the servos, I use Python's multiprocessing library, which provides an interface for forking the image unwarping object as a separate process on one of the Raspberry Pi 2's CPU cores.
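The general pattern of running image processing in its own process can be sketched as follows. This is not the class from the repository; the names `unwarp_worker` and `start_unwarp_process` are hypothetical, and the sketch assumes that frames are exchanged through two queues and that `None` serves as the stop signal.

```python
import multiprocessing as mp


def unwarp_worker(frames_in, frames_out, unwarp):
    """Apply unwarp to every frame from frames_in until None arrives."""
    while True:
        frame = frames_in.get()
        if frame is None:  # sentinel: stop the worker
            break
        frames_out.put(unwarp(frame))


def start_unwarp_process(unwarp):
    """Start the worker in a separate process and return it with its queues.

    unwarp should be a module-level function so that it can also be
    passed to the child process on platforms that do not fork.
    """
    frames_in = mp.Queue()
    frames_out = mp.Queue()
    process = mp.Process(target=unwarp_worker,
                         args=(frames_in, frames_out, unwarp))
    process.daemon = True  # do not keep the program alive on exit
    process.start()
    return process, frames_in, frames_out
```

The main loop would then put each captured frame into `frames_in`, read unwarped frames from `frames_out`, and finally send `None` to shut the worker down.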

hanno

Discussions

Hey, I tried this out but was stonewalled by incompatibilities with opencv3! Dang. Looked useful.

Hello,

Thank you for posting this. I am very new to both python and raspberry Pis. I really like the clear explanations and you walking us through the process. I do have one question though, would it be possible to use your method to correct a video feed while it is being outputted through the HDMI interface?

Great bits of info here, thanks :)

And keep it up, liking the idea of your project a lot!