After correcting the camera images for lens distortion, I apply a face detection algorithm to each camera image to obtain the apparent pixel position as well as the apparent pixel width and height of a face in front of the camera. For this purpose I use a set of Haar-like features trained to match characteristic features of images of frontal faces. A classifier then tries to match the features contained in the Haar feature database against the camera images in order to detect a face (see Viola-Jones object detection). It is worth noting that, although training a set of Haar filters for facial features is possible in principle (given a large enough database of face images), in the scope of this project I use a bank of pre-trained Haar-like features that comes with OpenCV.

Detecting facial features using Haar-like features

Haar-like features can be defined as the difference of the sum of pixels

of areas inside a rectangle, which can be at any position and scale

within the original image. Hence, by trying to match each feature (at different scales) in the database with different positions in the original

image the existence

or absence of certain characteristics at the image position can be obtained. These

characteristics can be for example edges or changes in textures. Hence,

when applying a set of haar-like features pre-trained to match certain

characteristics of facial features, the correlation by which a certain feature matches an image feature can tell something about the existence

or non-existence of certain facial characteristics at a certain

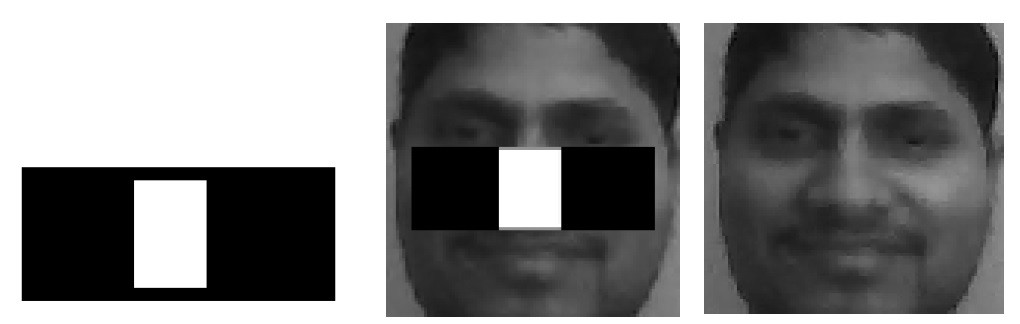

position. As an example, in the following figure a haar-like feature that

looks similar to the bridge of the nose is applied onto a face (image taken from

Wikipedia):
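To make the definition above concrete, here is a minimal pure-Python sketch of how a single two-rectangle Haar-like feature can be evaluated. It uses an integral image (summed-area table), the trick from the Viola-Jones paper that makes any rectangle sum cost four lookups regardless of the rectangle's size. The function names are my own and hypothetical; OpenCV's detector uses many more feature shapes and is not implemented like this.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> List[List[int]]:
    """ii[y][x] = sum of img over rows < y and columns < x (extra zero row/column)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle with top-left corner (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Left half minus right half of the window: a large |value| indicates a vertical edge."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# A bright left half next to a dark right half gives a strong response
edge = [[10, 10, 0, 0] for _ in range(4)]
print(two_rect_feature(integral_image(edge), 0, 0, 4, 4))
```

A uniform patch gives a response of zero, so the magnitude of the feature value is what signals the presence of an edge at that position and scale.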

To detect a face using Haar-like facial features, one can use a cascade classifier. Explaining the inner workings of the cascade classifier used here is beyond the scope of this project update. However, this video by Adam Harvey gives quite an intuitive impression of how it works:

OpenCV Face Detection: Visualized from Adam Harvey on Vimeo.

Face detection with a cascade classifier built from Haar-like features can be implemented in OpenCV as follows:

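```python
import cv2
from matplotlib import pyplot as plt

# Initialize the cascade classifier with pre-trained Haar-like facial features.
# cv2.data.haarcascades is the folder of XML files bundled with the opencv-python
# wheels; use a plain file path instead if your OpenCV build lacks cv2.data.
classifier = cv2.CascadeClassifier(cv2.data.haarcascades + "haarcascade_frontalface_alt2.xml")
if classifier.empty():
    raise IOError("Could not load haarcascade_frontalface_alt2.xml")

# Read an example image (cv2.imread returns None instead of raising on failure)
image = cv2.imread("images/merkel_small.jpg")
if image is None:
    raise IOError("Could not read images/merkel_small.jpg")

# Convert the image to grayscale; the detector works on single-channel images
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Detect faces in the image. The cv2.cv.CV_HAAR_* constants only exist in OpenCV 2.x;
# OpenCV 3 and later expose the same bit flags as cv2.CASCADE_*.
faces = classifier.detectMultiScale(
    gray,
    scaleFactor=1.1,
    minNeighbors=5,
    minSize=(30, 30),
    flags=(cv2.CASCADE_SCALE_IMAGE
           | cv2.CASCADE_DO_CANNY_PRUNING
           | cv2.CASCADE_FIND_BIGGEST_OBJECT
           | cv2.CASCADE_DO_ROUGH_SEARCH),
)

# Draw a green rectangle around each face on the colour image
# (on the grayscale image the colour tuple would collapse to a single value)
for (x, y, w, h) in faces:
    cv2.rectangle(image, (x, y), (x + w, y + h), (0, 255, 0), 2)

# Display the image; matplotlib expects RGB while OpenCV stores BGR
plt.imshow(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
plt.axis("off")
plt.show()
```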

Here I first initialize a cascade classifier object with a pre-trained database of Haar-like facial features (haarcascade_frontalface_alt2.xml) using OpenCV's CascadeClassifier class. The cascade classifier can then be used to detect faces in an example image using its detectMultiScale method. Among other inputs, the method takes the arguments scaleFactor, minNeighbors and minSize.

The argument scaleFactor determines how much the search scale changes from one detection pass to the next (see video above): in each pass the image is shrunk by this factor, which is equivalent to enlarging the detection window relative to the image. A factor of 1.1 corresponds to a change of 10% per pass. Hence, increasing the scale factor makes detection faster, as fewer detection passes are needed. However, detection also becomes less reliable, because a face whose size falls between two scales may be missed.
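The trade-off can be quantified with a rough count of detection passes. This is a simplified model under stated assumptions: the window starts at minSize and grows by scaleFactor until it no longer fits into the image, ignoring the cascade's native window size and maxSize, so OpenCV's actual loop differs in detail. The 640 x 480 resolution is a hypothetical camera resolution and count_scales is a hypothetical helper.

```python
def count_scales(image_size, min_size, scale_factor):
    """Simplified model: number of passes while the growing window still fits the image."""
    img_w, img_h = image_size
    win_w, win_h = min_size
    passes = 0
    factor = 1.0
    while win_w * factor <= img_w and win_h * factor <= img_h:
        passes += 1
        factor *= scale_factor  # window grows (equivalently, the image shrinks) per pass
    return passes


for s in (1.05, 1.1, 1.3):
    print(s, count_scales((640, 480), (30, 30), s))
```

In this model, going from 1.1 to 1.3 cuts the number of passes from 30 to 11, which is where the speed-up comes from; the gaps between neighbouring scales grow accordingly.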

The argument minNeighbors determines how many neighboring candidate detections a candidate rectangle needs in order to be kept as a face. The classifier usually fires several times in slightly shifted and scaled windows around a real face, whereas false detections tend to be isolated. Decreasing the value increases the number of false positive detections; increasing it might lead to faces in the image being missed. The argument seems to have no noticeable influence on the speed of the algorithm.
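The grouping step can be sketched as follows. This is a simplified re-implementation of the idea, not OpenCV's code: raw detections whose edges all lie within a size-dependent tolerance (the value eps = 0.2 is an assumption) are clustered, clusters with more than min_neighbors members are averaged into one face, and the rest are discarded. OpenCV performs further filtering that this sketch omits. The helper names similar and group_detections are hypothetical.

```python
from typing import Dict, List, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, w, h)


def similar(a: Rect, b: Rect, eps: float = 0.2) -> bool:
    """True if all four edges of a and b lie within a size-dependent tolerance."""
    delta = eps * (min(a[2], b[2]) + min(a[3], b[3])) * 0.5
    return (abs(a[0] - b[0]) <= delta
            and abs(a[1] - b[1]) <= delta
            and abs(a[0] + a[2] - b[0] - b[2]) <= delta
            and abs(a[1] + a[3] - b[1] - b[3]) <= delta)


def group_detections(rects: List[Rect], min_neighbors: int, eps: float = 0.2) -> List[Rect]:
    """Merge overlapping raw detections; keep clusters with MORE than min_neighbors members."""
    if min_neighbors <= 0:
        return list(rects)  # no grouping: every raw detection is reported
    parent = list(range(len(rects)))

    def find(i: int) -> int:
        # Union-find root lookup with path halving
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(rects)):
        for j in range(i + 1, len(rects)):
            if similar(rects[i], rects[j], eps):
                parent[find(i)] = find(j)

    clusters: Dict[int, List[Rect]] = {}
    for i, r in enumerate(rects):
        clusters.setdefault(find(i), []).append(r)

    faces = []
    for members in clusters.values():
        if len(members) > min_neighbors:  # enough neighbours: accept as a face
            n = len(members)
            faces.append(tuple(round(sum(r[k] for r in members) / n) for k in range(4)))
    return faces


# Six overlapping hits around one face plus a pair of overlapping false positives
raw = [(100, 100, 50, 50), (102, 98, 52, 52), (99, 101, 50, 50),
       (101, 100, 49, 49), (98, 99, 51, 51), (100, 102, 50, 50),
       (300, 50, 40, 40), (302, 52, 40, 40)]
print(group_detections(raw, min_neighbors=1))  # face and false positive survive
print(group_detections(raw, min_neighbors=5))  # only the face survives
```

Since grouping only post-processes the raw hits, this also illustrates why the value barely affects run time.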

The argument minSize determines the minimum size of the detection window in pixels; objects smaller than this are ignored. Increasing the minimum detection window makes detection faster, but smaller faces will then be missed. In the scope of this project, however, a relatively big detection window can be used, as the user is sitting directly in front of the camera.
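A reasonable minSize can be estimated from the setup with the pinhole camera model: a face of real width W at distance Z appears roughly f * W / Z pixels wide, where f is the focal length in pixels, so the farthest expected user distance gives the smallest face. All numbers below (600 px focal length, a face width of about 160 mm, a maximum distance of 1.6 m) are hypothetical assumptions, as is the helper min_face_size_px.

```python
def min_face_size_px(focal_px, face_width_mm, max_distance_mm):
    """Pinhole estimate of the smallest apparent face size (pixels) at the farthest distance."""
    # apparent width = focal length (px) * real width / distance, rounded down
    side = int(focal_px * face_width_mm // max_distance_mm)
    return (side, side)


print(min_face_size_px(600, 160, 1600))  # user at most 1.6 m away
print(min_face_size_px(600, 160, 800))   # user at most 0.8 m away
```

In practice one would pass a somewhat smaller value than the estimate as minSize, to leave a margin for narrower faces and head rotation.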

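Further, several flags are passed to the classifier. These are: a) CV_HAAR_SCALE_IMAGE, b) CV_HAAR_DO_CANNY_PRUNING, c) CV_HAAR_FIND_BIGGEST_OBJECT and d) CV_HAAR_DO_ROUGH_SEARCH. These are the OpenCV 2.x names; from OpenCV 3 on, the same flags are available as cv2.CASCADE_SCALE_IMAGE, cv2.CASCADE_DO_CANNY_PRUNING, cv2.CASCADE_FIND_BIGGEST_OBJECT and cv2.CASCADE_DO_ROUGH_SEARCH. According to the OpenCV documentation, the flags are only used with cascade files in the old format.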

The flag CV_HAAR_SCALE_IMAGE tells the classifier to scale down the image for each detection pass instead of scaling up the coordinates of the Haar-like features in the cascade.

The flag CV_HAAR_DO_CANNY_PRUNING makes the classifier run a Canny edge detector and skip image regions that contain too few or too many edges to contain a face. This can speed up detection and may also suppress some false positives; how much it helps depends on the background.

The flag CV_HAAR_FIND_BIGGEST_OBJECT makes the classifier return only the biggest object detected, whereas the flag CV_HAAR_DO_ROUGH_SEARCH makes it stop looking for smaller candidates as soon as a face has been found (it is meant to be used together with CV_HAAR_FIND_BIGGEST_OBJECT). Setting both flags drastically improves speed when only one face needs to be detected.
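Because, according to the OpenCV documentation, these flags have no effect with new-format cascade files, it can be useful to select the biggest face on the Python side as well. The hypothetical helper below keeps the detection with the largest area. It only reproduces the output of CV_HAAR_FIND_BIGGEST_OBJECT, not its speed-up.

```python
from typing import Optional, Sequence, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, w, h)


def biggest_face(faces: Sequence[Rect]) -> Optional[Rect]:
    """Return the detection with the largest area, or None if nothing was detected."""
    # len() instead of truthiness, because detectMultiScale may return a NumPy array
    if len(faces) == 0:
        return None
    x, y, w, h = max(faces, key=lambda r: r[2] * r[3])
    return (int(x), int(y), int(w), int(h))


print(biggest_face([(0, 0, 10, 10), (5, 5, 30, 20), (1, 1, 20, 20)]))
```

The result can be fed directly into the rest of the pipeline, which then always deals with at most one face.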

When the classifier has detected faces, it returns an array with one entry per face, containing the pixel position (x, y) of the top-left corner and the width and height (w, h) of a bounding box surrounding the face; if no face is found, the result is empty. These parameters can then (hopefully!) be used to determine the position of the face relative to the camera in order to control the servo motor angles.
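One way to turn a bounding box into a direction is the pinhole model on the undistorted image: the offset d of the box centre from the image centre corresponds to an angle atan(d / f), with the focal length in pixels f = (size / 2) / tan(fov / 2). The field of view values below (60° x 45°) and the helper face_angles are hypothetical assumptions; how these angles map to actual servo commands depends on the fan's mechanics and is not shown.

```python
import math


def face_angles(face, image_size, hfov_deg, vfov_deg):
    """Horizontal and vertical angle (degrees) of the face centre w.r.t. the optical axis."""
    x, y, w, h = face
    img_w, img_h = image_size
    dx = (x + w / 2) - img_w / 2  # positive: face right of the image centre
    dy = (y + h / 2) - img_h / 2  # positive: face below the centre (image y points down)
    fx = (img_w / 2) / math.tan(math.radians(hfov_deg) / 2)  # focal length in pixels
    fy = (img_h / 2) / math.tan(math.radians(vfov_deg) / 2)
    return math.degrees(math.atan(dx / fx)), math.degrees(math.atan(dy / fy))


print(face_angles((300, 220, 40, 40), (640, 480), 60.0, 45.0))  # centred face
print(face_angles((500, 100, 80, 80), (640, 480), 60.0, 45.0))  # face up and to the right
```

Using atan rather than a linear pixel-to-angle mapping keeps the result accurate towards the image borders.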

The FaceDetection class

In order to detect faces in the unwarped camera images, I wrote a Python class which implements face detection using Haar-like features as described above. The class is implemented as a Python multiprocessing task and takes the unwarped camera images as input. As output, it returns the x, y coordinates and the size of the face's bounding box. Unfortunately, the x, y coordinates obtained from OpenCV's cascade classifier jitter over time. This would result in jittery movements of the fan's lamellae if the servo angles were computed directly from the coordinates of the face in the image. Hence, I implemented a temporal first-order low-pass filter which removes high-frequency oscillations from the face coordinates over time. The following video shows a short test of the face detection module:

hanno
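A temporal first-order low-pass filter of the kind described above can be sketched as an exponential moving average, y[n] = y[n-1] + alpha * (x[n] - y[n-1]), applied to each coordinate of the bounding box. This is a minimal sketch, not the code of the FaceDetection class: the names LowPassFilter and alpha_from_cutoff, the 30 fps frame rate and the 2 Hz cut-off are hypothetical. alpha_from_cutoff uses the standard discretisation of an RC low-pass, alpha = dt / (RC + dt) with RC = 1 / (2 * pi * f_c).

```python
import math
from typing import Optional, Sequence, Tuple


def alpha_from_cutoff(cutoff_hz: float, dt: float) -> float:
    """Smoothing factor of a discretised RC low-pass with cut-off cutoff_hz, sample period dt."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    return dt / (rc + dt)


class LowPassFilter:
    """Temporal first-order low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""

    def __init__(self, alpha: float) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state: Optional[Tuple[float, ...]] = None

    def update(self, sample: Sequence[float]) -> Tuple[float, ...]:
        """Feed one measurement, e.g. (x, y, w, h), and return the smoothed value."""
        if self.state is None:
            self.state = tuple(float(v) for v in sample)  # start at the first measurement
        else:
            self.state = tuple(s + self.alpha * (v - s)
                               for s, v in zip(self.state, sample))
        return self.state


# Hypothetical numbers: 30 fps camera, 2 Hz cut-off
lp = LowPassFilter(alpha_from_cutoff(2.0, 1 / 30))
for box in [(100, 80, 60, 60), (106, 78, 61, 59), (98, 83, 60, 61)]:
    print(lp.update(box))
```

A smaller alpha (lower cut-off) smooths more but makes the fan follow the user with more lag, so the value is a trade-off between steadiness and responsiveness.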


Discussions


Hi,

I trained my own Haar cascade classifier, and I want to show the inner workings of the classifier as shown in the video by Adam Harvey. Please help me check (visualize) how it is working on my image. If you have any commands, code or links to go through, please share them with me. Thanks in advance.
