-

Integron as Reactron subcomplex

09/01/2014 at 20:39

Whenever I have a small number of Reactrons that are meant to work together as essentially a single unit, I call the arrangement a subcomplex. It is a complex, in that it consists of more than one internal Reactron. But it is "sub" in the sense that these internal units exist below a unified Reactron interface for the complex, so from the outside, it may appear as a single unit.

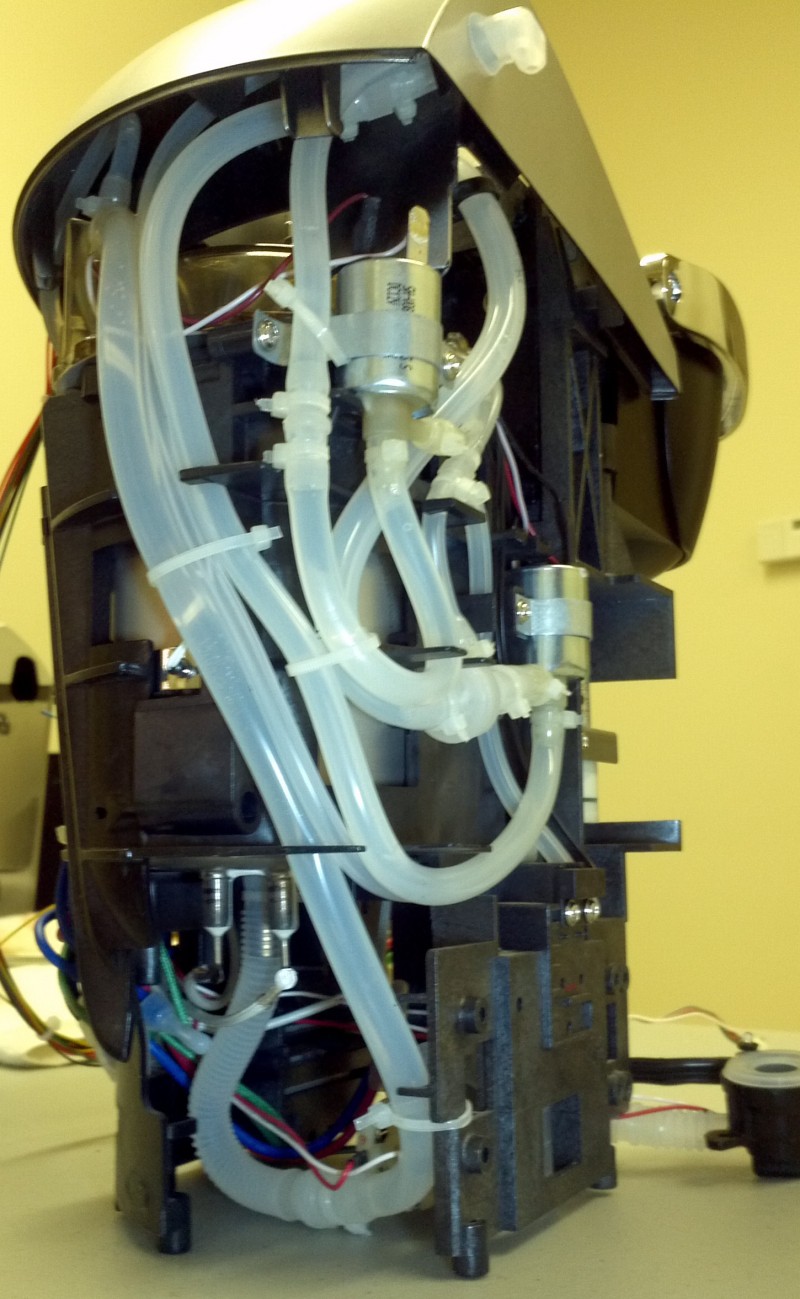

As an example, consider the Reactron coffeemaker, water pump, and coffee-contextualized simple button: those are actually three separate Reactrons. But because they are a special case, where the button has a one-to-one relationship with the water pump, and the pump has a one-to-one relationship with the coffeemaker reservoir, they could have been structured as a single, three-unit subcomplex with one network interface instead of three. I didn't do it this way because these units were all conceived and added at completely different points in time. Additionally, I like keeping the button and pump abstracted, because if I ever need a general purpose button elsewhere, or a pump, these designs can be replicated easily without de-coupling a coffeemaker. That is one of the basic tenets of Reactron Overdrive - keep units simple and abstracted, and create desired complexity with increased numbers.
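To make the subcomplex idea concrete, here is a minimal Python sketch of several internal units sitting behind one outward-facing interface. It is not the actual Reactron code, which these logs do not show; the class names `Unit` and `Subcomplex`, the `handle` method, and the message strings are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Unit:
    """Hypothetical stand-in for one internal Reactron."""
    name: str
    handler: Callable[[str], str]


@dataclass
class Subcomplex:
    """Several internal units presented to the network as one unit."""
    name: str
    units: Dict[str, Unit] = field(default_factory=dict)

    def add(self, unit: Unit) -> None:
        self.units[unit.name] = unit

    def handle(self, target: str, message: str) -> str:
        # Outside callers only ever talk to the subcomplex; routing to
        # the internal unit happens here, behind the single interface.
        if target not in self.units:
            raise KeyError(f"{self.name} has no internal unit {target!r}")
        return self.units[target].handler(message)


# Illustrative wiring only: a button whose press asks the pump to start.
coffee = Subcomplex("coffee")
coffee.add(Unit("button", lambda msg: "pump:start" if msg == "press" else "ignored"))
coffee.add(Unit("pump", lambda msg: "filling" if msg == "start" else "idle"))
```

Keeping `Unit` separate from `Subcomplex` mirrors the point above: the button and pump stay reusable on their own, and the grouping is just a thin wrapper.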

In developing the speech-interacting Integron, I have done a lot of testing and analysis, and suspect that the base unit is perhaps too complex. It may be truer to the principles to abstract the speech processing from the human interaction, where the Linux module is a sub-Reactron module in its own right, and the sight, gesture, and audio components comprise a separate sub-Reactron.

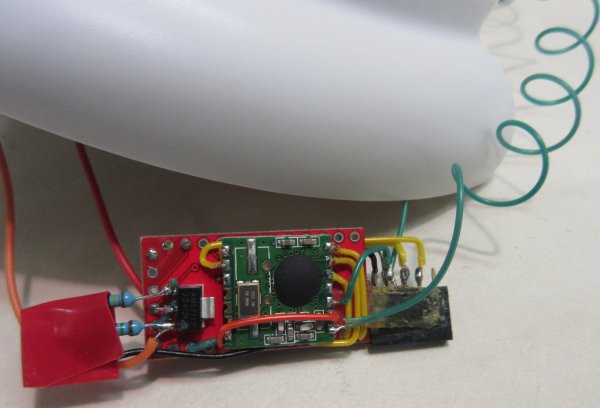

I had been working on an "Integron relay" where a subset of the human interaction components were present, but offloaded the heavy processing to a separate unit. My thought was to create a device that could act as a (door) threshold device, like a doorbell intercom, but fully integrated with the whole network. If a doorbell, this would be externally mounted, and therefore subject to weather, damage, potential theft, etc. So it would be beneficial to have it be cheap and replaceable, with nothing critical in it at all. It would just be a dumb terminal, effectively.

After a lot of development on the Integron unit, I am thinking this should actually become the standard model. All Integrons should be a subcomplex, with an Integron relay of whatever form (doorbell, automobile, desktop, wall-mounted, ceiling-mounted, wrist-mounted) presented to the human, and all the data processing for speech and most Reactron network functions located on a separate unit. The two sub-units could be physically located together, but need not be. This will allow much higher performance computers to be used for speech processing, and will also improve machine utilization, since we generally do not need as many speech engines as we do interface points. The separation will allow a few-to-many relationship of engines to human interfaces.
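The few-to-many relationship can be pictured as a simple assignment of relays to engines. The sketch below uses plain round-robin, which is only an assumption for illustration; the function name `assign_relays` and the unit names are hypothetical, and a real allocation might well weigh load or locality instead.

```python
from typing import Dict, List


def assign_relays(relays: List[str], engines: List[str]) -> Dict[str, str]:
    """Map each relay to a speech engine, cycling through the engines."""
    if not engines:
        raise ValueError("at least one speech engine is required")
    return {relay: engines[i % len(engines)] for i, relay in enumerate(relays)}


# Three interface points sharing two engines (names are made up).
mapping = assign_relays(["doorbell", "desktop", "wall"], ["engine-a", "engine-b"])
```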

It also allows a completely different handling of the audio, escaping ALSA and allowing Linux to just handle received waveform data without trying to play it or capture it. The real question is, will the transport of data to and from the relay units be faster than the capture, processing, and playout all on a local Linux SBC? I don't know yet, but I am going to test it.
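Before measuring, a back-of-envelope estimate of the raw transport cost may help frame the test. The sketch below computes the data rate of uncompressed PCM audio and how long a clip would take to move over a link. The audio format (16 kHz, 16-bit, mono) and the 1 Mbit/s link figure are assumptions picked for illustration, not values from this project, and the estimate ignores protocol overhead and latency.

```python
def pcm_bits_per_second(sample_rate_hz: int, bits_per_sample: int,
                        channels: int = 1) -> int:
    """Raw (uncompressed) PCM data rate in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


def transfer_seconds(audio_seconds: float, sample_rate_hz: int,
                     bits_per_sample: int, link_bits_per_second: float,
                     channels: int = 1) -> float:
    """Time to move a clip over a link, ignoring overhead and latency."""
    total_bits = audio_seconds * pcm_bits_per_second(
        sample_rate_hz, bits_per_sample, channels)
    return total_bits / link_bits_per_second


# Assumed format: 16 kHz, 16-bit, mono. Assumed link: 1 Mbit/s.
rate = pcm_bits_per_second(16_000, 16)
clip_time = transfer_seconds(2.0, 16_000, 16, 1_000_000)
```

Under those assumptions the stream needs 256 kbit/s, so a two-second utterance moves in about half a second. Whether that beats local capture and playout is still something only the real test can answer.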

In the case of the Automobile Integron, I think the hardware will be pretty much the same, but wired and coded differently. I will still use a BBB for the speech processor, but now the microcontroller will handle the audio capture and playout instead of ALSA. That subcomplex will be entirely local to the car, as GPRS would not be efficient enough data transport, performance-wise, and further, loss of signal would end up disabling the interface. Also, BBB consumes much less power than a more powerful laptop, which is important as the unit needs to be aware and respectful of remaining battery power when the car is not running. So that seems like it may be the right set of compromises there.

As this has gotten more complex, I will introduce a new project for the Integron itself, and keep my updates here about the Reactron architecture and network, as well as overall status and introductory remarks for new Reactron projects as they come about. I have more in the pipeline, coming up later on.

-

First cut of desktop morphology Integron

08/20/2014 at 04:02

A lot of the Integron unit is working in component parts. It remains for me to coordinate the various internal subsystems. Here is an image of the first cut of the desktop morphology.

![]()

In this image the screen is present but not active, and the little ripple in its surface is just the protective plastic which I have not yet removed. A NeoPixel is activated blue, under an internal diffuser, in front of a sound baffle and support structure joining base disk to top disk. The fabric sleeve is an acoustically transparent speaker grille fabric. There is some left-right asymmetry due to uneven tension, a result of my assembling and disassembling this prototype a number of times.

Still working on the mechanical fit and angle of the PIR sensors in the support baffle structure, and I have yet to test the transparency of the acoustic fabric to the ultrasonic sensor. The infrared passed just fine, so worst case if the ultrasonic does not work I will put a central PIR in a tube to narrow the angle, and use that to indicate presence of someone standing right in front of the unit. But I'd rather use ultrasonic ranging since then I get an actual distance.
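The reason ultrasonic ranging gives an actual distance is that the sensor reports the round-trip time of the echo. With an HC-SR04 style sensor the echo pulse width is that round-trip time, so the conversion is just time multiplied by the speed of sound, divided by two. A minimal sketch, assuming dry air at about 20 °C (343 m/s); the function name is made up for illustration:

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at roughly 20 degrees C


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert an echo pulse width in microseconds to distance in cm."""
    round_trip_m = (echo_us / 1_000_000) * SPEED_OF_SOUND_M_PER_S
    # The pulse covers the path out and back, so halve it.
    return round_trip_m / 2 * 100


distance = echo_to_distance_cm(1000)  # a 1 ms echo, about 17 cm away
```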

The unit looks great in a darker setting.

![]()

It also is effective at giving a colored light signal from a distance in normal light, though the camera does not report well what the human eye sees, which is much more even than this image shows.

![]()

The unit in this image does not contain the BeagleBone Black and audio components. I have those assembled on a breadboard separately for testing, but expect to be assembling it all together shortly. (It does fit - using a proto cape on the BBB.)

![]()

The speech synthesis and speech recognition are working. The USB sound card is working well, but I am still working on the right microphone and audio amplifier on the output. Currently I have a capsule electret mic sourced from Adafruit, and it works quite well close up. But I have ordered higher sensitivity ones, because I want to be able to speak to it from a distance, and it's just not there yet. I have also ordered some adjustable pre-amplified mics, which may be OK if I attenuate the signal all the way down so that the peak-to-peak does not overdrive (!) the mic input. The sound card is apparently able to withstand abuse - I did try a sustained, full 2 volts peak-to-peak signal to see if it would fry (the sound cards are only a few dollars each, that test was worth it to find out the capability.) Terrible sound, of course, super distorted - but it didn't fry the board. We'll see if we have to go there at all. The internal baffle of the Integron unit is designed to be a sound cone. On the output side of things, I am using an Adafruit audio amp, but while it is quite excellent at what it does, it may not be the best choice for this application. I have some less expensive PAM chips on order, and some different ones I have used before in house, I will be experimenting with them soon. I also changed the speaker to one that was less tinny. The speech output is mono, so I am just using one speaker of a pair. This unit is really a point source so in some ways it makes sense to combine channels for any potential stereo signal sound files and just use mono. That makes it more compact.
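Combining the channels of a stereo sound file into mono can be done by averaging each left/right sample pair. The sketch below assumes interleaved signed 16-bit PCM samples (left, right, left, right, ...), which is a common layout but an assumption here; the function name is hypothetical.

```python
from array import array


def stereo_to_mono(interleaved: array) -> array:
    """Average each left/right pair of signed 16-bit samples."""
    if len(interleaved) % 2:
        raise ValueError("stereo data must contain an even number of samples")
    mono = array("h")
    for i in range(0, len(interleaved), 2):
        # The average of two int16 values always fits in an int16.
        mono.append((interleaved[i] + interleaved[i + 1]) // 2)
    return mono


mixed = stereo_to_mono(array("h", [100, 200, -100, -300]))
```

Averaging rather than summing keeps the result within range, at the cost of a quieter signal when the two channels differ a lot.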

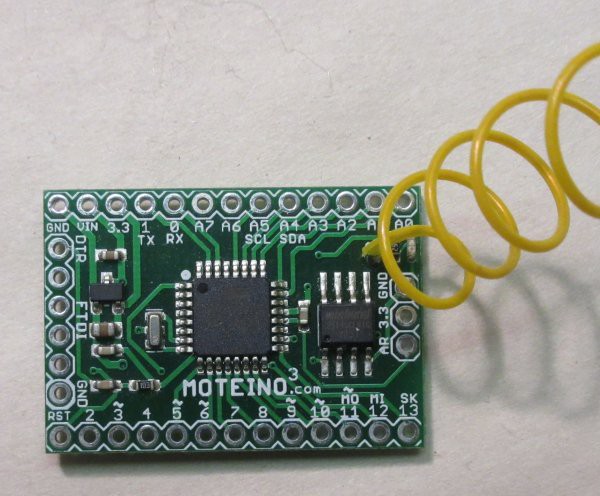

The Moteino is driving the NeoPixel without issue, but I will use more than one in the final unit so that the top, middle, and bottom can be illuminated differently to give three different levels of signal. (That is the idea anyway, we will see if it is practical.) Due to the internal baffling, there may need to be three LEDs per tier to enhance visibility from all sides, so nine total. I am considering making the screen bezel ring out of translucent white acrylic, and adding another NeoPixel to make that a soft, power-on indicator light (overridable of course, if you want it off). So ten RGB LEDs. In that case I will probably switch to this format or even a pixel strip format where I would deconstruct the pixels and place them as needed. It may be the most economical way, and since the mounting is so custom at this point, it might just be the right thing. Again, practical concerns will dominate.
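The ten-LED layout described above (three tiers of three, plus one bezel pixel) can be sketched as an index map. The ordering along the chain, top tier first and bezel last, is an assumption, and the names `tier_pixels` and `build_frame` are hypothetical. This only builds the list of colors; pushing it to real NeoPixels would be done by the microcontroller.

```python
from typing import Dict, List, Tuple

Color = Tuple[int, int, int]

TIERS = ("top", "middle", "bottom")
LEDS_PER_TIER = 3
BEZEL_PIXEL = len(TIERS) * LEDS_PER_TIER  # index 9, last in the chain
OFF: Color = (0, 0, 0)


def tier_pixels(tier: str) -> List[int]:
    """Pixel indices belonging to one tier (assumed chain order)."""
    start = TIERS.index(tier) * LEDS_PER_TIER
    return list(range(start, start + LEDS_PER_TIER))


def build_frame(tier_colors: Dict[str, Color], bezel: Color = OFF) -> List[Color]:
    """Return one color per pixel for all ten LEDs."""
    pixels = [OFF] * (BEZEL_PIXEL + 1)
    for tier, color in tier_colors.items():
        for index in tier_pixels(tier):
            pixels[index] = color
    pixels[BEZEL_PIXEL] = bezel
    return pixels


frame = build_frame({"top": (0, 0, 255)}, bezel=(20, 20, 20))
```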

Separately, Moteino connects to my RF network without issue, as it has proven itself over and over again, using a HopeRF transceiver and a library based on [Felix Rusu]'s RFM69 driver library (after I clean it up it may just be able to be his library unadorned with my complications).

I still have to get the serial comm working between Moteino and BeagleBone Black, but it should be trivial. I just have not had a chance, because the other parts of this were much more challenging, so I have been working on them. The PIRs I tested with Moteino, because I had already written that code for use in an early Integron.

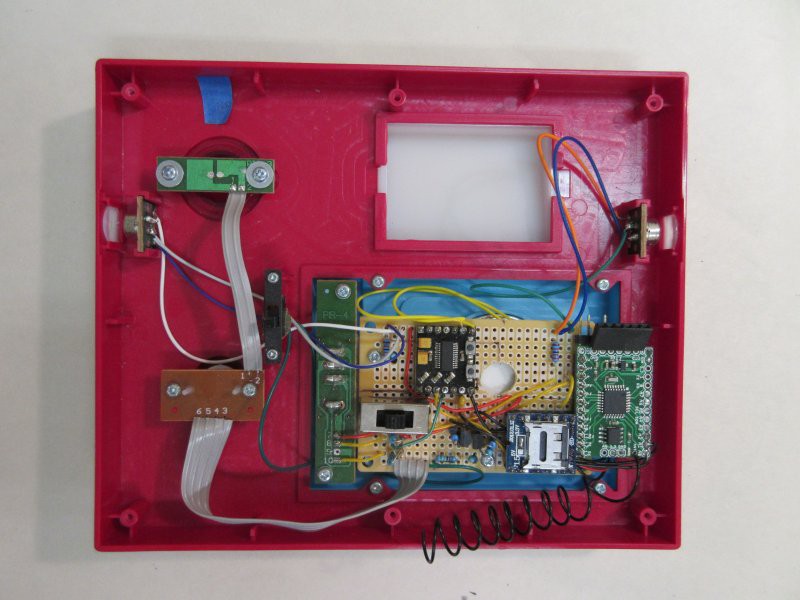

![]()

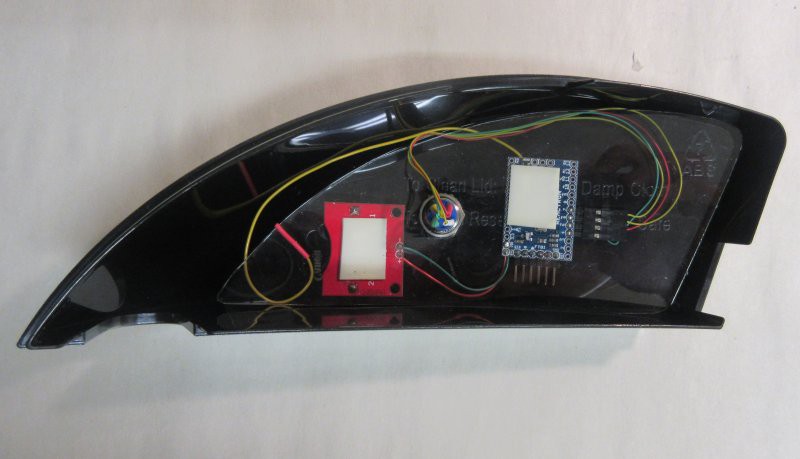

(You can see the PIR sensors on either side, and the Moteino on the bottom right, the green board. The square component in the center with black PCB is a PAM8803 audio amp, which I will test for output in this new model too, but which I believe is not suitable due to implementation details.)

I want to move the PIRs to the ADCs on the BBB, so that the ATMega328P can just concentrate on the RF network and the NeoPixels, and that can be a standardized unit. The PIRs are really tied to these units with the (low-res) gesture recognition and speech recognition and synthesis, so they really ought to be monitored directly by the BBB.

Aesthetically, it is not exactly what I was going for, but functionally it is right on so far. (Well, the speech recognition and synthesis prep could be faster but maybe I will try overclocking the BBB and other tweaks - if that does not work it's just a matter of time before faster hardware is released, and maybe code optimizations on the speech engines.)

I think this will be more visually harmonious if the screen is just removed, and the unit could be a continuous soft dome or other graceful shape. Actually I am hoping that it will integrate so well that there is no reason for the screen. Or, potentially try a small projector, so that the screen could be the wall behind instead of a physically integrated surface. That gives more potential for altering the shape for aesthetics. But yes, it increases cost a lot. Maybe a smaller screen would do, but the idea is that you should be able to see the text from as far away as possible, if you are interfacing that way. It's not meant to be anything but a signal contextualizer, it's not for digesting large amounts of detailed information. And it should be acceptable for people with less-than-perfect eyesight.

Also, if the screen goes away, there is probably no need for any of the gesture detection stuff - that is really just there to "scroll" information on the screen and navigate, without having to touch the device or be too precise with your motions, as precision takes time. The screen is actually a touch screen, but consider how this is if you have arthritis or terrible eyesight, or both ... I just wanted a way to interface easily without the device's limitations being forced on the human, as I think that 80% of the info we need in workflow data is low-resolution (yes/no, up/down, bad/good/better/best). It makes sense to me that motions and retrieval methods should also be low-resolution (and highly error tolerant). Otherwise, it is a frustrating bottleneck standing in between human and the data of the human's interest. In some future form I may integrate a camera and use that for gesture interpretation instead, as well as facial recognition, and ambient light data (with which I could adjust the brightness of the NeoPixels). Maybe I should add a photosensor now though....
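As an illustration of how low-resolution and error-tolerant such gesture input can be, here is a sketch that turns the trigger times of a left and a right PIR sensor into a coarse swipe direction. This is not the project's gesture code, which is not shown in these logs. The function name, the one-second window, and the result labels are all assumptions.

```python
from typing import Optional


def classify_swipe(left_time: Optional[float], right_time: Optional[float],
                   max_gap: float = 1.0) -> str:
    """Classify a swipe from the times (in seconds) each PIR fired.

    Returns "left_to_right", "right_to_left", or "none".
    """
    if left_time is None or right_time is None:
        return "none"  # only one sensor fired, so no direction to infer
    gap = right_time - left_time
    if gap == 0 or abs(gap) > max_gap:
        return "none"  # simultaneous, or too far apart to be one motion
    return "left_to_right" if gap > 0 else "right_to_left"
```

Only the order of the two events matters, so the motion does not need to be precise, which fits the intent described above.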

Well, I'll deal with beauty after functional form is stabilized somewhat. I'd like to create several forms, all equally functional but allowing for different people's sense of style.

I expect other morphology for wall-mount, ceiling-mount, and of course the special case Automobile Integron.

-

Component changes

08/12/2014 at 03:43

I've been holding off on an official component list until I can stabilize the build a bit more, but I wanted to mention a few things that have occurred since the original plan was hatched, and some of the design decisions involved.

Without delving too much into the history, I will just say that at one point I was coding a very simple speech recognizer to run on the ATMega328P, and I was able to create some code that was about 85% effective at recognizing a handful of carefully selected keywords that were pretty distinct in their pronunciation. That was the true positive rate. There was also a high-enough-to-be-annoying false positive rate, which would trigger my “initializer” (more on that at a later time). Anyway, I was getting sucked into the details of speech recognition and optimizing for a tiny processor, and this is really not the area where I can add the most value. So, I moved to R.Pi, to try to implement open-source speech recognition using Pocketsphinx, like many other builds. It meant having to use an SBC w/ Linux in addition to the ATMega328P based board, but for this usage I was not so space-constrained so that was OK, even with the added cost and power usage. Also, moving to Linux enabled speech synthesis as well, using festival. Before, I was playing out pre-defined sound files containing sounds and canned speech responses. But I wanted a more general interface, one that could recognize more than a handful of pre-defined keywords, and trigger more than a handful of pre-defined sounds. Ultimately I moved on to BeagleBone Black as the SBC for performance and I/O, but this is just for these Integron units. Other Reactrons that don’t require all this library support are fine on R.Pi, or just stand-alone ATMega328P, or even stand-alone Android devices, based on the specific application.

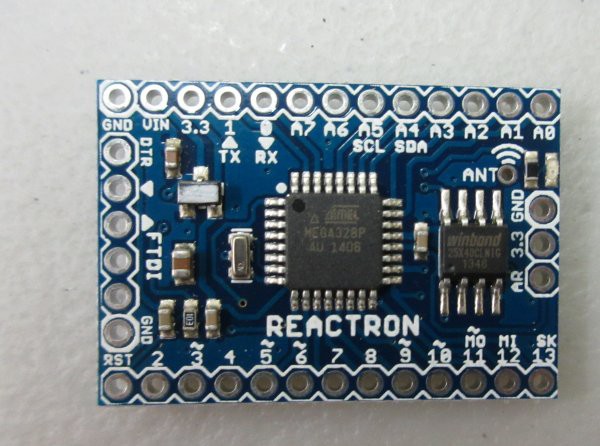

A Reactron is defined by its communications protocol and internal data structure, not by its specific hardware complement. I wanted to mention that while my header image shows a panel of ATMega328P boards (based on the Moteino by Low Power Lab), Reactrons are not Arduino clones, though their hardware complement may contain one, several, or none. I chose the image because to me, it evoked “many small computers working together”, which is what the Reactron network is all about. In my other images, you will see a multiplicity of BBBs and R.Pis and other hardware, as the mix of hardware for a generalized Reactron completely depends on its purpose. For instance, some existing Collector units are purely BBB boards with attached sensors, and the software to support the Reactron interface. Some Recognizers are just BBBs running statistical calculations on inputs from Collectors - without any directly attached auxiliary hardware. I haven't had a chance yet to write much about Recognizers, but that is coming soon.

Moving on to some of the changes, specific to the Integron unit:

One excellent thing that has changed since I started this project is that the Moteino has been upgraded. [Felix] at Low Power Lab has informed me that all new R4 Moteinos are now shipping with the MCP1703 voltage regulator. This is good news for me, because now I am able to just add “Moteino” to the components list, instead of a board "based on the Moteino", where I would have to have a separate BOM and so forth. Now, you can just use a stock R4 Moteino (with HopeRF radio and a 4Mbit flash chip) for any of my projects that require an RF enabled ATMega328P-based solution. At the time I started this project, the Moteino was shipping with the MCP1702 voltage regulator, and that meant that using a then-stock Moteino in my 12+V projects (like the Reactron water pump or the newer Automobile Integron) might have resulted in a puff of smoke and little else.

I would also like to direct your attention to the fact that [Felix] has entered THP with the Moteino Framework, and you should go there and give him a skull. [Felix] is simply amazing and creates an enabling technology for many, many cool projects, including mine, and you should visit his site and forum to check them all out. I'm so happy to have the RF/ATMega portion of my network running on this robust design!

Another big change is that the BeagleBone Black went from Rev B to Rev C, and in doing so changed its onboard eMMC chip from 2GB to a faster 4GB one. This means that it can just run Debian without having to boot it from a larger SD card, as was needed on the Rev B, which was a minor hassle.

Other hardware changes involve the audio components. Mostly this has meant testing different microphones for response, since it appears that sensitivity specifications are merely suggestions on what might be. I do not have final components for this yet; I am still experimenting to find the best one (at a good price).

One of the components is essentially optional, that being a USB hub. The Integron unit uses an inexpensive USB sound card to play sounds and listen for speech. The BBB has a single USB port, and that is sufficient for this usage. However, I realize that not everyone might want to use the wired Ethernet connection, which means that a USB hub would be necessary in order to have ports for a USB WiFi adapter as well as the required USB sound card. So I have been experimenting with what USB hub to use, and it comes down mostly to shape factor, since this all needs to fit within a certain envelope. There isn’t really room to plug a bunch of stuff into the USB port with their relatively enormous connectors, so it comes down to soldering jumpers from the BBB board itself to a protocape, and splitting USB out from there, locally on the proto board.

The BBB I am sourcing from Adafruit, along with the BBB protocape and other components. I have decided to try to standardize on NeoPixels as much as possible, just because of the flexibility. This statement is valid only for Reactron projects that include an Arduino clone, because of the timing constraints with the execution of commands to those modules. Details will be forthcoming as soon as more of this gets locked down and stabilized.

Some of the design considerations are as follows:

In addition to the NeoPixel timing constraints, any of the timing-sensitive requirements will require an Arduino clone, which I will standardize now on the newer R4 Moteino. (From a standardization standpoint, in my opinion, and in this specific case, it is preferable to add a Moteino and maintain one library, than to port things to run on the BBB PRU and maintain a second library. I have many more nodes with just a Moteino and no BBB, than I do with a BBB and no Moteino. And generally, the standalone BBBs tend not to require auxiliary, timing-sensitive components. I do not mind re-evaluating this decision if a special case arises and it makes sense to do so.)

For the Integron unit, in addition to the data processing, audio, and RF comm modules, we have NeoPixels, PIR sensors, an HC-SR04 sensor, and a small screen (still working on the specific model, but either TFT or OLED, likely sourced from Adafruit). As discussed, the NeoPixels will need to run off Moteino due to tight timing requirements, but the PIR sensors are just for human interaction (slow) so can probably run right on the BBB ADCs. The screen can be run via SPI, so that can run on BBB as well, though other implementations with no BBB may use screens on SPI using Arduino compatible libraries. The HC-SR04 sensor is timing-sensitive so will also run from the ATMega328P. Many libraries are provided by Adafruit, and I'm very happy to integrate with their robust designs.

After a lot of research on a totally separate project, I am considering replacing or at least augmenting the gesture sensing with vision, using camera-based sensing, and stereo audio. Ultimately the Integron unit should be made available in several form factors. The one I am describing here is a desktop unit (movable), but there will be others, such as wall-mount, ceiling mount, and the entirely separate Automobile Integron, among others. I’d like to have as much modular overlap as possible, so each thing is not unique, but rather draws from a common design. At minimum, there is a common design philosophy, even if there are differences in execution due to practical concerns.

More details will be posted soon, in the system design document.

-

Automobile Integron project posted

07/24/2014 at 15:26

While this main project describes the human integration device (Integron) for living spaces, it does not address what you do when you are not in a general living space such as home or office. The automobile is another common living space in our private lives that we personally control.

But it is a mobile one, and one where (at least currently) our faculties are tied up with the activity of driving. So there are some considerations there for a mobile human integration device. The main function is still the same, however. The tie-in that leverages the rest of the network of simple nodes to deal with your personal, private data systems is also the same.

Home and office are living spaces, as is the little bubble of the automobile. The personal mobile bubble of a person is another one, that is not bounded by the confines of a vehicle. We do see people walking down the street speaking into their bluetooth headset, or engrossed in their smartphone screen - these are just human integration devices at work on the personal mobile space. There's also Google Glass and smart watches.

Maybe in the future, the ubiquity of small simple computers in public spaces will allow us to technologically move through our personal living spaces, our mobile bubbles, without being encumbered by too much attention-stealing equipment. What's going on in our own dataspace is very important to us, and it has caused us to make a compromise with situational awareness. It is clear that we can think while walking. The problem is time-serializing interfaces, in my opinion. So it is a public safety issue as well! The hands-free driving laws validate that idea.

It would be great if your biometric data could follow you anonymously through nodes, and levels of access to it by the network itself were granted or revoked by you. Additionally, a similarly moving interface point for you to hit your remote data securely, with similar permissions handles, would allow you to have mobile integration through public spaces. If an organizing principle allowed companies and people to allocate a small portion of their gear to support this private interfacing, the interaction with computers and physical machines could really be much more pleasant and have less overhead.

The data may be split across multiple connectivity providers - providing even more security, and also failsafes and redundancy for data transfer.

Well, for now, the smartphone will continue to be the primary personal bubble interface. For now, the Reactron network will be concerned with home, office, and car - all spaces in one's personal control. A smartphone integration app can extend some utility when you are just walking out in the world, the idea being that the phone is in your control as well. The problem there is that at best, your phone only has a few hints that you are in fact you. Maybe an app can enforce additional, better security.

-

The Hackaday Prize Stage 1

07/14/2014 at 05:31

Video is up, and added to the project details. You can tell from the video that there has been a lot of development that I have not documented in the logs... yet. The video documents a lot of the components as they stand now, but I do expect changes. I plan to stabilize and refine things a bit more before getting into the full detail, because the last thing I want is to waste anyone's time with information that will be superseded shortly.

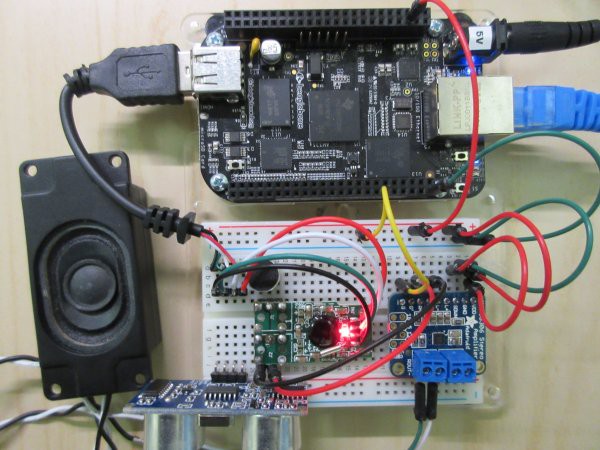

But if there is interest, I will mention the components for the Integron unit here, they are: BeagleBone Black (to run voice recognition and synthesis), some NeoPixel LEDs and a TFT screen (from Adafruit - actually I got the BBBs from Adafruit also), PIR sensors from ebay, and then a handful of other components that may change, and these relate primarily to the sound support. BBB has only one USB port, so I may need to solder in a two-port USB hub (space constraints) to enable more ports. I need one port for a sound card. Still working with the audio levels of various microphones and amplifiers, the final selection may change.

My previous version of the Integron was on R.Pi, so I didn't need to add a sound card. The mic sensitivity and selectiveness is something I wanted to improve, so that development is ongoing. One goal is for these things to be able to really parse speech from a reasonable distance. They do a negotiation when certain sounds are parsed, to see if other nearby units heard the same sound, and use a volume comparison to figure out approximately where in the room the speaker is, and also which Integron unit should handle a response. This allows you to walk around the room and have continuous integration. One open item is to create a handoff procedure - right now this targeting decision is at a single point. Once the decision is made, the entire response comes from one unit. But it would be great, if the response is long, for the sound to follow you around the room, so you aren't chained to one box while you listen. I deal with this now by just turning the volume up, but ideally I want these machines to speak in a low voice (or one that matches the volume with which you issued a command), so as not to disturb what is going on in the room. I do not want a loudspeaker broadcast, I just want a little private conversation in my corner of the room, with my machine ambassador. And later, when I develop better selective targeting, it may be possible for two (or more) users in different parts of the room to have their own different conversations.
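The targeting decision described above, where nearby units compare how loudly they heard the same sound and the loudest one responds, can be sketched in a few lines. This is an illustration only, not the actual negotiation code. The function name, the unit names, and the idea that each unit reports a single comparable volume number are assumptions, and real units would likely need calibration before their levels could be compared fairly.

```python
from typing import Dict, Optional


def choose_responder(reports: Dict[str, float]) -> Optional[str]:
    """Pick the unit that heard the sound loudest.

    `reports` maps a unit name to the volume it measured. Ties go to
    the alphabetically first unit so every node reaches the same answer.
    """
    if not reports:
        return None
    return max(sorted(reports), key=lambda unit: reports[unit])


responder = choose_responder({"desk": 0.2, "wall": 0.7, "ceiling": 0.4})
```

A deterministic tie-break matters if each unit runs the same comparison independently, since otherwise two units could both decide to answer.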

Back to the components. The ubiquitous HC-SR04 ultrasonic sensor I had lying around, can be sourced on ebay for very little. Speakers, also from ebay, just tiny 1 inch cheapies, but they are pretty loud and adequate for synthesized speech.

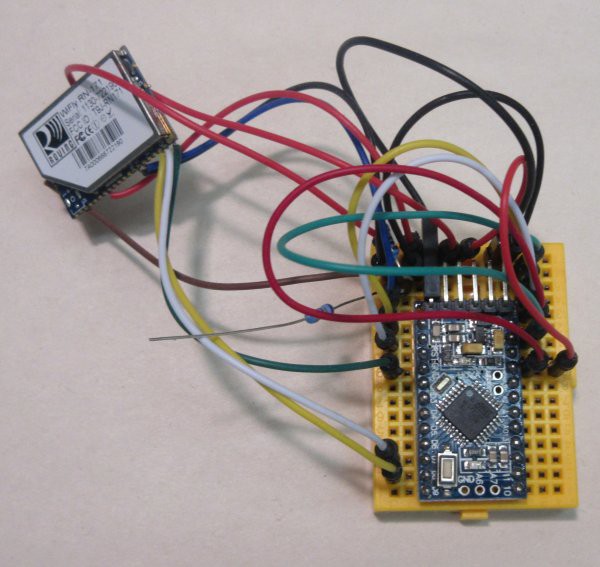

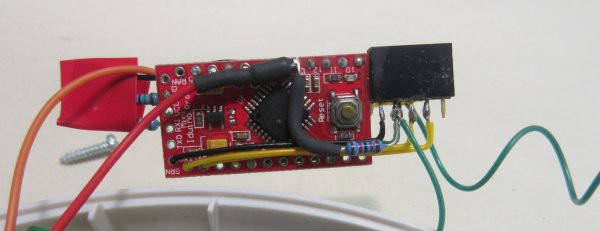

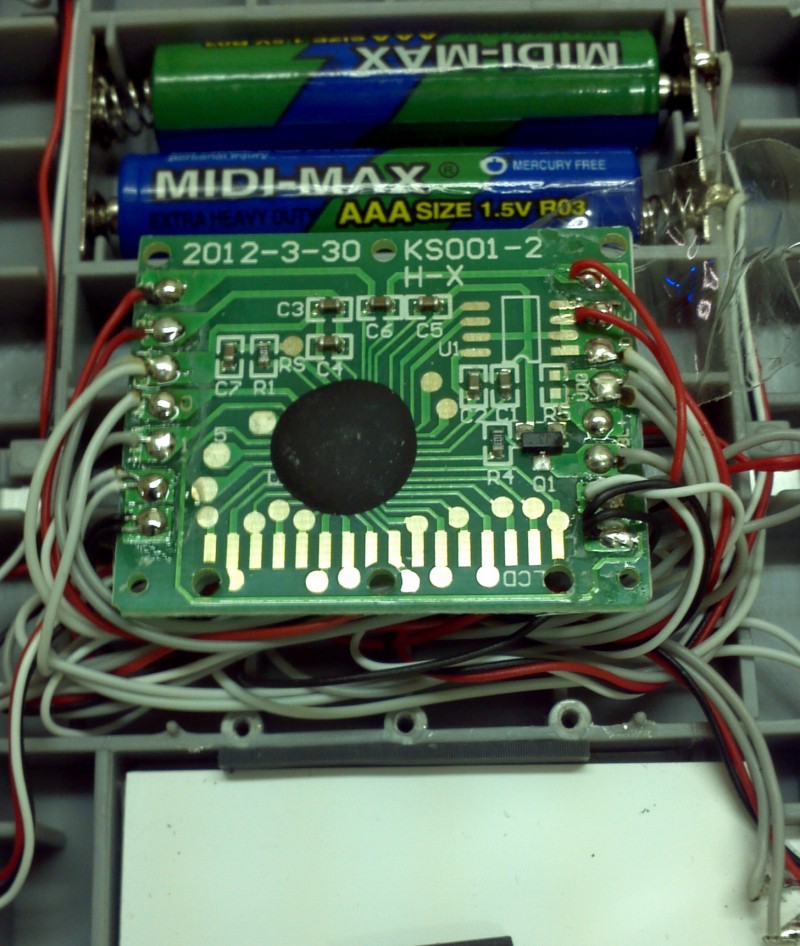

The Arduino clone is based on the open-source Moteino from Low Power Lab. I had been making these previously, in various forms with either Hope RF radios or WiFly RN-171 for wireless TCP/IP.

![]()

![]()

![]()

The Moteino doesn't support WiFly directly but you can of course connect one. However, it is great at supporting RFM12B and RFM69W/HW so if you want an excellent, tiny, 3.3V clone with RF and capacity for flash memory, you should definitely look into these excellent boards.

![Moteino from Low Power Lab]()

I decided to use this pattern and standardizing has really saved me a lot of time, and enabled clean hardware integration. I will upload my Eagle files to Github, but you can get usable files from Low Power Lab - the only thing that is somewhat different is the silkscreen, and my requirement for certain radios and a flash chip. If you don't need that stuff, or need other stuff for your project, Moteino is the way to go, there are lots of options. One of the reasons for my own silkscreen is just for me to identify which ones meet my specific standards - I still have a lot of experimental Moteinos in use and they don't all have memory chips or RFM69, so if I see one I know I cannot assume its hardware complement.

I think that is it for stuff I have bought, except for some speaker grille fabric, used for the outer casing, which I obviously need to be sound-transparent.

The rest I have fabricated. The round parts in the video are dev parts made in MDF, the finals will probably be white acrylic to diffuse LED light. Mechanical support parts for the PIR sensors were not shown in the video, as they are still under development. All those design files will be made available on Github.

![]()

There is a lot of documentation to do!

I should be clear that Integrons as I have them can be made with any Arduino clone and RF setup, if you have some stuff lying around already. This is just the route I went for a lot of different reasons. Also, Reactrons are much more about the interface and the integration than the specific choice of Arduino hardware (or SBC hardware, or RF hardware, etc.) That is one of the points of the design, and it allows old machines to not become obsolete. As long as an older machine can push a few bytes, it can be a Reactron. It doesn't need RF or TCPIP, as long as it can communicate in some way, IR, whatever. (And if it can't, then you slap on one of these inexpensive RF enabled Arduinos, as shown in several of my projects, and you are good to go.) If you keep a machine's tasks very simple, it may live a long time. Even twenty year old computers have more power than the Apollo space program did, so it has been demonstrated that you can do amazing things with relatively small amounts of data and computing power.

-

Another unit is aggregated (it is futile to resist)

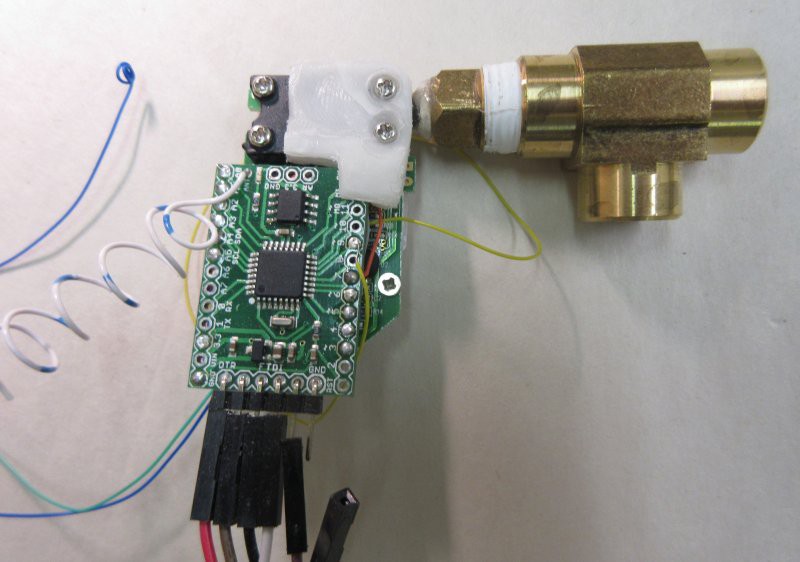

07/14/2014 at 03:39

Similar in spirit to the label maker, I am adding another incidental machine to the network to allow more flexible and complex usage. A simple garden watering valve.

It broke, so instead of replacing it I thought I would use the opportunity to fix and upgrade it, and have it react with my other network nodes such as temperature and humidity sensors, as well as voice control nodes and status indicator nodes. It's a super-simple machine, and that is what I like about it. I won't miss the highly-specific one-of-a-kind interface (read: terrible) it came with, and it will be able to do a lot more things than before, by leveraging complexity from the various simple nodes on the network, each with their own simple and singular purposes.

That's the idea, anyway.

One by one, as these things come up in life, I will find ways to integrate them. With home automation, you can basically expect thermostats and door locks, window sensors and control of lights; all these things are possible too. But I'd much rather have a system that can accept any simple machine, because who knows what you might want or need - or invent. This garden watering timer probably does fall under the scope of home automation, but probably not the label maker. And I'd rather not have all my devices need to come from a single manufacturer or need to support a specific API directly. Most simple things don't have an API, like this timer, and that is just fine with me. To me, having one in a device like this would be just another example of complexity in the wrong spot - don't make the device more complex, driving up the cost and failure rate. I'll add my stuff. What I do wish, however, is that manufacturers would design access to control points to attach external devices. Just expose some solder pads, put them all in one spot, label them. Or better yet, a slot, like an SD card slot, that could take a small board with a microcontroller. That might add one part, but I'd be OK with it if there was a standardization in play. But I'll take solder pads so no additional complexity or cost is incurred. And failing that, I will do what I do - hunt for vias and pads and connectors wherever they are on these boards, and tap them.

I'm not sure which way the future will go on this one.

-

The network grows, one unit at a time

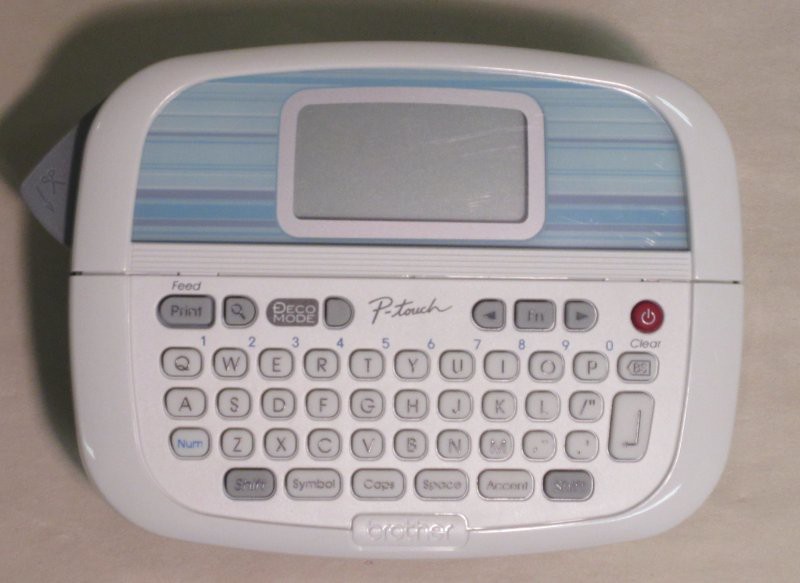

07/04/2014 at 02:39 • 0 comments

This week I had a little problem because I grabbed an unlabeled box, and it was not the box I thought it was.

The problem is sometimes I am too busy to label my boxes, and by that I mean most of the time. All, maybe? I think there must be one box that is labeled...

Solution? Add a label maker to the Reactron network. Then I will be able to use voice recognition already handled by the network to simply print out a label on demand, rather than use a serializing interface to slowly and painfully create and print labels the normal way. I found a cheap label maker for $20. We'll see if I break it or manage to add it to the network; there is some hacking to do.

This is a really simple hack, but that really is the point of Reactron Overdrive: one simple added node to solve a specific problem, but then the node is there and available for other future as-yet-unknown uses. The point is to augment life's workflows, improve them, and ultimately have less undesired or inefficient machine interface time. (I'm all for desirable machine interface time; making labels is not that.)

-

Good to the last #endif

06/27/2014 at 03:06 • 0 comments

This week has been about coffee.

I posted three related and inter-related projects:

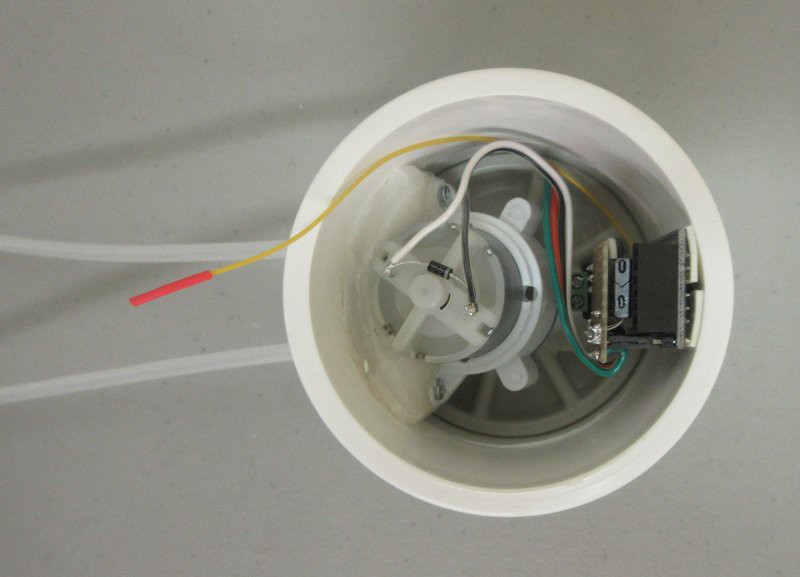

automatic water pump to fill the coffee maker

and a switch module which, despite being a separate machine entirely, lives inside the top cover of the coffee maker's water tank, providing a manual water feed button for the pump.

Links have been added to the side.

I guess the Quadralope, previously discussed, also counts with respect to coffee. Yay coffee!

-

Do robots need their own furniture?

06/25/2014 at 16:52 • 0 comments

I think the answer is yes.

The furniture we have in our homes consists of anti-gravity devices such as tables and chairs, or organizational devices such as cabinets and desks.

If you have a machine culture in your house, and the robotic units are doing things among themselves, which include moving stuff around, then at minimum they will need transfer stations. These can be as simple as little, out-of-the-way tables, or something more complex.

I envision mobile robots needing a recharging station that they can get to and manage on their own.

There may be the need for organized temporary storage (material buffering).

It may well be that all of these robotic needs can come together in one piece of furniture - a small-footprint, height-adjusting table to facilitate material transfer to different conveyances, with cabinetry below, and a charging port (or several) for robots to charge up along their travels, if necessary. There may also be a case for a precision location beacon, to augment other tracking methods. If this robot furniture is designed to be stationary, it can be a positional reference for triangulation for any moving objects, including organic ones.

At what point is this piece of furniture just another robot, albeit a stationary one? I don't know. Perhaps it is more properly called an appliance, like an oven or dishwasher, which are machines that don't change location. But the table-like and organizational aspects seem more like furniture - just not for humans. And since we would be seeing these items, but not actively interacting with them the way we do with an oven or dishwasher, they should be good looking and innocuous, more like furniture than an appliance. Perhaps a new designation is appropriate.

This piece of furniture might be a good place to put the ubiquitous Integron unit, as well.

-

Can robots be furniture? Plus some more stuff...

06/19/2014 at 20:03 • 0 comments

A not-fully-developed Reactron, the "Quadralope", is basically just a table. But with movable legs, and a Reactron wireless controller, the idea is that it will bring you your stuff, or take stuff away.

It isn't concerned with what the payload is, only where it is going. The Recognizers have a statistical model of where you are, and a discrete model of where other Reactron units are, so they can dispatch one of these units with a route. This is just a physical conveyance. I broke this unit out into a separate project to focus on its details.

Why legs? Wouldn't wheels suffice? Well, the idea of a Reactron machine culture integrating with the human culture to augment human living means that some things the machines must learn to do, so that we do not have to alter our methods to fit them. We have carpets, hardwood floors, thresholds, furniture, objects on the floor, etc., all for very good, human reasons. I for one do not want a shiny leveled pathway everywhere in the house, where wheeled service bots can roll (and to which they may be restricted). A walker can handle these things very well. The total contact patch of this robot is perhaps five square inches, and it would be programmed to scuttle out of the way, when not specifically delivering something. "Out of the way" may mean something like "exist in plain sight, but not in a human walkway". If so, it would help if the robot was in fact furniture - looked good, matched the decor, etc. (Yes, this prototype needs work to look less robotish, but you get the idea.)

And bring me coffee!

Four other Reactron projects were also posted:

- Reactron collector: Kitchen scale (weighs stuff and transmits that data)

- Reactron collector: Air pressure transducer (measures pressure and transmits that data)

- Reactron material processor: Heat exchanger (cools off water and integrates to the network)

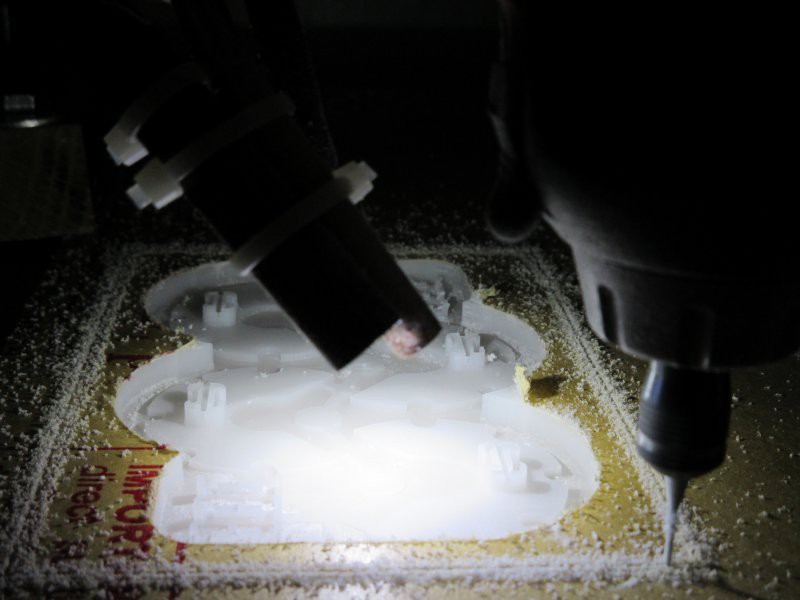

- Reactron material processor: Wireless CNC machine (milling machine that integrates to the network)

These projects were also broken out to focus on the individual details.

But the main point here is that these are all Reactron components that integrate with each other, and the more simple discrete units there are, the more the number of possible combinations, workflows, and outcomes increases - exponentially.
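As a rough illustration of that growth, here is a minimal sketch that counts how many distinct groups of two or more units can be formed from a given number of units. It assumes a "combination" simply means any such group, which is a simplification made for this example and not a definition used by the Reactron network; the function name `possible_groupings` is likewise hypothetical.

```python
from math import comb


def possible_groupings(n_units: int) -> int:
    """Count the groups of two or more units that can be chosen from n_units.

    Assumption for illustration only: a "combination" is any subset of
    at least two units. This equals 2**n_units - n_units - 1.
    """
    return sum(comb(n_units, k) for k in range(2, n_units + 1))


if __name__ == "__main__":
    # Each added unit roughly doubles the number of possible groupings.
    for n in (3, 5, 10, 20):
        print(f"{n} units -> {possible_groupings(n)} possible groupings")
```

Under that assumption, three units give 4 groupings, ten give 1,013, and twenty give over a million, before even counting the different orders in which units in a group might act on each other.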

Anything can be a "material processor", like a coffee maker, for instance. It does not have the human interface built in, nor the means for conveyance to the human, or from the raw material supply (water and ground coffee), nor the means to transport the material (the coffee) to and from the conveyances. A single machine that did all this would be horribly complex, require lots of tuning and maintenance, and break easily. But a small critical mass of certain types of machines can result in complex outcomes that allow you to just seamlessly live your life while machines asynchronously manage the things that you allow them to manage.

We will trust machines much more, to do complex tasks, when they are simpler and more numerous, and coordinated. They will break less, and have backup when individual units fail.

Reactron Overdrive

A small but critical number of minimally complex machines interact with each other, providing machine augmentation of human activity.

Kenji Larsen