-

How the connected world empowered my daughter

11/22/2019 at 14:56 • 0 comments

Lorelei and Bodo Hoenen present their robotic exoskeleton at HLTH2019.

-

Job Opportunity - Help us develop open-source code for this project

01/29/2018 at 15:41 • 0 comments

We are looking for help developing open-source code for this project.

We aim to develop an open-source platform that detects muscle activity signals, recorded non-invasively on the skin's surface, and uses them for exoskeleton control.

The muscle signals will be recorded using the Myo Armband, and signal processing and exoskeleton control will take place on a Raspberry Pi. The goal is to detect 2 to 5 patterns from the Myo signals using machine learning / pattern recognition methods.

Many open-source libraries are available and can be used in the project subject to minor modifications. Guidance and support will be provided.

Here is the wiki we have for this project: https://sites.google.com/site/ourkidscandoanything/

We already have a working model for this, but it uses proprietary software. We now need to develop this software as open source so that we can freely share it.

Required skills

- Python programming

- Basic Bluetooth communication principles

- Basic signal processing (i.e. filtering, sliding window processing)

- Basic machine learning / pattern recognition (e.g. classification using the sklearn library)

- Raspberry Pi (& Linux operating system)

- Version control (e.g. Git/GitHub)

Breakdown of work

- Set up Myo communication (using an off-the-shelf library)

- EMG signal processing (using the library developed by Agamemnon: https://github.com/agamemnonc/pyEMG )

- Develop the training module (using code by Agamemnon: https://github.com/agamemnonc/pyEMG )

- Develop the real-time control module (using code by Agamemnon: https://github.com/agamemnonc/pyEMG )

- Testing and debugging

- Final application development
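To give a feel for the signal processing and training steps in the breakdown above, here is a minimal sketch using only the Python standard library. Everything in it is an assumption for illustration: the function names, the RMS feature, the window sizes, and the synthetic 8-channel data are all made up, and the small `NearestCentroid` class is only a stand-in for whatever sklearn classifier the project ends up using. It does not use pyEMG or talk to a real Myo Armband.

```python
import math
import random
from collections import defaultdict


def sliding_windows(samples, size, step):
    """Yield overlapping windows of `size` samples, advancing by `step`."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]


def rms_features(window):
    """Return one root-mean-square amplitude per channel for a window."""
    channels = zip(*window)  # transpose: samples x channels -> channels x samples
    return [math.sqrt(sum(v * v for v in ch) / len(window)) for ch in channels]


class NearestCentroid:
    """Hypothetical stand-in for an sklearn-style classifier (fit / predict)."""

    def fit(self, X, y):
        grouped = defaultdict(list)
        for features, label in zip(X, y):
            grouped[label].append(features)
        # The centroid of a class is the per-feature mean of its training rows.
        self.centroids_ = {
            label: [sum(col) / len(rows) for col in zip(*rows)]
            for label, rows in grouped.items()
        }
        return self

    def predict(self, X):
        return [
            min(self.centroids_, key=lambda lab: math.dist(f, self.centroids_[lab]))
            for f in X
        ]


def synthetic_recording(active_channel, n_samples=200, n_channels=8, seed=0):
    """Fake EMG: low-level noise everywhere, stronger noise on one channel."""
    rng = random.Random(seed)
    return [
        [rng.gauss(0, 1.0 if ch == active_channel else 0.1) for ch in range(n_channels)]
        for _ in range(n_samples)
    ]


def train_gesture_classifier(recordings, size=40, step=20):
    """Cut each labelled recording into windows and fit the classifier."""
    X, y = [], []
    for label, samples in recordings.items():
        for window in sliding_windows(samples, size, step):
            X.append(rms_features(window))
            y.append(label)
    return NearestCentroid().fit(X, y)


# Three made-up patterns, within the "2 to 5 patterns" goal.
recordings = {
    "rest": synthetic_recording(active_channel=None, seed=1),
    "flex": synthetic_recording(active_channel=2, seed=2),
    "extend": synthetic_recording(active_channel=5, seed=3),
}
model = train_gesture_classifier(recordings)

# Classify a fresh window, as the real-time control module would do repeatedly.
new_window = synthetic_recording(active_channel=5, n_samples=40, seed=4)
print(model.predict([rms_features(new_window)]))
```

In a real build, the synthetic recordings would be replaced by labelled data streamed from the armband, and the predicted label would drive the exoskeleton actuator.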

-

The end of our story and the beginning of many more

09/26/2017 at 17:43 • 0 comments

-

Build your own robotic assistive arm

02/18/2017 at 19:01 • 0 comments

Do you want to build a robotic assistive arm? We have the instructions here: www.ourkidscandoanything.com

-

We were on TWIT

02/18/2017 at 19:00 • 0 comments

-

Our story so far

11/23/2016 at 01:33 • 0 comments

Over the course of the last few months, a group of innovators from around the world has joined my daughter and me to build an open-source robotic assistive arm. This is our story so far. Come join us and make it your story too: https://www.linkedin.com/pulse/i-didnt-expect-doing-bodo-hoenen

-

We are getting there!

11/22/2016 at 15:36 • 0 comments

We need help! If anyone knows how to code machine learning to recognize patterns in myoelectric signals, you could change the world with this!

Over the course of the last few months, a group of innovators from around the world has joined my daughter and me to build an open-source robotic assistive arm. This is our story so far. Come join us and make it your story too: https://www.linkedin.com/pulse/i-didnt-expect-doing-bodo-hoenen

-

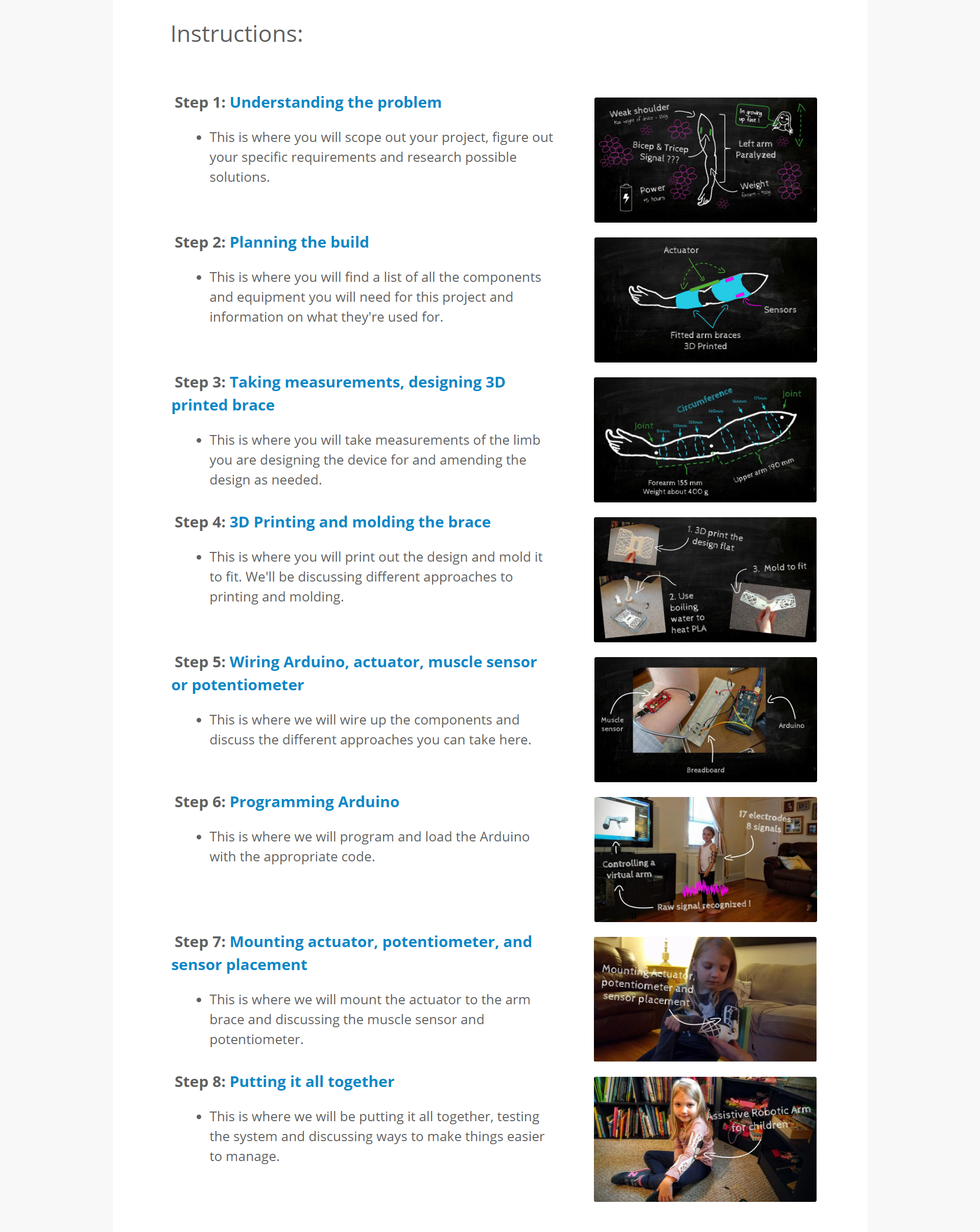

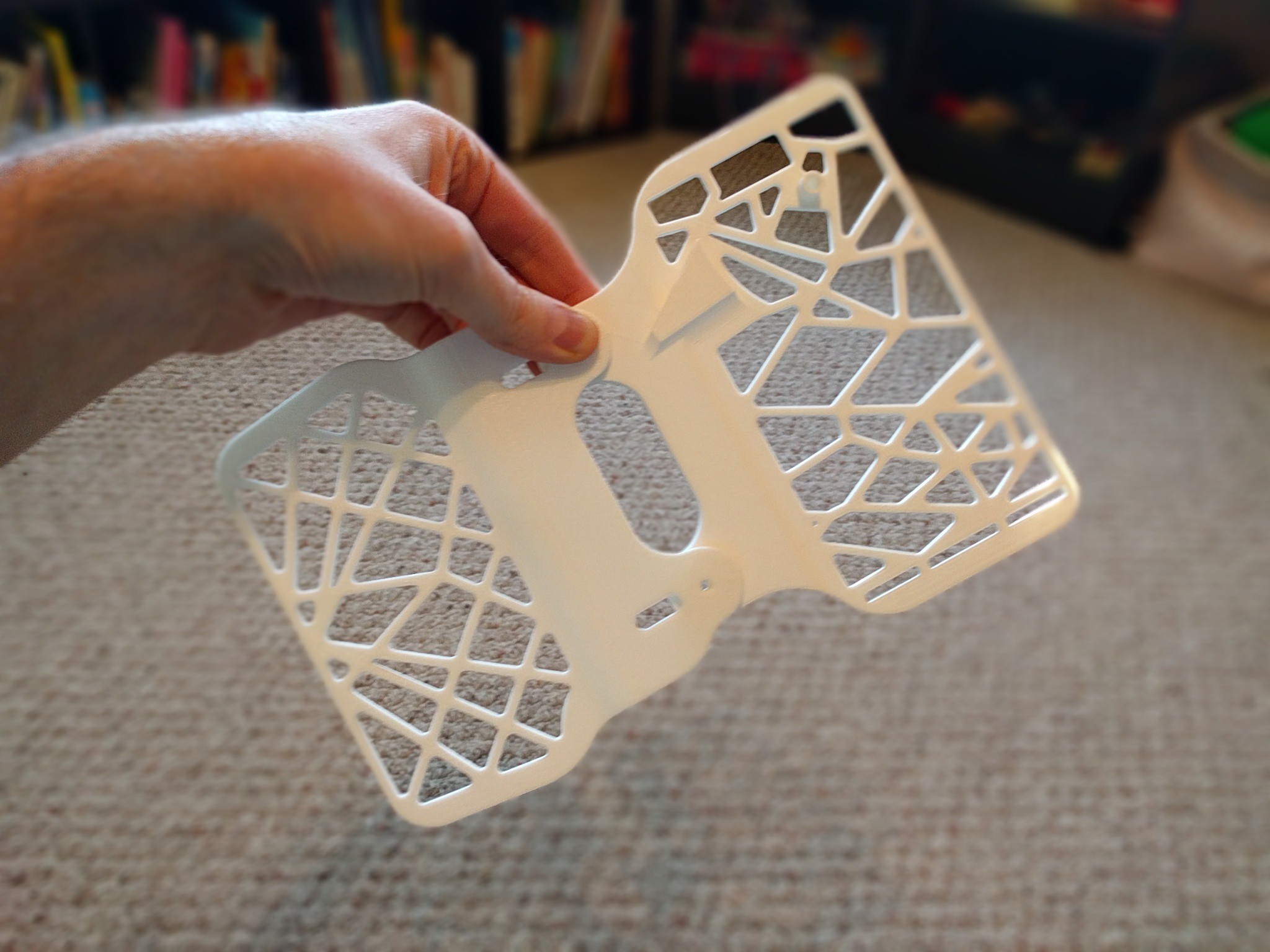

Moulding 3D print

11/05/2016 at 20:42 • 0 comments

We printed out our first attempt at the arm brace. We decided to use PLA plastic, as it would allow us to print the brace flat and then mold it around Lorelei's arm after heating it. To make that easier, and to make sure I didn't burn Lorelei, we made a cast of her upper arm and forearm and molded the brace around those instead. It worked really well!

https://m.facebook.com/story.php?story_fbid=10155164173891754&id=708211753


-

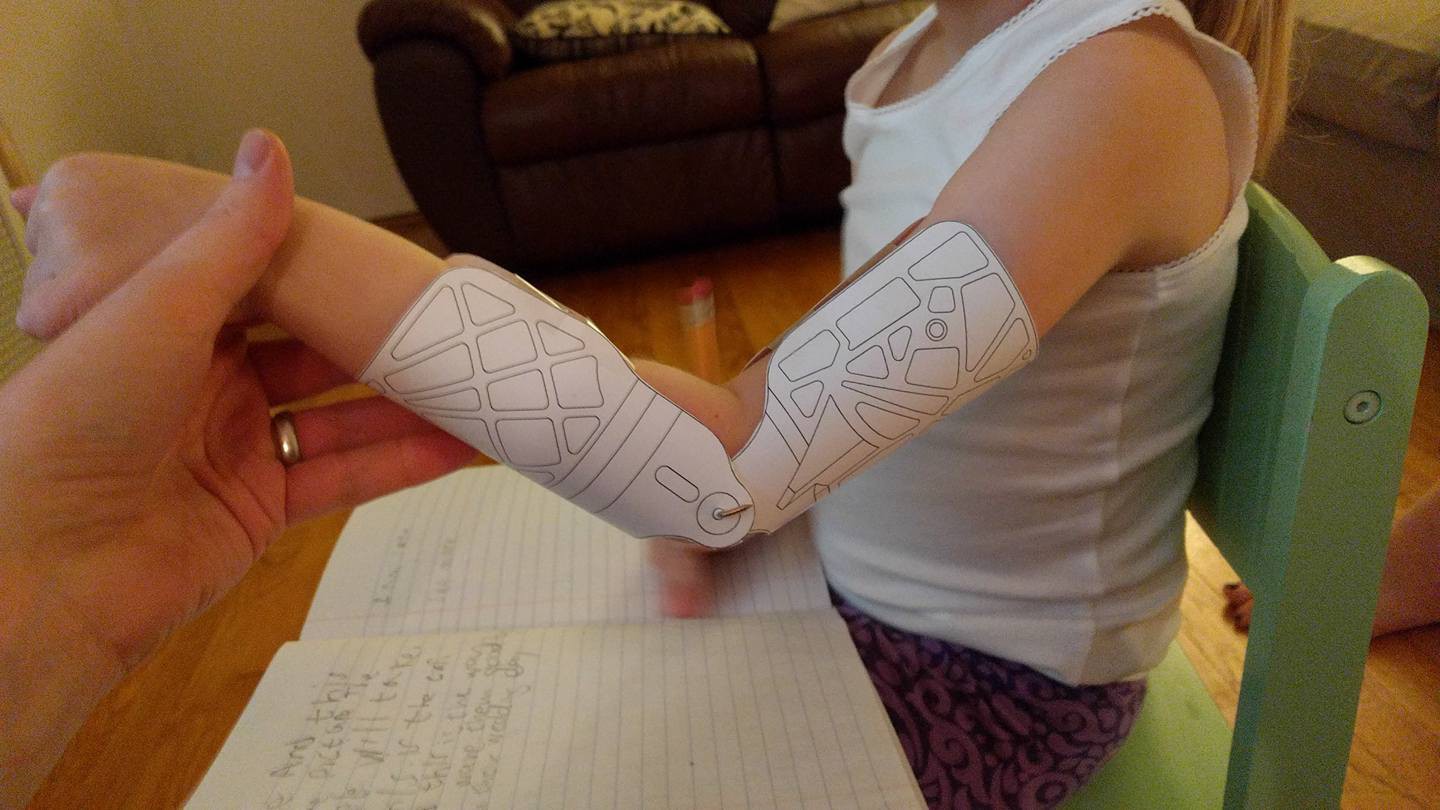

Testing out designs

11/03/2016 at 11:08 • 0 comments

We have been testing out the arm brace design a little by printing it out on paper. Now that we have a design that should work well, we have sent it off to the 3D printer and will hopefully get it by the weekend.


-

Using Machine learning to boost MyoElectric signal recognition

10/21/2016 at 02:04 • 0 comments

Super excited today! Lorelei and I figured out how to reliably get a signal from her arm to control the robotic prosthetic! (Disclaimer: the video quality and lighting are poor, but I was just excited to quickly show you all.)

Background: Up until now we have been struggling to get an accurate signal from her arm to control the robotics. We are looking for the signal that is being sent from Lorelei's brain to her arm, but because of the motor neuron damage, the signal is really weak. The sensors we used until now essentially filtered and normalized the raw signal so that spikes within it could be isolated. When a spike reached a given amplitude (the threshold), we would trigger the robotic actuator to move the prosthetic arm, thereby helping Lorelei move it. However, given how weak the signal in my daughter's muscles is, we had to set the threshold really low, which in turn caused the robotic actuator to be triggered by signals from her heart or from other muscles in her arm or body. This traditional approach became a significant roadblock for our project.
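To make the roadblock concrete, here is a rough sketch of a threshold trigger. It is not the firmware of the sensors we used: the rectify-and-average smoothing, the function names, and the sample values are all assumptions invented for illustration.

```python
from collections import deque


def threshold_trigger(samples, threshold, smooth_len=5):
    """Yield True for each sample where the smoothed signal reaches the threshold."""
    recent = deque(maxlen=smooth_len)
    for value in samples:
        recent.append(abs(value))              # rectify: keep only the magnitude
        envelope = sum(recent) / len(recent)   # moving average of recent magnitudes
        yield envelope >= threshold


def fires(samples, threshold):
    """Would the actuator be triggered at any point during this recording?"""
    return any(threshold_trigger(samples, threshold))


# Made-up readings: a weak intentional contraction and an unrelated heartbeat artifact.
weak_muscle = [0.0, 0.1, -0.1, 0.3, -0.3, 0.3, -0.3, 0.1, 0.0, 0.0]
heart_artifact = [0.0, 0.0, 0.0, 0.0, 0.4, -0.4, 0.3, 0.0, 0.0, 0.0]

for threshold in (0.5, 0.2):
    print(
        f"threshold={threshold}: "
        f"muscle fires={fires(weak_muscle, threshold)}, "
        f"heart fires={fires(heart_artifact, threshold)}"
    )
```

With these invented numbers, the higher threshold misses the weak contraction entirely, while the lower one catches it but also fires on the heartbeat artifact. A single amplitude threshold cannot tell the two apart.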

I was thinking that there must be a better way... I thought of the approaches used in things like image and voice recognition: we can train algorithms to recognize objects in pictures (this is how Google can tell you what is in your photos) or to recognize speech (like Siri and Google Now). In both these examples, the algorithms are trained with vast amounts of data, and the data is not filtered or normalized. What if we did the same with Lorelei's signals? Could we not use a similar machine learning technique to look at the unfiltered, full signal that Lorelei's arm produces and have an algorithm learn to recognize the hidden signals within that noise?

It turns out there is a company, CoApt, that is doing something really similar. After a few emails and a call, they kindly sent us an evaluation unit. After placing 17 sensors on her arm and a bit of calibration, we got it to work. We were able to control a virtual arm, which you can see in the video. This is so amazing! Really amazing stuff, CoApt, thank you!

Building an Assistive Robotic Arm

We are building an assistive robotic arm for my daughter who recently became paralyzed in her left arm.

Bodo Hoenen
