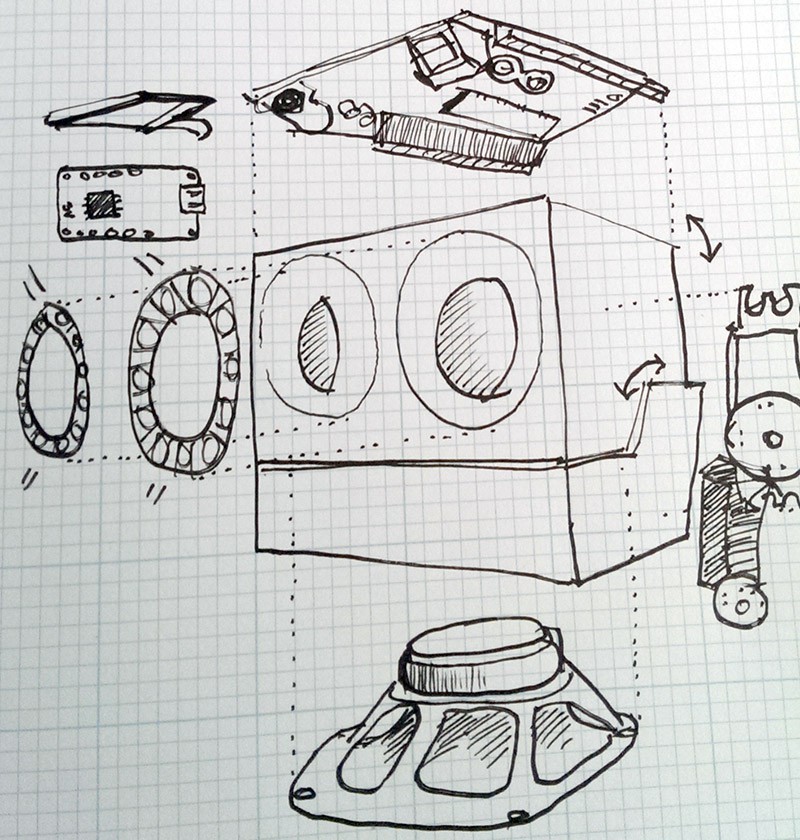

Pablo is formed from one continuously folded cardboard box. His eyes are diffused with an electrical wire spool cut in half. Pablo is open-source hardware and software; details, including the license, can be found on GitHub: https://github.com/somenice/pablo

Pablo uses PyAIML, a FreeBSD-licensed Python AIML interpreter by Cort Stratton, available at http://pyaiml.sourceforge.net/

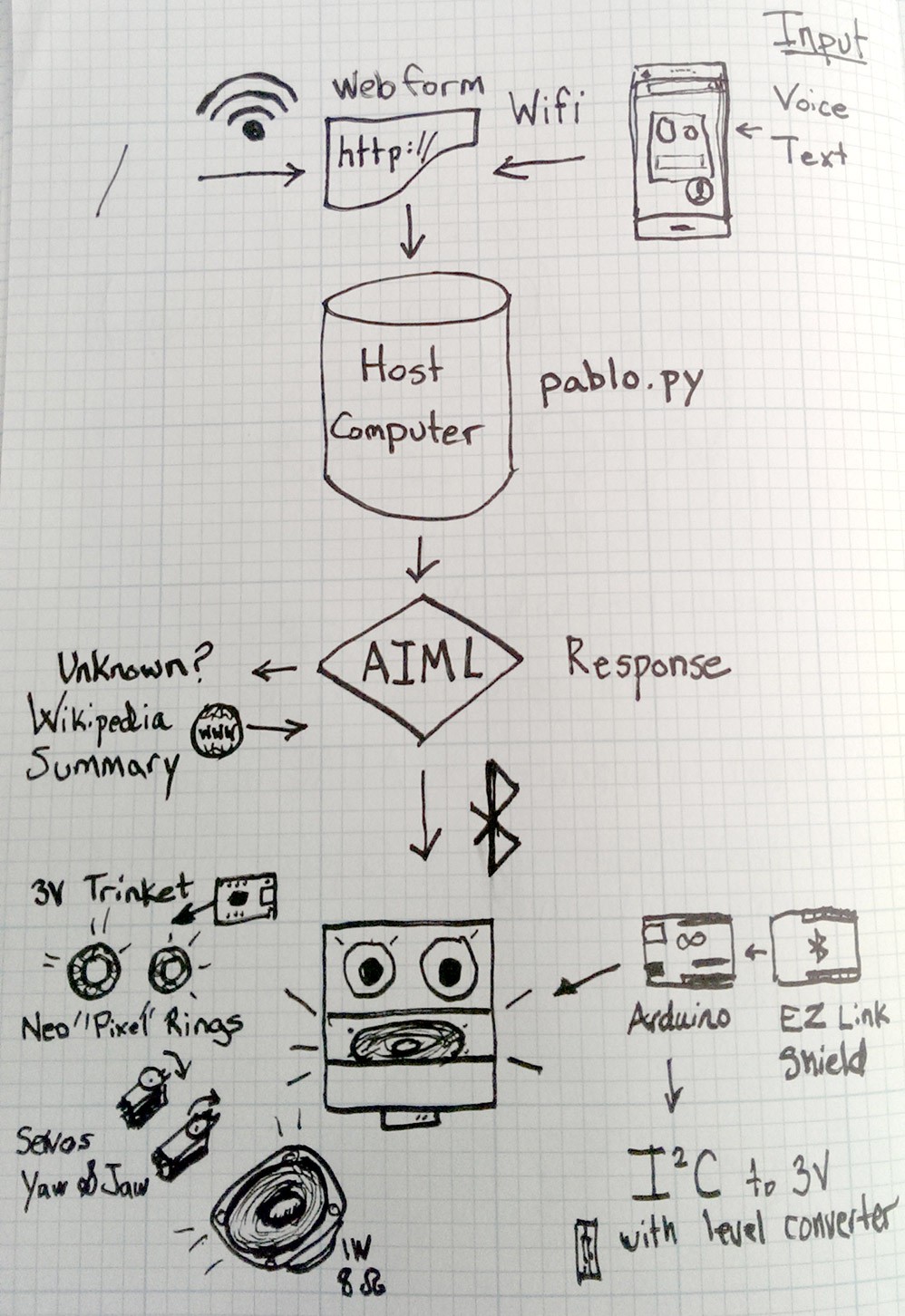

Using the basic PyAIML example plus a web.py form, we are able to talk to Pablo.
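The form side can be sketched with a plain WSGI app from the standard library. This is a stand-in for the web.py form, not the project's code: `respond()` is a hypothetical placeholder for PyAIML's `Kernel.respond()`, and the form field name `say` is made up.

```python
import html
from urllib.parse import parse_qs

# Stand-in for the web.py form (web.py is not in the standard library).
FORM = (
    "<form method='post'><input name='say' autofocus>"
    "<input type='submit' value='Talk'></form>"
)


def respond(text):
    # Hypothetical placeholder for PyAIML's kernel.respond(text).
    return "My name is PABLO." if "NAME" in text.upper() else "dunno"


def render_page(form_body=b""):
    """Build the chat page from a raw url-encoded POST body."""
    said = parse_qs(form_body.decode("utf-8")).get("say", [""])[0]
    reply = ""
    if said:
        reply = "<p>PABLO: " + html.escape(respond(said)) + "</p>"
    return ("<html><body>" + reply + FORM + "</body></html>").encode("utf-8")


def app(environ, start_response):
    """WSGI entry point: read the form post (if any) and return the page."""
    body = b""
    if environ.get("REQUEST_METHOD") == "POST":
        try:
            size = int(environ.get("CONTENT_LENGTH") or 0)
        except ValueError:
            size = 0
        body = environ["wsgi.input"].read(size)
    page = render_page(body)
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8"),
                              ("Content-Length", str(len(page)))])
    return [page]
```

To try it locally, `wsgiref.simple_server.make_server("", 8080, app).serve_forever()` serves the page on port 8080.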

Speech-to-text works on Android, but I need to get the JavaScript API working. I was using Chrome's speech-to-text input feature (x-webkit-speech), but it has since been deprecated. NOTE: As of this writing, a JavaScript Speech API example is implemented but not yet integrated.

AIML responses are built by reducing a set of planned questions to a single answer, e.g. “What’s your name?”, “Who are you?” and “What are you called?” all reduce to PABLO. If no pattern matches, the input is searched on Wikipedia (I'm still working on disambiguation errors). Most of the time, though, Pablo cocks his head to the side and says "dunno".
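A minimal sketch of that reduce-then-fall-back flow, assuming a plain dictionary of canned phrasings rather than real AIML pattern matching. `REDUCTIONS`, `ANSWERS` and `lookup_wikipedia()` are hypothetical; the Wikipedia step is stubbed out so the example runs offline.

```python
import re

# Hypothetical table: several planned phrasings reduce to one answer key,
# similar in spirit to AIML's <srai> reductions.
REDUCTIONS = {
    "WHAT IS YOUR NAME": "NAME",
    "WHATS YOUR NAME": "NAME",
    "WHO ARE YOU": "NAME",
    "WHAT ARE YOU CALLED": "NAME",
}
ANSWERS = {"NAME": "PABLO"}


def normalize(text):
    """Uppercase, drop apostrophes and other punctuation, collapse spaces."""
    text = text.upper().replace("\u2019", "").replace("'", "")
    return " ".join(re.sub(r"[^A-Z0-9 ]", " ", text).split())


def lookup_wikipedia(topic):
    # Hypothetical stub for the Wikipedia fallback; None means "no usable
    # article" (which is also how a disambiguation page could be treated).
    return None


def reply(text):
    """Answer a reduced question, else try Wikipedia, else say 'dunno'."""
    key = REDUCTIONS.get(normalize(text))
    if key is not None:
        return ANSWERS[key]
    summary = lookup_wikipedia(normalize(text))
    return summary if summary else "dunno"
```

Every phrasing in `REDUCTIONS` returns the same answer, and anything unmatched ends at "dunno" until a real lookup replaces the stub.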

It's not quite natural language processing, but with random responses and recollection it can make for convincing conversations.
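Random responses and recollection correspond to AIML's `<random>` element and its `<set>`/`<get>` predicates. The toy class below imitates both in plain Python; the phrasings and the `name` predicate are illustrative assumptions, not Pablo's actual AIML set.

```python
import random


class ToyBot:
    """Toy model of AIML <random> plus a remembered predicate (<set>/<get>)."""

    GREETINGS = ["Hi!", "Hello there.", "Oh, it's you."]

    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        self.predicates = {}  # remembered facts, like AIML predicates

    def respond(self, text):
        words = text.strip().rstrip(".!?").split()
        upper = [w.upper() for w in words]
        if upper[:3] == ["MY", "NAME", "IS"] and len(words) > 3:
            # Recollection: remember the speaker's name, like <set name="name">.
            self.predicates["name"] = " ".join(words[3:])
            return "Nice to meet you, " + self.predicates["name"] + "."
        if upper == ["WHAT", "IS", "MY", "NAME"]:
            # Recall it later, like <get name="name"/>.
            name = self.predicates.get("name")
            return ("Your name is " + name + ".") if name else "dunno"
        # Random response: one of several equivalent answers, like <random>.
        return self.rng.choice(self.GREETINGS)
```

Passing a `seed` makes the random picks repeatable, which helps when testing a conversation script.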

An Arduino with the Adafruit EZ-Link Bluetooth Shield receives the response from the host computer. The response is interpreted, and commands are then issued to the eyes, servos, and speech module.
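One way the "interpret" step could look, sketched in Python for readability even though the real code runs on the Arduino: pull the bracketed `[:...]` commands out of the response, keep the eye (`rgb`) and jaw (`jaw`) commands for local handling, and leave anything else in the text for the speech module. The regular expression and the `LOCAL` set are assumptions based on the commands in the AIML examples in this post.

```python
import re

# Matches in-line commands such as [:rgb220000], [:jaw0] or [:np]:
# group 1 is the lowercase command name, group 2 its argument.
COMMAND = re.compile(r"\[:([a-z]+)\s*([^\]]*)\]")

# Commands assumed to be handled by the Arduino itself (eyes, jaw servo);
# all other bracketed commands stay in the text for the speech module.
LOCAL = {"rgb", "jaw"}


def interpret(response):
    """Split a response into (local actions, text for the speech module)."""
    actions = []
    speech = []
    pos = 0
    for m in COMMAND.finditer(response):
        speech.append(response[pos:m.start()])
        name, arg = m.group(1), m.group(2).strip()
        if name in LOCAL:
            actions.append((name, arg))
        else:
            speech.append(m.group(0))  # pass voice commands through untouched
        pos = m.end()
    speech.append(response[pos:])
    return actions, "".join(speech).strip()
```

Each `(name, arg)` pair would then be routed to the matching hardware, while the remaining text goes to the speech module.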

I'm using DECtalk commands to control the voice (http://www.grandideastudio.com/wp-content/uploads/EpsonDECtalk501.pdf) and DECtalk-like commands for eye and mouth control.
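As a sketch of how DECtalk in-line commands could ride along with the text, the function below prefixes a voice and a speaking rate and wraps the result in a speak command for the Emic2 module mentioned further down. It assumes the Emic2 speaks a line of the form `S<text>` ended by a newline, the DECtalk `[:nX]` voice codes and `[:rate N]` command, and a 1023-character message limit; check all of these against the Parallax and DECtalk documentation before relying on them.

```python
# DECtalk voice codes for [:nX]: Paul, Harry, Frank, Dennis, Betty, Ursula,
# Wendy, Rita, Kit (assumed; check the DECtalk/Emic2 documentation).
VOICES = frozenset("phfdbuwrk")
MAX_MESSAGE = 1023  # assumed Emic2 limit for one speak message


def speak_command(text, voice="p", rate=None):
    """Build one Emic2 speak line: 'S' + DECtalk in-line commands + text + newline."""
    if len(voice) != 1 or voice not in VOICES:
        raise ValueError("unknown DECtalk voice code: %r" % voice)
    prefix = "[:n" + voice + "]"
    if rate is not None:
        prefix += "[:rate %d]" % int(rate)
    message = prefix + " ".join(text.split())  # one line, no embedded newlines
    if len(message) > MAX_MESSAGE:
        raise ValueError("message too long for a single speak command")
    return ("S" + message + "\n").encode("ascii", "replace")
```

The returned bytes are what would be written to the module's serial port; non-ASCII characters are replaced with `?`.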

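```xml
<aiml version="1.0.1">
  <!-- [:rgb000000] sets the eyes to black (off); <think> silently sets the topic -->
  <category>
    <pattern>CLOSE YOUR EYES</pattern>
    <template>[:rgb000000]<think><set name="topic">EYES</set></think></template>
  </category>

  <category>
    <pattern>RED EYES</pattern>
    <template>[:rgb220000]</template>
  </category>

  <!-- [:jaw0] closes the jaw servo -->
  <category>
    <pattern>SHUT UP</pattern>
    <template>[:jaw0]</template>
  </category>

  <!-- <srai> redirects this phrasing to the SHUT UP category -->
  <category>
    <pattern>SHUT YOUR MOUTH</pattern>
    <template><srai>SHUT UP</srai></template>
  </category>
</aiml>
```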
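To see how `<srai>` lets SHUT YOUR MOUTH reuse the SHUT UP template, here is a tiny resolver built on `xml.etree.ElementTree`. It is not PyAIML: it only handles exact patterns (no `*` wildcards), ignores `<think>`, and treats a template containing `<srai>` as a pure redirect.

```python
import xml.etree.ElementTree as ET

AIML = """<aiml version="1.0.1">
  <category><pattern>SHUT UP</pattern><template>[:jaw0]</template></category>
  <category><pattern>SHUT YOUR MOUTH</pattern>
    <template><srai>SHUT UP</srai></template></category>
  <category><pattern>RED EYES</pattern><template>[:rgb220000]</template></category>
</aiml>"""


def load_categories(source):
    """Map each exact PATTERN string to its <template> element."""
    root = ET.fromstring(source)
    return {(c.findtext("pattern") or "").strip(): c.find("template")
            for c in root.iter("category")}


def resolve(categories, text, depth=0):
    """Return a template's text, following <srai> redirects (max 10 hops)."""
    template = categories.get(text.upper().strip())
    if template is None or depth > 10:
        return ""
    srai = template.find("srai")
    if srai is not None:
        return resolve(categories, srai.text or "", depth + 1)
    return (template.text or "").strip()
```

The depth limit guards against two categories that redirect to each other.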

I used the shield's prototyping area to add headers, into which I can temporarily plug the text-to-speech module, the two servo motors, and the level converter connection to the eyes.

The eyes are controlled by a small Adafruit microcontroller called the Trinket and are powered by a LiPo battery. They are self-contained and can easily be repurposed for other projects. I used a 16-pixel ring and a 12-pixel ring, which made some of the eye functions a little specific to this build. The logic level converter lets the Trinket receive commands over I2C from the 5 V Arduino, using the Tinywire library.
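A sketch of what the Arduino-to-Trinket eye message might contain, assuming the six digits after `rgb` are hexadecimal RRGGBB like an HTML colour (so `220000` would be a dim red). The one-byte command code `CMD_FILL` and the byte layout are hypothetical, not Pablo's actual I2C protocol; on the hardware side the bytes would typically be sent with the Arduino `Wire` library and received with Tinywire on the Trinket.

```python
# Hypothetical message layout: one command byte, then red, green, blue.
CMD_FILL = 0x01
RING_SIZES = (16, 12)  # the two pixel rings used in this build


def parse_rgb(arg):
    """Decode the six hex digits of an [:rgbRRGGBB] command into (r, g, b)."""
    if len(arg) != 6:
        raise ValueError("expected six hex digits, got %r" % arg)
    value = int(arg, 16)  # also raises ValueError on non-hex characters
    return (value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF


def fill_payload(arg):
    """Bytes the Arduino would write to the Trinket for a solid eye colour."""
    r, g, b = parse_rgb(arg)
    return bytes([CMD_FILL, r, g, b])


def ring_frames(rgb):
    """Per-pixel colours the Trinket would push to each ring for a fill."""
    return [[rgb] * n for n in RING_SIZES]
```

Keeping the message to four bytes means the Trinket only has to expand one colour across 28 pixels, rather than receive every pixel over I2C.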

Everything is currently crammed into a cardboard box, with a speaker pointed down into the mouth. While Pablo is talking, a talking function randomly moves the jaw servo, opening and closing the mouth. Combined with the advanced settings of the Emic2 voice module, this makes for endless hilarity. The datasheet from Parallax (PDF) shows you how to change the basic settings and take advantage of the more powerful DECtalk processor. A second servo briefly twists the head, as one might picture a confused dog, when an answer is not known.
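The talking function and the head tilt can be sketched as timed servo schedules of `(time_ms, angle)` pairs. All angles, the 120 ms step and the 600 ms tilt are assumed values, not Pablo's calibration. On the hardware, the jaw loop could instead run until the Emic2 sends its `:` ready prompt (our reading of the Parallax datasheet) rather than for a fixed duration.

```python
import random

# All angles are assumed values for illustration, not Pablo's calibration.
JAW_CLOSED = 0
JAW_MAX_OPEN = 30
HEAD_CENTRE = 90
HEAD_TILT = 120


def jaw_schedule(duration_ms, step_ms=120, rng=None):
    """(time_ms, angle) pairs that flap the jaw at random while Pablo talks."""
    if rng is None:
        rng = random.Random()
    moves = []
    t = 0
    while t < duration_ms:
        moves.append((t, rng.randint(JAW_CLOSED, JAW_MAX_OPEN)))
        t += step_ms
    moves.append((duration_ms, JAW_CLOSED))  # always finish with the mouth shut
    return moves


def head_gesture(answer_known, tilt_ms=600):
    """Brief 'confused dog' tilt when the answer is unknown; otherwise stay centred."""
    if answer_known:
        return [(0, HEAD_CENTRE)]
    return [(0, HEAD_TILT), (tilt_ms, HEAD_CENTRE)]
```

Ending every jaw schedule on `JAW_CLOSED` keeps the mouth from being left hanging open when speech stops mid-step.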

There are lots of things to build on and still tons to do, not least of which is his “personality”.

Andrew Smith
