-

Plan for finished system

10/30/2017 at 21:41 • 0 comments

So, I've decided to turn this into a kind of digital naturalist system, with a rewards system for competing against other users to see who can spot the largest number of critters (and we could add plants too).

SPOTTING AND CLASSIFYING COMPONENTS OF THE SYSTEM

1. Camera trap for larger animals

We already have this, but without a waterproof enclosure, and we need to alter the solar recharging circuit too. It is just one Raspberry Pi, or two, that takes images when the PIR triggers, classifies them, then takes video, and uploads these along with the labels returned from TensorFlow. We'll need two Raspberry Pis for night and day detection, unless we solve camera multiplexing. We will also need IR illumination for night detection, so I think we'd want to build our own 5 V one.

2. Hand-held unit for getting close to bugs, and smaller animals like frogs

This will be a Pi with an LCD screen, so users can hold it close to the smaller critters before photographing them. We will need a GUI application to make everything easy. It will start with a camera interface, then run the classification code on the images acquired. Then it can return the result to the user, and ask if they want to upload the spotted critters. This will need 3G/4G connectivity. We can start with USB 3G dongles.

UPLOADING THE IMAGES AND REWARDS SYSTEM

So this is the most difficult bit for me.

What we need:

Digital naturalist system website.

The users of the system will sign up for this and register their spotting/classifying components, and here they will compete against each other to spot the most critters. I guess we could do an XP thing as in gaming, so they'd get not much XP for a cow, but quite a lot for a more elusive creature such as a pine marten. We could alter the XP given based on geo-location. So if a user is in a location with plentiful lions, they would not get so much XP for spotting those. I'd go ahead and add a feed (like the old Facebook feed). So if other users like your spotted critter, you'd get more XP. We'd have a set number of XP points to level up too! The current easiest comparison is Overwatch, I guess.
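To make the XP idea a bit more concrete, here is a minimal sketch of how it could be scored. Everything in it is an assumption for illustration: the base XP values, the `local_abundance` number (0.0 for "never seen near this user" up to 1.0 for "very common there"), the bonus per like, and the XP needed per level are all made up and would need tuning.

```python
# Hypothetical base XP per species; real values would need tuning.
BASE_XP = {"cow": 5, "pine marten": 200, "lion": 150}
DEFAULT_XP = 10  # assumed fallback for species not in the table


def sighting_xp(species, local_abundance, likes=0, xp_per_like=2):
    """Return the XP for one sighting.

    local_abundance is an assumed score from 0.0 (rare where the user is)
    to 1.0 (plentiful where the user is).
    """
    if not 0.0 <= local_abundance <= 1.0:
        raise ValueError("local_abundance must be between 0.0 and 1.0")
    base = BASE_XP.get(species, DEFAULT_XP)
    # Locally common animals are worth less: scale from 100% down to 10% of base.
    scaled = base * (1.0 - 0.9 * local_abundance)
    # Likes from other users in the feed add a small bonus on top.
    return round(scaled) + likes * xp_per_like


def level_for(total_xp, xp_per_level=500):
    """Return the level for a running XP total (level 1 starts at 0 XP)."""
    return total_xp // xp_per_level + 1
```

With these made-up numbers, a lion spotted where lions are plentiful earns a tenth of what it would earn somewhere it is never normally seen, and likes from the feed are simply added on top.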

Digital naturalist system smart-phone apps.

We'd need the same thing, linked to the website, as smart-phone apps for iOS and Android too. Actually, we could do the hand-held unit just from a smart-phone.

Supervised re-training of CNs.

So this is the most exciting part I guess, and it has the cross-over to help researchers. We attract all these users with the competitive element, and then we use their feedback on classified images (e.g. was that actually a starling, or was it a blackbird?) to help retrain our CN. I touched on my idea for doing this with cats here https://hackaday.io/project/20448-elephant-ai/log/69834-building-a-daytime-cat-detector-part-2 . Eventually we will have enough human-labelled images of common wildlife to build a CN from scratch.

THINGS TO DO

1. So should I start by writing a GUI app for Pi in C# as needed by the hand-held unit? Then I can use C# to write the iOS and Android apps too? That seems ok.

2. I've no idea about how to go about doing the website!

-

Re-starting the project since ElephantAI has been submitted now!

10/21/2017 at 19:20 • 0 comments

The majority of the work for this has already been accomplished in ElephantAI. So let me run through what is going on! I'm going to build this first as a daytime wildlife detector. It obviously can be placed anywhere, not just the garden. I'll change the name when I think of a better one! We'll be adjusting it so that it doesn't detect any animals which are trafficked (e.g. pangolin).

BASICS

The daytime detector comprises the following hardware: one Raspberry Pi, a USB dongle for mobile connectivity, a Raspberry Pi camera, a PIR, a LiPo battery, and a waterproof case. I'll use an ABS case for now, but we will 3D print a waterproof case eventually. We'll put a waterproof switch on the outside to switch it on (this just connects the battery). And we'll have a waterproof switch for safe shutdown (shut down the OS, not just kill the power and risk SD card corruption).

So, if we get HIGH on our PIR we will take a series of images using the PiCamera. Say 1 image, wait 3 seconds, then 1 image, looping through for say 5 minutes, but checking whether the PIR went LOW after each 2 images. If it did, we'll break out and wait for it to go HIGH again. You need to be careful with the code for this, or else the PiCamera will raise an error that it has run out of resources following your first round of images. I got stuck with that when building ElephantAI.
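Here is a minimal sketch of that capture loop. It is not the ElephantAI code: the function name `capture_while_motion` and its parameters are made up for illustration, and the PIR and camera are passed in as plain callables so the logic can be tried without hardware. One assumption about the out-of-resources error: it commonly appears when a new camera object is opened while an earlier one is still open, so on a real Pi you would open the camera once and reuse it for every capture.

```python
import time


def capture_while_motion(pir_is_high, capture, max_seconds=300, interval=3,
                         sleep=time.sleep, clock=time.monotonic):
    """Take images in pairs while the PIR stays HIGH, for at most max_seconds.

    pir_is_high: callable returning True while the PIR output is HIGH.
    capture:     callable that takes one image and returns something
                 identifying it (e.g. a file path).
    Returns the list of values returned by capture().
    """
    images = []
    start = clock()
    while clock() - start < max_seconds:
        images.append(capture())   # 1 image
        sleep(interval)            # wait 3 seconds
        images.append(capture())   # then 1 more image
        if not pir_is_high():      # check the PIR after each 2 images
            break                  # went LOW: stop and wait for the next trigger
        sleep(interval)
    return images


# On a Pi, an assumed setup would open the camera ONCE and reuse it, e.g.:
#   with picamera.PiCamera() as camera:
#       images = capture_while_motion(read_pir, take_photo)
# where read_pir and take_photo are hypothetical helpers wrapping the GPIO
# input and camera.capture(path).
```

Passing `sleep` and `clock` in as arguments is only there so the loop can be exercised with fake time; on the device the defaults are fine.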

Now, we go and pass these images to the wildlife detector, which only uses TensorFlow with the off-the-shelf InceptionV3 model. It is just this code, adjusted to put our top 5 detected animals into a list and return it from the wildlife detector function: https://github.com/tensorflow/tensorflow/blob/master/tensorflow/examples/image_retraining/label_image.py

Right, well if we didn't get any animals in our top 5 detections, we can just delete the images and go back to waiting for PIR to be HIGH and do the above all over again.

But if we did, then we can go ahead and tweet those images with their suspected names, using our 3G USB dongle!
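The "any animals in our top 5?" check could look something like the sketch below. It assumes the classifier step has already produced one score per label, which is roughly what the label_image.py script prints; the names `top_animals`, `scores`, `labels` and `animal_names` are made up here, and the set of labels that count as animals would have to be compiled by hand.

```python
def top_animals(scores, labels, animal_names, top_k=5):
    """Return [(label, score), ...] for the top_k predictions that are animals.

    scores:       one score per label, in the same order as labels.
    labels:       class names from the model's label file.
    animal_names: assumed hand-made set of labels that count as animals.
    """
    ranked = sorted(zip(labels, scores), key=lambda pair: pair[1], reverse=True)
    return [(label, score) for label, score in ranked[:top_k]
            if label in animal_names]


# Made-up scores for one image, just to show the shape of the data.
labels = ["fox", "lawn mower", "hedgehog", "bucket", "badger", "fence", "cat"]
scores = [0.50, 0.20, 0.10, 0.08, 0.05, 0.04, 0.03]
animals = {"fox", "hedgehog", "badger", "cat"}

detected = top_animals(scores, labels, animals)
if detected:
    print("tweet with suspected names:", [name for name, _ in detected])
else:
    print("no animals in top 5: delete images and wait for the PIR again")
```

An empty list means nothing in the top 5 was an animal, so the images can be deleted; otherwise the returned names are what would go into the tweet.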

That's all really!

ADJUSTMENTS

Could we cut down our time taking images to, say, 1 minute? Then if any animals are detected in the images after they are sent to the wildlife detector, we start taking video for 30 seconds? Then we go ahead and upload the images + video to Twitter with their suspected names?

Or we could try and detect from video, but I'm not sure about doing this using Raspberry Pi really.

FURTHER

What I'd like to do, and it's the same thing as with ElephantAI, is to get Twitter users to help with identifying the spotted animals, and use this data either to build a CN for common garden animals/common animals from scratch, or to retrain an existing model with some new, more accurate classes of common animals.

So it goes something like this:

1. Twitter users will tweet back with hashtags #yescorrect or #nowrong according to whether the image they see corresponds to the suspected animal tagged by our wildlife detector.

2. Now this is not done on the Raspberry Pi at all! The replies will be monitored by a server. All the Raspberry Pis do is send out their images with suspected animals tagged.

3. If an image gets a designated number of #yescorrect replies back from Twitter users, the server will store that image in a directory named correct_animalname. If an image gets a designated number of #nowrong replies back from Twitter users, the server will store that image in a directory named wrong_animalname.

4. Now, if a large number of people are using the automated wildlife detection systems, and there is a lot of Twitter interaction, we could be getting 100s of correct_animalname images per week!

So, as you might have guessed, we've got an easy way to do supervised machine learning!

5. After a few weeks, our server can go ahead and retrain an off-the-shelf model with all these images of common animals that have been labelled according to their class (name) by Twitter users!

6. We could go ahead and send the new graph to the automated wildlife detection systems if we wanted via their 3G connectivity. So updating them every month or so!

7. And as the whole process repeats, we get increasing accuracy for our wildlife detector, as more and more correctly labelled images are accumulated! So we'd soon have enough images to train a CN for this common wildlife detection task from scratch!

* there's the obvious issue that Twitter users may try to sabotage the system by sending the wrong hashtags, e.g. saying a correctly labelled animal was incorrectly labelled :-(
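The server-side sorting in step 3 could be sketched like this. It is only an illustration under assumptions: the replies are taken to arrive as (user, text) pairs from whatever is monitoring Twitter, the function name `decide_folder` and the threshold of 10 are made up, and counting at most one vote per user is a small, partial guard against the sabotage issue, not a solution to it.

```python
from collections import Counter


def decide_folder(replies, animal, threshold=10):
    """Return 'correct_<animal>', 'wrong_<animal>', or None if undecided.

    replies: iterable of (user, text) pairs; only the first valid vote
             from each user is counted.
    """
    votes = Counter()
    seen_users = set()
    for user, text in replies:
        if user in seen_users:
            continue  # one vote per user
        # Collect hashtags, ignoring case and trailing punctuation.
        tags = {word.strip(".,!?").lower()
                for word in text.split() if word.startswith("#")}
        has_yes = "#yescorrect" in tags
        has_no = "#nowrong" in tags
        if has_yes == has_no:
            continue  # neither hashtag, or both: not a usable vote
        seen_users.add(user)
        votes["yes" if has_yes else "no"] += 1
    if votes["yes"] >= threshold and votes["yes"] > votes["no"]:
        return f"correct_{animal}"
    if votes["no"] >= threshold and votes["no"] > votes["yes"]:
        return f"wrong_{animal}"
    return None  # not enough votes yet, or a tie
```

An image only moves into a correct_ or wrong_ directory once one side reaches the threshold and is ahead of the other; until then it just stays where it is.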

-

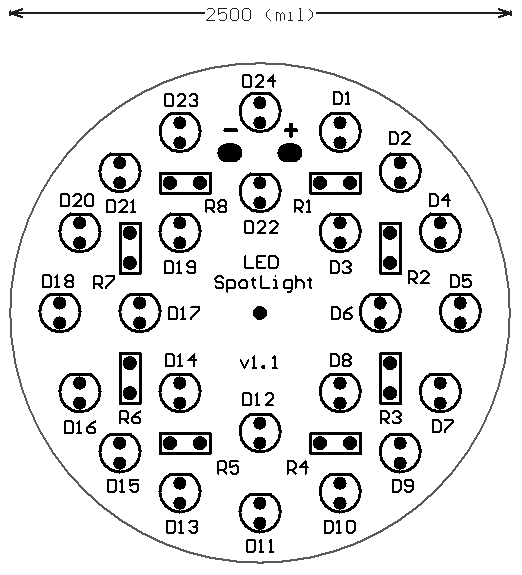

IR LED array

10/09/2016 at 20:47 • 0 comments

I'm going to try a 24x IR LED array on a PCB to begin with, using this design https://www.pcboard.ca/led-spotlight , although a huge 20x20 array was tempting.

-

Machine vision algorithms

10/05/2016 at 20:44 • 0 comments

I'll stick to using a Haar feature-based cascade classifier, and I'll be training it with garden wildlife images actually obtained using the device. I think this will be more accurate than having to use the 1000s of elephant images I obtained from Wikimedia Commons etc. for the elephant project. I might try HOG + Linear SVM instead, although I'm not sure and have never used this on a Raspberry Pi.

UPDATE: I will not be using Haar! An object detector using HOG + SVM will be used to start with!

-

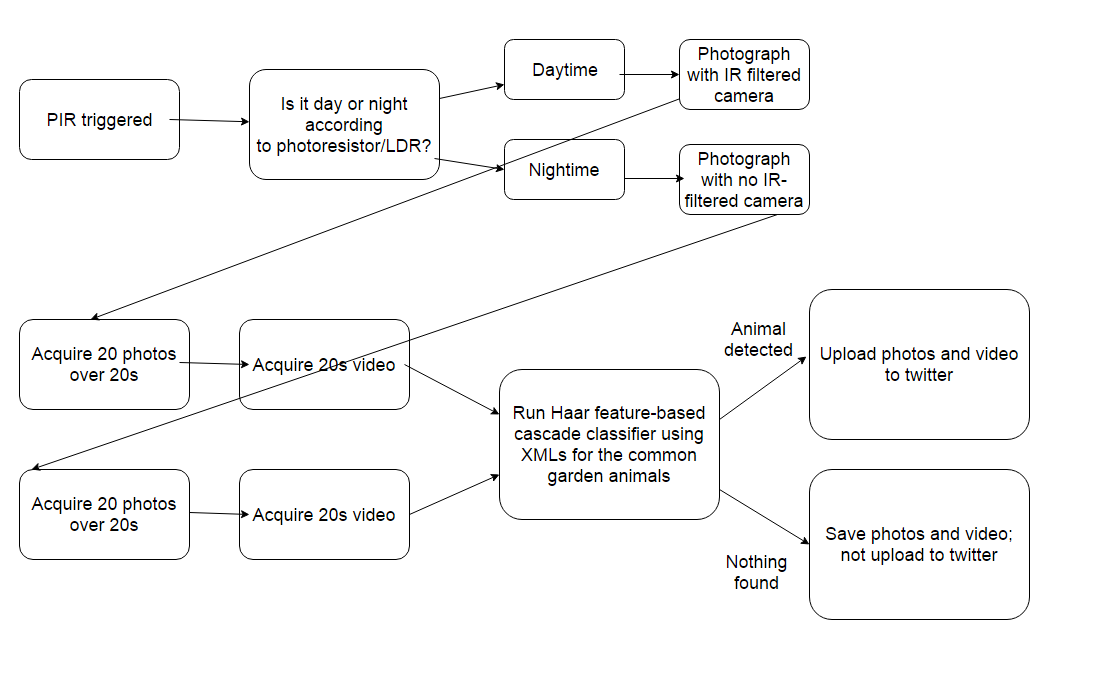

Data flow outline

10/05/2016 at 20:19 • 0 comments

-

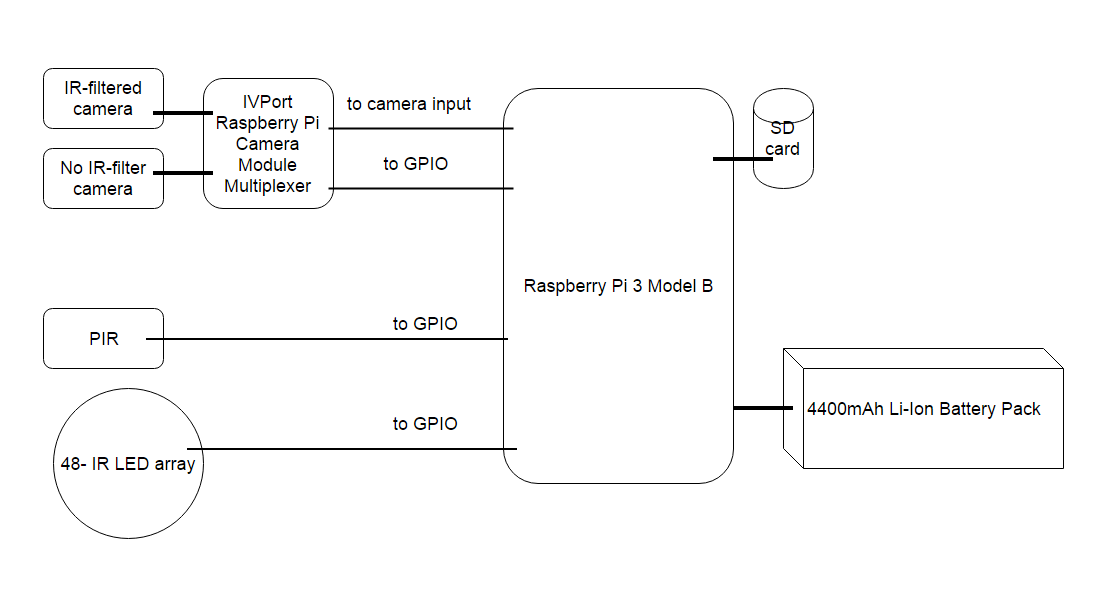

Hardware design

10/05/2016 at 20:00 • 0 comments

Using Wi-Fi for comms, as the detector should be close enough to the house. Alternatively I would use RF (XBee 2mW Wire Antenna - Series 2 (ZigBee Mesh)), or a USB modem.

* don't forget the photoresistor to determine day or night!

Machine-vision based wildlife-detection project

Camera-trap for larger animals, and hand-held (Pi, or run on smart-phone) for bugs and smaller animals (e.g. frogs)

Neil K. Sheridan