UBASIC And The Need For Speed

I basic, 'ubasic', they basic, we basic; wouldn't you like a speedy BASIC, too?

I basic, 'ubasic', they basic, we basic; wouldn't you like a speedy BASIC, too?

To make the experience fit your profile, pick a username and tell us what interests you.

We found and based on your interests.

tokenizer2.cthe output from processing ubasic.re, for those who might be casually curious to see what the generated DFA looks likeC Source File - 23.45 kB - 06/20/2018 at 17:18 |

|

|

ubasic.rethe re2c input file, for those who might be casually curious to see what one looks likere - 10.23 kB - 06/20/2018 at 17:17 |

|

Jun-16 12:09 PM

With the two new mechanisms available, it was time to test. Since the motivation for the activity was to get a feel for the improvements, I decided to test a little differently. This time I did not use the profiler, but rather added a simple stopwatch around the execution of the program. The test program was intended to be run forever, so I altered it to run for just 10 generations and then exit. Since I was running this on Windows, I used the QueryPerformanceCounter() api to make my stopwatch:

int main(int argc, char* argv[])

{

LARGE_INTEGER liFreq; //... so chic

LARGE_INTEGER liCtrStart, liCtrEnd;

QueryPerformanceFrequency ( &liFreq );

QueryPerformanceCounter ( &liCtrStart );

//setup to execute program.

ubasic_init ( _achProgram, false );

//run the program until error 'thrown'

do {

if ( !setjmp ( jbuf ) )

ubasic_run();

else

{

printf ( "\nBASIC error\n" );

break;

}

} while ( ! ubasic_finished() );

QueryPerformanceCounter ( &liCtrEnd );

double dur = (double)(liCtrEnd.QuadPart - liCtrStart.QuadPart) / (double)liFreq.QuadPart;

printf ( "duration: %f sec\n", dur );

return 0;

}

This should give the 'bottom line' numbers on how these two improvements worked out. Plus I wanted to visually see it!

Also, I wanted to subtract off the contribution of screen I/O. Screen I/O on Windows console is quite slow, and having that contribute to the numbers would be meaningless for the target platform, since it has a completely different I/O system (SPI LCD? I don't know). This was easy, fortunately, since the I/O from the BASIC is character oriented -- I simply put a conditional compile around the actual output routines:

//this lets us suppress screen output, which skews timing numbers

//towards console IO away from our program's computation time

//#define NO_OUTPUT 1

//write null-terminated string to standard output

uint8_t stdio_write ( int8_t * data )

{

#if NO_OUTPUT

return 0;

#else

return (uint8_t) puts ( (const char*)data );

#endif

}

//write one character to standard output

uint8_t stdio_c ( uint8_t data )

{

#if NO_OUTPUT

return data;

#else

return (uint8_t)_putch ( data );

#endif

}

Testing time! I created 4 test scenarios:

I also created two flavors: suppressed screen output, and included screen output.

I ran each configuration a few times and used the 'best of three' timing for each. I used 'best of three' because wall-clock timing this way can be affected by all sorts of other stuff coincidentally going on in the operating system at the time, so I wanted to have a little insulation from that.

With screen output suppressed:

And to observe how much the screen I/O contributes to runtime in my test program:

Jun-16 12:09 PM

As mentioned before, the core 'goto' implementation is in jump_linenum(), and works by restarting parsing of the entire program until the desired line if found. Here is a compacted form:

static void jump_linenum(int linenum)

{

tokenizer_init(program_ptr);

while(tokenizer_num() != linenum)

{

do

{

//... eat tokens; emit an error if we reach end-of-stream

}

while(tokenizer_token() != TOKENIZER_NUMBER);

}

}

I was not wanting to do a major re-engineering of the ubasic, so I decided to keep the rest of the system as-is, and only rework this method.

The strategy I used here was to make a 'cache' of line-number-to-program-text-offset mappings. Then, when this function is invoked, the cache is consulted to find the offset into the program, and set the parser up there. Because tokenizer_init() is a cheap call, I used it to do the setting up of the parser by simply pretending that the program starts at the target line we found, rather than try to fiddle with the internals of the parser state. A hack, but a convenient one, and ultimately this exercise was to prove the concept that improving jump_linenum() would have a material impact, and how much.

For simplicity, my 'cache' was simply an array of structs, and my 'lookup' was simply a linear search. Also, I simply allocated a fixed block of structs. In a production system, this could be improved in all sorts of ways, of course, but here I just wanted to see if it was a worthwhile approach.

The struct I used was simple, and there was added a 'setup' method that was called during program initialization time to populate the cache:

//HHH a line number lookup cache; this is just proof-of-concept junk

typedef struct

{

uint16_t _nLineno; //the line number

uint16_t _nIdx; //offset into program text

} LNLC_ENT;

LNLC_ENT g_nLines[1000]; //mm-mm-mm. just takin' memory like it's free, and limiting the program to 1000 lines

int g_nLineCount = -1;

#define COUNTOF(arr) (sizeof(arr)/sizeof((arr)[0]))

void __setupGotoCache(void)

{

tokenizer_init(program_ptr);

g_nLineCount = 0; //the beginning is a very delicate time

while ( tokenizer_token () != TOKENIZER_ENDOFINPUT )

{

int nLineNo;

const char* pTok;

const char* __getAt (void); //(added to tokenizer.c)

//we must be on a line number

if ( tokenizer_token () != TOKENIZER_NUMBER )

{

g_nLineCount = -1; //purge 'goto' cache

sprintf ( err_msg, "Unspeakable horror\n" );

stdio_write ( err_msg );

tokenizer_error_print ();

longjmp ( jbuf, 1 );

}

//remember where we are

nLineNo = tokenizer_num ();

pTok = __getAt ();

//create an entry

g_nLines[g_nLineCount]._nLineno = nLineNo;

g_nLines[g_nLineCount]._nIdx = pTok - program_ptr;

//whizz on past this line

while ( tokenizer_token () != TOKENIZER_ENDOFINPUT &&

tokenizer_token () != TOKENIZER_CR

)

{

tokenizer_next ();

}

if ( TOKENIZER_CR == tokenizer_token () )

{

tokenizer_next ();

}

//next entry

++g_nLineCount;

//too many lines?

if ( g_nLineCount >= COUNTOF ( g_nLines ) )

{

g_nLineCount = -1; //purge 'goto' cache

sprintf ( err_msg, "Program too long to cache!\n" );

stdio_write ( err_msg );

tokenizer_error_print ();

longjmp ( jbuf, 1 );

}

}

//did we get anything? if not, purge 'goto' cache

if ( 0 == g_nLineCount ) //pretty...

Read more »

Jun-16 12:09 PM

It's a little inconvenient for the construction of this back-formed log, but chronologically I had started investigating a potential way of replacing the get_next_token() implementation just prior to the events of the last log entry, wherein I had discovered some data anomalies that changed my opinion about what work would result in the biggest gains for the least costs. But having got past that diversion, I was now more resolved to actually implement and measure results.

My background is not in Computer Science -- I am self-taught in software engineering -- and consequently there are holes in my understanding of various formal Computer Science topics. Amongst these is all the theory around the construction of language processors. But I'm at least cursorily familiar with their existence, and I can construct useful web queries.

Parsing is one of my least favorite activities, right up there with keyboard debouncing and bitblt routines. Doubtlessly this is because I have always hand-crafted parsers. You can get by with that for simple stuff, but it rapidly becomes an un-fun chore. Many years back I decided to bite-the-bullet and learn about 'regular expressions' as the term applies to software, which are a computerification of some stuff that Noam Chomsky and others did ages ago in the field of linguistics.

https://en.wikipedia.org/wiki/Regular_language

Suffice it to say that regular expressions (or 'regexes') are a succinct way of express a pattern for matching against a sequence of symbols. Most will be familiar with a very simple form of pattern matching for doing directory listings; e.g. 'ls *.txt' to list all files that end with '.txt'. Regexes are like that on steroids.

My boredom with parsing is evidently something of a cultural universal, because there have been tools created to automate the generation of parsers since time immemorial, and they pretty much all use regexes for the first part: 'lexing', or breaking stuff up into tokens ('lexemes'). I.e., exactly what we are trying to do in get_next_token(). (The second part is applying grammar, which we are leaving in the existing ubasic implementation). There are a lot of tools for all the usual reasons: folks make new versions with modern capabilities, folks make versions with more power, folks make versions suited to different execution environments. So, time for shopping...

I didn't spend a lot of time researching options, but I did spend a little, and I'll spare you the shopping tales. I decided on using 're2c' to generate the lexer/tokenizer.

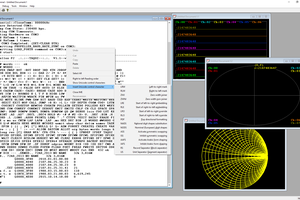

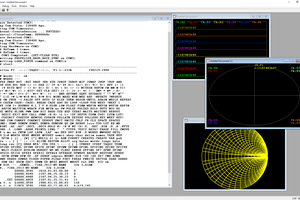

This tool is pretty simple in what it does: take a list of regular expressions associated with a chunklette of C code to invoke when they match. It doesn't really even create a complete piece of code, but rather is expected to have it's output spewed into the middle of a function that you write, that is the lexical scanner. The code that it generates is a monster switch statement that represents a compiled and optimized Deterministic Finite Automata (DFA)

https://en.wikipedia.org/wiki/Deterministic_finite_automaton

for all the patterns that you have specified. It is held in high regard, and apparently results in more efficient code than some alternative approaches, such as the table-driven approach used in Lex/Flex. It is also minimalistic in that it just spews out pure C code, with no particular library dependencies (not even stdlib, really) since you are expected to do all the I/O needed to provide symbols to it. So it seemed a good match, and a-learnin' I did go.

I had not used this tool before, so I did the usual cut-and-paste of the most simple examples I could find for it, and then find the 'hole' where I stick in my regexes. I figured that out fairly quickly, so then I needed to see how to marry all this with the existing ubasic code.

There was...

Read more »Jun-15 8:01 PM

When writing, I try to edit, but I have know that I will fail and there will be silly typos. I have gotten over this. Less so with errors in facts. And so it was the case when I was looking at the data again, and noticed that some things did not quite jibe.

For one thing, the time taken for strncmp was shown to be zero, even though it was called 125 million times. Somehow I doubt that. get_next_token()'s implementation for the most part is looping over a list of keywords and calling strncmp(). So it seemed that strncmp()'s execution time was being somehow sucked up into get_next_token()'s.

Another thing was that jump_linenum() was being called an awful lot. Doing a quick cross-reference to the call sites for this method, I discovered that it is used for 'goto', but also 'gosub', 'return', 'next', 'if', 'then' -- pretty much anything that changes flow-of-control other than sequentially forward. That makes some sense. But then I noticed the implementation details, and things really made sense:

static void jump_linenum(int linenum)

{

tokenizer_init(program_ptr);

while(tokenizer_num() != linenum)

{

do

{

do

{

tokenizer_next();

}

while(tokenizer_token() != TOKENIZER_CR &&

tokenizer_token() != TOKENIZER_ENDOFINPUT);

if(tokenizer_token() == TOKENIZER_CR)

{

tokenizer_next();

}

if (tokenizer_token() == TOKENIZER_ENDOFINPUT)

{

sprintf(err_msg,"Unreachable line %d called from %d\n",linenum,last_linenum);

stdio_write(err_msg);

tokenizer_error_print();

longjmp(jbuf,1); // jumps back to where setjmp was called - making setjmp now return 1

}

}

while(tokenizer_token() != TOKENIZER_NUMBER);

DEBUG_PRINTF("jump_linenum: Found line %d\n", tokenizer_num());

}

}So, the first thing that caught my eye is "tokenizer_init(program_ptr);". That means any time we are changing flow-of-control, we are re-initializing the parsing of the entire program from the beginning. The init call itself is not expensive -- it just sets up some pointers -- but it makes it clear that any time one does a transfer of control, that we are setting up to scan the whole program to find the target line number.

The next thing that caught my eye were the loops within. Essentially, they are tokenizing the program, and throwing away all the tokens, except for the end-of-line. This is used to sync the parser to the start of the line (with the line number being the first parsed value) to test if it is the target line number. That's when I had a realization: that probably the reason there is so much time spend in the tokenizer routine 'get_next_token()' is because all transfer-of-controls imply re-parsing the program text up to the target line.

In retrospect, maybe this should have been a bit obvious looking at the numbers from the profiler, the 17,186 jump_linenum calls being related to 5,563,383 get_next_token calls, but I didn't think much of it at the time.

So, I suddenly got a sinking feeling that I had given bad advice in recommending focusing on improving get_next_token(). That that function still could benefit from improvement, but the real gains might be in simply having it called far less often.

The other thing that puzzled me was the apparently free calls to strncmp that were made 125 million times. Now that would be some magic. Having freshly studied jump_linenum(), I hit upon an intuition: maybe information is being lost behind the 'static' declaration. This warrants a little explaining...

'static' is an odd C keyword in that is overloaded to mean either of:In this case it means 'private'. Compilers...

Read more »Jun-12 3:10 PM - Jun-12 6:01 PM

I was eager to get some empirical validation (or negation) of these hypotheses (and maybe even get some new ones). However, I didn't have the actual hardware. On the one hand, you can't draw conclusive inferences when testing on a non-normative platform, but on the other hand, what else can I use. So I decided to go ahead and port the program to a desktop application, and just try to be careful to avoid measuring anything that was not CPU related (like screen I/O), and even then, take the results with a grain of salt.

Mostly because of personal familiarity, and partially because of expedience, I decided to port the ubasic into a Windows application. The reasons were:

It seems that Microsoft has significantly dumbed-down the profiler in VS, in the past few years. On the upside, it's much easier to start up, but I do miss the granularity of control that I had before. Maybe I'm a masochist. Anyway, for this exercise, I'm using Developer Studio 2013 Community Edition. (Old, I know, but I use virtual machines for my build boxes, and I happened to have one already setup with this version in it.)

But first things first: building an application.

The needed changes were relatively straightforward, thanks to the clean effort of the [project's authors] -- especially considering how quickly they built this system. Mostly, I needed to do some haquery to simply stub out the hardware-specific stuff (like twiddling gpio), since my test program didn't use those, and then change the video output to go to the screen. Fortunately, since those routines were character-oriented at the point where I was slicing, it was easy for me to change those to stdio things like 'putc' and 'puts'. It still took about 3 hours to do, because you need to also understand what you're cutting out when you're doing the surgery.

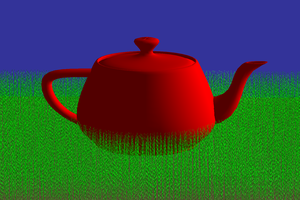

After that, I was able to run the sample BASIC life program. The screen output was garbage, so maybe it wasn't working, but I could see screen loads of output, followed by a pause, followed by more screen loads of output. Nothing crashed. This is a pretty good indication that things are more-or-less working.

Just looking at the output change during the run showed that it was pretty slow. This was running on my 2.8 GHz desktop machine and it was slow, so I can only imagine the experience on the 48 MHz embedded processor. To make it visually look a little better, I tweaked the source code to output a different character, and implemented the 'setxy' function to actually set the cursor position, and then had Windows remember the screen size, which I set to 40x21 (I added a row because doing a putc to the last character position would stimulate a screen scroll). Now it looks a little more like 'Life'.

OK, that's enough play time. We should be ready to do a profiling run. As mentioned, this is more easily launched in current Visual Studios. In the case of VS 2013, it is under the DEBUG menu as 'Performance and Diagnostics. (check the docco for your version if different; they move it from time-to-time.)

Which will lead to the opening screen.

And when you click 'start' you'll get into the 'performance wizard', which is just a sequence of dialog boxes with options.

There's several ways to profile. The default is a low-impact sampling method. In retrospect, I probably should have used this to start with -- it usually presents a reasonably accurate view, and it is low-impact. But I was going for precision out of habit, and I chose 'Instrumentation'. This has associated costs.

The 'CPU sampling' method presumably works by periodically interrupting the process and seeing where it is, and taking notes. The 'Instrumentation'...

Read more »Jun-11 7:17 PM - Jun-11 9:50 PM

Initially, I described some speculative causes for 'slowness':

I asked what does 'slow' mean in this context, because there really was no baseline: it was a bespoke project unlike any other. It just 'felt' slow at some intuitive level. I asked if I could have a sample BASIC program that felt slow, and that I would take a looky-loo.

The sample program happened to be an implementation of Conway's game of Life, and I was familiar with that. They were super popular in the 70s-80s, and I had run one at least once on my actual TRS-80 back in the day, with it's screaming hot ~1.77 MHz Z-80. And Z-80's took 4-23 clocks to do one instruction, so I would think that, yeah, a 48 MHz MIPS could probably do a little better.

Now, I had no reason to believe that the video thing I mentioned was relevant here, but I did want to point out that sometimes it's not where you think it is. In that case, you could 'optimize' the BASIC until it took zero CPU time, and that still would not get you improvements to deal with an I/O bound bottleneck, or even a CPU bottleneck that is not where you are looking. Believe me, I have made this mistake before.

Speculation is useful as a heuristic to formulate hypotheses, but you really need to test empirically. Not measuring risks wasting development time chasing wild aquatic fowl, and that is itself a kind of speed optimization.

Jun-6 4:20 PM - Jun-11 12:44 PM

[MS] had reached out asking about a project of mine, the [TRS-80 on a PIC32], and if it was considered stable. I believed it was -- it's an older project of mine, and now it's in enhancement/maintenance mode (though interesting bugs are still found from time-to-time by users!) At length, the reason for the inquiry is that they were thinking about replacing an existing BASIC interpreter that seemed slow with an emulation of a TRS-80. They did a little research and so came across my project.

However, as flattered as I was at the notion of porting my emulator to another hardware platform (which I would enjoy), I had to ask: wouldn't a native implementation be faster than an implementation on an emulated CPU? And has there been any analysis done on where the slowdown might be.

[The guys involved in the project] had been running at break-neck speed to get it completed for an event, and really hadn't had time to dig into the speed problem. (I know, I saw the commit history -- it was very impressive what was done in such a small time. Integrating this BASIC was just a small part of the overall project.) Since that event was over, there was a little time to come up for breath and look at possible improvements.

I had taken a peek at the BASIC implementation. It was straightforward: just 2 C-language modules (4 files, header and implementation), and didn't have any funky library dependencies. The code was originally written by an Adam Dunkels [http://dunkels.com/adam/ubasic/] as a personal challenge to himself to speed-code a functioning BASIC, and he released it unto the world for anyone to use if they liked. [The project team] had extended it with a bunch of new keywords, and of course adapting to their board's I/O subsystems.

OK, those that know me, know that I have a fetish for disassembly. I'd check myself into Betty Ford if they had one for reversers. I don't know why it is -- it just is. It's yielded some good things professionally, but not often enough to slake my thirst. A distant third or fourth place is for code optimization, so for someone to say they have a speed problem in some straightforward C source code... That's going to be a... 'distraction', for me. I guess it's best just to take a look and get it over with....

Create an account to leave a comment. Already have an account? Log In.

Become a member to follow this project and never miss any updates

glgorman

glgorman

Badmonky

Badmonky