Latest News

- Publications:

- Prizes:

Thanks to all of you who support and follow us; your feedback is hugely helpful...

___________________________________________________________________________

Challenge

Indoor 3d positioning is a problem that has been worked on for a while, but there is still no perfect solution.

The closest available option is probably camera-based motion capture, but it is very expensive and doesn't scale (above a dozen tracked objects, the system lags).

Solution

After ultrasound and magnetic approaches (see legacy), it looks like lasers are not too bad!

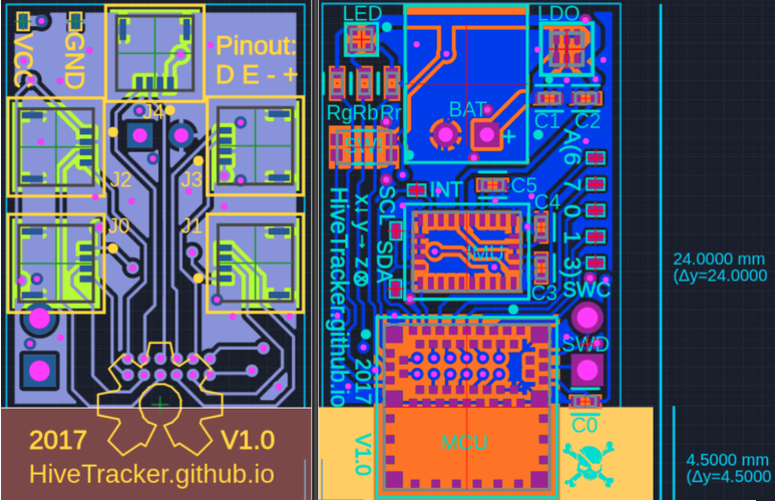

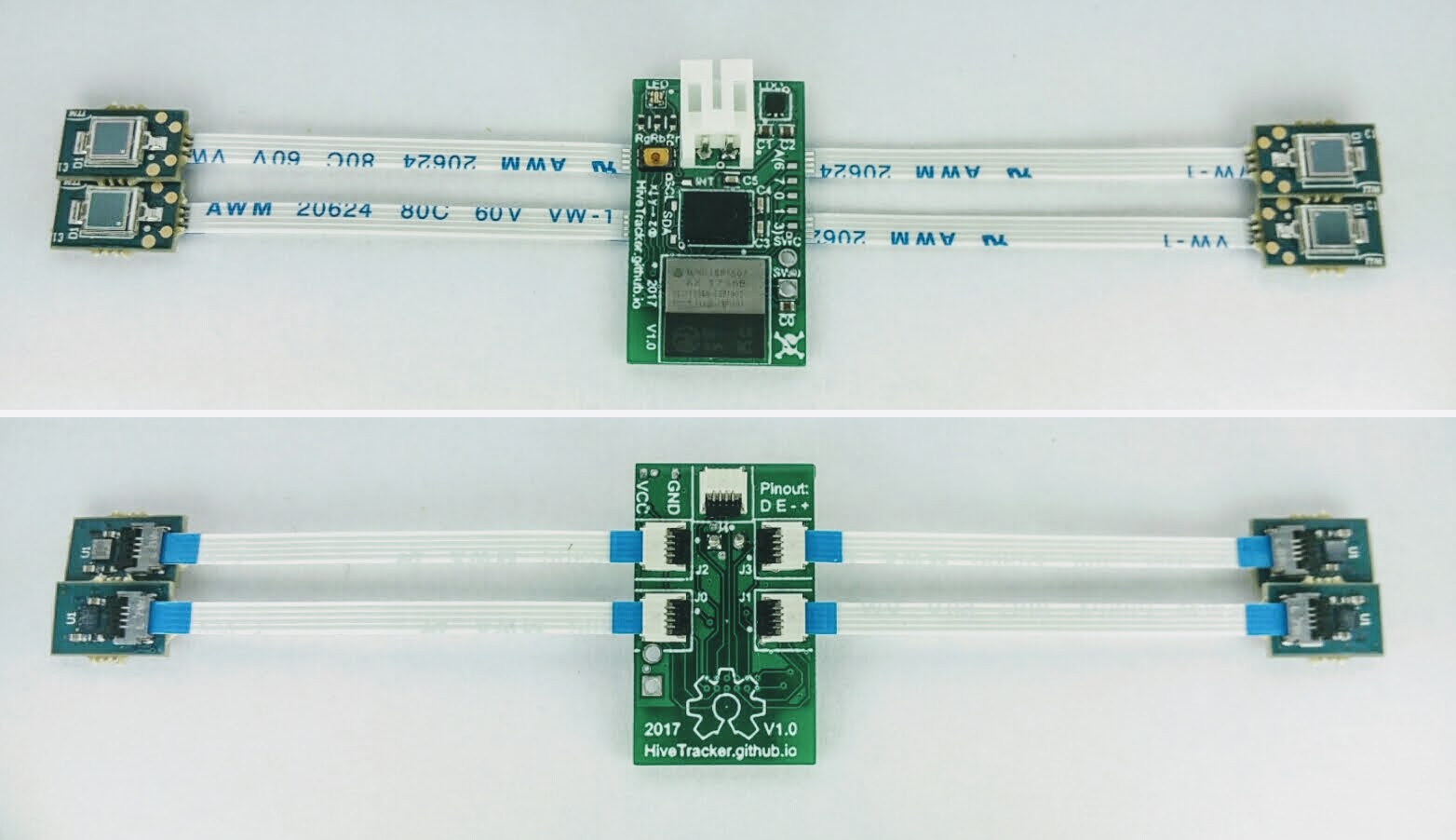

We made our own board that uses photo sensors to piggyback on the HTC Vive lighthouses.

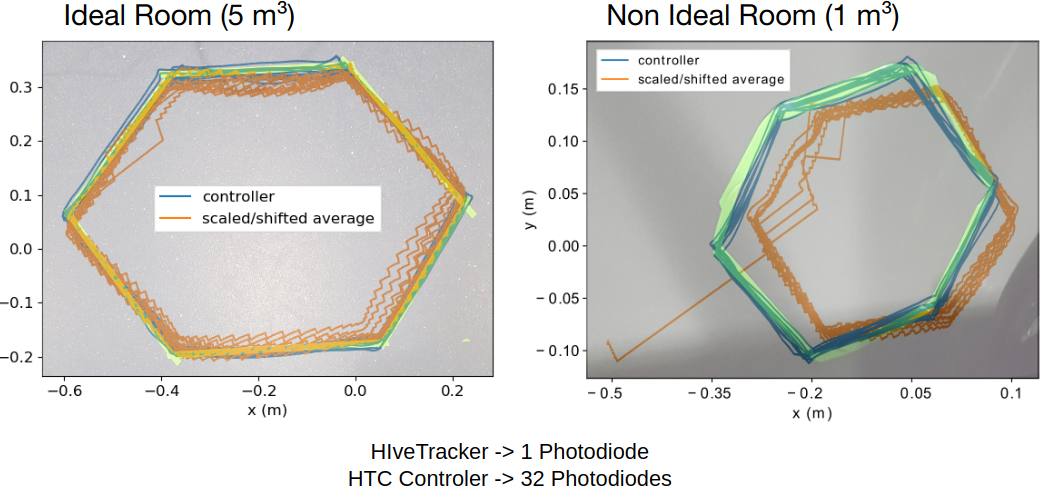

This project led to a first academic publication at the HCI conference Augmented Human. The published details are very useful, but don't miss the logs for even more.

Anyway, here is a demo:

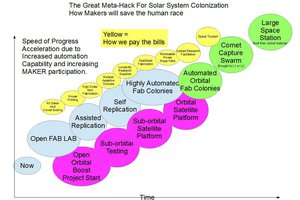

Wait, but why?

With backgrounds in HCI (human-computer interaction), neuroscience, robotics, and various other engineering fields, we all found the miniature 3d positioning challenge worth solving.

Some of the main applications we are interested in are listed here:

- fablab of the future: documenting handcrafts accurately and helping to teach them later through more than video (using haptic feedback, for example)

- robotic surgery controllers can be greatly improved (size, weight, accuracy...)

- dance performances can already be greatly improved with machine learning (example), but not that easily in real time.

- tracking the positions and brain activity of a "hive" of rats is probably the most complicated challenge; here is a simpler example using computer vision:

How does it work?

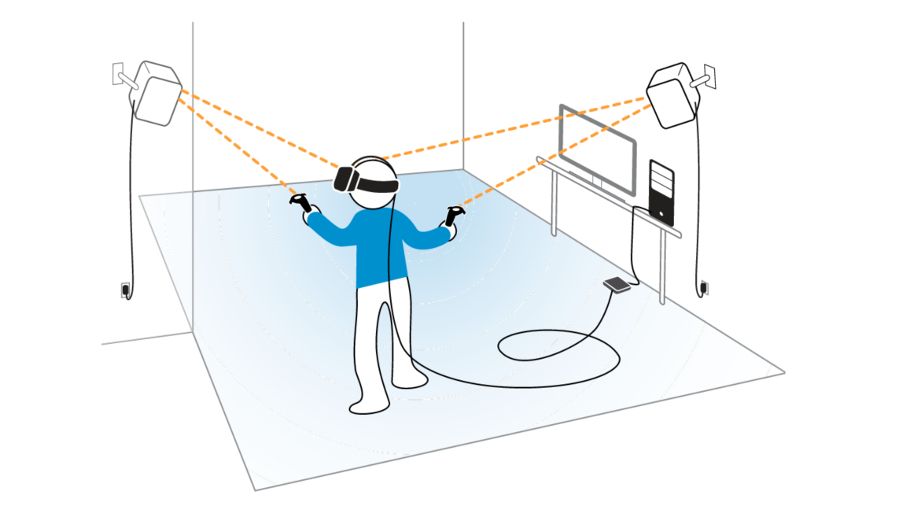

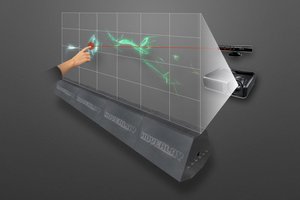

HTC Vive uses 2 lighthouses that emit laser signals accurate enough to perform sub-millimeter 3d positioning. The system looks like this:

This gif summarizes the principle fairly well, but clicking on it will open an even better video:

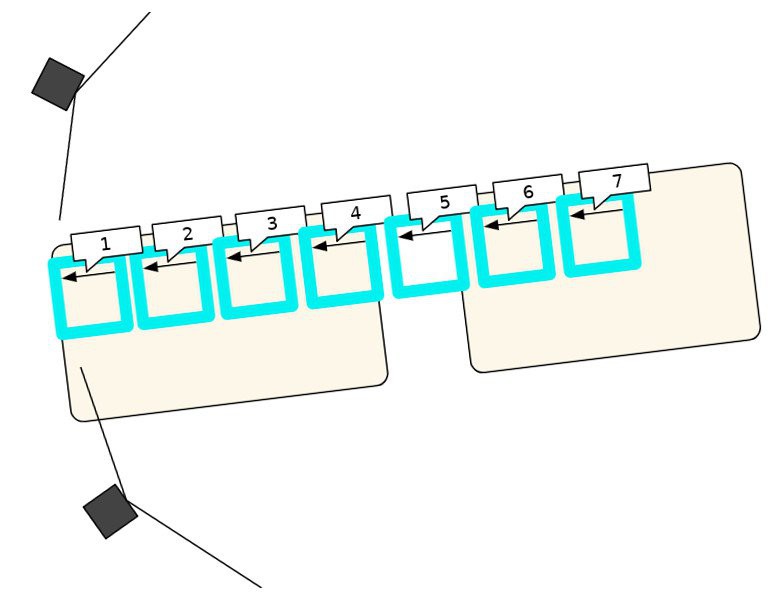

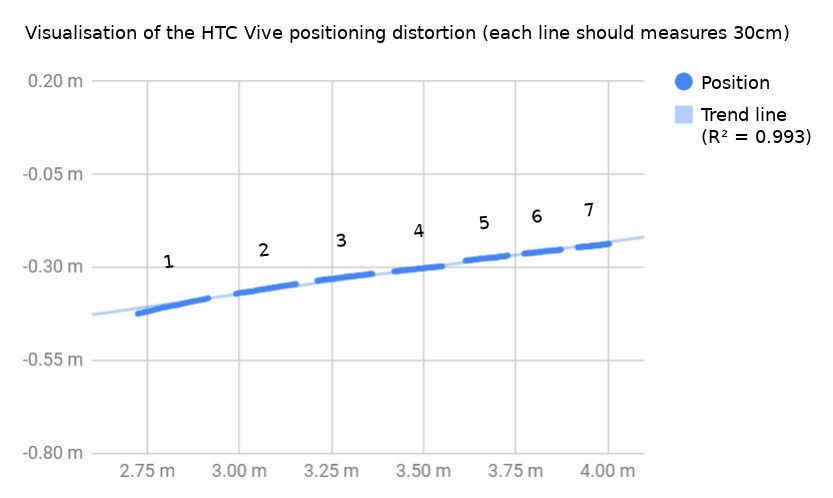

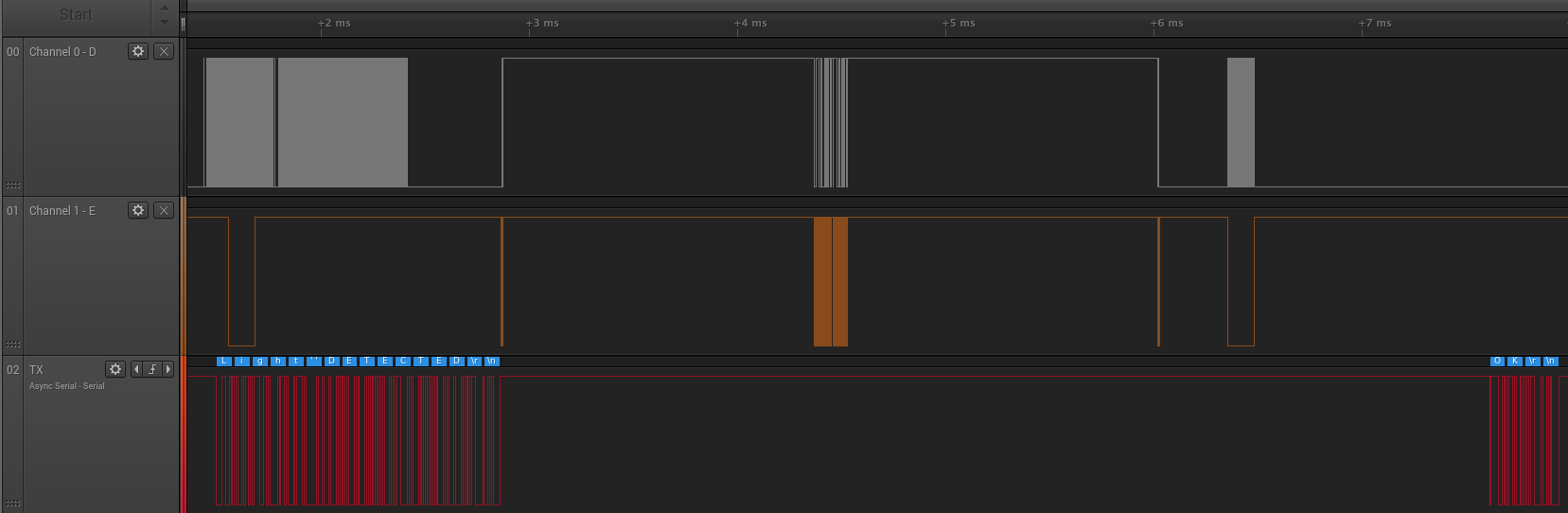

The idea is to measure the times at which this laser sweep hits us.

Since we know the rotation speed and receive a broadcast sync pulse, each hit time converts to an angle. We get 2 angles in 3d from each lighthouse, and estimate our position from the intersection (see details in the logs).

Our approach is to observe these light signals and estimate our position from them.
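To make the timing-to-position idea concrete, here is a minimal sketch in Python. It is not our firmware: the 60 Hz rotor speed, the convention that angles are measured from each base's forward (z) axis, the assumption that both bases share the world orientation, and all function names are illustrative assumptions. It converts a time delta to a sweep angle, builds a ray from 2 angles, and takes the midpoint of the shortest segment between the 2 rays as the position estimate.

```python
import math

ROTATION_HZ = 60.0  # assumed rotor speed, adjust for your base stations


def sweep_angle(dt_seconds, rotation_hz=ROTATION_HZ):
    """Angle (radians) swept between the sync pulse and the laser hit."""
    return 2.0 * math.pi * rotation_hz * dt_seconds


def ray_direction(h_angle, v_angle):
    """Unit ray from 2 sweep angles measured from the forward (z) axis.

    Each sweep defines a plane; the ray is where the 2 planes meet.
    """
    x, y = math.tan(h_angle), math.tan(v_angle)
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)


def _dot(a, b):
    return sum(i * j for i, j in zip(a, b))


def triangulate(p1, d1, p2, d2):
    """Midpoint of the shortest segment between rays p1 + t*d1 and p2 + s*d2."""
    w = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel, no unique intersection")
    t = (b * e - c * d) / denom
    s = (a * e - b * d) / denom
    q1 = tuple(p + t * k for p, k in zip(p1, d1))
    q2 = tuple(p + s * k for p, k in zip(p2, d2))
    return tuple((u + v) / 2.0 for u, v in zip(q1, q2))


if __name__ == "__main__":
    # Hypothetical setup: 2 bases, 2 m apart, both facing +z.
    base1, base2 = (-1.0, 0.0, 0.0), (1.0, 0.0, 0.0)
    ray1 = ray_direction(math.atan2(1.0, 2.0), 0.0)
    ray2 = ray_direction(math.atan2(-1.0, 2.0), 0.0)
    print(triangulate(base1, ray1, base2, ray2))
```

With real measurements the 2 rays rarely meet exactly because of noise, which is why the sketch uses the midpoint of the closest approach rather than a strict intersection. A real setup also needs each base's orientation, which comes from calibration.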

Trick alert!

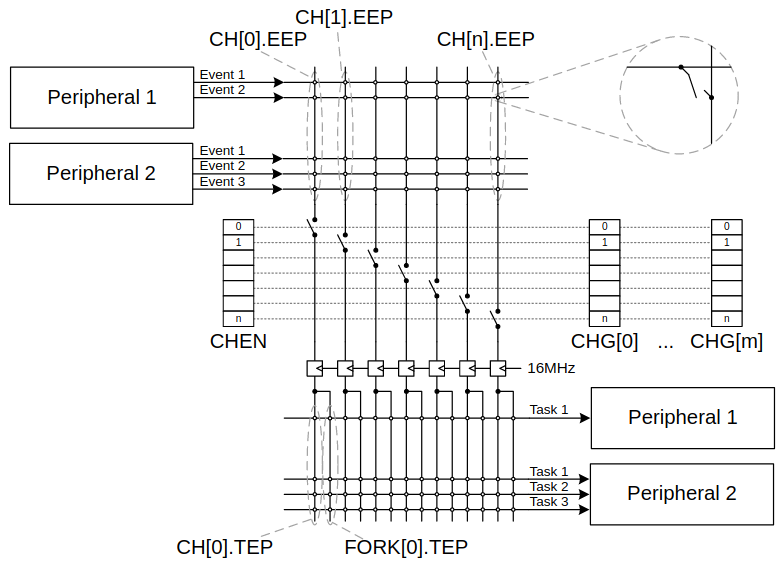

Those signals are way too fast to be observed by normal microcontrollers, but we found one (with BLE) that has a very particular feature called PPI. It allows parallel processing, a bit like an FPGA.

Check out the "miniaturization idea validation log page" to learn more about it.

In Progress

We're still fighting with a few problems, but as we're open sourcing everything, maybe the community will help?

Please contact us if you are interested in helping. Below are some of the current issues that need some love. We have a solution for each of them, but help from more expert hands can't hurt:

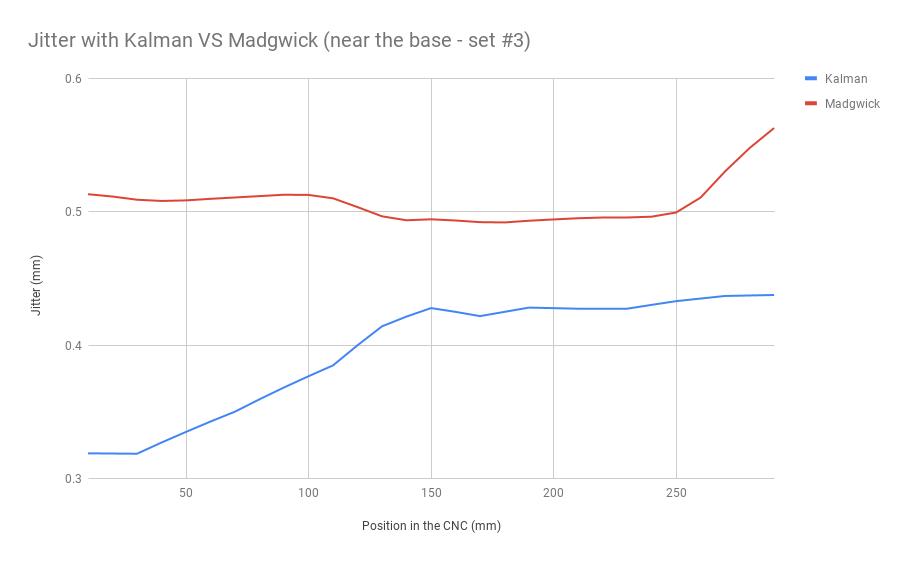

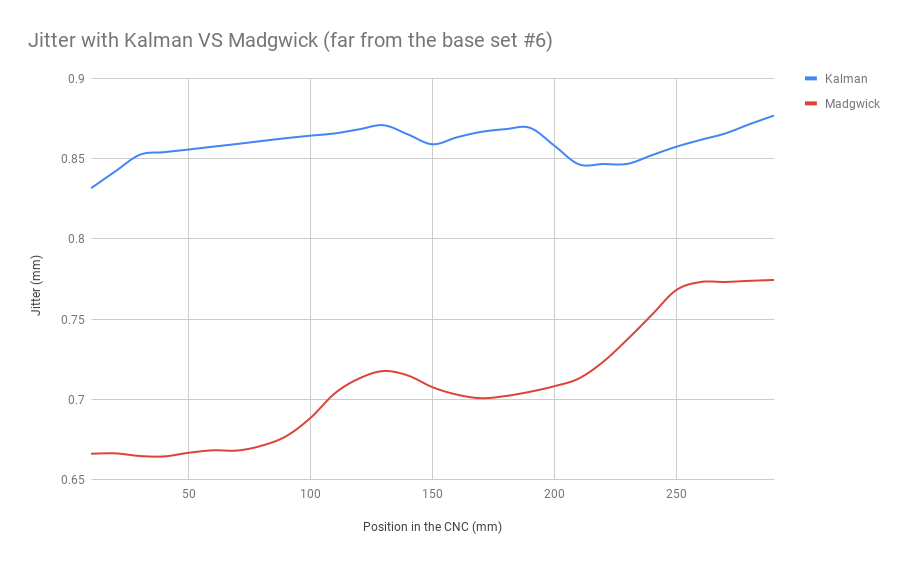

- Kalman filtering: we fuse the integrated accelerometer data with the optically obtained 3d position to improve our results. Our proof of concept is getting close to usable; now we need to port it.

- Calibration: when using the trackers for the first time, finding the positions and orientations of the bases is not trivial. We have a procedure, but it could be greatly improved...
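For readers new to the Kalman filtering item above, here is a minimal single-axis sketch in Python. It is not our proof of concept: the class name, the noise variances, and the constant-acceleration model are assumptions chosen for illustration. The accelerometer drives the prediction step (integration), and each optical position fix corrects the accumulated drift.

```python
class AxisKalman:
    """1-axis Kalman filter with state [position, velocity].

    accel_var and pos_var are assumed noise variances for the
    accelerometer and the optical position fix, respectively.
    """

    def __init__(self, accel_var=0.5, pos_var=0.01):
        self.p = 0.0  # position estimate
        self.v = 0.0  # velocity estimate
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance
        self.accel_var = accel_var
        self.pos_var = pos_var

    def predict(self, accel, dt):
        """Integrate one accelerometer sample over dt seconds."""
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        (p00, p01), (p10, p11) = self.P
        q = self.accel_var
        # P = F P F^T + Q, with F = [[1, dt], [0, 1]]
        n00 = p00 + dt * (p10 + p01) + dt * dt * p11 + 0.25 * dt ** 4 * q
        n01 = p01 + dt * p11 + 0.5 * dt ** 3 * q
        n10 = p10 + dt * p11 + 0.5 * dt ** 3 * q
        n11 = p11 + dt * dt * q
        self.P = [[n00, n01], [n10, n11]]

    def update(self, measured_pos):
        """Correct the state with one optical position measurement."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.pos_var           # innovation variance
        k0, k1 = p00 / s, p10 / s        # Kalman gain
        y = measured_pos - self.p        # innovation
        self.p += k0 * y
        self.v += k1 * y
        # P = (I - K H) P, with H = [1, 0]
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]


if __name__ == "__main__":
    kf = AxisKalman()
    dt = 0.01
    for step in range(1, 101):
        kf.predict(accel=1.0, dt=dt)       # fast accelerometer samples
        if step % 10 == 0:                 # slower optical fixes
            t = step * dt
            kf.update(0.5 * t * t)
    print(kf.p, kf.v)
```

One such filter per axis is the simplest arrangement. A full 3d version would also need the tracker's orientation to rotate accelerometer readings into the world frame and remove gravity, which this sketch leaves out.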

Who Are We?

This is a research project made with L❤VE by "Vigilante Intergalactic Roustabout Scholars" from @UCL, @UPV & @Sorbonne_U. It started in the hackerspaces of San Francisco, Singapore, Shenzhen and Paris, then took a new home in academia, where knowledge can stay public.

Contact:

- Updates: https://twitter.com/hive_tracker

- Mailing list: https://groups.google.com/forum/#!forum/hivetracker

- Team email: hivetracker+owners@googlegroups.com...

Cedric Honnet

Moritz Walter


ProgressTH

