The journey begins by reading through

https://github.com/NVIDIA-AI-IOT/trt_pose

Your biggest challenge is going to be installing the PyTorch dependencies as described on

https://docs.nvidia.com/deeplearning/frameworks/install-pytorch-jetson-platform/index.html

Some missing dependencies:

apt install python3-pip libopenblas-dev

pip3 install packaging

python3 -m pip install --no-cache $TORCH_INSTALL failed.

The TORCH_INSTALL variable has to point to the wheel file built for your Python version. There's a list of wheel files for the various Jetson images on

https://forums.developer.nvidia.com/t/pytorch-for-jetson/72048

The lion image was JetPack 4.6, which has Python 3.6, so the wheel file for that version was

torch-1.9.0-cp36-cp36m-linux_aarch64.whl
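A wheel built for the wrong Python version won't install, so it can help to check the file name before setting TORCH_INSTALL. This is a minimal sketch, not part of the NVIDIA instructions: it reads the CPython tag (the cp36 above) and platform tag from the end of a wheel file name, following the standard name-version-pythontag-abitag-platformtag.whl naming, and compares them with the running interpreter. The aarch64 default assumes a Jetson target; the function names are made up.

```python
import sys


def cpython_tag(version_info=sys.version_info):
    """Return the CPython wheel tag for an interpreter version, e.g. (3, 6) -> 'cp36'."""
    return "cp{}{}".format(version_info[0], version_info[1])


def wheel_matches(wheel_name, version_info=sys.version_info, arch="aarch64"):
    """Check a wheel file name's python and platform tags.

    Wheel names end in -<python tag>-<abi tag>-<platform tag>.whl, so the
    tags are read from the right-hand end of the dash-separated fields.
    """
    if not wheel_name.endswith(".whl"):
        return False
    fields = wheel_name[:-len(".whl")].split("-")
    if len(fields) < 5:
        return False
    python_tags = fields[-3].split(".")  # e.g. "cp36", or a set like "py2.py3"
    platform_tag = fields[-1]            # e.g. "linux_aarch64"
    return cpython_tag(version_info) in python_tags and platform_tag.endswith(arch)
```

Under the JetPack 4.6 python3 (3.6), wheel_matches("torch-1.9.0-cp36-cp36m-linux_aarch64.whl") is True; under any other minor version, or for an x86_64 wheel, it is False.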

The lion kingdom cut & pasted bits from the live_demo.ipynb file to create a standalone script. The live_demo requires many more dependencies:

python3 -m pip install Pillow # PIL errors

python3 -m pip install torchvision

pip3 install traitlets

pip3 install ipywidgets
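The list grows as more of the notebook gets pasted in, so reporting every missing module at once beats fixing one ImportError per run. This is a minimal sketch using importlib.util.find_spec; the module list is an assumption, mapping the packages above to their import names (Pillow is imported as PIL) plus jetcam and trt_pose themselves.

```python
import importlib.util

# Assumed import names for the live_demo dependencies; Pillow installs as PIL.
LIVE_DEMO_MODULES = ["torch", "torchvision", "PIL", "traitlets",
                     "ipywidgets", "jetcam", "trt_pose"]


def missing_modules(names):
    """Return the top-level module names the import system cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(LIVE_DEMO_MODULES)
    if missing:
        print("missing:", " ".join(missing))
    else:
        print("all live_demo modules found")
```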

You have to install the jetcam module with git

https://github.com/NVIDIA-AI-IOT/jetcam

The live_demo program only displays its results inside a web app, through the Jupyter notebook's widgets. Without bringing up a lot more bits, the best the standalone version can do is process JPG images.

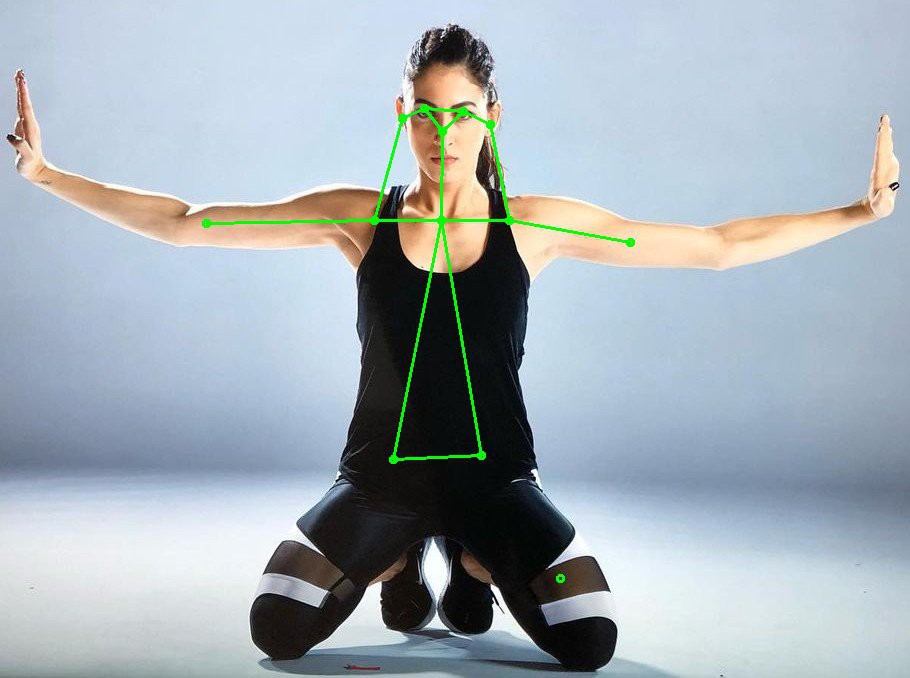

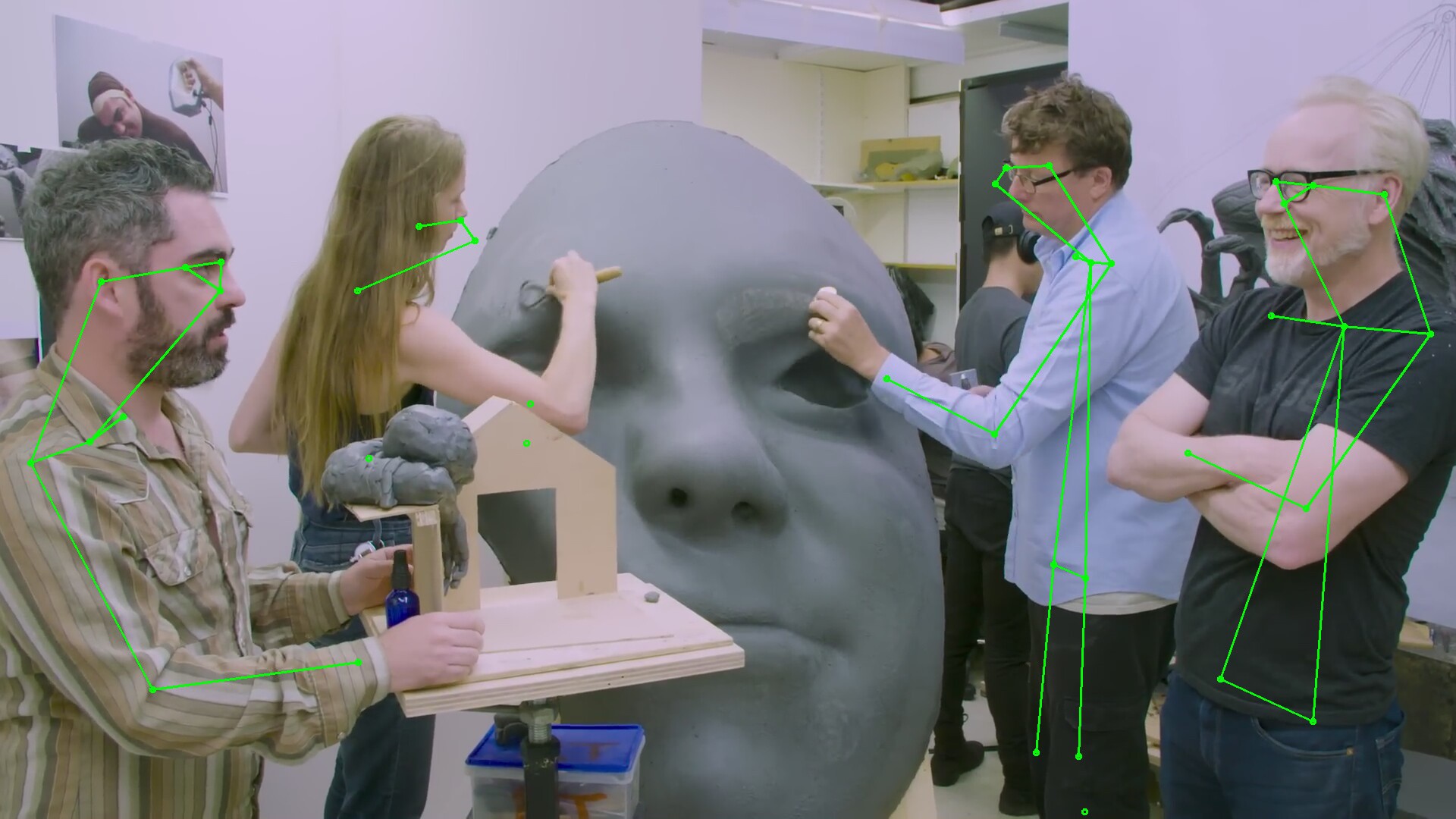
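The JPG-only mode boils down to a loop over image files. A minimal sketch of that driver, assuming a hypothetical infer callable that wraps the preprocessing and model code pasted from live_demo.ipynb and takes a file path; select_jpgs and process_jpgs are made-up names.

```python
from pathlib import Path


def select_jpgs(names):
    """Keep only .jpg/.jpeg names (any case), sorted so runs are repeatable."""
    return sorted(n for n in names if Path(n).suffix.lower() in (".jpg", ".jpeg"))


def process_jpgs(directory, infer):
    """Run the hypothetical infer(path) on each JPG file in directory.

    Returns a dict mapping file name to whatever infer returns,
    e.g. the keypoints or an annotated image to save.
    """
    directory = Path(directory)
    names = [p.name for p in directory.iterdir() if p.is_file()]
    return {name: infer(directory / name) for name in select_jpgs(names)}
```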

It supports multiple animals, but it's trash at detecting body parts. Changing the aspect ratio gives different but equally worthless results.
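The trt_pose models take square inputs (224x224 for resnet18_baseline_att_224x224_A), so a 4:3 or 16:9 frame has to be either stretched or padded. One common alternative to stretching is letterboxing: scale the frame to fit, pad the rest, then undo the padding and scale on the keypoints. This is a hypothetical sketch of that arithmetic, not code from live_demo; it assumes keypoints are already in model-input pixels, and the function names are made up.

```python
def letterbox(src_w, src_h, dst_w=224, dst_h=224):
    """Fit a src_w x src_h frame into dst_w x dst_h without distorting it.

    Returns (scale, new_w, new_h, pad_x, pad_y): resize the frame to
    new_w x new_h, then paste it at (pad_x, pad_y) on a blank dst canvas.
    """
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w = round(src_w * scale)
    new_h = round(src_h * scale)
    pad_x = (dst_w - new_w) // 2
    pad_y = (dst_h - new_h) // 2
    return scale, new_w, new_h, pad_x, pad_y


def to_source_coords(x, y, scale, pad_x, pad_y):
    """Map a keypoint in model-input pixels back to source-frame pixels."""
    return (x - pad_x) / scale, (y - pad_y) / scale
```

For a 640x480 frame, letterbox(640, 480) gives a scale of 0.35, a 224x168 resized image, and 28 rows of padding above and below.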

Running the demo without a browser gives a rough idea of the frame rate, which seemed to be in the 12-15fps range.
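Frame rate numbers like these depend on what goes inside the timed loop. A minimal timing sketch, assuming a hypothetical step callable that processes one frame (preprocessing, inference and keypoint parsing); the warmup calls are left out of the timing because the first CUDA and TensorRT calls are typically much slower than steady state.

```python
import time


def measure_fps(step, frames=100, warmup=10):
    """Call step() warmup times untimed, then return frames/second over frames calls."""
    for _ in range(warmup):
        step()  # first CUDA/TensorRT calls are typically slow; don't time them
    start = time.perf_counter()
    for _ in range(frames):
        step()
    elapsed = time.perf_counter() - start
    return frames / elapsed


def frame_budget_ms(fps):
    """Milliseconds available per frame at a given frame rate."""
    return 1000.0 / fps
```

At 12-15fps the budget is roughly 67 to 83 ms per frame, and 5fps is 200 ms. If step only launches GPU work, ending it with torch.cuda.synchronize() keeps the timer from stopping before the frame is actually done.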

There is another program which exposes a rough C++ API for trt_pose, but it uses the same model.

https://github.com/spacewalk01/tensorrt-openpose

Acceptable quality seems to require the original OpenPose at 5fps. The Jetson might be good enough to run EfficientDet & face recognition on the truckcam, but it's not good enough for rep counting & camera tracking. The most cost effective solution is going to be a gaming laptop rather than any embedded confuser.

lion mclionhead

