-

Portrait mode & HDMI tapping

05/17/2020 at 19:10

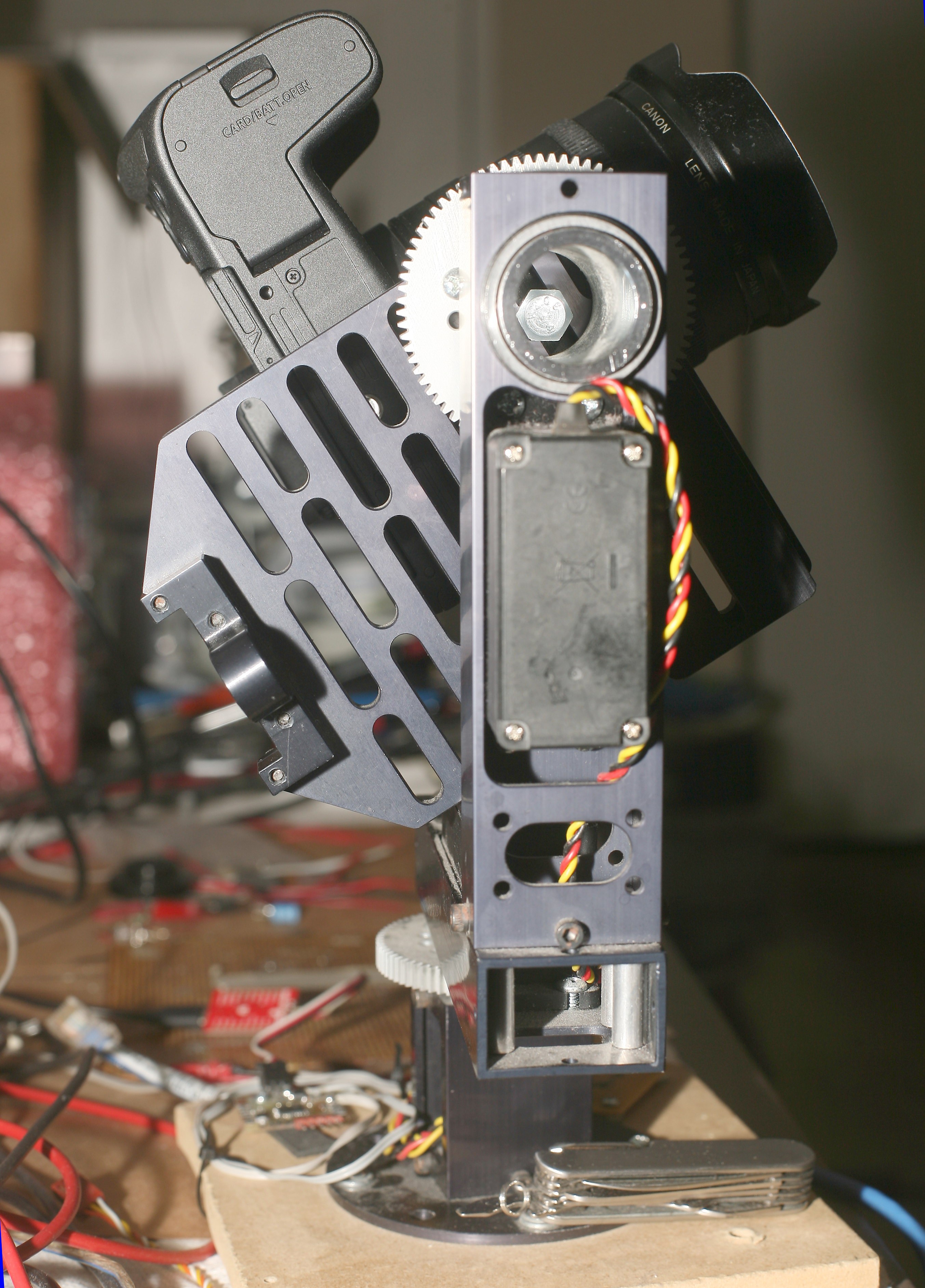

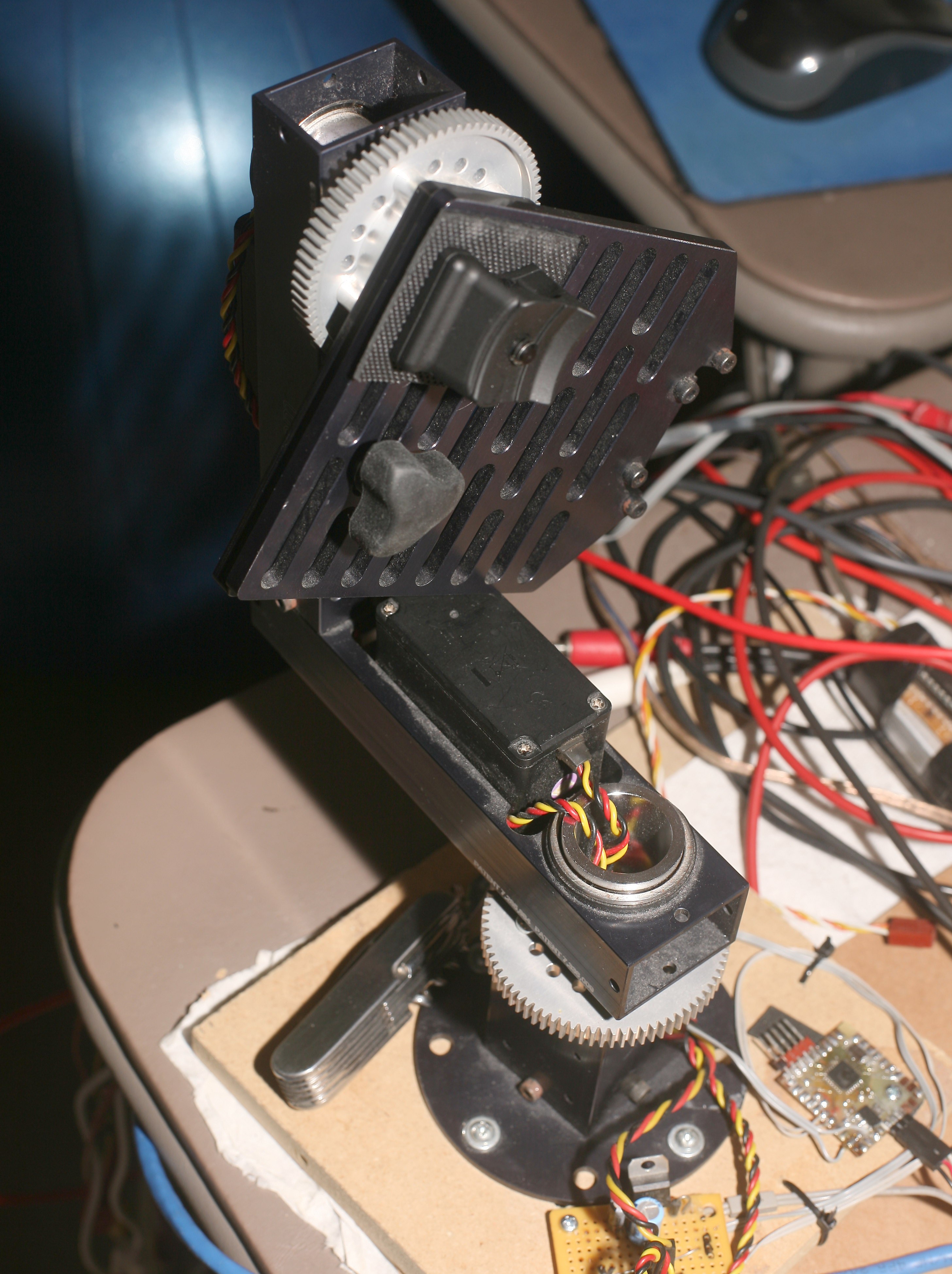

The tracker needs to support still photos in portrait mode. Portrait mode is never used for video, at least by these animals. A few days of struggle yielded this arrangement for portrait mode. The servo mount may actually be designed to go into this configuration.

The mount mechanically jams before it can smash the camera. Since it takes a long time to set up portrait mode, still photos would be the 1st 30 minutes & video the 2nd 30 minutes of a model shoot.

News flash: openpose can't handle rotations. It can't detect a lion standing on its head, & it starts falling over with just 90 degree rotations. The video has to be rotated before being fed to openpose.
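
Rotating the frames means the keypoints come back in the rotated frame's coordinates & have to be mapped back before they can drive the servos. A minimal sketch in pure Python, assuming frames are lists of pixel rows & that a clockwise turn makes the portrait image upright (the real direction depends on which way the mount rolls the camera). `rotate_cw` & `upright_to_camera` are hypothetical helper names.

```python
def rotate_cw(frame):
    """Rotate a frame (a list of pixel rows) 90 degrees clockwise."""
    # Reversing the row order, then transposing, is a clockwise turn.
    return [list(row) for row in zip(*frame[::-1])]


def upright_to_camera(x_r, y_r, camera_height):
    """Map a keypoint found in the rotated (upright) frame back to pixel
    coordinates in the original camera frame.

    rotate_cw moves the camera pixel (x, y) to (camera_height - 1 - y, x),
    so this is the inverse of that mapping.
    """
    return y_r, camera_height - 1 - x_r
```

The rotated frame is what gets fed to openpose; only the keypoints, not the pixels, need the inverse mapping.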


https://www.amazon.com/gp/product/B0876VWFH7

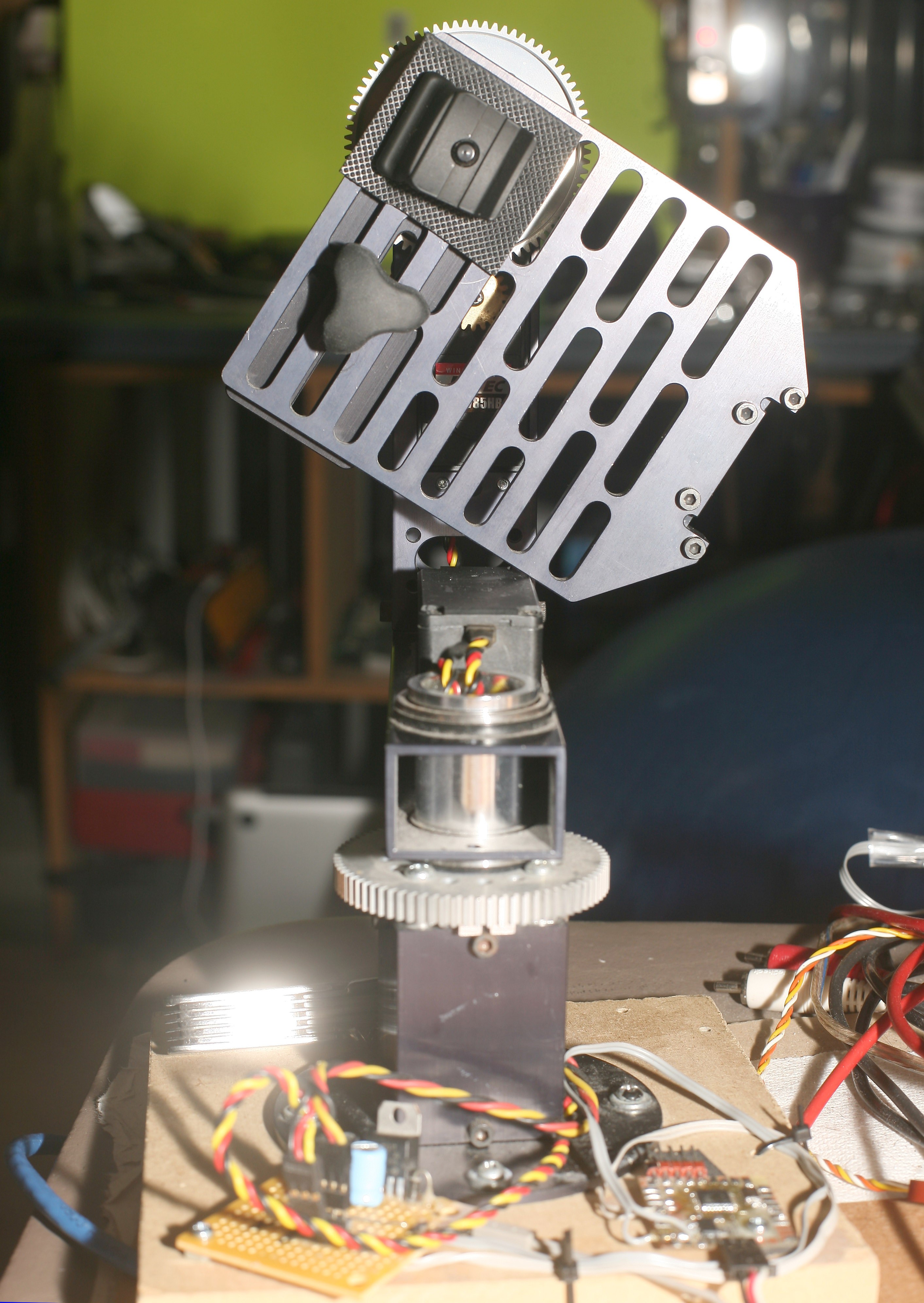

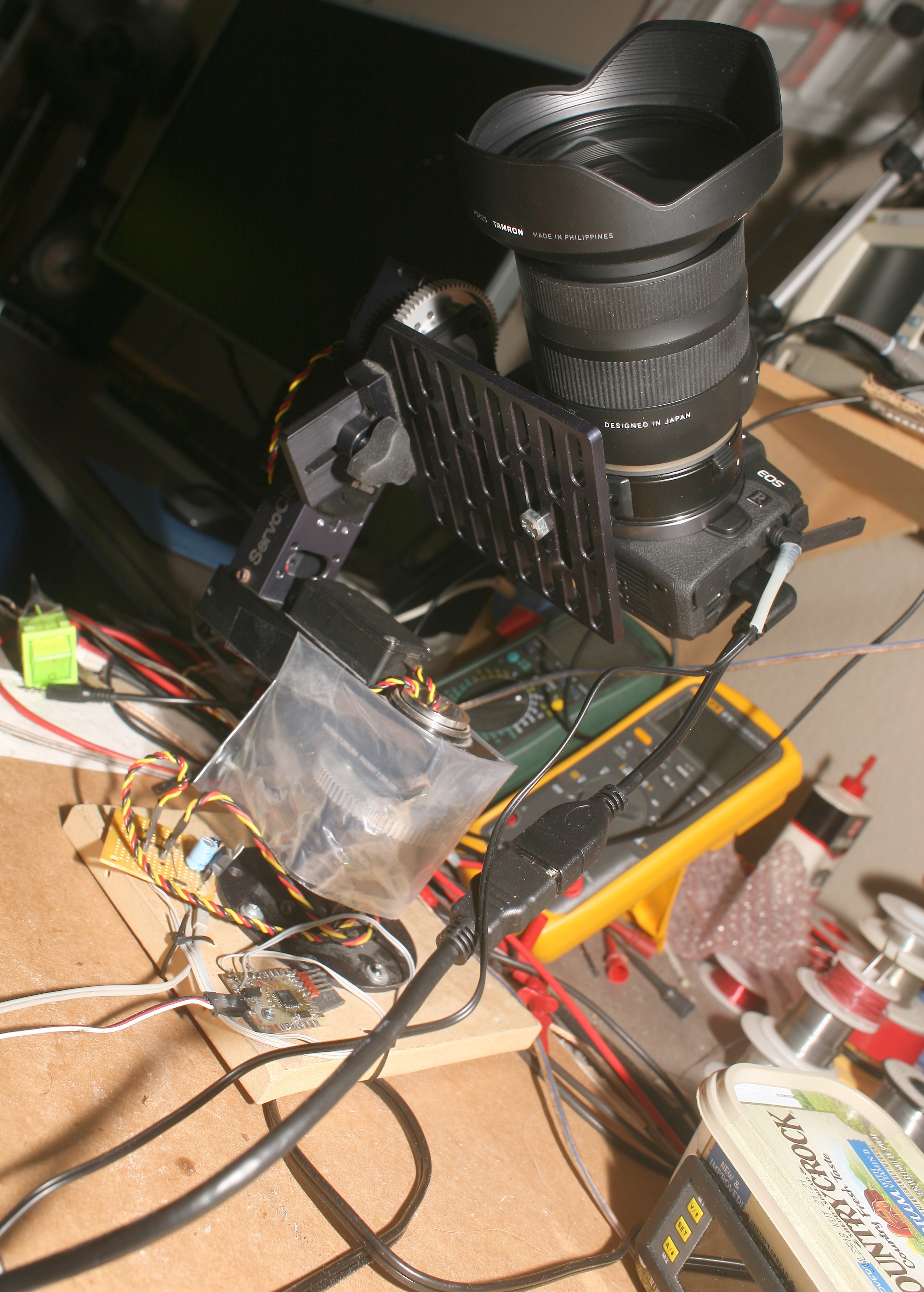

Also installed were the HDMI video feed & the remote shutter control. These 2 bits achieved their final form. Absolutely none of the EOS RP's computer connections ended up useful. Note the gears gained a barrier to keep the cables out.

News flash: the EOS RP can't record video when it's outputting a clean HDMI signal. The reason is that clean HDMI output makes it show a parallel view on the LCD while sending the clean signal to HDMI. This uses a 2nd channel that would normally be used for recording video, & there's no way to turn it off.

When outputting clean HDMI, the only recording option is on the laptop. Helas, the $50 HDMI board outputs real crummy JPEGs at 29.97fps or 8 bit YUYV at 5fps. It's limited by USB 2.0 bandwidth, so it's no good for pirating video or any other recording.
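
The USB 2.0 limit can be checked with a little arithmetic. YUYV (YUV 4:2:2) stores 2 bytes per pixel, & a high-bandwidth isochronous USB 2.0 endpoint, which many UVC capture devices stream over, tops out at 3 × 1024 bytes per 125 µs microframe, about 24.6 MB/s. The sketch below assumes the board captures at 1920x1080; the resolution isn't stated here.

```python
# Theoretical ceiling of one high-bandwidth isochronous USB 2.0 endpoint:
# 3 transactions x 1024 bytes per microframe, 8000 microframes per second.
USB2_ISO_MAX_BYTES_PER_S = 3 * 1024 * 8000  # 24,576,000 bytes/s


def yuyv_bytes_per_second(width, height, fps):
    """Uncompressed YUYV (4:2:2) uses 2 bytes per pixel."""
    return width * height * 2 * fps


for fps in (5, 29.97):
    rate = yuyv_bytes_per_second(1920, 1080, fps)  # assumed 1080p capture
    fits = rate <= USB2_ISO_MAX_BYTES_PER_S
    print(f"{fps:>6} fps: {rate / 1e6:6.1f} MB/s, fits: {fits}")
```

At 5fps that's 20.7 MB/s, which fits. At 29.97fps it's about 124.3 MB/s, more than double even the raw 480 Mbit/s (60 MB/s) signaling rate, so full framerate has to go through JPEG compression.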


The mighty Tamron 17-35mm was the next piece. It was the lion kingdom's 1st lens in 12 years. The lion kingdom relied on a 15mm fisheye for its entire wide angle career. It was over $500 when it was new. It was discontinued in 2011 & used ones are now being sold for $350. Its purchase was inspired by a demo photo with the 15mm on an EOS 1DS.

Defishing the 15mm in software gave decent results for still photos, but less so for video. There will never be a hack to defish the 15mm in the camera.
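
Defishing in software is an inverse mapping: for every pixel of the rectilinear output, compute its angle off the optical axis, then find where the fisheye put that angle. A minimal sketch, assuming an equidistant fisheye model (r = f·θ). The 15mm's real projection & both focal lengths in pixels would have to be calibrated. `rectilinear_to_fisheye` is a hypothetical name.

```python
import math


def rectilinear_to_fisheye(x, y, f_rect, f_fish):
    """Find where a pixel of the defished (rectilinear) output comes from
    in the fisheye source image.

    x, y are offsets from the optical center in pixels. Assumes an
    equidistant fisheye (r = f * theta); real lenses may differ.
    """
    r_rect = math.hypot(x, y)
    if r_rect == 0.0:
        return 0.0, 0.0
    theta = math.atan(r_rect / f_rect)  # angle off the optical axis
    r_fish = f_fish * theta             # equidistant projection
    scale = r_fish / r_rect
    return x * scale, y * scale
```

Toward the edges, tan θ grows much faster than θ, so each fisheye pixel gets stretched over several output pixels & the corners lose resolution.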


With the HDMI tap, it could finally take pictures & record video through the camera. The tracker did its best to make some portraits. The tracking movement caused motion blur, so a large deadband is key to freezing the camera movement. Portrait mode still needs faster horizontal movement, because it has less horizontal room.

Openpose has no way to detect the outline of an animal. For the head, it only gives point keypoints like the eyes, so the size of the head has to be estimated from the shoulder positions. The estimate gets inaccurate if the animal bends over. Still, openpose has proven about as good at detecting a head as a dedicated face tracker.
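
Estimating the head size from the shoulders can be sketched as below. It assumes openpose's BODY_25 output (index 2 is the right shoulder, 5 the left) as (x, y, confidence) triples with confidence 0 for missing parts. `HEAD_TO_SHOULDER_RATIO` is a hypothetical calibration factor, not a measured one. Because the estimate rides on the apparent shoulder width, it goes wrong whenever the shoulders stop looking like a standing animal's, such as when it bends over.

```python
import math

# openpose BODY_25 indices for the shoulders.
R_SHOULDER = 2
L_SHOULDER = 5

HEAD_TO_SHOULDER_RATIO = 0.6  # hypothetical; needs calibrating


def estimate_head_size(keypoints):
    """keypoints: 25 (x, y, confidence) tuples; confidence 0 means not seen.

    Returns an estimated head height in pixels, or None if either
    shoulder is missing.
    """
    rx, ry, rc = keypoints[R_SHOULDER]
    lx, ly, lc = keypoints[L_SHOULDER]
    if rc == 0 or lc == 0:
        return None
    shoulder_width = math.hypot(rx - lx, ry - ly)
    return shoulder_width * HEAD_TO_SHOULDER_RATIO
```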

The tracker has options for different lenses. Longer lenses make it hunt; 50mm has been the limit for these servos. Adding a deadband reduces the hunting but makes it less accurate, so the subject will definitely need a large margin from the frame edges. For talking heads, the subject needs to be standing up for the tracker to estimate the head size.
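
A deadband in front of a proportional correction is a common way to stop hunting. A minimal sketch, assuming the error is in pixels from the desired position; `deadband` & `gain` are hypothetical per-lens tuning values. Inside the deadband the servo holds still. Outside it, only the part of the error sticking out past the deadband is corrected, so the subject can settle anywhere inside the deadband, which is where the lost accuracy comes from.

```python
def deadband_correction(error, deadband, gain):
    """Return a servo correction for a tracking error.

    error: signed distance from the target position, in pixels.
    deadband: errors this small or smaller are ignored.
    gain: fraction of the remaining error corrected per frame.
    """
    if abs(error) <= deadband:
        return 0.0  # close enough: hold still instead of hunting
    # Only correct the part of the error outside the deadband, so the
    # output is continuous as the error crosses the deadband edge.
    excess = error - deadband if error > 0 else error + deadband
    return gain * excess
```

A narrower lens magnifies every servo step, so it wants a smaller gain, a bigger deadband, or both.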

A corner case is if the entire body is in frame, but the lower section is obstructed. The tracker could assume the lower section is out of frame & tilt down until the head is at the top. In practice, openpose seems to create a placeholder when the legs are obstructed.

-

Tilt tracking

05/14/2020 at 19:36

Tilt tracking was a long, hard process, but after 4 years, it finally surrendered. The best solution ended up being to divide the 25 body parts from openpose into 4 vertical zones. Depending on which zones are visible, it tilts to track the head zone or all the zones, or just tilts up hoping to find the head. The trick is dividing the body into more than 2 zones. That allows a key state where the head is visible but only some of the zones below it are.
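
The zone logic can be sketched as follows. The grouping of the 25 BODY_25 keypoints into 4 zones & the use of the mean Y as the tilt target are assumptions for illustration, not necessarily the tracker's actual split. Keypoints are (x, y, confidence) triples with confidence 0 for parts openpose didn't find.

```python
# Hypothetical split of openpose's 25 BODY_25 keypoints into 4 vertical zones.
ZONES = [
    {0, 15, 16, 17, 18},               # head: nose, eyes, ears
    {1, 2, 3, 4, 5, 6, 7},             # neck, shoulders, arms
    {8, 9, 10, 12, 13},                # hips, knees
    {11, 14, 19, 20, 21, 22, 23, 24},  # ankles, feet
]


def tilt_target(keypoints):
    """Decide what to tilt toward.

    Returns ("up", None) when no head is visible, ("all", y) when every
    zone is visible, or ("head", y) when the head is visible but some
    zone below it is not. y is the mean Y of the chosen keypoints.
    """
    visible = [
        [kp[1] for i, kp in enumerate(keypoints) if i in zone and kp[2] > 0]
        for zone in ZONES
    ]
    if not visible[0]:
        return "up", None  # no head: tilt up hoping to find it
    if all(visible):
        ys = [y for zone_ys in visible for y in zone_ys]
        return "all", sum(ys) / len(ys)
    return "head", sum(visible[0]) / len(visible[0])
```

With only 2 zones, "head visible" & "everything visible" would be the only useful states; the middle zones are what let it tell a partly visible body from a whole one.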

The composition deteriorates as the subject gets closer, but it does a good job tracking the subject even with only the viewfinder. It tries to adjust the head position based on the head size, but head size can only be an estimation.

It supports 3 lenses, but each lens needs different calibration factors, especially the relation between head size & head position. The narrower the lens, the fewer body parts it sees & the more it just tracks the head, & openpose becomes less effective as fewer body parts are visible. The narrower the lens, the slower it also needs to track, since the servos overshoot. Since only the widest lens will ever be used in practice, only the widest lens is dialed in.
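
Per-lens calibration could be kept in a small table like the one below. The 3 focal lengths, the field names & all the numbers are hypothetical placeholders. The only relationships taken from the text are that narrower lenses need slower tracking & that the target head position depends on the estimated head size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LensCalibration:
    focal_mm: int
    gain: float                  # fraction of the error corrected per frame
    head_position_factor: float  # head center below frame top, in head sizes

    def target_head_y(self, head_size):
        """Desired Y of the head center, in pixels from the top of frame."""
        return head_size * self.head_position_factor


# Hypothetical values; narrower lenses get a smaller gain so the servos
# don't overshoot.
LENSES = {
    17: LensCalibration(17, gain=0.5, head_position_factor=1.5),
    28: LensCalibration(28, gain=0.3, head_position_factor=1.2),
    50: LensCalibration(50, gain=0.15, head_position_factor=1.0),
}
```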

The servos & autofocus are real noisy. It still can't record anything.

All this is conjecture, since the mane application is with 2 humans & there's no easy way to test it with 2 humans. With 2 humans, it's supposed to use the tallest human for tilt & the average of all the humans for pan.

Pan continues to simply center on the average X position of all the detected body parts.
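
The multi-human rules can be sketched like this. Each person is a list of (x, y, confidence) keypoints. Pan uses the mean X of every detected body part, as described above, while "tallest" is interpreted here, as an assumption, as the person whose highest visible keypoint is nearest the top of the frame (image Y grows downward). The function names are hypothetical.

```python
def pan_target_x(people):
    """Mean X of every detected body part across all people."""
    xs = [x for person in people for (x, y, c) in person if c > 0]
    return sum(xs) / len(xs) if xs else None


def top_y(person):
    """Y of a person's highest visible keypoint (smaller Y is higher)."""
    ys = [y for (x, y, c) in person if c > 0]
    return min(ys) if ys else None


def tilt_subject(people):
    """Pick the person whose visible keypoints reach highest in the frame."""
    seen = [p for p in people if top_y(p) is not None]
    return min(seen, key=top_y) if seen else None
```

Averaging body parts rather than people means a fully visible human pulls the pan harder than a partly visible one.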

There is a case for supporting a narrow lens for portraits & talking heads. It would need a deadband to reduce the oscillation & the deadband would require the head to be closer to the center. A face tracker rather than openpose would be required for a talking head.

-

The EOS RP arrives

05/10/2020 at 20:33

The next camera entry was the mighty EOS RP. Its advantages were better light sensitivity, a wider field of view from a full frame sensor, faster autofocus, & preview video over USB. Combined with the junk laptop's higher framerate, it managed real good tracking without the janky Escam.

The preview video can be viewed by running

```shell
gphoto2 --capture-movie --stdout | mplayer -
```

In video mode, the preview video is 1024x576 JPEG photos, coming out at the camera's framerate. If it's set for 23.97, they come out at 23.97. If it's set for 59.94, they come out at 59.94. Regardless of the shutter speed, the JPEG frames are duplicated so they always come out at 23.97 or faster. Each copy is encoded differently by a constant bitrate algorithm, so there's no way to dedupe them by comparing files.
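
Since the preview is a bare sequence of JPEG images, whatever reads gphoto2's stdout has to find the frame boundaries itself. A minimal sketch that splits the stream on the JPEG start-of-image (FF D8) & end-of-image (FF D9) markers. It assumes the frames carry no embedded thumbnails, whose own markers would cut a frame short with this simple scan. The function names are hypothetical.

```python
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_jpegs(buffer):
    """Split concatenated JPEG data into complete frames.

    Returns (frames, leftover), where leftover is the start of an
    incomplete frame to prepend to the next read.
    """
    frames = []
    while True:
        start = buffer.find(SOI)
        if start < 0:
            # Keep a trailing 0xFF in case it is the first half of an SOI.
            return frames, (buffer[-1:] if buffer.endswith(b"\xff") else b"")
        end = buffer.find(EOI, start + 2)
        if end < 0:
            return frames, buffer[start:]
        frames.append(buffer[start:end + 2])
        buffer = buffer[end + 2:]


def iter_frames(stream, chunk_size=65536):
    """Yield complete JPEG frames from a binary stream such as stdin."""
    pending = b""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            return
        frames, pending = split_jpegs(pending + chunk)
        yield from frames
```

In a tracker fed by `gphoto2 --capture-movie --stdout` through a pipe, `iter_frames(sys.stdin.buffer)` would hand each frame to the JPEG decoder & then openpose.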

Once streaming begins, most of the camera interface is broken until the USB cable is unplugged or the tracker program is killed. There is some limited exposure control in video mode. In still photo mode, the preview video is 960x640 & the camera interface is completely disabled.

Whenever USB is connected, there's no way to record video. There is a way to take still photos by killing gphoto2, running it again as

```shell
gphoto2 --set-config capturetarget=1 --capture-image
```

& resuming the video preview. It apparently has only a single video encoder, which it has to share between preview mode, video compression & still photos.
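
The kill, capture & resume cycle could be scripted with Python's `subprocess` module, as sketched below. It only makes sense in still photo mode, since in video mode the camera has to be power cycled before gphoto2 can reconnect. The commands are the ones given above; the helper names are hypothetical. Whatever reads the preview pipe has to keep draining it, or gphoto2 will stall once the pipe fills.

```python
import subprocess

PREVIEW_CMD = ["gphoto2", "--capture-movie", "--stdout"]
STILL_CMD = ["gphoto2", "--set-config", "capturetarget=1", "--capture-image"]


def start_preview(cmd=PREVIEW_CMD):
    """Start the USB preview; JPEG frames arrive on proc.stdout."""
    return subprocess.Popen(cmd, stdout=subprocess.PIPE)


def take_still(preview, still_cmd=STILL_CMD, preview_cmd=PREVIEW_CMD):
    """Stop the preview, take one still photo, then restart the preview.

    Returns (exit status of the capture, new preview process).
    """
    preview.terminate()  # the preview has to stop before a still can be taken
    preview.wait()
    status = subprocess.run(still_cmd).returncode
    return status, start_preview(preview_cmd)
```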

In video mode, the camera has to be power cycled to get gphoto2 to reconnect to it after killing the program. In still photo mode, it seems to reconnect after taking a still photo. The lion kingdom will probably use 30 minutes in video mode & 30 minutes in still photo mode in practical use, so a method would be required to remotely trigger gphoto2 if USB previewing was the goal.

Helas, the lion kingdom decided not to use USB previewing at all. The next step is to try an HDMI capture board.

Added more safety features to keep the mount from destroying the camera. Manely, it goes into a manual alignment mode when it starts, only starts tracking after the user enables it, & limits the step size. Unfortunately, initialization still sometimes glitches to a random angle.
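
The safety rules amount to a gate & 2 clamps in front of each servo command. A minimal sketch; the class name, the angle limits & the step limit are hypothetical, & it doesn't address the initialization glitch.

```python
class SafeAxis:
    """Guards one servo axis against moves that could damage the camera."""

    def __init__(self, min_angle, max_angle, max_step):
        self.min_angle = min_angle
        self.max_angle = max_angle
        self.max_step = max_step
        self.tracking = False  # start in manual alignment mode

    def enable_tracking(self):
        """Called when the user has finished aligning the mount."""
        self.tracking = True

    def next_angle(self, current, target):
        """Return the next angle to command, in degrees."""
        if not self.tracking:
            return current  # ignore the tracker until the user enables it
        # Limit how far one update can move the servo...
        step = max(-self.max_step, min(self.max_step, target - current))
        # ...and never leave the mechanically safe range.
        return max(self.min_angle, min(self.max_angle, current + step))
```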

Tracking tilt continues to be a big problem. It can be used with some kind of manual tilt control, but there should be a way to do it automatically.

-

Dedicated camera ideas

01/31/2020 at 07:33

1 of the many deal breakers with this project was the autofocus on the DSLR completely failing to track the subject. The mane requirement was the best low light sensitivity, & an ancient EOS T4I with prime lenses was the only option.

It finally occurred to the lion kingdom that a tracking mount probably needs a dedicated camera specially equipped for a tracking mount, rather than a DSLR. With much videography being airshows & rockets, there was also a growing need for something with stabilization, faster autofocus, & sharper focus. Lions were once again getting nowhere near the quality of modern videos.

The Jennamarbles dog videos are always razor sharp & perfectly lit. It's astounding how sharp the focus remanes while tracking a dog. These videos look a lot more professional than all the other meaningless gootube videos, just because of the focus & the even lighting. As much as lions like blown out colors, what gives Jennamarbles a professional touch seems to be the washed out but even colors.

The internet doesn't really know whether she uses a Canon PowerShot G7 X Mark II or an EOS 80D. The internet definitely doesn't know what lens is on the EOS 80D.


This dog video appears to have a reflection of a small Powershot, unless her preference in men is larger than the usual oversized men. All the good shots seem to be coming from the Powershot.


This dog shot stood out for its even lighting & sharp focus, despite the motion.

-

Commercial solutions appear

07/05/2019 at 20:03

After months of spending 4 hours/day commuting instead of working on the tracking camera, a commercial solution from Hong Kong hit stores for $719.

https://www.amazon.com/dp/B07RY5KDX2/

The example videos show it doing a good job. Instead of a spherical camera or wide angle lens, it manages to track only by what's in its narrow field of view. This requires it to move very fast, resulting in jerky panning.

It isolates the subject from a background of other humans, recognizes paw gestures, & smartly tracks whatever part of the body is in view without getting thrown off. In the demos, it locks onto the subject from only a single frame of video rather than a thorough training set. It recognizes as little as an arm showing from behind an obstacle. Judging by the multicolored clothing in the demos, they're running several algorithms simultaneously: a face tracker, a color tracker, & a pose tracker. The junk laptop would have a hard time just doing pose tracking.

The image sensor is an awful Chinese one. It would never do in a dim hotel room. Chinese manufacturers are not allowed to use any imported parts. The neural network processor is not an NVidia but an indigenously produced HiSilicon Hi3559A. China's government is focused on having no debt, but how's that working in a world where credit is viewed as an investment in the future? They can't borrow money to import a decent Sony sensor, so the world has to wait for China's own sensor to match Sony.

It's strange that tracking cameras have been on quadcopters for years & are now slowly emerging on ground cameras, yet have never been used in any kind of production or replicated by any open source effort. There has also never been any tracking for higher end DSLR cameras; it's only been offered on consumer platforms.

-

Junk laptop arrives

06/08/2019 at 21:18

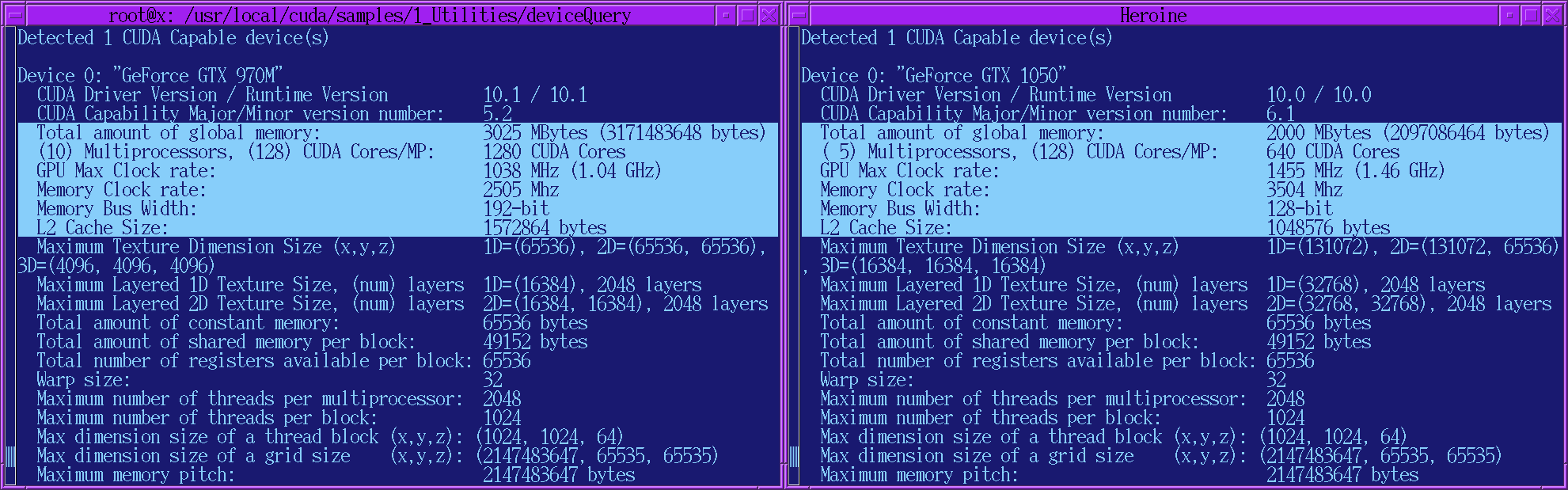

After living with a macbook that only did 3fps & a desktop which ran the neural network over a network, the lion kingdom obtained a gaming laptop with a GTX 970M that fell off a truck. The GTX 970M was much more powerful than the macbook's GT 750M & the desktop's GTX 1050, while the rest was far behind. Of course, the rest was a quad core 2.6GHz i7 with 12GB RAM. To an old timer, it's an astounding amount of power, just not comparable to the last 5 years.

Most surprising was how the GTX 970M had 50% more memory & 2x more cores than the GTX 1050 but lower clockspeeds. They traded clockspeed for parallelism to make it portable, implying clockspeed used more power than transistor count.
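
The usual first-order model of CMOS switching power supports that reading. Dynamic power is roughly $P \approx \alpha C V^2 f$, & a higher clock generally needs a higher supply voltage, so power grows much faster than linearly with clockspeed. Taking V as roughly proportional to f (a simplifying assumption) & N cores:

$$
P \propto N V^2 f \propto N f^3, \qquad \text{throughput} \propto N f
$$

With illustrative numbers, not the actual GPU specs, doubling the cores while dropping the clock to 0.7×:

$$
\text{throughput} \propto 2 \times 0.7 = 1.4, \qquad P \propto 2 \times 0.7^3 \approx 0.69
$$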

Pose tracking on a tablet using cloud computing was a failure. The network was never reliable enough. It desperately needed a laptop with more horsepower than the macbook.

The instructions on https://hackaday.io/project/162944-auto-tracking-camera/log/157763-making-it-portable were still relevant. After 2 days of compiling openpose from scratch with the latest libraries & porting countreps to a standalone Linux version, the junk laptop ran it at 6.5fps with the full neuron count & a 1280x720 webcam, with no more dropped frames. It was a lot better at counting than the tablet.

The mane problem was it quickly overheated. After some experimentation, 2 small igloo bars directly under the fans were enough to keep it cool. Even better would be a sheet of paper outlining where to put the igloo bars & laptop. Igloo bars may actually be a viable way to use the power of a refrigerator to cool CPUs.

-

Tracking problems

02/08/2019 at 23:13

Panning to follow standing humans is quite good. Being tall & narrow animals, humans have a very stable X as body parts come in & out of view. The Y is erratic. When they lie down, this reverses: the Y becomes stable & the X erratic. The next question is what body part is most often in view, & can body parts be ranked for tracking by how often they're visible?

It depends on how high the camera is. A truly automated camera needs to be on a jib with a computer controlling height. Anything else entails manual selection of the body part to track. With a waist height camera, the butt is the best body part to track. With an eye level camera, the head is the best body part to track.
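
Ranking body parts by visibility is a simple count over some recorded footage. A sketch, assuming each frame is a list of 25 (x, y, confidence) keypoints for one subject; the function name is hypothetical. Running it on footage shot at each camera height would show which body part wins at that height.

```python
from collections import Counter


def rank_by_visibility(frames):
    """Return keypoint indices, most often visible first.

    Body parts that were never visible are left out.
    """
    counts = Counter()
    for keypoints in frames:
        for index, (x, y, confidence) in enumerate(keypoints):
            if confidence > 0:
                counts[index] += 1
    return [index for index, _ in counts.most_common()]
```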

Lacking Z information or enough computing power to simultaneously track the viewfinder, the only option is adding a fixed offset to the head position. For the offset to be fixed, the camera has to always be at eye level, so there's no point in having tilt support. There's no plan to ever use head tracking.

The servocity mount doesn't automatically center, either. There needs to be a manual interface for the user to center it.

Autofocus has been bad.

It's all a bit less automatic than hoped. For the intended application, butt tracking would probably work best.

-

Rotating the escam

02/03/2019 at 19:56

Rotating the Escam 45 degrees & defishing buys a lot of horizontal range, but causes it to drop lions far away. The image has to be slightly defished to detect any of the additional edge room, which shrinks the center.
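
The extra horizontal range comes from laying the image diagonal across the horizon. For a w×h frame rotated by θ, the horizontal span is w|cos θ| + h|sin θ|. For the full 896x896 Escam frame at 45°, that's 896√2, about 1267 pixels instead of 896. How much of that span holds usable picture depends on the lens. A quick check, with a hypothetical function name:

```python
import math


def rotated_width(width, height, degrees):
    """Horizontal span, in pixels, of a width x height frame rotated by degrees."""
    t = math.radians(degrees)
    return width * abs(math.cos(t)) + height * abs(math.sin(t))


print(round(rotated_width(896, 896, 45)))  # the diagonal is now horizontal
```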


The unrotated, unprocessed version does better in the distance & worse in the edges, but covers more of the corners. Another idea is defishing every other rotated frame, giving the best of both algorithms 50% of the time. The problem is the pose coordinates would alternate between the 2 algorithms, making the camera oscillate. It would have to average when the 2 algorithms were producing a match. When it went from 1 algorithm to 2 algorithms, there would be a glitch.
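
The averaging step for that alternating scheme might look like the sketch below, assuming both pipelines' keypoints have already been mapped into the same coordinates as (x, y, confidence) triples. The function name is hypothetical. It also shows where the glitch comes from: when a body part goes from 1 source to 2, its position jumps by half the disagreement between the algorithms.

```python
def merge_keypoints(a, b):
    """Combine the latest keypoints from the two alternating pipelines."""
    merged = []
    for (ax, ay, ac), (bx, by, bc) in zip(a, b):
        if ac > 0 and bc > 0:
            # Both algorithms found it: average the positions.
            merged.append(((ax + bx) / 2, (ay + by) / 2, min(ac, bc)))
        elif ac > 0:
            merged.append((ax, ay, ac))
        elif bc > 0:
            merged.append((bx, by, bc))
        else:
            merged.append((0.0, 0.0, 0.0))  # neither found it
    return merged
```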

In practical use, the camera is going to be in the corner of a cheap motel room, so the maximum horizontal angle is only 90 degrees, while longer distance is desirable. The safest solution is neither rotating nor defishing.

Another discovery about the Escam is if it's powered up below 5V, it ends up stuck in night vision B&W mode. It has to be powered up at 5V to go into color mode. From then on, it can work properly below 5V. So the USB hub has to be powered off a battery before plugging it into the laptop. Not sure if openpose works any better in color, but it's about getting your money's worth.

The next problem is the Escam can only approximate what the DSLR sees, so there's parallax error. There's too much error to precisely put the head at the top of the frame.
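
The size of the parallax error follows from the offset between the 2 lenses: a point at distance d, seen from cameras offset by b, appears roughly atan(b/d) apart in angle. The 10 cm offset below is a hypothetical number, not a measurement of this mount. The point is that the error roughly doubles each time the distance halves, so a single fixed correction can only be right at 1 distance.

```python
import math


def parallax_degrees(offset_m, distance_m):
    """Angle between the Escam's and the DSLR's view of the same point."""
    return math.degrees(math.atan2(offset_m, distance_m))


for distance in (4.0, 2.0, 1.0):
    print(f"{distance} m: {parallax_degrees(0.10, distance):.1f} degrees")
```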

After much work to get the power supply, servo control, escam mount, & DSLR mount barely working, there was just enough data to see the next problems. Getting smooth motion is a huge problem. Body parts coming in & out of view is a huge problem. Parallax error made it aim high.

-

Openpose with the Escam

02/02/2019 at 08:30

The Escam was put back into its ugly case, in order to use the tripod mount & protect it. The serial port was left exposed, to get the coveted IP address.


Pretty scary, how much the Escam can see in the dark. It could easily see ghosts. Lions look like real lions. Lining up the Escam with the DSLR is quite challenging.

To get raw RGB frames from the RTSP stream to another program, there's

```shell
ffmpeg -i rtsp://admin:password@10.0.2.78/onvif1 -f rawvideo -pix_fmt rgb24 pipe:1 > /tmp/x
```

It also works on macOS.
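
On the receiving end, rgb24 raw video is just width × height × 3 bytes per frame with no headers, so the reader has to know the frame size in advance. A sketch assuming the full 896x896 Escam frame; `read_exact`, `rgb_frames` & `pixel` are hypothetical helpers. The input could be ffmpeg's stdout directly, or `/tmp/x` if it's made into a FIFO with `mkfifo`.

```python
WIDTH, HEIGHT = 896, 896          # assumed Escam frame size
FRAME_BYTES = WIDTH * HEIGHT * 3  # rgb24: 1 byte each for R, G, B


def read_exact(stream, size):
    """Read exactly size bytes, or return None at end of stream."""
    data = bytearray()
    while len(data) < size:
        chunk = stream.read(size - len(data))
        if not chunk:
            return None  # a partial frame at the end is discarded
        data += chunk
    return bytes(data)


def rgb_frames(stream, frame_bytes=FRAME_BYTES):
    """Yield one raw rgb24 frame at a time."""
    while (frame := read_exact(stream, frame_bytes)) is not None:
        yield frame


def pixel(frame, x, y, width=WIDTH):
    """(r, g, b) of the pixel at column x, row y."""
    i = (y * width + x) * 3
    return tuple(frame[i:i + 3])
```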

With the full 896x896, openpose ran at 0.2fps.

With 4x3 cropping, it was 4fps, but detected a lot of positions it couldn't with the Samsung in 4x3. Decided this was as cropped as the lion kingdom would go.
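
Cropping the square frame to 4x3 keeps the full width & trims the top & bottom. A sketch of the crop box arithmetic, assuming a centered crop; the function name is hypothetical. For 896x896 it keeps an 896x672 band starting 112 rows down.

```python
def crop_box(width, height, aspect_w=4, aspect_h=3):
    """Centered (x, y, w, h) crop with the given aspect ratio."""
    if width * aspect_h <= height * aspect_w:
        # Frame is narrower than the target shape: keep full width.
        new_w, new_h = width, width * aspect_h // aspect_w
    else:
        # Frame is wider than the target shape: keep full height.
        new_w, new_h = height * aspect_w // aspect_h, height
    return (width - new_w) // 2, (height - new_h) // 2, new_w, new_h
```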

The Escam is a lot narrower than 180 degrees, maybe only slightly wider than the GoPro. Detection naturally fails near the edges. Because so much of the lens is cropped, the next step would be mounting it diagonally & stretching it in software.

lion mclionhead
