HISTORY

Please see My Embedded Project History page for a history of the projects that led up to this vGuitar rig.

MOTIVATION

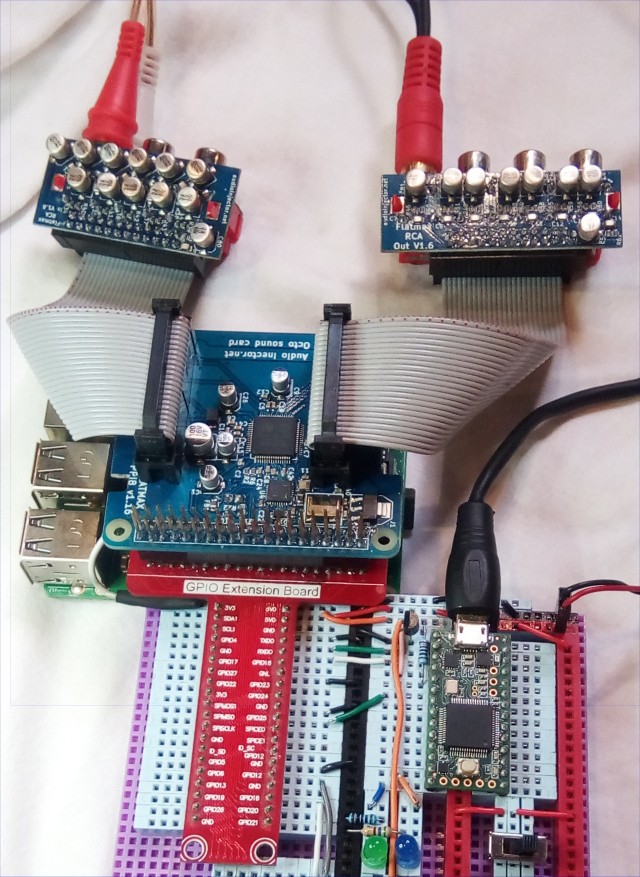

I've been a gigging guitar player for over 40 years. I've been a professional software engineer for the same amount of time. Over the years my "rig" has evolved from a few foot pedals, to multi-effect pedals and loopers, to, most recently, an iPad-based system that includes an FTP Triple Play guitar synthesizer with SampleTank, ToneStack, and Quantiloop Pro running in an AudioBus framework, with IO provided by an iRigHD USB audio device for guitar sound input and final sound output. I use a variety of controllers, including 4 analog volume pedals, a SoftStep II foot pedal, and an MPD218 that I put on the floor as a foot switch.

The basic problem with the iPad setup is latency. I have measured 38ms of latency from the time I pluck the guitar until the sound from the guitar makes it up through the iRig into the iPad, gets through the Audiobus framework, and is returned as a line output by the iRig. This is independent of the particular apps (effects) I am using, and it even appears to be independent of Audiobus itself. It appears to be mostly latency caused by the conversion to USB, and by the sound making its way up the USB stack into the iPad sound architecture and back out to the iRig.

Another major issue is the complexity, and the resultant fragility, of the setup. There are no fewer than 21 connectors, including a 7-port USB hub, and a dozen or more cables, and a failure of any one of them can take down the entire system ... live and in real time ... in the middle of a song while I am onstage.

Another factor is that I am using a general-purpose device (the iPad) for a very specific function, and it is not optimized for that. This manifests itself in many ways.

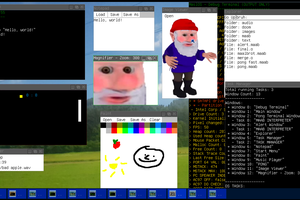

iOS, and the whole Apple world, are notoriously closed and proprietary. You can't even write an app for it without Apple's approval. I'm not talking about this whole generation of "served" apps, where you have to connect to the "cloud" or a web server and are really running a JavaScript app. These frameworks are prolific, but not appropriate for what I'm trying to do. A served app won't do for a live gig rig. I'm talking about native apps only, like you would expect from a professional setup.

You have to accept the architecture iOS provides (Core MIDI) and the way the apps that you purchase interact with it. In turn, each app is its own closed, proprietary architecture.

For example, SampleTank cannot be made to respond to a specific MIDI device. It accepts any MIDI device, all glommed together by Core MIDI, and the only way you can differentiate devices is by using the precious MIDI channel numbers, 1 through 16. Of course, in full-blown mode, the FTP pickup uses no fewer than 14 of those 16 channels, due to its own bogus setup (it uses channels 1-6 for the strings, and then duplicates them again on channels 11-16, while also using two or three channels for mysterious proprietary messages).

Don't get me started on the FTP itself! It outputs so many MIDI messages, including SysEx, during a gig, that often I think the whole system just crashes because of overload. I have spent a LOT of time reverse engineering the FTP USB pickup. Part of my project will be to isolate and filter it, so that it presents only the set of MIDI events that I want to see, and not millions of other messages that clog up the system downstream.
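To make the filtering idea concrete, here is a minimal sketch in Python. It is not the project's actual code, and it rests on assumptions that are not stated above: that the wanted events are the channel messages on the per-string channels 1-6, and that the duplicates on channels 11-16, all SysEx, and all other system messages can simply be dropped. The names `KEEP_CHANNELS`, `is_wanted`, and `filter_stream` are hypothetical. The only MIDI fact it relies on is that, for channel messages, the low four bits of the status byte carry the zero-based channel number, and that status bytes of 0xF0 and above are system messages.

```python
from typing import Iterable, Iterator

# Assumption: only the per-string channels 1-6 are wanted.
# On the wire MIDI channels are zero-based, so that is 0-5.
KEEP_CHANNELS = frozenset(range(0, 6))


def is_wanted(msg: bytes) -> bool:
    """Return True if a complete raw MIDI message should pass downstream."""
    if not msg:
        return False
    status = msg[0]
    if status < 0x80:
        # Not a status byte (malformed or running status); this sketch drops it.
        return False
    if status >= 0xF0:
        # System messages, including SysEx (0xF0), are dropped by assumption.
        return False
    channel = status & 0x0F  # low nibble is the zero-based channel
    return channel in KEEP_CHANNELS


def filter_stream(messages: Iterable[bytes]) -> Iterator[bytes]:
    """Lazily yield only the messages that is_wanted() accepts."""
    return (m for m in messages if is_wanted(m))


if __name__ == "__main__":
    incoming = [
        bytes([0x90, 64, 100]),            # note-on, channel 1: kept
        bytes([0x9A, 64, 100]),            # note-on, channel 11: dropped
        bytes([0xF0, 0x00, 0x01, 0xF7]),   # SysEx: dropped
    ]
    for m in filter_stream(incoming):
        print(m.hex())
```

A real filter would probably need to let some of the proprietary messages through, or translate them, rather than discard them wholesale. Which ones matter depends on the reverse engineering mentioned above.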

Anyways, please understand that I absolutely LOVED the iPad rig when I first got it. Mostly because for the first time I personally could add bass, organ, piano, violin, or spacey synth sounds to my onstage repertoire. And Quantiloop...

OHMYGOD. Yeah, just another dude working on something very similar, but for very different reasons and with very different skillsets. I can't really program anymore without negative mental health shit coming back, but I have 3D printers (FDM and resin), a laser cutter, and PCB fab (still in its final stage of working it out, but I can make boards with 95% the quality of fab houses, and so much faster). If you'd like some help I'd love to talk. I have one killer idea but I don't want to talk about it in the open; do you have a main comms method?