-

Yet a few more findings about how the "advanced coding" mode works (part 2)

01/01/2020 at 19:03

I've done a few more tests and found a couple more details that are worth reporting.

Stopping the execution of a sequence before its completion:

When using the "coding mode", which I described in my last two updates, the Android app sends a sequence of movement commands delimited by two special commands ("1100" start-of-sequence and "1101" end-of-sequence). Once the robot receives the "1101" end-of-sequence command, it starts executing the movements in the sequence one by one without requiring any further command from the Android app. It will run through the whole sequence unless one of two things happens:

1- a "1000" (STOP) command is sent by the Android app

or

2- the BLE connection between the phone and the robot is terminated.

Procedures are simple loop sections and their full definition is sent every time they are called:

The Android app allows the user to define sequences of up to 8 movement commands, called "procedures", that can be repeated multiple times. In my last update I explained that the Android app instructs the robot to run a procedure multiple times by delimiting its sequence of movements with two special commands (the start-of-an-XX-times-loop message "0700 00XX" and the end-of-loop message "0701"). I was wondering whether separate "procedures" in the Android app would be defined using different special commands, and whether the definition of a procedure would be reused if the same procedure was called again within the sequence. This would save a lot of space when alternating different loops. Unfortunately, I found out that each time a procedure is used, its full definition is sent using the same special delimiters. I also found out that the Android app is clever enough not to send the special delimiters if the procedure is executed only once.
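The way a procedure call ends up on the wire can be sketched in a few lines of Python. This is my own reconstruction of the behaviour described above, not the app's code; `procedure_call` is a hypothetical helper, and I'm assuming the repeat count is written as a zero-padded decimal number, like distances and angles are.

```python
def procedure_call(commands, times):
    """Return the messages that one procedure call seems to produce.

    commands: movement messages, e.g. ["0001 0005", "0003 0090"].
    times: how many times the procedure is repeated.
    Assumption: the repeat count is zero-padded decimal ("0700 0004").
    """
    if times < 1:
        raise ValueError("a procedure must run at least once")
    if times == 1:
        # The app leaves out the loop delimiters for a single run.
        return list(commands)
    return [f"0700 {times:04d}", *commands, "0701"]


# Every call re-sends the full definition; nothing is reused.
square_side = ["0001 0005", "0003 0090"]
sequence = (["1100"] + procedure_call(square_side, 4)
            + procedure_call(square_side, 1) + ["1101"])
print(sequence)
```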

Procedures can't be nested

I was wondering whether it would be possible to include a "procedure" within another "procedure" to create nested loops. I tried it and, unfortunately, found out that the robot would discard any earlier start-of-loop commands and any redundant end-of-loop commands.
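Since the robot silently drops the outer loop instead of complaining, it may be worth checking a sequence on the computer before sending it. A minimal sketch; `check_loops` is a hypothetical helper, and treating an unclosed loop as an error is my own choice rather than observed robot behaviour.

```python
def check_loops(messages):
    """Raise ValueError if loops are nested or unbalanced.

    Based on the observation that the robot ignores an earlier
    start-of-loop ("0700 ...") when another one arrives before "0701",
    and ignores surplus "0701" messages.
    """
    inside_loop = False
    for i, msg in enumerate(messages):
        if msg.startswith("0700"):
            if inside_loop:
                raise ValueError(f"nested loop at message {i}: {msg!r}")
            inside_loop = True
        elif msg == "0701":
            if not inside_loop:
                raise ValueError(f"end-of-loop without a start at message {i}")
            inside_loop = False
    if inside_loop:
        raise ValueError("loop is never closed")
```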

The robot can handle loops of more than 8 operations.

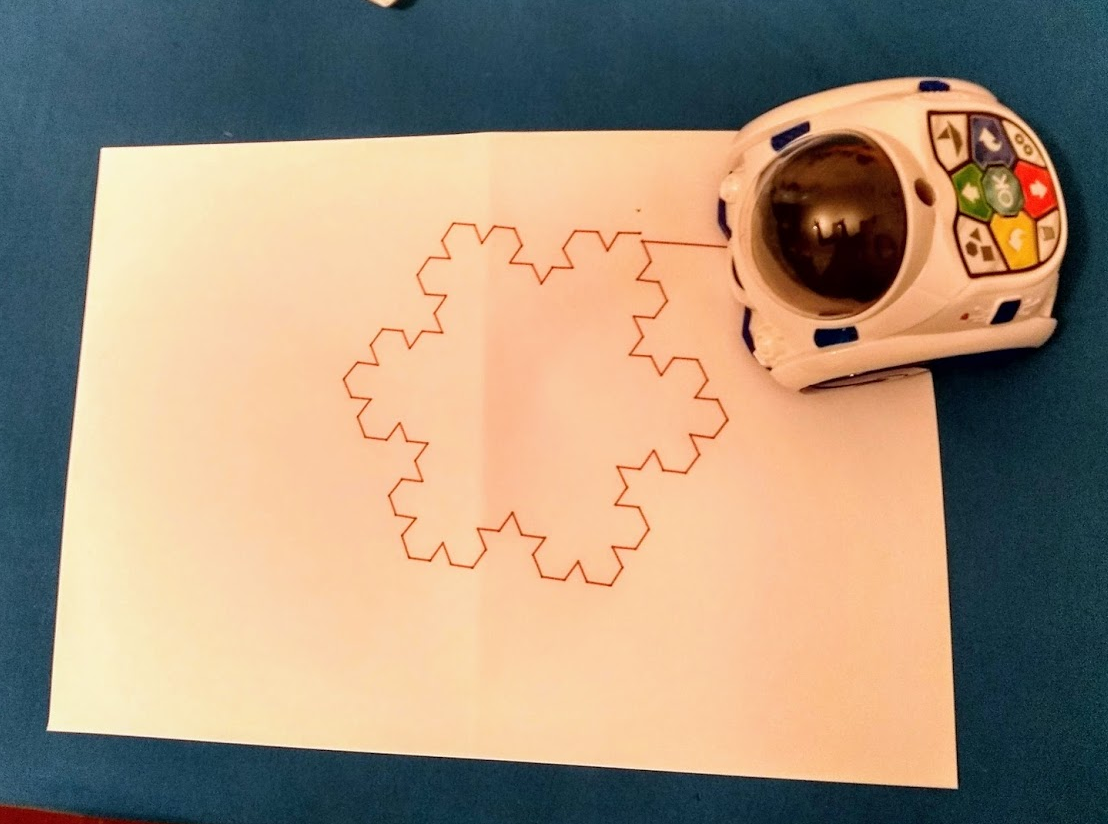

The Android app limits the number of commands in a procedure to 8. Arguably, many simple shapes can be drawn using loops of 8 commands or fewer; however, now that we know that we can't use procedures as functions, call them from within other procedures or nest loops, it would be useful to be able to define longer loops. The good news is that the robot can handle loops with many more commands. I tried one with 34 commands and saw no issues. This enabled me to draw a "reverse 3rd order" Koch snowflake (a made-up name) by repeating the sequence that draws one of its smaller sides 6 times:
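Generating these long loops by hand is tedious, so here is a generic sketch of how Koch-style sequences could be produced. It is not the exact 34-command loop I used; it simply builds a Koch curve of a given order from forward moves of a whole number of centimetres and 60/120 degree turns, and wraps one side plus its corner in a three-times loop. With order 2 the loop body is 32 commands, above the app's limit but within what the robot handled in my test. The `inward` flag (bumps pointing into the shape) is my guess at what a "reverse" snowflake looks like.

```python
FORWARD, RIGHT, LEFT = "0001", "0003", "0004"


def msg(op, value):
    return f"{op} {value:04d}"


def koch_side(order, step_cm, inward=False):
    """Commands for one Koch curve; every segment is step_cm long."""
    if order == 0:
        return [msg(FORWARD, step_cm)]
    # Bumps on the left point outward when the shape is drawn clockwise.
    out_turn, back_turn = (RIGHT, LEFT) if inward else (LEFT, RIGHT)
    sub = koch_side(order - 1, step_cm, inward)
    return (sub + [msg(out_turn, 60)] + sub + [msg(back_turn, 120)]
            + sub + [msg(out_turn, 60)] + sub)


def snowflake(order, step_cm, inward=False):
    """One side plus a 120-degree right-hand corner, repeated three times."""
    body = koch_side(order, step_cm, inward) + [msg(RIGHT, 120)]
    return ["1100", "0700 0003", *body, "0701", "1101"]


print(len(snowflake(2, 1, inward=True)) - 4, "commands in the loop body")
```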

The robot can handle sequences of more than 34 commands (at least 46)

I noticed that when executing drawings from the "free-hand drawing mode" that involved many steps, the robot would pause at times and the Android app would show a "sending data" window for a couple of seconds. I checked the communications log and found out that the Android app split the sequence into chunks of 34 commands and sent them as separate sequences, run one after the other. Something like this:

1100

command 1

command 2

...

command 34

1101

1100

command 35

command 36

...

command 68

1101

I checked whether the robot could handle longer sequences and tried one with 46 commands without any problem. I haven't run any further tests to find out what the actual limit is, but in any case it seems to be greater than the limit the Android app imposes on itself.
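The splitting the app does can be reproduced with a short helper. A minimal sketch; `chunk_sequence` is a hypothetical name and the chunk size defaults to the 34 commands I saw in the log. It refuses sequences containing loop messages, because cutting a loop in half would probably break it.

```python
def chunk_sequence(commands, chunk_size=34):
    """Split movement commands into separate "1100" ... "1101" sequences."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be positive")
    if any(c.startswith("0700") or c == "0701" for c in commands):
        raise ValueError("loops could be split across chunks; handle them first")
    return [["1100", *commands[i:i + chunk_size], "1101"]
            for i in range(0, len(commands), chunk_size)]


# 68 forward moves become two sequences of 34 commands each.
moves = [f"0001 {i:04d}" for i in range(1, 69)]
for chunk in chunk_sequence(moves):
    print(len(chunk) - 2, "commands:", chunk[1], "...", chunk[-2])
```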

There's a small catch though:

Timing

I'm not sure yet whether the Android app has any way to tell when the robot has completed executing a sequence. This would be important when chaining multiple sequences to create a longer equivalent sequence as described above, since the app needs to know when to start sending the next one. It is also important if the BLE connection has to be kept alive while the sequence is being executed: in my Python scripts I had to include a time delay before closing the BLE connection to give the robot enough time to complete the drawing. I will check the communication logs in greater detail to see if the robot sends any signal to the Android app to acknowledge that it has finished executing a sequence. If it doesn't, the Android app might simply estimate the time it takes to complete a sequence by adding up the approximate time of each movement command in it. Still, I guess this would be quite imprecise given how long a sequence containing loops can take to complete.
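If the app does estimate the duration, it would be something along these lines. This is only a sketch of that idea: the speed and turning rate below are placeholders I have NOT measured, and loops are expanded by multiplying their duration by the repeat count (no nesting, since the robot doesn't support it anyway).

```python
# Placeholder rates, not measured; replace them with real values.
SPEED_CM_PER_S = 2.0
TURN_DEG_PER_S = 45.0


def estimate_duration(messages, speed=SPEED_CM_PER_S, turn_rate=TURN_DEG_PER_S):
    """Rough running time of a sequence in seconds, expanding loops."""
    total = 0.0
    loop_time = None  # time accumulated inside the current loop, if any
    repeats = 1
    for msg in messages:
        op, _, arg = msg.partition(" ")
        if op == "0700":
            loop_time, repeats = 0.0, int(arg)
            continue
        if op == "0701":
            total += loop_time * repeats
            loop_time, repeats = None, 1
            continue
        if op in ("0001", "0002"):      # forward / backward, in cm
            t = int(arg) / speed
        elif op in ("0003", "0004"):    # right / left turn, in degrees
            t = int(arg) / turn_rate
        else:
            t = 0.0                     # start/end/stop messages
        if loop_time is None:
            total += t
        else:
            loop_time += t
    return total
```

A safety margin on top of this estimate would still be needed before closing the connection.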

Finding "secret" commands not used by the Android app

In an earlier update I explained that I started guessing the commands the Android app uses to make the robot play different audio samples. This was the only time I've sent commands to the robot that I hadn't seen in the communication logs. Given that the commands are encoded as plain text, it would be fairly easy to guess other commands that may exist even if the Android app doesn't use them. I don't feel adventurous enough to give that a try yet, because I'm afraid I'll trigger some calibration routine that will break the robot, but I may start playing with this idea at some point.

Once again, stay tuned for more updates!

-

Yet a few more findings and how the "advanced coding" mode works (part 1)

12/29/2019 at 14:05

I captured another log. This time I defined a loop in the advanced coding mode, and I also captured the log from drawing something using the freehand mode.

The bad news is that the freehand mode uses exactly the same sequence definition as the basic coding mode, which means that it isn't possible to request turning angles that aren't a whole number of degrees or displacements that aren't a whole number of centimetres.

The good news is that loops are indeed sent as loops and aren't unrolled by the app. The beginning of the loop is marked by a command that defines how many times the loop will be played, and the end of the loop is marked by another special command. For example, the following sequence of commands draws an equilateral triangle:

1100 # start the definition of a sequence of movements

0700 0003 # start the definition of a loop to be repeated three times

0001 0005 # move forward by 5 cm

0004 0120 # turn to the left by 120 degrees

0701 # end the definition of the loop

1101 # end the definition of the sequence and start the motion
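The triangle generalises to any regular polygon, with one catch that follows from the integer-only commands: the exterior angle 360/n has to be a whole number of degrees, so a regular heptagon, for instance, can't be drawn exactly. A minimal sketch; `polygon` is a hypothetical helper and it turns left ("0004"), as in the triangle above.

```python
def polygon(sides, side_cm):
    """Sequence that draws a regular polygon using a single loop."""
    if sides < 3 or 360 % sides:
        raise ValueError(f"a {sides}-sided polygon needs a non-integer turn "
                         f"of {360 / sides:.2f} degrees")
    turn = 360 // sides
    return ["1100",
            f"0700 {sides:04d}",    # repeat the side this many times
            f"0001 {side_cm:04d}",  # forward, in cm
            f"0004 {turn:04d}",     # left turn, in degrees
            "0701",
            "1101"]


print(polygon(3, 5))
```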

Testing all this from nRF Connect would be quite tedious, so I started using a few other tools on my laptop.

The first one I tried was gatttool in interactive mode, which allows you to manually write to the characteristics of the BLE service and do more or less the same as I was doing with nRF Connect. The tool is pretty straightforward to use and there are plenty of usage examples online. It can also be used in non-interactive mode to send commands from the terminal. The issue with this method, though, is that a single execution of gatttool opens a BLE connection, writes to the characteristic and closes the connection. This causes the Mind Designer Robot to stop doing whatever it has been asked to do. Consequently, I recommend using gatttool just for debugging things manually.
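For that kind of manual debugging, the only fiddly part of the non-interactive mode is that gatttool wants the value as a hex string, so the ASCII message has to be converted first. A small sketch that builds the command line; the MAC address and the 0x003d handle are the ones from my robot (see the bluepy script), and `gatttool_write_args`/`send_once` are hypothetical helper names.

```python
import subprocess


def gatttool_write_args(mac, handle, message):
    """Command line for a one-off gatttool write of an ASCII message.

    gatttool expects the value as hex, so "1000" becomes "31303030".
    """
    return [
        "gatttool", "-b", mac,
        "--char-write-req",
        f"--handle=0x{handle:04x}",
        f"--value={message.encode('ascii').hex()}",
    ]


def send_once(mac, handle, message):
    # Connects, writes and disconnects, which also stops the robot.
    subprocess.run(gatttool_write_args(mac, handle, message), check=True)
```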

The other thing I tried was the bluepy library in Python. This library is pretty straightforward to use: it has functions to list the services offered by any BLE device, and it can also read and write any characteristic. I started by writing a very basic script to test whether the process would work:

```python
#!/usr/bin/env python3
import time

from bluepy import btle

ROBOT_MAC = "18:7A:93:16:82:1B"  # MAC address of my robot
HANDLE = 0x003d                  # handle of the characteristic the app writes to

dev = btle.Peripheral(ROBOT_MAC)
time.sleep(0.5)
# bluepy on Python 3 expects bytes, not str
dev.writeCharacteristic(HANDLE, b"1000")       # stop
time.sleep(0.2)
dev.writeCharacteristic(HANDLE, b"1100")       # start of sequence
time.sleep(0.1)
dev.writeCharacteristic(HANDLE, b"0001 0005")  # move forward by 5 cm
time.sleep(0.1)
dev.writeCharacteristic(HANDLE, b"0003 0090")  # turn right by 90 degrees
time.sleep(0.1)
dev.writeCharacteristic(HANDLE, b"1101")       # end of sequence, start moving
time.sleep(50)                                 # keep the connection open while it draws
dev.disconnect()
```

This code defines a simple sequence where the robot moves forward by 5 cm and then turns to the right by 90 degrees. A short sleep (100 ms within the sequence) is introduced after each command sent to the robot. I haven't tested whether these delays are required, but I see no reason to speed up the process of sending the commands, and this is more or less what I saw the Android app doing.

That's it for this update. I've posted a slightly more intricate script that draws a five-pointed star using a sequence with a loop. The Python script can be found in the downloads section of the project page.
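A sequence along those lines could look like this (a sketch, not necessarily identical to the downloadable script): five sides, each followed by a 144-degree right turn, which adds up to two full turns and closes the star. The sending function takes any `write` callable so it can be tried without a robot; both function names are hypothetical.

```python
import time


def star_sequence(side_cm=5):
    """Five-pointed star: five sides, each followed by a 144-degree right turn."""
    return ["1100", "0700 0005", f"0001 {side_cm:04d}", "0003 0144", "0701", "1101"]


def send_sequence(write, messages, delay=0.1):
    """Send messages one by one through `write`, pausing `delay` seconds
    between them as the app appears to do."""
    write("1000")  # stop whatever the robot is doing first
    for message in messages:
        time.sleep(delay)
        write(message)

# Example with bluepy (not run here):
#   dev = btle.Peripheral("18:7A:93:16:82:1B")
#   send_sequence(lambda m: dev.writeCharacteristic(0x003d, m.encode("ascii")),
#                 star_sequence())
#   time.sleep(60)
#   dev.disconnect()
```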

I want to carry out a few further tests to see whether defining multiple loops within a sequence makes any difference, but I'm not sure yet what the next steps will be.

Once again, stay tuned for more updates!

-

A few more findings and how the basic "coding" mode works

12/28/2019 at 19:51

I captured a new communication log, this time using the "coding" mode in the app, which allows you to define a sequence of movements to draw a shape before pressing the "play" button to let the robot go through the sequence. I tried a few different sequences: one where the robot would move forward by 5 cm (the default distance) and then turn to the right by 90 degrees, another where it would turn 45 degrees, another where it would move forward by just 1 cm, and a further sequence combining moving forward, turning to the right, moving backward and then turning to the left.

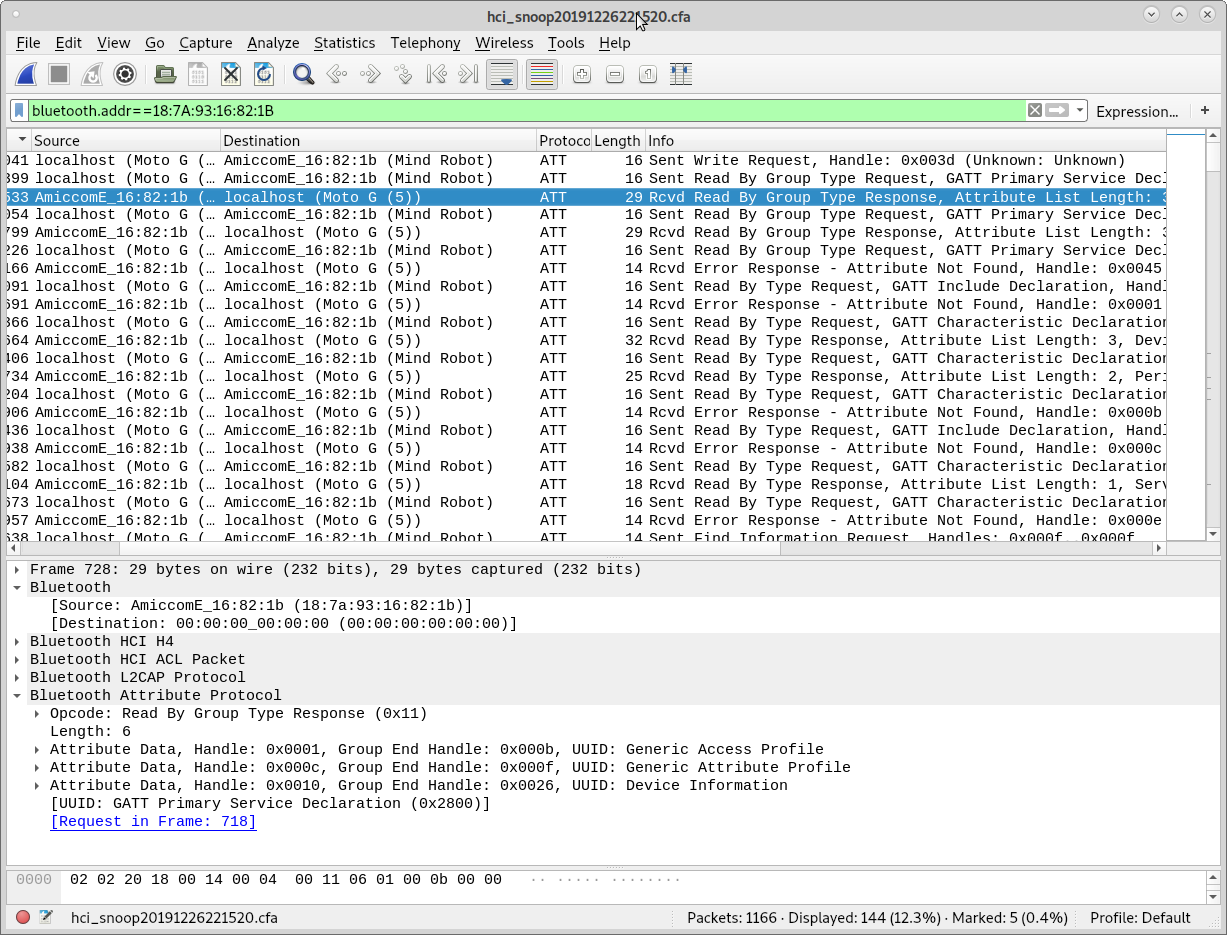

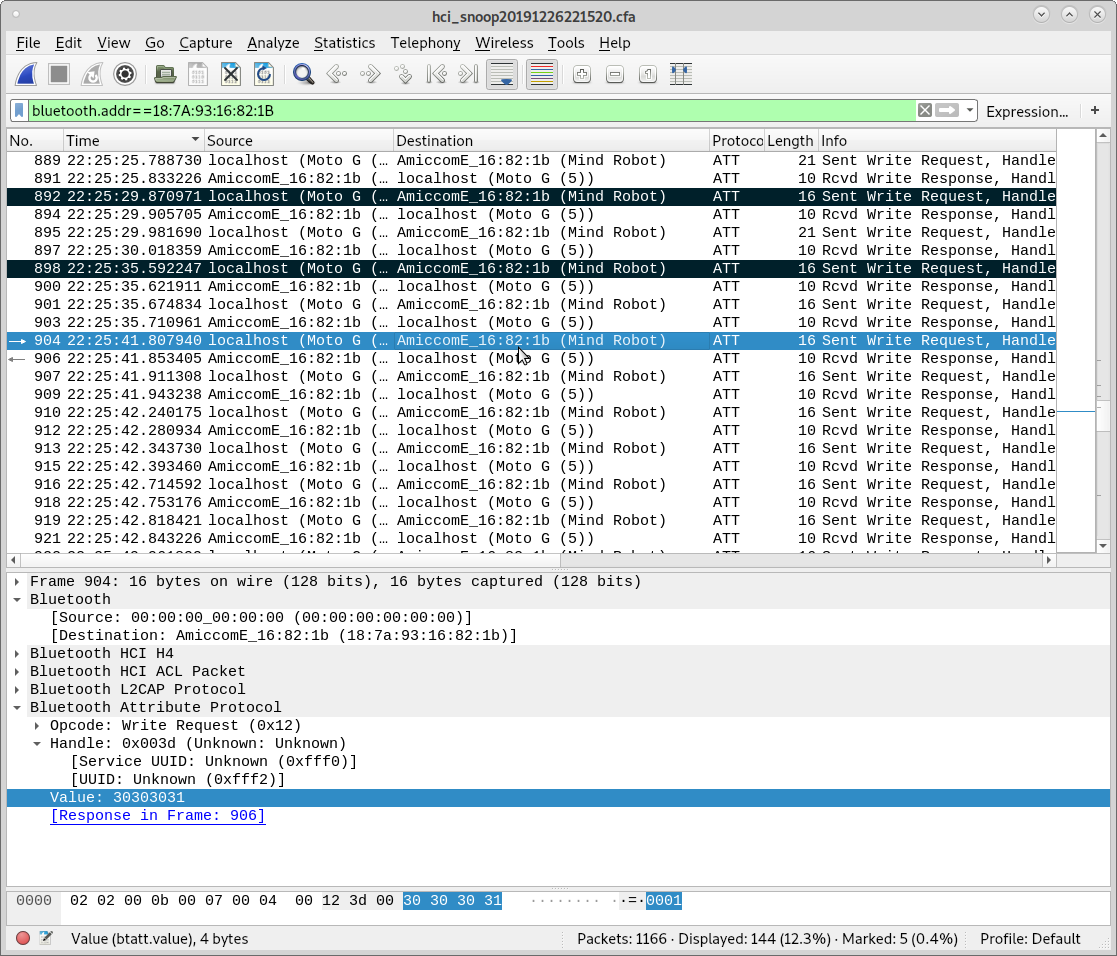

I opened the log in Wireshark and found a couple of tricks that make inspecting the log much easier. First, I filtered the view to show only the messages sent from my phone to the robot, using the filter "bluetooth.dst ==" followed by the MAC address of the robot. I also created a custom column displaying "btatt.value", which shows the content of each message in hexadecimal form. Finally, I set a few colouring rules to mark some special messages that I will describe later.

This is the result:

In brief, each "step" in the sequence was sent as a separate message. These messages were longer than those I saw the other day when analysing the real-time mode; however, the format was pretty straightforward once the message is read as an ASCII string:

move forward by 5 cm: "0001 0005"

turn 90 degrees to the right: "0003 0090"

move backwards by 5 cm: "0002 0005"

turn 90 degrees to the left: "0004 0090"

So basically, the first four characters identify the specific command, and the second set of four characters, separated from the first by a space (0x20), gives the distance in cm or the angle in degrees.
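The format is simple enough to encode and decode by hand. A minimal sketch: `encode` builds the payload for a command, and `decode` turns the hex string shown in Wireshark's btatt.value column back into the command and its argument. Limiting the argument to four digits is my assumption based on the fixed-width field.

```python
COMMANDS = {"0001": "forward (cm)", "0002": "backward (cm)",
            "0003": "turn right (deg)", "0004": "turn left (deg)"}


def encode(op, value):
    """ASCII payload for a command, e.g. encode("0001", 5) -> b"0001 0005"."""
    if not 0 <= value <= 9999:
        raise ValueError("value must fit in four digits")
    return f"{op} {value:04d}".encode("ascii")


def decode(payload_hex):
    """Turn a btatt.value hex string into (op, value); value is None if absent."""
    text = bytes.fromhex(payload_hex).decode("ascii")
    op, _, arg = text.partition(" ")
    return op, int(arg) if arg else None


op, value = decode("303030332030303930")
print(COMMANDS[op], value)
```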

Obviously, the app must tell the robot where the sequence starts and where the sequence ends. This is done using the following messages:

start of sequence: "1100"

end of sequence: "1101"

These two messages are very similar to the "stop" command that I found in the real-time mode, which is "1000" when read as a string. Similarly, the real-time mode commands for forward, backward, right turn and left turn are identical to the first four characters of the same commands in the "coding mode" (i.e. "0001", "0002", "0003" and "0004").

When testing all this with nRF Connect by sending the messages by hand, I also realised that timing is important: the robot may disregard the sequence if there's a long delay between messages. I don't know how long that delay can be, but it must be a few seconds at most.

Finally, when looking at the communications log, I noticed once again that the app sent messages that made the robot play an audio sample saying something along the lines of "I'm going to start drawing". This seems to be irrelevant to the functionality of the robot: you can get the robot to follow a sequence without making it play an audio sample. Still, I spent some time creating a list of the robot's audio samples by sending slightly modified commands to it. The results are quite surprising: many audio samples say the same thing using slightly different words. I suppose they tried to make the robot sound less repetitive. Anyway, here's the list of audio samples that I found. I wrote the descriptions in Spanish because this is the version of the robot that I have (it is available in English, Italian and Spanish), with an English translation in parentheses:

30 31 30 30 20 30 30 30 31 hola! (hello!)

30 31 30 30 20 30 30 30 32 hey! (hey!)

30 31 30 30 20 30 30 30 33 ganas de dibujar (eager to draw)

30 31 30 30 20 30 30 30 34 hola! que te apetece hacer hoy yo tengo muchas ganas de dibujar (hello! what do you feel like doing today? I really want to draw)

30 31 30 30 20 30 30 30 35 te apetece programarme? (do you feel like programming me?)

30 31 30 30 20 30 30 30 36 listos para programar? (ready to program?)

30 31 30 30 20 30 30 30 37 aprendamos a programar y a dibujar juntos (let's learn to program and draw together)

30 31 30 30 20 30 30 30 38 dibuja y programa conmigo (draw and program with me)

30 31 30 30 20 30 30 30 39 que forma geometrica queremos dibujar? (which geometric shape shall we draw?)

30 31 30 30 20 30 30 30 3A dibujemos juntos (let's draw together)

30 31 30 30 20 30 30 30 3B formas geometricas (geometric shapes)

30 31 30 30 20 30 30 30 3C figuras geometricas (geometric figures)

30 31 30 30 20 30 30 30 3D ilustraciones geometricas (geometric illustrations)

30 31 30 30 20 30 30 30 3E imagenes (images)

30 31 30 30 20 30 30 30 3F figuras complejas (complex figures)

30 31 30 30 20 30 30 30 40 tracemos formas (let's trace shapes)

30 31 30 30 20 30 30 30 41 que quieres hacerme dibujar? (what do you want me to draw?)

30 31 30 30 20 30 30 30 42 que dibujamos? (what shall we draw?)

30 31 30 30 20 30 30 30 43 ponme sobre una hoja de papel e introduce el rotulador (put me on a sheet of paper and insert the marker)

30 31 30 30 20 30 30 30 44 que te apetece hacer hoy (what do you feel like doing today)

30 31 30 30 20 30 30 30 45 estoy listo para empezar (I'm ready to start)

30 31 30 30 20 30 30 30 46 de acuerdo (all right)

30 31 30 30 20 30 30 30 47 entendido (understood)

30 31 30 30 20 30 30 30 48 empecemos (let's begin)

30 31 30 30 20 30 30 30 49 venga vamos a jugar (come on, let's play)

30 31 30 30 20 30 30 30 4A en espera de instrucciones (waiting for instructions)

30 31 30 30 20 30 30 30 4B cargando (loading)

30 31 30 30 20 30 30 30 4C pulsa ok para comenzar (press OK to begin)

30 31 30 30 20 30 30 30 4D cuando termines pulsa ok (press OK when you finish)

30 31 30 30 20 30 30 30 4E donde tengo que ir? (where do I have to go?)

30 31 30 30 20 30 30 30 4F atencion! (attention!)

30 31 30 30 20 30 30 30 50 [yawning]

30 31 30 30 20 30 30 30 51 venga (come on)

30 31 30 30 20 30 30 30 52 arranquemos (let's get going)

30 31 30 30 20 30 30 30 53 volvamos a empezar (let's start again)

30 31 30 30 20 30 30 30 54 sobrecarga de memoria (memory overload)

30 31 30 30 20 30 30 30 55 crey!

30 31 30 30 20 30 30 30 56 yo tengo muchas ganas de dibujar (I really want to draw)

Final thoughts and next steps:

Reading the commands in hexadecimal made it hard for me to guess what was going on, but once I checked them in ASCII the format of the commands became very obvious. I suppose it is handy for developers to use plain text when creating a custom protocol that isn't really subject to any serious constraints in terms of bandwidth, reliability, robustness, etc.
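Reading the audio list above in ASCII also reveals a quirk: the messages are "0100 000X", where the last character is simply chr(0x30 + n) for sample n. After "9" it carries on through ":", ";", "<" and so on up to "V", rather than switching to decimal or hexadecimal digits. A small sketch of that observation; `audio_message` and `as_hex` are hypothetical helpers, and the 1 to 38 range only covers the samples I found.

```python
def audio_message(n):
    """ASCII message that plays audio sample n (1..38) on my robot."""
    if not 1 <= n <= 0x56 - 0x30:
        raise ValueError("only samples 1..38 were found")
    # The last character keeps counting up in ASCII: "9", ":", ";", ...
    return "0100 000" + chr(0x30 + n)


def as_hex(message):
    """Same spaced hex notation as the list above."""
    return " ".join(f"{b:02X}" for b in message.encode("ascii"))


print(audio_message(10), "->", as_hex(audio_message(10)))
```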

There are still a few things for me to figure out about the communications protocol. Specifically, I want to see what happens with the loops that the "advanced coding mode" allows you to use. I suspect that the phone application unrolls those loops, but I have to test it. I read somewhere that there's a limit of 40 steps in the sequences that are introduced by hand using the keys on the back of the robot, but I don't know what the limit is when programming from the Android app. If loops are unrolled, this may become a concern when trying to draw smooth shapes like circles and Bézier curves.

I also want to check whether the "freehand drawing mode" requires a different type of communication with the robot. I suspect that the only difference is on the Android app side, because the freehand mode lets you draw shapes on the screen that are translated into motion commands for the robot, but I want to see if that's the case. The reason why it might be different is that the freehand drawing mode allows you to draw arbitrary lines that may require the robot to make turns that don't correspond to integer angles, while the commands I found in the coding mode only allow you to send integer quantities for distances in cm and angles in degrees. If the freehand mode used special commands, this would enable us to make fancier drawings.

Finally, I want to note that I haven't implemented any custom app yet and I've kept using nRF Connect for all my tests. Still, I will soon give the custom app a quick try. The easiest way to implement such an app is to use MIT App Inventor. I used it in my Electric Wheelchair project and it worked like a charm; therefore, I will most likely stick to the same method this time.

Stay tuned for further updates!

-

First findings and how "the real-time mode" works

12/27/2019 at 13:27

By following the procedure I described in my previous update, I was able to obtain a log file with all the data from the BLE communications between my phone and the Mind Designer Robot. One issue with this procedure, compared to those I had used in the past with wifi communications, is that it doesn't allow me to check the log in real time. This meant that, in order to link the messages in the log with the actions of the robot, I had to make it follow a known pattern with known timing. I started with the "real-time control mode", where you can control the robot, making it move forward, rotate or move backward depending on which arrow you press. I pressed "forward, forward, forward, right, backward, left, forward", leaving periods of about 5 seconds between each set of commands. I also wrote down the time when I started.

The log in Wireshark showed many things. I filtered the entries so that Wireshark would only show the messages related to the MAC address of the robot, which left only the ATT messages. By the way, an identical procedure with better pictures is shown in this article from Medium.

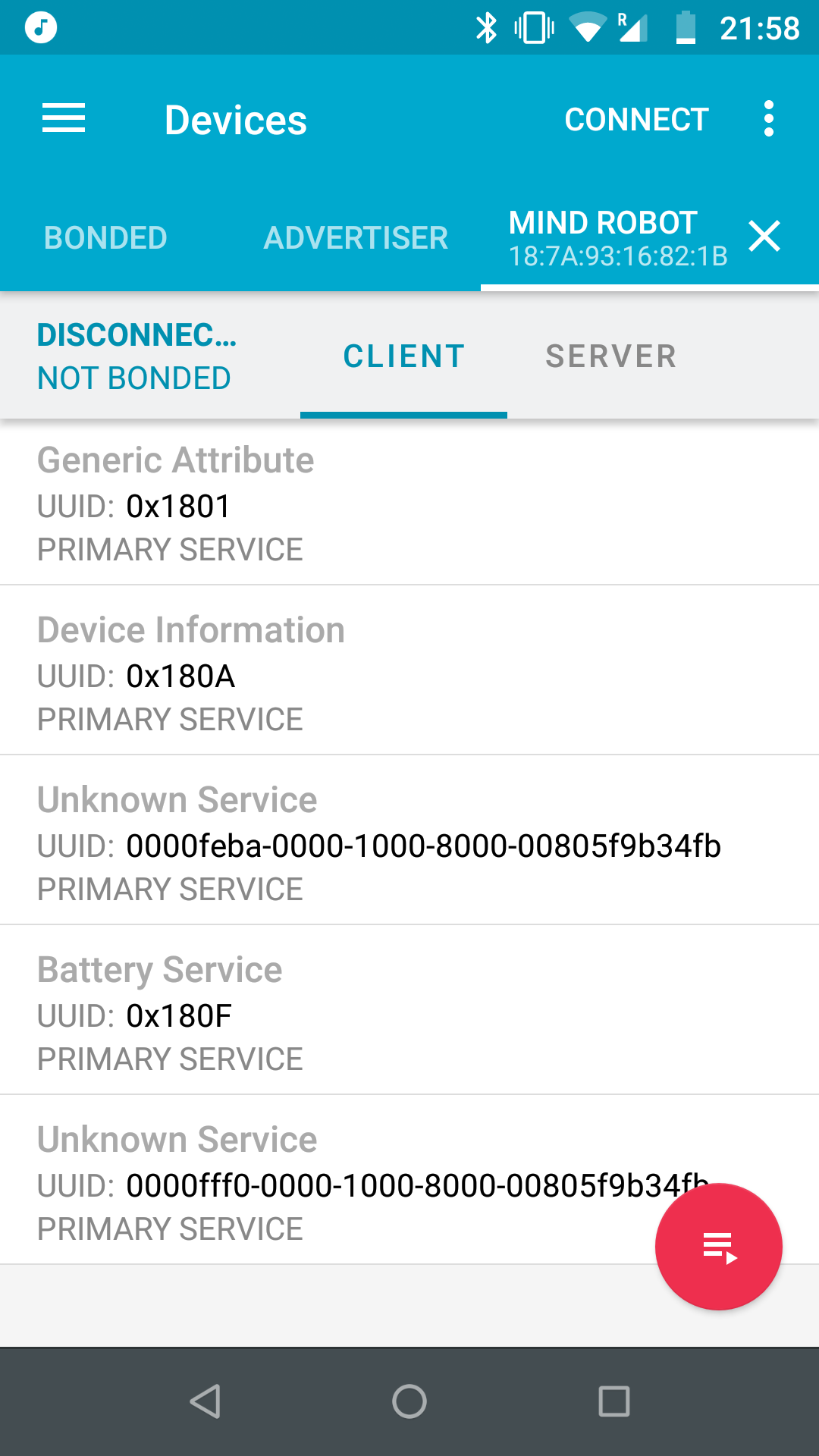

Looking at the messages, it was fairly easy to get an idea of what was going on: the first batch of messages between the app and the toy were "service discovery requests" (please forgive me if I'm using the wrong terminology here) from the app asking the toy to provide the list of BLE services it offers. The same list can be obtained from any BLE device using a very handy Android app called nRF Connect, which helps with double-checking whether things make sense.

This is the list of services as seen from nRF Connect:

Some services are generic and I presume pretty much any BLE device will feature them, whereas others listed as "unknown" are likely used to implement very application-specific functions.

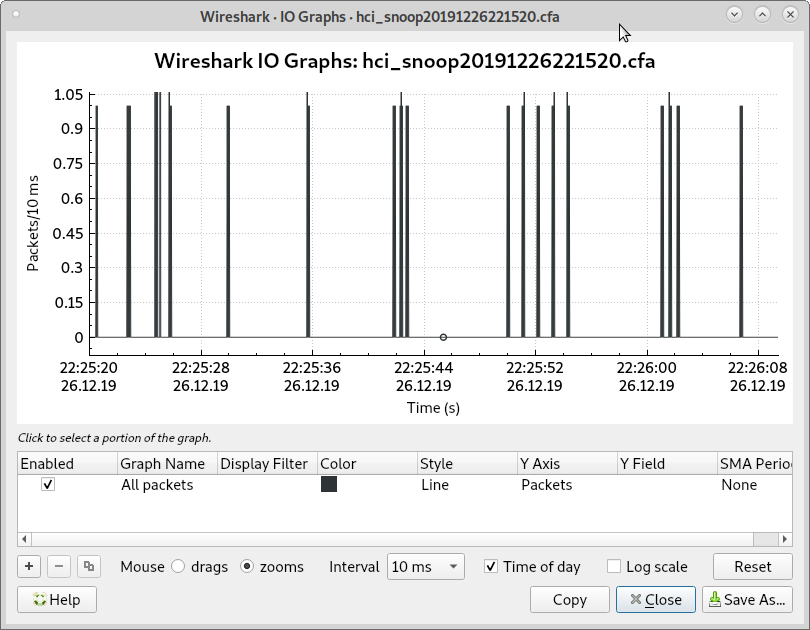

By looking at the I/O graph in Wireshark, which plots the frequency of messages being exchanged over different periods of time, it was fairly straightforward to detect the period that corresponds to my key-presses in the application:

As you can see, the ~5 second gaps are pretty obvious and by looking at the messages that were exchanged in these periods it was possible to identify the purpose of some of them:

I marked the messages that matched the beginning of the ~5 second periods and focused my efforts on reading what was being sent from the phone.

Apparently, the phone was accessing a service with UUID 0000fff0-0000-1000-8000-00805f9b34fb and writing to a characteristic within that service with UUID 0000fff2-0000-1000-8000-00805f9b34fb. Values were written roughly every 100 ms: the value 0x31303030 was very common and would follow other values (e.g. 0x30303031).

nRF Connect allows you to connect to BLE services and read or write the characteristics by hand, which helps when testing whether messages make sense. Alternatively, if a sequence of messages with "precise" timing were important, I could have used a BLE module with an Arduino; for example, this one from Adafruit with the nRF8001 is very easy to use.

I tried writing a couple of values to the aforementioned characteristic and figured out the following:

0x31303030 makes the robot stop

0x30303031 makes the robot start moving forward

0x30303032 makes the robot start moving backwards

0x30303033 makes the robot start turning to the right

0x30303034 makes the robot start turning to the left
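These four-byte values are just ASCII strings, which makes them much easier to remember: 0x31303030 is "1000" and 0x30303031 is "0001". A quick check in Python; the `REALTIME` dictionary is simply my own naming for the values listed above.

```python
REALTIME = {
    "stop": b"1000",
    "forward": b"0001",
    "backward": b"0002",
    "right": b"0003",
    "left": b"0004",
}

# The hex values seen in Wireshark are these ASCII strings.
for name, payload in REALTIME.items():
    print(f"{name:8} 0x{payload.hex()}")
```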

Apparently, the 100 ms gap I had observed between 0x30303031 and 0x31303030 was the shortest time for which the Android app would let the user command the robot to move. If one of the "start moving" commands above was sent, the robot would keep moving indefinitely until the stop command was received.
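Because a "start moving" command never ends on its own, any script using the real-time mode should make sure the stop command is always sent. A minimal sketch; `jog` is a hypothetical helper, `write` stands for whatever sends one raw payload to the 0000fff2 characteristic, and the movement time is only as precise as the BLE timing allows.

```python
import time


def jog(write, direction, duration=0.1):
    """Move for roughly `duration` seconds, then stop.

    direction is one of b"0001" (forward), b"0002" (backward),
    b"0003" (right) or b"0004" (left).
    """
    if direction not in (b"0001", b"0002", b"0003", b"0004"):
        raise ValueError("unknown direction")
    write(direction)
    try:
        time.sleep(duration)
    finally:
        write(b"1000")  # always stop, otherwise the robot keeps going
```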

I noticed a couple other things:

- the robot would drop the BLE connection if nothing was sent to it for a certain period of time (about a minute); when this happened, the robot would play an audio sample saying "I'm disconnecting".

- when using the official Android app, the robot says "I'm ready to play" or something along these lines when a play mode is chosen. At first I thought this was a sign that the robot had to be told that one wanted to operate it in "real-time control mode" or any other mode. However, it was possible to send the motion commands described above as soon as the BLE connection started. Therefore, I'm inclined to think that the Android app can send messages commanding the robot to play audio samples, and that it does so without any implication for the operating logic of the robot.

The real-time control mode is very different from the robot's other modes of operation, where it follows motion commands similar to those in Logo programming (i.e. "move forward 1 cm, rotate to the right by 90 degrees", etc.). I haven't opened the case of the robot, but given how precise the motion is (and the noise it makes when it moves), I'm inclined to think that it uses stepper motors or even DC motors with simple encoders. Therefore, it wouldn't make sense for the Android app to rely on the timing between motion commands to make the robot move, so I suppose it is possible to send Logo-type commands to control the robot that are different from the "indefinite" motion commands described above. My next task will be to find out how these commands are sent.

The stuff I described in this post took me a couple of hours to figure out. I was a bit disappointed to see that the commands were identical to the actions associated with the keys pressed, because I was hoping to implement fine joystick control to get the robot to move along complex paths in real time. Still, I'm eager to find out more about the other commands the robot accepts from the application; who knows, there may be a way to command the displacement of the individual wheels, which could be used to implement custom path planning.

Stay tuned for more updates.

-

Getting Android to log BLE communications and accessing the log

12/27/2019 at 08:02

I have used Wireshark in the past to capture wifi traffic between an Android phone and a wifi-enabled toy (a quadcopter) using various methods (e.g. capturing the traffic from an open channel, using a laptop as a gateway between the phone and the toy, etc.); however, I had no idea how to capture Bluetooth traffic. To my surprise, I found out that Android 4.4 and later has a feature in the "Developer options" menu called "Enable Bluetooth HCI snoop log" that dumps all the traffic to a file. It is worth pointing out that I had to restart the phone for Android to start logging the traffic (I suppose this is a trap for young players; I hope this note will be useful to someone), and it was hard to tell whether the phone was logging the traffic or not. That's because the location where Android stores the log file is device-dependent, and I wasted a lot of time and still couldn't figure out where the file was.

The internet came to the rescue, and thanks to this post on Stack Overflow I was able to access the log file from my computer. The "Legacy answer" in the post didn't work for me, but I learned about a method to dump a lot of useful information from the phone to my computer and find the Bluetooth log there. This involved connecting my phone to the computer via USB, enabling USB debugging and running the following command:

```
adb bugreport anewbugreportfolder
```

By the way, installing "adb" was easier than I thought. Apparently, there's a package or a collection of packages in Debian that weighs just a few MB and lets you use this command without installing a huge SDK from Android (hooray!).

The command above generates a zip file that contains a lot of stuff. There's a txt file in the root from which a Bluetooth log can be extracted and turned into something Wireshark can read using a procedure described here. There's a catch, though: apparently this log exists even if the "Enable Bluetooth HCI snoop log" option is disabled. However, it won't contain all the traffic, only some low-level commands issued by Android to configure and operate its Bluetooth hardware (I'm not 100% sure this makes sense). Once I had the option above enabled and had restarted my phone, I found a log file inside the zip that I could open with Wireshark and that showed the whole communication log. Success.
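Wireshark did all the heavy lifting for me, but the snoop log format is simple enough to read directly, which could be handy for scripting. A minimal sketch, assuming the log is a standard btsnoop file with H4 framing (datalink type 1002); it ignores fragmented ACL packets and everything that isn't an ATT write sent by the phone. `att_writes` is a hypothetical helper, and the file name in the usage note is only the usual one, it may differ on your device.

```python
import struct

ATT_CID = 0x0004
ATT_WRITE_OPCODES = {0x12: "write request", 0x52: "write command"}


def att_writes(data):
    """Yield (handle, value) for ATT writes sent by the phone in a btsnoop log."""
    if data[:8] != b"btsnoop\x00":
        raise ValueError("not a btsnoop file")
    _version, datalink = struct.unpack(">II", data[8:16])
    if datalink != 1002:
        raise ValueError(f"unsupported datalink type {datalink}")
    pos = 16
    while pos + 24 <= len(data):
        # Record header: original length, included length, flags, drops, timestamp
        _orig, incl_len, flags, _drops, _ts = struct.unpack(">IIIIq", data[pos:pos + 24])
        packet = data[pos + 24:pos + 24 + incl_len]
        pos += 24 + incl_len
        # Flag bit 0 set means "received"; H4 type 0x02 is an ACL data packet.
        if flags & 1 or len(packet) < 12 or packet[0] != 0x02:
            continue
        # Skip the 4-byte ACL header, then read the L2CAP length and channel ID.
        l2cap_len, cid = struct.unpack("<HH", packet[5:9])
        if cid != ATT_CID:
            continue
        att = packet[9:9 + l2cap_len]
        if att and att[0] in ATT_WRITE_OPCODES:
            handle = struct.unpack("<H", att[1:3])[0]
            yield handle, att[3:]
```

Usage would be something like `for handle, value in att_writes(open("btsnoop_hci.log", "rb").read()): print(hex(handle), value)`, which should print the same ASCII commands shown in the btatt.value column.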

In a further update I will describe the first things I learned about the communication protocol.

Custom control app for Mind Designer Robot

This project is about reverse-engineering the BLE communications protocol and getting a custom app working

adria.junyent-ferre
