-

1. Understanding the workflow

As mentioned before, aXeleRate can be run on a local computer or in Google Colab. We'll run it in Google Colab, since that simplifies the preparation step.

Let’s open the sample notebook.

Go through the cells one by one to understand the workflow. This example trains a detection network on a tiny dataset that is included with aXeleRate. For the next step, we need a bigger dataset to train a genuinely useful model.

-

2. Training the object detection model in Colab

Open the notebook I prepared and follow the steps there. After a few hours of training, you will get .h5, .tflite and .kmodel files saved in your Google Drive. Download the .kmodel file, copy it to an SD card and insert the SD card into the mainboard. In the case of M.A.R.K., the mainboard is cyberEye, a modified version of Maixduino.

MARK is an educational robot for introducing students to the concepts of AI and machine learning. There are two ways to run the custom model you just created: with MicroPython code, or with TinkerGen’s graphical programming environment, Codecraft. The first is undoubtedly more flexible in how you can tweak the inference parameters, while the second is more user-friendly.

-

3. Running the custom model in the graphical programming environment

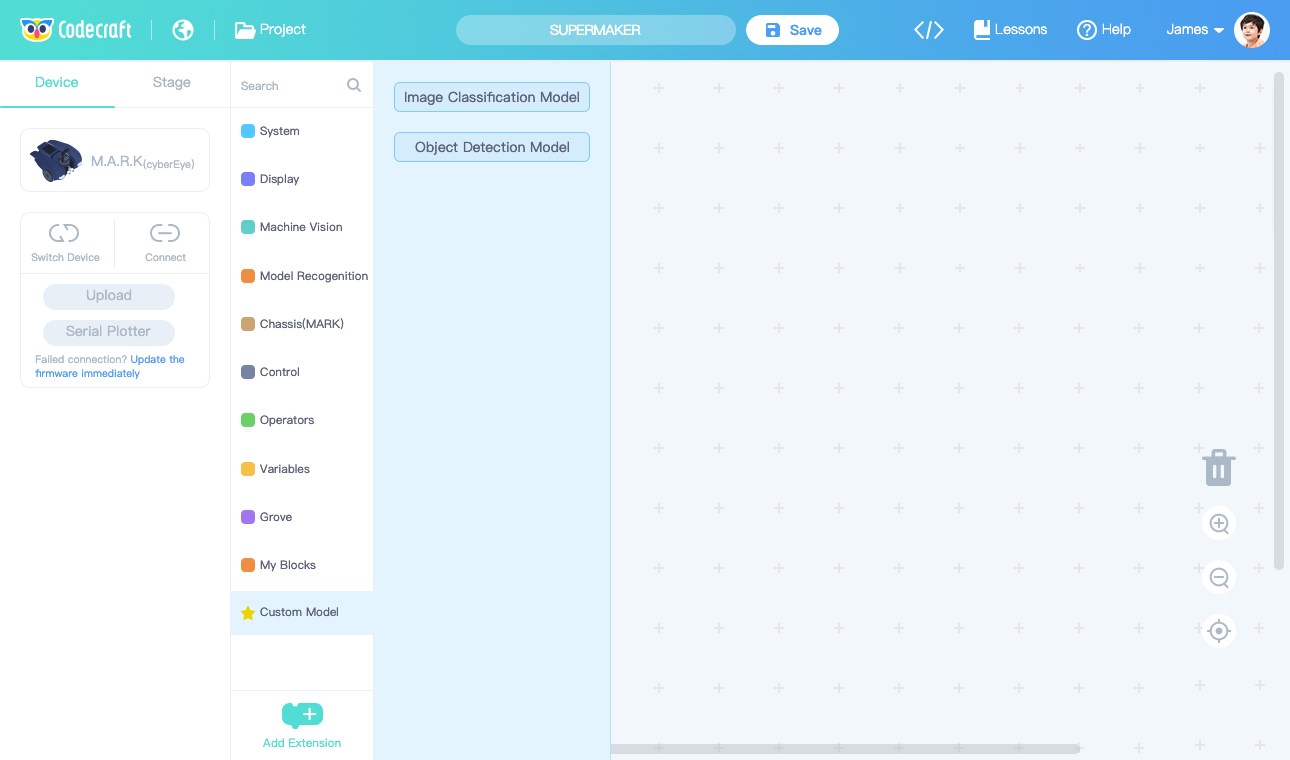

If you opt for the graphical programming environment, go to the Codecraft website, https://ide.tinkergen.com, and choose MARK (cyberEye) as the target platform.

Click on Add Extension and choose Custom models, then click on Object Detection model.


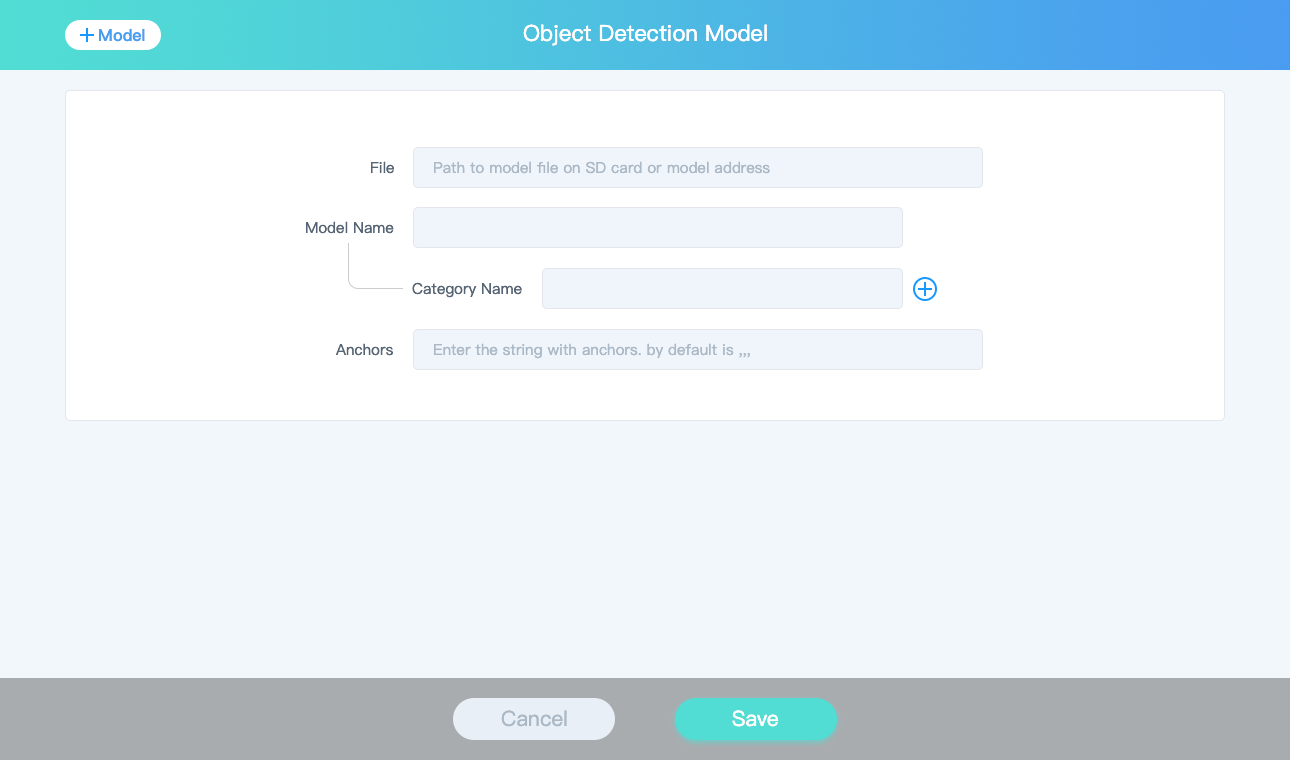

There you will need to enter the filename of the model on the SD card, the name the model will have in the Codecraft interface (this can be anything; let's enter Person detection), the category name (person) and the anchors. We didn't change the anchor parameters during training, so we'll just use the default ones.
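Why the anchors have to match can be shown with a short sketch. Assuming aXeleRate's detector decodes boxes the way YOLOv2 does (an assumption here; check the aXeleRate source for the exact scheme), the network only predicts offsets relative to each anchor, so the anchors entered in Codecraft should be the same ones the model was trained with. The function `decode_box` is hypothetical and is not part of aXeleRate or Codecraft.

```python
import math
from typing import Tuple


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(
    t: Tuple[float, float, float, float],
    cell: Tuple[int, int],
    anchor: Tuple[float, float],
) -> Tuple[float, float, float, float]:
    """Decode raw YOLOv2-style outputs into a box in grid-cell units (hypothetical helper).

    t      -- raw network outputs (tx, ty, tw, th)
    cell   -- (cx, cy), column and row of the grid cell
    anchor -- (pw, ph), anchor width and height in grid-cell units
    """
    tx, ty, tw, th = t
    cx, cy = cell
    pw, ph = anchor
    bx = cx + sigmoid(tx)   # box center stays inside its grid cell
    by = cy + sigmoid(ty)
    bw = pw * math.exp(tw)  # box size is a scaled copy of the anchor
    bh = ph * math.exp(th)
    return bx, by, bw, bh


print(decode_box((0.0, 0.0, 0.0, 0.0), (3, 2), (1.5, 2.0)))  # (3.5, 2.5, 1.5, 2.0)
print(decode_box((0.0, 0.0, 0.0, 0.0), (3, 2), (3.0, 4.0)))  # (3.5, 2.5, 3.0, 4.0)
```

The same raw outputs decoded with a different anchor give a box of a different size, which is why anchors that differ from the training ones produce wrongly sized bounding boxes.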


After that, three new blocks will appear. Choose the one that outputs the X coordinate of the detected object and connect it to the Display... at row 1 block. Put this inside the loop and upload the code to MARK. You should see the X coordinate of the center of the bounding box around the detected person in the first row of the screen. If nothing is detected, it will show -1.
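The value this block displays can be reproduced in a few lines of Python. This is a minimal sketch, assuming a detection is given as a hypothetical (x, y, w, h) tuple in pixels with (x, y) as the top-left corner; the name `box_center_x` is illustrative and not part of Codecraft.

```python
from typing import Optional, Tuple

# Hypothetical box format: (x, y, w, h) in pixels, (x, y) = top-left corner.
Box = Tuple[int, int, int, int]


def box_center_x(box: Optional[Box]) -> int:
    """Return the x coordinate of the box center, or -1 if nothing was detected."""
    if box is None:
        return -1
    x, _, w, _ = box
    return x + w // 2


print(box_center_x((100, 40, 60, 120)))  # 130
print(box_center_x(None))                # -1
```

Using -1 as the "nothing detected" value works because a real center coordinate is never negative.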

-

4. Running the custom model with MicroPython

That covers model inference in the graphical programming environment. Now we’ll switch to MicroPython and implement a more advanced solution. Download and install MaixPy IDE.

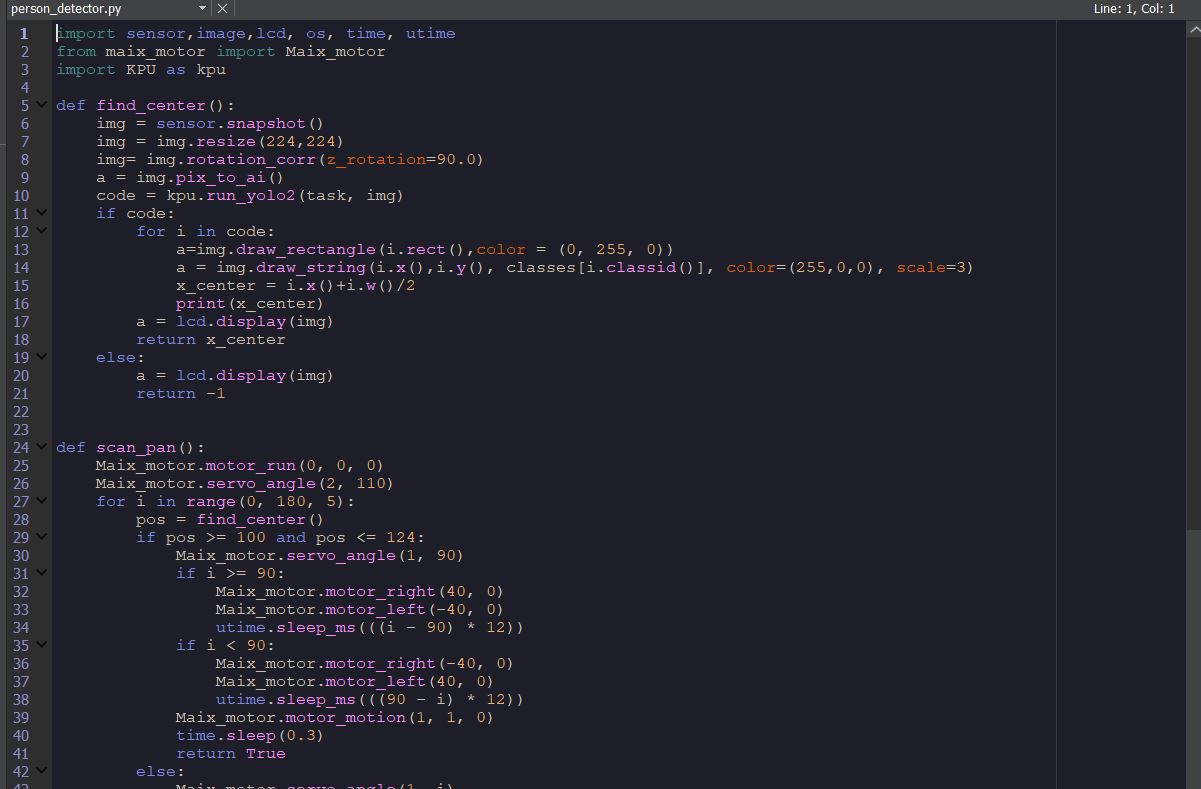

Open the example code enclosed with the article. The code logic is as follows:

1) The find_center() function checks whether any people are detected. If so, it returns the x coordinate of the center of the biggest detected bounding box. If no people are detected, it returns -1.

2) If find_center() returns an x coordinate, we check whether it is near the image center, on the left or on the right, and control the motors accordingly.

3) If find_center() returns -1, we use the servo to tilt the camera through a 40-degree scan for people.

4) If the tilt scan does not find anyone, the robot performs two 180-degree pan scans.

5) Finally, if the pan scans don't detect anyone, the robot starts rotating in place clockwise while still performing person detection.
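The decision logic in the list above can be sketched in plain Python so that it runs on a desktop. This is not the enclosed example code: detections are assumed to be hypothetical (x, y, w, h) tuples, and the 320-pixel frame width, the dead-zone size and the action names are illustrative assumptions. The real MaixPy code would drive the motors and servo instead of returning strings.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # hypothetical (x, y, w, h) in pixels

IMG_WIDTH = 320        # assumed camera frame width
CENTER_TOLERANCE = 40  # assumed dead zone around the image center, in pixels


def find_center(boxes: List[Box]) -> int:
    """Center x of the biggest detected box, or -1 if no people were detected."""
    if not boxes:
        return -1
    x, _, w, _ = max(boxes, key=lambda b: b[2] * b[3])  # biggest box by area
    return x + w // 2


def steer(center_x: int) -> str:
    """Map the person's horizontal position to a (hypothetical) motor command.

    Assumes the camera image is not mirrored, so a person on the left
    of the frame means the robot should turn left.
    """
    offset = center_x - IMG_WIDTH // 2
    if abs(offset) <= CENTER_TOLERANCE:
        return "forward"
    return "turn_left" if offset < 0 else "turn_right"


def next_action(center_x: int, tilt_done: bool, pan_scans_done: int) -> str:
    """Escalating search: track, then tilt scan, then two pan scans, then rotate."""
    if center_x != -1:
        return steer(center_x)
    if not tilt_done:
        return "tilt_scan"         # 40-degree camera tilt sweep
    if pan_scans_done < 2:
        return "pan_scan"          # one 180-degree pan sweep
    return "rotate_clockwise"      # keep detecting while spinning in place


boxes = [(10, 20, 30, 40), (200, 10, 80, 150)]
print(find_center(boxes))                             # 240
print(next_action(find_center(boxes), False, 0))      # turn_right
print(next_action(-1, True, 2))                       # rotate_clockwise
```

Keeping the search state (tilt done, number of pan scans) outside the decision function makes the escalation order easy to test without any hardware attached.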

Here is the final result of the MicroPython code in action! It could still be improved to make it faster and more robust.

-

5. A few last things

aXeleRate is still a work-in-progress project. I will be making changes from time to time, and if you find it useful and can contribute, PRs are very welcome! In the near future I will be making another video about inference on Raspberry Pi 4 with and without hardware acceleration. Another work-in-progress piece of hardware will make an appearance in that one. Which one? Can’t tell you, hush-hush!

Stay tuned for more articles from me and updates on the MARK Kickstarter campaign.

Add me on LinkedIn if you have any questions and subscribe to my YouTube channel to get notified about more interesting projects involving machine learning and robotics.

M.A.R.K. Custom DNN Model Training and Inference

Train your machine learning models in Google Colab and easily optimize them for hardware accelerated inference!

Dmitry

