Goal

The goal of the project is to get the robot to complete small tasks at home, whether through teleoperation, partial automation or full automation, with more and more tasks automated over time. It should also have a friendly interface that makes it easy to use.

It would be especially useful for elderly or disabled people who need help but are at risk of being infected with COVID-19, influenza or any other illness. The robot can reduce human contact and take over small tasks that are difficult if, for example, you find it hard to bend down or you use a wheelchair. Some example tasks could be filling water and food bowls for pets, using object detection to help find things at home, or maybe watering the flowers.

Open source

The plan is to make everything open source. However, I won't make it public while I'm still developing the robot, mainly for two reasons. First, I am going to change a lot during development, so I think it's better that I take the development costs myself instead of making people who want to build the project buy new parts every time I change something. Second, I am doing video streaming and teleoperation, and security holes in those parts of the software can have serious consequences, so I really want to address any security issues before I make the code public.

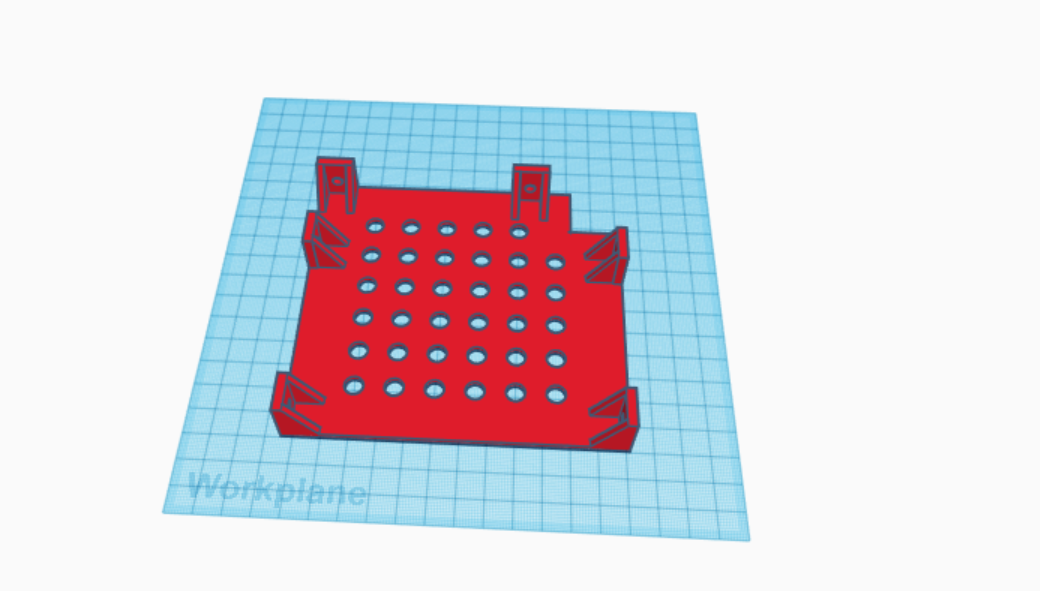

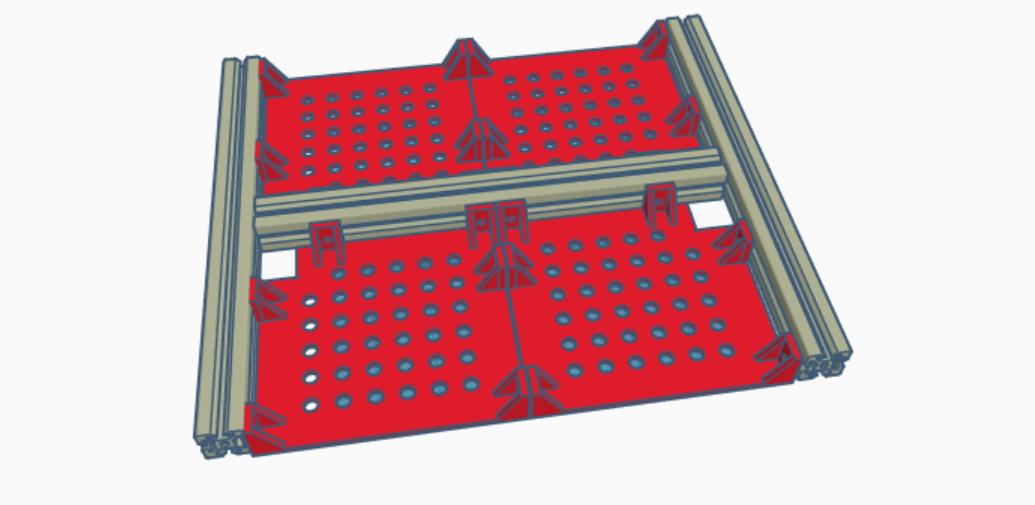

Design

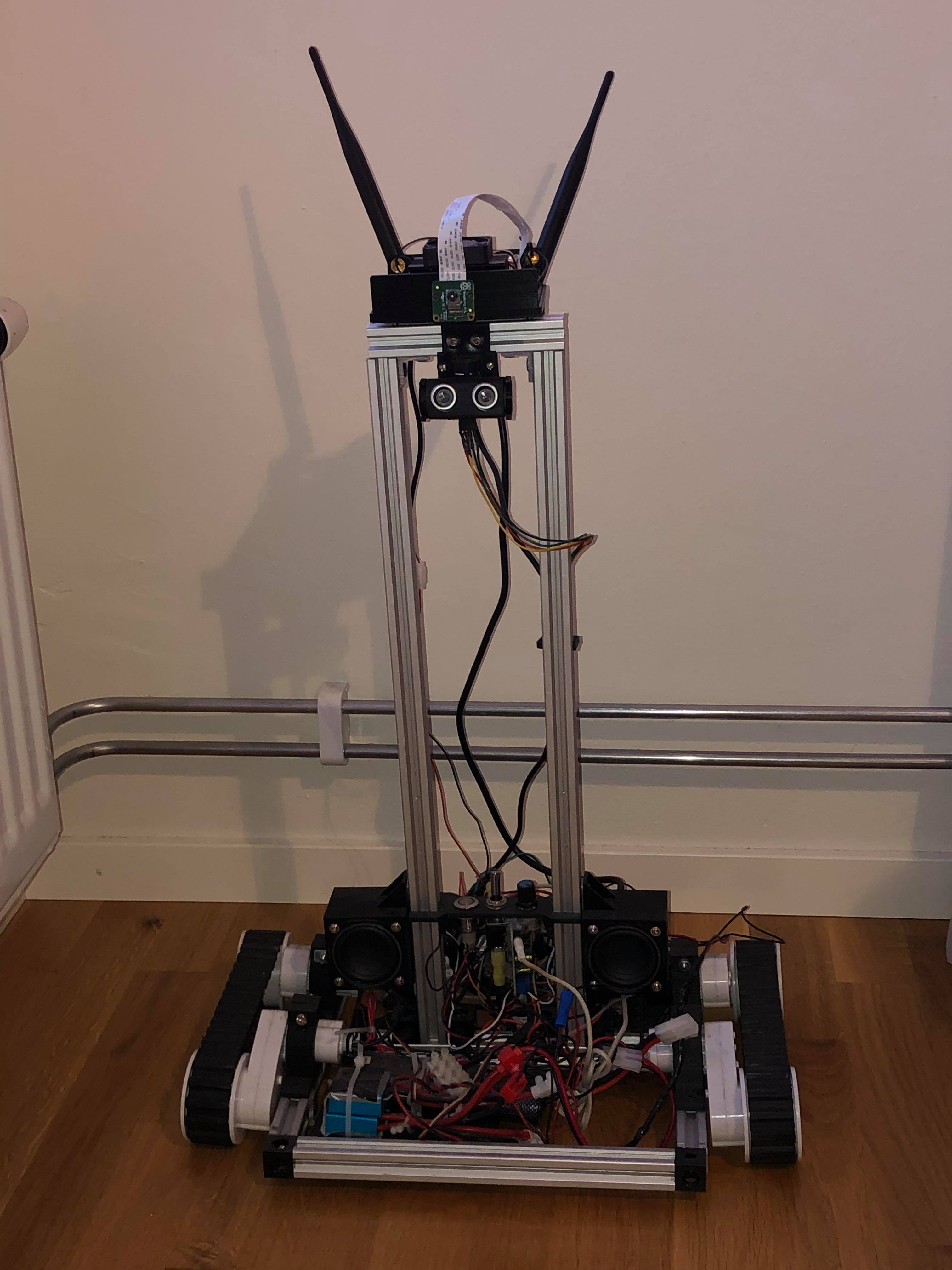

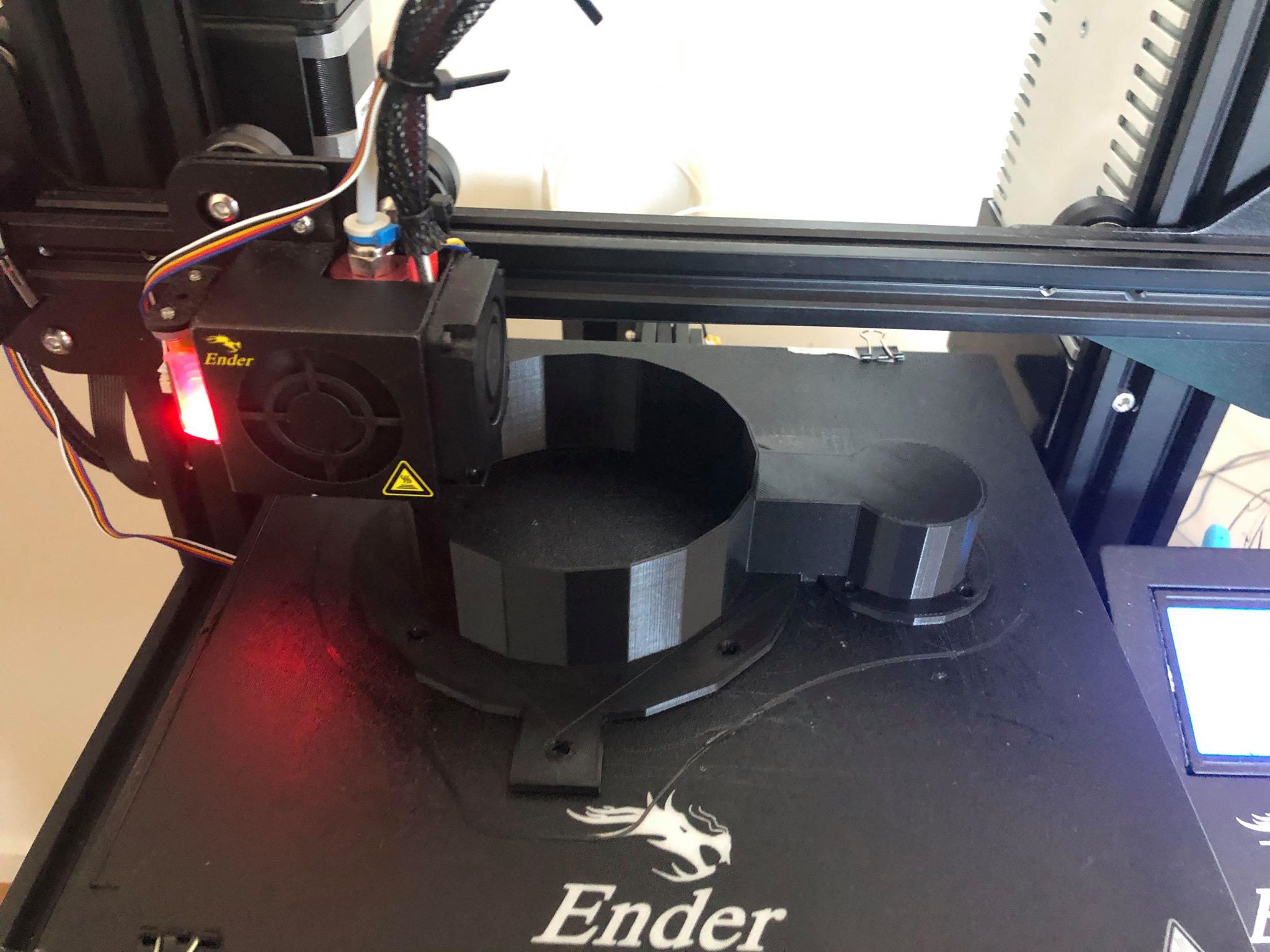

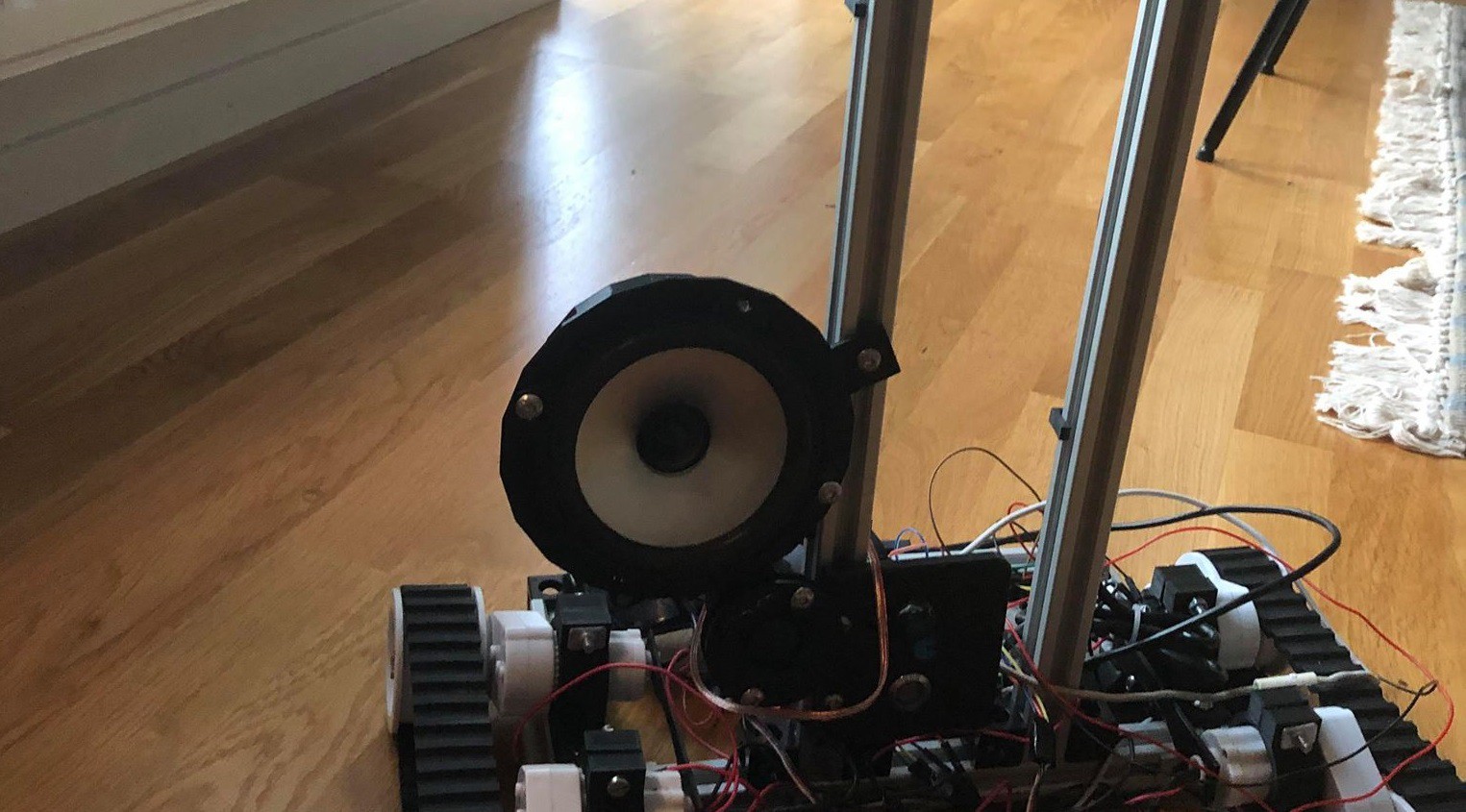

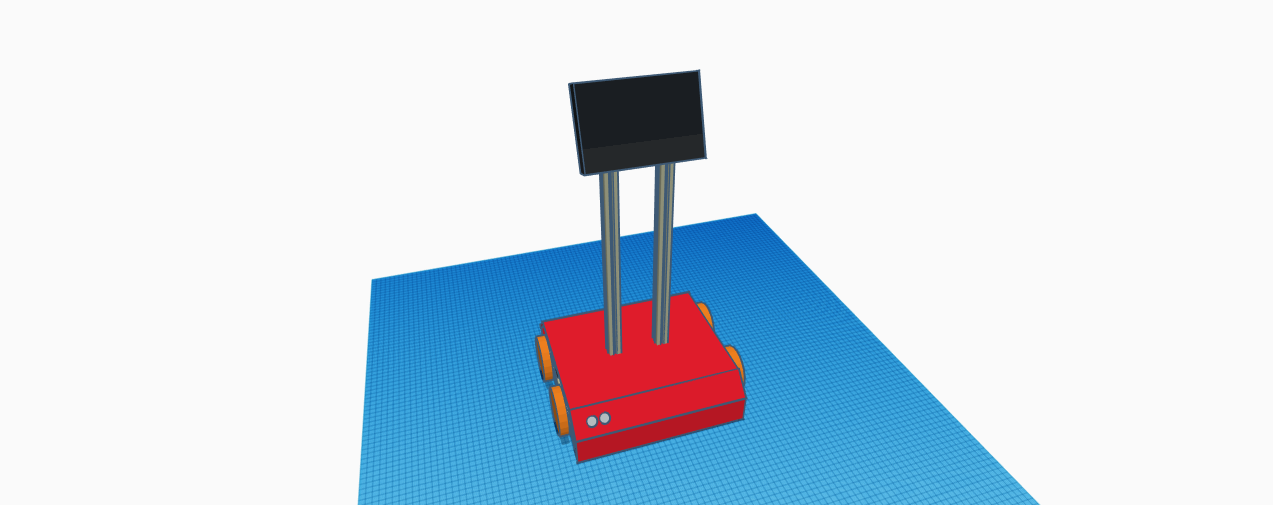

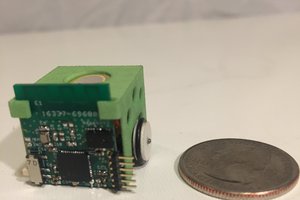

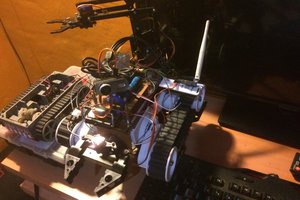

The design is kept quite simple to begin with so I can focus more on the software side. I will try to use cheap and easy-to-find components. Right now I have a simple base built on an existing robot platform, with 20x20 aluminium extrusions attached to it as a frame for mounting components. On the "head" there is a 7" touchscreen that displays an animated face and can also be used as an input device.

The goal is to first build a good base for the robot, with the possibility of adding modular attachments later.

Software

I'm using TensorFlow for object detection and facial recognition. A Python script reads sensor data from the Arduino and streams the live video to a server. Socket.IO is used to send real-time commands to the robot, so it can be remote controlled and can start different directives. The only directive I have right now is the "Follow" mode, which uses object detection to follow an object of choice.
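To make the idea behind the "Follow" mode a bit more concrete, here is a minimal sketch of how a detection could be turned into drive commands. It is not the robot's actual code. The `Detection` class, the `follow_command` function, the gains and the `target_height` value are all assumptions made up for illustration, and the bounding boxes are assumed to be normalized to the range 0..1, as many object detectors output them.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object (hypothetical type); box coordinates are normalized to 0..1."""
    label: str
    score: float
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def clamp(value, low=-1.0, high=1.0):
    """Limit a value to the range low..high."""
    return max(low, min(high, value))


def follow_command(detections, target_label, min_score=0.5,
                   target_height=0.6, turn_gain=1.0, drive_gain=1.0):
    """Return (forward, turn), each in -1..1, for the best matching detection.

    turn > 0 means the target is to the right of the image center.
    forward > 0 means the target looks smaller than target_height (too far away).
    """
    candidates = [d for d in detections
                  if d.label == target_label and d.score >= min_score]
    if not candidates:
        return 0.0, 0.0  # nothing to follow, so stop

    best = max(candidates, key=lambda d: d.score)
    center_x = (best.x_min + best.x_max) / 2
    height = best.y_max - best.y_min

    # Horizontal offset from the image center, scaled to -1..1.
    turn = clamp(turn_gain * (center_x - 0.5) * 2)
    # Box height is used as a rough stand-in for distance (an assumption).
    forward = clamp(drive_gain * (target_height - height))
    return forward, turn


if __name__ == "__main__":
    seen = [Detection("person", 0.9, 0.7, 0.4, 0.9, 0.6)]
    print(follow_command(seen, "person"))
```

The resulting pair could then be sent to the motor controller on every frame. Using the box height as a distance estimate is only a rough shortcut; a distance sensor would probably give steadier results.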

Mycroft AI is used as the voice assistant to give commands, play music, etc.

Here you can see an early test of the follow mode. Further improvements have been made since then, mostly to make the object recognition faster, and I will post more videos of that soon.

Erik Knutsson

William (Bill) Weiler

Rishaldy Prisly

Andreas Hoelldorfer

Dan Royer

How did you get that blue screen