-

Prior work - Using Brain Activity for Smart Home Control, Part 2

07/13/2020 at 14:00

In this entry we continue exploring prior work on controlling the smart home for locked-in patients using the most universal controller: the brain. Though this technology is still relatively new, and we might not use it in this particular project, a lot of exploration has already been done that is worth mentioning and thinking about for the long-term future.

In this Part 2, I will walk you through the materials we used as well as the open-source setup. We also touch on speech recognition and on locating the person within their apartment (which specific room they are in).

Design of the Smart Home

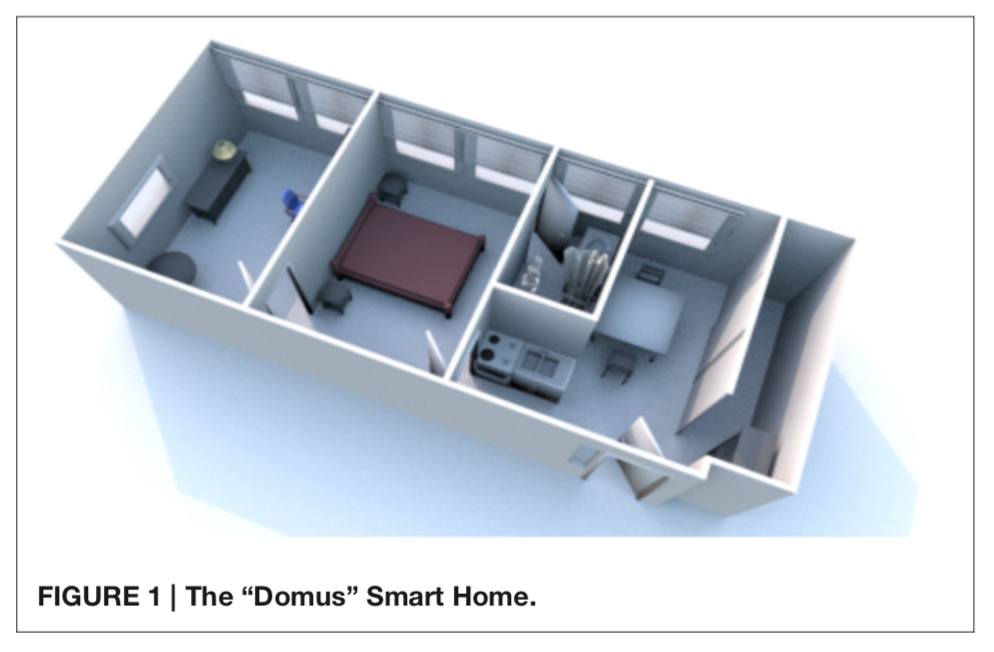

“Domus” Platform

We performed our study in the “Domus” smart home (http://domus.liglab.fr), which is part of the experimentation platform of the Laboratory of Informatics of Grenoble (Figure 1). “Domus” is a fully functional 40-square-meter flat with 4 rooms: a kitchen, a bedroom, a bathroom and a living room. The flat is equipped with 6 cameras and 7 microphones to record audio and video and to monitor experiments from a control room connected to “Domus.”


Appliances

The flat is equipped with a set of sensors and actuators using the KNX (Konnex) home automation protocol (https://knx.org/knx-en/index.php).

The sensors monitor data for hot and cold water consumption, temperature, CO2 levels, humidity, motion detection, electrical consumption and ambient lighting levels. Each room is also equipped with dimmable lights, roller shutters (plus curtains in the bedroom) and connected power plugs that can be remotely actuated.

For example, in this experiment, to turn the kettle on or off, we directly control its power plug rather than interacting with the kettle itself. Twenty-six LED strips are integrated into the ceilings of the kitchen, the bedroom and the living room.

The color and brightness of the strips can be set for an entire room or for each strip individually, using the DMX protocol (http://www.dmx-512.com/dmx-protocol/).

The bedroom is equipped with a UPnP enabled TV located in front of the bed that can be used to play or stream video files and pictures.

Architecture

The software architecture of “Domus” is based on the open-source home automation software openHAB (http://www.openhab.org). It allows all the appliances in the flat to be monitored and controlled from a single system that integrates the various protocols in use. It is based on an OSGi framework (https://www.osgi.org) containing a set of bundles that can be started, stopped or updated while the system is running, without stopping the other components.

The system contains a repository of items of different types (switch, number, color, string) that stores the description of each item and its current state (e.g., ON or OFF for a switch). There are also virtual items that exist only in the system or that serve as an abstract representation of an existing appliance function. Each item is bound to a specific OSGi binding bundle that implements the protocol of the appliance, allowing the system to synchronize the item state with the physical appliance state. All appliance functions in “Domus,” such as power plug control, setting the color of the LED strips or audio/video multimedia control, are represented as items in the system.

The event bus is also an important feature of openHAB. All the events generated by the OSGi bundles, like a change in an item state or the update of a bundle, are reported on the event bus. Sending a command to change the state of an item generates a new event on the bus. If the item is bound to a physical object, the binding bundle sends the command to the appliance using the appropriate protocol, changing its physical state (e.g., switching the light on). Commands can be sent to an item through HTTP requests made on the provided REST (Gallese et al., 1996) interface. A web server is also deployed in openHAB, allowing the user to create specific UIs with a simple description file listing the items to control or monitor (Figure 2).
The user can also create a set of rules and scripts that react to bus events and generate new commands. Persistence services are also implemented to store the history of item states in log files, databases, etc.
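To make the item/event bus/rule/binding interplay more concrete, here is a minimal in-memory sketch in Python. It is a toy model, not openHAB code: the classes, the item names `BCI_Classification` and `Kitchen_Kettle_Plug`, and the "binding" that merely logs commands are all hypothetical, and it simplifies openHAB by treating every command as an immediate state update.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, str], None]


class EventBus:
    """Toy publish/subscribe bus: every state change of an item is published here."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, item: str, handler: Handler) -> None:
        self._handlers[item].append(handler)

    def publish(self, item: str, state: str) -> None:
        # Copy the list so handlers may subscribe/publish while we iterate
        for handler in list(self._handlers[item]):
            handler(item, state)


class ItemRegistry:
    """Keeps each item's current state and reports every command on the bus."""

    def __init__(self, bus: EventBus) -> None:
        self.bus = bus
        self.states: dict[str, str] = {}

    def send_command(self, item: str, command: str) -> None:
        self.states[item] = command  # simplification: command == new state
        self.bus.publish(item, command)


bus = EventBus()
items = ItemRegistry(bus)
sent_to_hardware = []

# Hypothetical "binding": forwards commands on a physical item to the appliance (here it just logs them)
bus.subscribe("Kitchen_Kettle_Plug", lambda item, state: sent_to_hardware.append((item, state)))


# Hypothetical "rule": reacts to the virtual item by toggling the kettle's power plug
def kettle_rule(item: str, state: str) -> None:
    if state == "CUP":
        current = items.states.get("Kitchen_Kettle_Plug", "OFF")
        items.send_command("Kitchen_Kettle_Plug", "OFF" if current == "ON" else "ON")


bus.subscribe("BCI_Classification", kettle_rule)
items.send_command("BCI_Classification", "CUP")
print(sent_to_hardware)
```

The point of the design is decoupling: the component that produces a classification only changes one virtual item, and everything downstream (rules, bindings, persistence) reacts to events on the bus.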

Control capabilities

A virtual item was created in the repository to represent the classification produced by the brain-computer interface (BCI) and speech recognition systems. A set of rules was also defined to be triggered when this virtual item's state changed. Depending on the new state and the room where the user was currently located, the rule sent a command to the specific items controlling the physical appliances associated with each classification outcome (TV, kettle power plug, etc.). To control the virtual item, the BCI/speech programs communicated with openHAB using the provided REST API. After a classification was performed, a single HTTP POST request containing the classification outcome as a string was sent to the REST interface, changing the state of the virtual item, triggering the rule and thereby allowing the user to control the desired appliance using their brain activity only.
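A sketch of the classifier-side request, using only Python's standard library. It assumes openHAB is reachable on its default HTTP port 8080 and accepts a plain-text command via `POST /rest/items/<item>`; the item name `BCI_Classification`, the outcome strings, and the room-to-item routing table are hypothetical. In “Domus” the routing was done by an openHAB rule, so the `target_item` function only illustrates the logic, not where it ran.

```python
import urllib.request

OPENHAB_BASE_URL = "http://localhost:8080"  # assumed host and default port
VIRTUAL_ITEM = "BCI_Classification"         # hypothetical name of the virtual item


def build_outcome_request(outcome: str, base_url: str = OPENHAB_BASE_URL,
                          item: str = VIRTUAL_ITEM) -> urllib.request.Request:
    """Build (without sending) the POST that sends the classifier outcome to the virtual item."""
    return urllib.request.Request(
        url=f"{base_url}/rest/items/{item}",
        data=outcome.encode("utf-8"),
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


# Room-dependent routing a rule could apply (all item names hypothetical)
ROUTES = {
    ("CUP", "kitchen"): "Kitchen_Kettle_Plug",
    ("LAMP", "kitchen"): "Kitchen_Lights",
    ("LAMP", "bedroom"): "Bedroom_Lights",
    ("BLINDS", "bedroom"): "Bedroom_Shutters",
    ("TV", "bedroom"): "Bedroom_TV",
}


def target_item(outcome: str, room: str):
    """Return the physical item to toggle, or None if the concept has no meaning in that room."""
    return ROUTES.get((outcome, room))


request = build_outcome_request("LAMP")
print(request.get_method(), request.full_url, request.data)
# urllib.request.urlopen(request) would deliver it to a running openHAB instance
```

Keying the routing on (outcome, room) is what lets one "lamp" concept switch whichever lights are in the user's current room.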


Figure 2. Visual stimulations used for each concept for BCI control. The cup toggles the kettle on or off, the lamp toggles the lights on or off, the blinds raise or lower the blinds, and the TV toggles the TV on or off.

-

The Karate Kid Analogy

07/13/2020 at 01:03

In my previous two log entries, I explored two concepts: IMUs and machine learning. In this log, I will try to put the two together and discuss how they apply to this project: designing a universal remote control for people with cerebral palsy.

PAINTING AT UCPLA

My first introduction to this project was through the Q&A session between Hackaday and UCPLA. Through this video and a second follow-up Q&A session, we learned about one of the great programs UCPLA offers its community: fine arts. Before watching these videos, I had no idea what range of motion individuals with cerebral palsy have, and I watched with curiosity how different individuals overcame their limitations in order to paint. Watching individuals paint with their heads, their feet, and their hands was inspiring. It also sparked a memory for me.

*Image taken from Hackaday/UCPLA Q&A video.

WAX ON, WAX OFF

For some reason, I thought about the scenes from the Karate Kid movie where part of Mr. Miyagi's karate training was asking his pupil, Daniel, to do repetitive chores. Daniel grew frustrated doing physical chores and didn't realize the lesson behind waxing Mr. Miyagi's car, painting his fence, or sanding his floor. The real purpose of these chores was to teach repetitive motions that were useful for self-defence.

*Image source

WHAT? HOW IS THIS RELEVANT TO THIS PROJECT?

My thought was: UCPLA is running a painting program to encourage their community to do physical activity while gaining individuality and purpose. Painting motions, such as vertical, horizontal, or circular paintbrush strokes, are distinct repetitive motions that participants are practicing. Can we find a way to recognize each person's unique motions and use them for something besides painting, such as controlling smart devices?

Ideally, we want to find a solution that seamlessly integrates with UCPLA's existing programs. One idea was to use IMUs/EMGs to digitally record their painting sessions and through machine learning, classify different gestures. Are there other fun, engaging, physical activities they offer? Music? Video games? Household chores!?!?!?!

-

Can we implement machine learning?

07/12/2020 at 01:24

Last meeting, machine learning was brought up as a potential option for this project. One key takeaway for designing for individuals with CP is that everyone is unique and has a different range of motion. The question was raised whether it was feasible to train a model to learn the unique gestures and motions of this population and use those gestures as inputs for our universal remote. The questions that were brought up were: 1) How do we implement it, and 2) How much data do we need to collect?

GESTURE RECOGNITION STUDIES

Gesture recognition and control is an active field of research with useful applications in human-computer interaction. Studies vary in their input signals, gestures, and classifiers. I looked at 5 studies that were IMU- and/or EMG-based and summarized them in the tables below.

| Paper | Number of Features | Input | Placement | Gestures | Population |
|---|---|---|---|---|---|
| Jiang (2017) | 8 | 4 EMG + IMU | Forearm | 8 hand (static) | 10 |
| Kang (2018) | 27 | 3 IMUs | Forearms + Head | 9 full body (static) | 10 |
| Mummadi (2018) | 10 | 5 IMU | Fingertips | 22 hand (static) | 57 |
| Kundu (2017) | 15 | IMU + 2 EMG | Forearm + Hand | 6 static + 1 dynamic | 5 |
| Wu (2016) | 5 | 3 IMU | Forearm, upper arm, body | 3 static + 9 dynamic | 10 |

| Paper | Sets per Gesture | Total Sets | Classifier | Validation | Accuracy |
|---|---|---|---|---|---|
| Jiang (2017) | 30 | 300 | LDA | K-fold cross validation | 92.80% |
| Kang (2018) | 20 | 200 | Decision trees (+bootstrap) | Experimental | 88.01% |
| Mummadi (2018) | 5 | 285 | Decision trees (random forest) | K-fold cross validation | 92.95% |
| Kundu (2017) | 20 | 100 | SVM | K-fold cross validation / Experimental | 94% / 90.5% |
| Wu (2016) | None | None | None | Experimental | 91.86% |

Number of Features: The input dimensions of the training model. This could be raw data (accelerometer signals) or processed data (pitch/roll/yaw).
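To illustrate the raw-versus-processed distinction, here is a minimal sketch that turns a window of raw 3-axis accelerometer samples into a fixed-length feature vector: per-axis mean and standard deviation, plus pitch and roll. This feature set is our own illustrative choice, not one taken from the five studies. Note that yaw cannot be recovered from an accelerometer alone; it needs a gyroscope or magnetometer.

```python
import math
import statistics


def accel_features(window):
    """window: list of (ax, ay, az) accelerometer samples (in g) for one gesture.

    Returns 8 features: mean and standard deviation per axis, then pitch and
    roll in degrees estimated from the mean gravity direction.
    """
    xs, ys, zs = zip(*window)
    means = [statistics.fmean(axis) for axis in (xs, ys, zs)]
    stds = [statistics.pstdev(axis) for axis in (xs, ys, zs)]
    mx, my, mz = means
    # Tilt estimates are only meaningful while the sensor is roughly static
    pitch = math.degrees(math.atan2(-mx, math.hypot(my, mz)))
    roll = math.degrees(math.atan2(my, mz))
    return means + stds + [pitch, roll]


# A sensor lying flat and still: gravity entirely on the z axis
flat = [(0.0, 0.0, 1.0)] * 20
print(accel_features(flat))
```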

Input: For the inputs, I wanted to focus only on IMU and electrode inputs. Some other studies use cameras, which would make a low-cost, open-source solution less practical.

Placement: Most studies focus on forearm movements. The study by Kang et al. recognized gestures during stationary cycling, which required an IMU on the forehead.

Gestures: Most of these gestures were static hand gestures.

Population: All of these studies were done on able-bodied individuals. For our potential application, we would expect more variability in our data.

Sets per gesture: Each participant was asked to repeat all the gestures numerous times for data logging. As an example, Jiang et al. asked their participants to perform 30 sets of 8 gestures, for a total of 240 sets. These sets were collected over 3 training sessions to vary conditions.

Total sets per gesture: The data from all participants were aggregated for each gesture, so the total equals sets per gesture × number of participants (e.g., 30 × 10 = 300 for Jiang et al.). Total sets ranged from 100 to 300.

Classifier: There is a lot of variability in the models. Most of these classifiers are supervised machine learning models except for the last study, which uses a custom algorithm.

Validation: Most training models were validated using K-fold cross validation. Using the study by Mummadi et al. as an example, they had 5 participants. For their validation, they would aggregate data from 4 participants for training the model and use the 5th participant's data for model validation. This process would be repeated 5 times.
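The scheme described above (train on all participants but one, validate on the one left out, repeat once per participant) is commonly called leave-one-participant-out cross-validation, a grouped variant of K-fold. A minimal sketch of the fold generation, assuming samples are stored as hypothetical `(participant_id, features, label)` tuples:

```python
from collections import defaultdict


def leave_one_participant_out(samples):
    """Yield (held_out_participant, train, test) splits.

    samples: list of (participant_id, features, label) tuples.
    Each participant's data is held out exactly once, so the model is always
    validated on a person it never saw during training.
    """
    by_participant = defaultdict(list)
    for sample in samples:
        by_participant[sample[0]].append(sample)
    for held_out in sorted(by_participant):
        test = by_participant[held_out]
        train = [s for pid, group in by_participant.items() if pid != held_out for s in group]
        yield held_out, train, test


# Toy data: 5 participants x 2 labelled samples each (feature values are placeholders)
data = [(p, [float(i)], "gesture_a" if i == 0 else "gesture_b") for p in range(1, 6) for i in range(2)]
folds = list(leave_one_participant_out(data))
for held_out, train, test in folds:
    print(f"fold {held_out}: train on {len(train)} samples, test on {len(test)}")
```

Grouping by participant matters here: splitting individual samples at random would let the same person appear in both training and validation data, which tends to overstate accuracy for a new user.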

The other method is experimental validation, which tests the trained model on a newly collected dataset. One relevant study was Kundu et al., who validated their model by using it to control a wheelchair. They also compared operating the wheelchair with gesture control vs. a conventional joystick.

Accuracy: Most of these studies showed an accuracy of about 90%. Within each study, accuracy for individual gestures varied from 70% to 100%. Most of these studies were proofs of concept; to improve accuracy, the authors would need to collect more data and/or choose more distinct gestures.

HOW DO WE IMPLEMENT IT?

Most of these studies used wearable IoT-based systems, which is a relevant platform for our project. Implementation can be broken down into a few steps:

- Design a wearable device to collect motion data

- Collect motion data through training sessions (This data is usually labelled for supervised learning)

- Using the collected motion data, train and validate the model

- Process trained model

- Upload firmware with custom trained model data to wearable device for use
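The train-and-validate steps can be sketched end to end in plain Python. To keep it dependency-free, this sketch swaps in a nearest-centroid classifier rather than the LDA, SVM, or decision-tree models from the studies, and it uses synthetic data: the gesture names and mean feature vectors are hypothetical stand-ins for labelled recordings from a wearable.

```python
import math
import random
from collections import defaultdict


def train_nearest_centroid(samples):
    """Supervised training step: average the labelled feature vectors of each gesture."""
    sums = {}
    counts = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}


def predict(model, features):
    """Assign the gesture whose centroid is closest (Euclidean distance)."""
    return min(model, key=lambda label: math.dist(model[label], features))


def accuracy(model, samples):
    correct = sum(predict(model, f) == label for f, label in samples)
    return correct / len(samples)


# Hypothetical gestures, each a noisy cluster around a made-up mean feature vector
GESTURE_MEANS = {"wave": [1.0, 0.0, 0.0], "tilt": [0.0, 1.0, 0.0], "circle": [0.0, 0.0, 1.0]}
rng = random.Random(0)


def collect_sets(sets_per_gesture):
    """Stand-in for a labelled data-collection session (synthetic data, not real recordings)."""
    return [([m + rng.gauss(0.0, 0.2) for m in mean], gesture)
            for gesture, mean in GESTURE_MEANS.items()
            for _ in range(sets_per_gesture)]


train_set = collect_sets(40)
validation_set = collect_sets(10)
model = train_nearest_centroid(train_set)
print(f"validation accuracy: {accuracy(model, validation_set):.2f}")
# The centroids in `model` are small enough that they could be embedded as constants in firmware
```

A nearest-centroid model is far simpler than the classifiers in the table, but its trained state is just one vector per gesture, which makes the "process the model and upload it to the wearable" steps easy to picture.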

Within the coming weeks, I will try to implement this with a small dataset that I collect, to get a better idea of how feasible it is to continue with this idea.

WHAT WOULD WE NEED TO CHANGE FOR USERS WITH CEREBRAL PALSY?

The studies summarized were all conducted with healthy, able-bodied participants. For individuals with impaired motor control, the number of gestures they can perform may need to be reduced. Their data will also be less consistent. To account for this, we may need to collect more data and be wary of collecting "bad" data. Lastly, most of the studies used an aggregated dataset of all participants for training and validation. This may not be possible with the CP population, given how unique each person's movements are. It is also probably not a good idea to train on able-bodied data and apply the model to a CP population. If we cannot aggregate data, we would need to collect more data from each user and train an independent model for each person.

HOW MUCH DATA DO WE NEED TO COLLECT?

Across the 5 studies, the smallest dataset for a model was 100 sets × 7 gestures (Kundu et al.). If we assume we cannot aggregate user data, each participant would need to perform 700 actions to match that study's dataset. This is a lot of data to collect, especially when asking someone with CP to do it. It would help if we could integrate these training sessions with physical therapy sessions. Another approach could be to turn these training sessions into video gaming sessions, where the gestures are part of fun, task-based games.
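The 700-action figure can be checked against the summary tables. Their "Total Sets" column matches sets per gesture × participants for every study listed, so matching a study's dataset with a single user means multiplying that total by the number of gestures. A small worked calculation, assuming totals were pooled across participants in exactly this way (Wu et al. is omitted because no training sets are reported):

```python
# study: (sets per gesture per participant, participants, gestures), copied from the tables
STUDIES = {
    "Jiang (2017)": (30, 10, 8),
    "Kang (2018)": (20, 10, 9),
    "Mummadi (2018)": (5, 57, 22),
    "Kundu (2017)": (20, 5, 7),  # 6 static + 1 dynamic gestures
}


def total_sets_per_gesture(sets: int, participants: int) -> int:
    """Aggregated sets per gesture when every participant's data is pooled."""
    return sets * participants


def single_user_actions(sets: int, participants: int, gestures: int) -> int:
    """Actions one person would perform to match a study's dataset on their own."""
    return total_sets_per_gesture(sets, participants) * gestures


budgets = {name: single_user_actions(*spec) for name, spec in STUDIES.items()}
smallest = min(budgets, key=budgets.get)
for name, actions in budgets.items():
    print(f"{name}: {actions} actions per user")
print("smallest:", smallest, budgets[smallest])
```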

As an initial feasibility test, I would reduce the number of gestures to 3 and perform 50 sets of each and see what the accuracy would be. I would then try to add a 4th gesture of 50 sets and retrain, to see the effect on the model and go from there.

-

The Complexity of a Simple Task

07/11/2020 at 22:13

Build A Universal Remote

Sounds like a simple project, right? For all intents and purposes, it is. Universal remotes have been around for at least 65 years. At first the task was simple, as there were only a few devices you could or would need to control. In our day and age, the sky is the limit. Countless devices have some sort of remote or wireless control, and more are entering the picture every day. For the typical user, the control interface is a smartphone or smart speaker. But what about the rest of us?

Build A Universally Usable Universal Remote (U3R?) in 2020

So what about people who can't use a smartphone?

Cerebral palsy is one of the most common movement disorders in the world, drastically limiting a person's ability to interact with the everyday things around them. Many people with movement disorders cannot operate a smartphone, let alone a simple TV remote or most other remote controls. "There's an app for that" isn't always an option here.

So where do we begin? What would a U3R look like?

Good question! That is exactly what we are trying to figure out!

And So The Complexity Begins

The complexity begins when you dive into the factors that drive this design choice.

- What part of the user's body is working well enough to be useful?
  - Hand, Foot, Head, Knee, Torso, Mouth, Eye, Voice, etc.
- What kind of peripherals can they interact with?
  - Mouse, Keyboard, Joystick, Buttons, Microphone, Electrodes, Motion Sensor, Camera (Eye, hand, body, gesture tracking), Touchscreen, Trackpad, etc.

These can be further broken down into subcategories and other variables like size, sensitivity, durability, repairability, wearability, portability, customizability, cost, power consumption, line-of-sight, distance, effort, and more.

How do you make a good design choice when there are so many variables?

Well, if you had several years and a large budget, you could do a fully comprehensive study with a large enough pool of test subjects to statistically determine the best combination of features to meet the needs of the majority of users.

But we have less than 2 months and a limited budget!

What we need is a Project Overview that fits our time frame and budget.

Team-Based Project Planning

- Project Overview
  - Identifies the problem or opportunity
  - Identifies assumptions and risks
  - Sets the scope of the project
  - Establishes project goals
  - Lists important objectives
  - Determines the approach or methodology
- Work Breakdown
  - Keeps the team focused
  - Organizes objectives with activities
  - Prioritizes activities
- Activity assignments
  - Provides clarity regarding assignments, the project's purpose, resources, and deadlines
  - Activities should match the skills of the team member
  - Establishes milestones and progress reporting
- Putting the plan into action (Team Leadership)
  - Provide leadership and additional resources
  - Assist with decision making and problem solving
  - Monitor the critical path and team members' performance
- Project closeout
  - Recognize team members
  - Write a postmortem report
    - Project status
    - Lessons learned


-

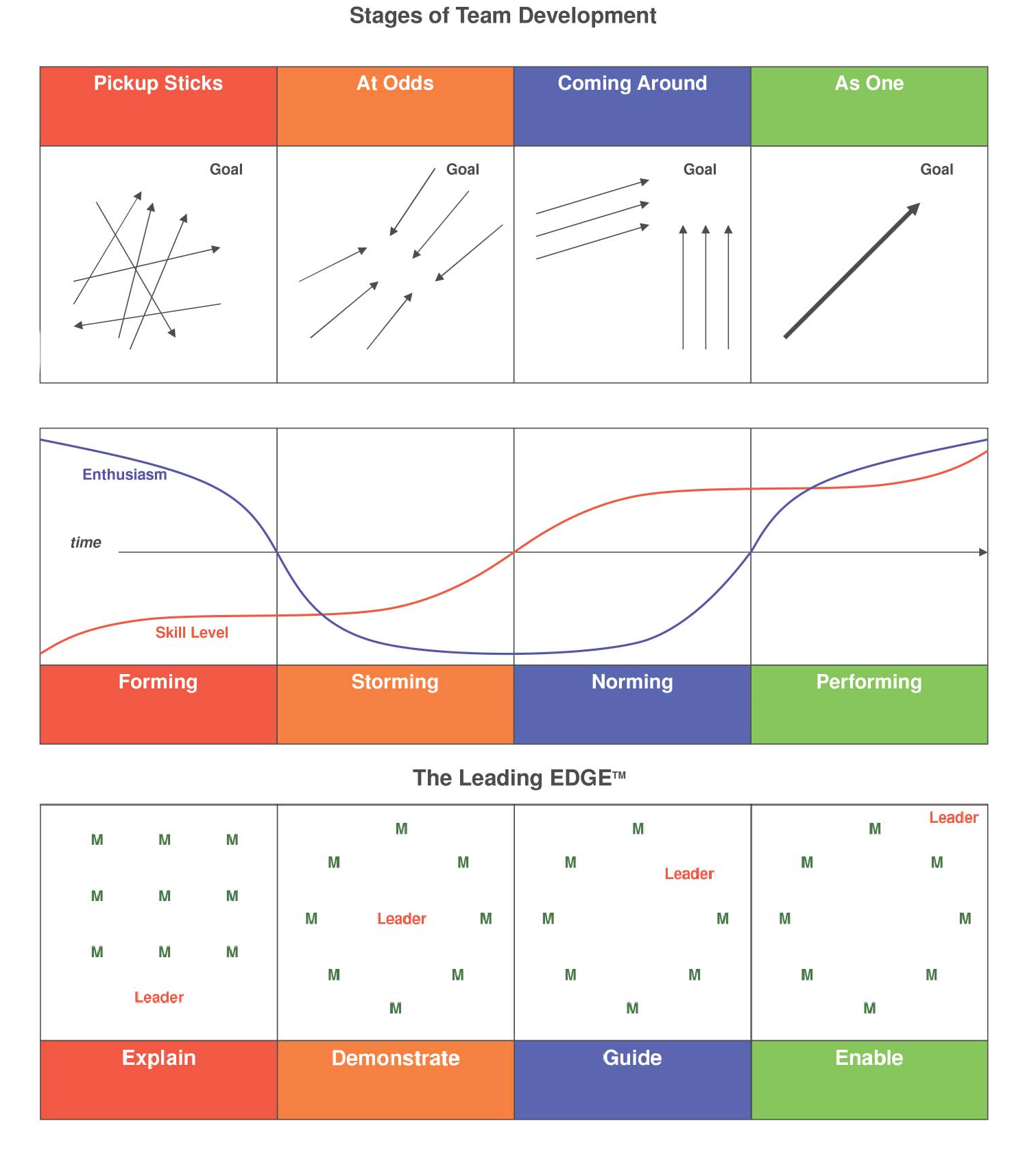

Team Development

07/11/2020 at 20:56

The first part of forming a team

Every team starts with individuals coming together to achieve a common goal. Everyone knows this, and most of us have been part of many different teams throughout our lives. Sometimes you get to pick your own team members, but often the team is chosen for us. This was certainly true for the UCPLA Dream Team. The fine folks at Supplyframe DesignLab interviewed several individuals and teams and very carefully and deliberately formed a Dream Team to crush each project.

A Dream Team

If you have been following our progress from the start, about two weeks ago, it is very apparent that our team is not, well, performing like a "Dream Team".

What's going on here?! After much careful planning and getting a group of enthusiastic individuals together with a common goal, this shouldn't be a problem.

Well, there are several reasons why this could be the case. It isn't always easy to identify what is holding a team back. However, I believe that every team follows a pattern or cycle of team development. I first learned about this idea during a BSA leadership training course known as Woodbadge (a multi-day course on skills and methods for advanced leadership).

Many of the methods I learned in Woodbadge are also taught at many other organizations and companies around the world.

For this log, I mainly want to focus on the four stages of team development and how they relate to our Dream Team's development.

The following content is taken directly from the Woodbadge workbook I used.


- FORMING (“Pickup Sticks”): Teams often begin with high enthusiasm, and high, unrealistic expectations. These expectations are accompanied by some anxiety about how they will fit in, how much they can trust others, and what demands will be placed on them. Team members are also unclear about norms, roles, goals, and timelines. In this stage, there is high dependence on the leadership figure for purpose and direction. Behavior is usually tentative and polite. The major issues are personal well-being, acceptance, and trust.

In our case, an added sense of unrealistic expectations was unintentionally instigated by calling us a "Dream Team". While we had some direction, we were unclear about how to proceed with a project that appears simple but became increasingly complex the more we learned about the end user (more on this in my next log). Also, in the first week of the project it became apparent that our team was lacking the self-driven leadership needed to meet expectations.

- STORMING (“At Odds”): As the team gets some experience under its belt, there is a dip in morale as team members experience a discrepancy between their initial expectations and reality. The difficulties in accomplishing the task and in working together lead to confusion and frustration, as well as a growing dissatisfaction with dependence upon the leadership figure. Negative reactions to each other develop, subgroups form, which polarize the team. The breakdown of communication and the inability to problem-solve result in lowered trust. The primary issues in this stage concern power, control, and conflict.

In our case, we have a deadline to meet, but we were reluctant to make design choices without gathering more information about our users. Also, every team member has a different product idea, and we couldn't decide which path to take. I feel like we are currently in this stage.

- NORMING (“Coming Around”): As the issues encountered in the second stage are addressed and resolved, morale begins to rise. Task accomplishment and technical skills increase, which contributes to a positive, even euphoric, feeling. There is increased clarity and commitment to purpose, values, norms, roles, and goals. Trust and cohesion grow as communication becomes more open and task oriented. There is a willingness to share responsibility and control. Team members value the differences among themselves. The team starts thinking in terms of “we” rather than “I”. Because the newly developed feelings of trust and cohesion are fragile, team members tend to avoid conflict for fear of losing the positive climate. This reluctance to deal with conflict can slow progress and lead to less effective decisions. Issues at this stage concern the sharing of control and avoidance of conflict.

- PERFORMING (“As One”): At this stage, both productivity and morale are high, and they reinforce one another. There is a sense of pride and excitement in being part of a high-performing team. The primary focus is on performance. Purpose, roles and goals are clear. Standards are high, and there is a commitment to not only meeting standards, but to continuous improvement. Team members are confident in their ability to perform and overcome obstacles. They are proud of their work and enjoy working together. Communication is open and leadership is shared. Mutual respect and trust are the norms. Issues include continued refinements and growth.

The time each team spends at each stage varies greatly depending on many factors, like team size, severity of conflict, or other team dynamics. It is also common for teams to repeat all or part of the cycle as new team members join or new obstacles arise.

I'll be sure to add logs as we progress as a team.


-

Prior work - Using Brain Activity for Smart Home Control, Part 1

07/10/2020 at 17:43

Prior to collaborating on this project's challenge, I had been working on using Brain-Computer Interfaces for smart home control for people with locked-in syndrome.

Smart homes have been an active area of research; however, despite considerable investment, they are not yet a reality for end users. Moreover, there are still accessibility challenges for the elderly and the disabled, two of the main potential target groups for home automation. In this exploratory study, we designed a control mechanism for smart homes based on Brain-Computer Interfaces (BCI) and applied it in the smart home platform in order to evaluate users' potential interest in BCIs at home. We enabled users to control lighting, a TV set, a coffee machine and the shutters of the smart home. We evaluated performance (accuracy, interaction time), usability and feasibility (USE questionnaire) with 12 healthy subjects and 2 disabled subjects, and report the results.

This is ongoing research with 2 published scientific contributions so far, if you want to learn more.

This entry will be divided into several parts. I will share more details about the research as well as the open-source tools we used in this work.

Part 1.

Imagine you could control everything with your mind. Brain-Computer Interfaces (BCIs) make this possible by measuring your brain activity and allowing you to issue commands to a computer system by modulating that activity. BCIs can be used in many applications: medical applications, to control wheelchairs or prosthetics (Wolpaw et al., 2002) or to enable disabled people to communicate and write text (Yin et al., 2013); and general-public applications, to control toys (Kosmyna et al., 2014), video games (Bos et al., 2010) or computer applications in general. One of the more recent fields of application for BCIs is smart homes and the control of their appliances. Smart homes allow a household to be automated and adapted to its inhabitants. In the state of the art of BCIs applied to smart home control, only younger healthy subjects are considered, and the smart home is often a prototype (a single room or appliance). BCIs have never been applied and evaluated with potential end users in realistic conditions. However, smart homes are of interest to disabled people and to elderly people with mobility impairments, as they could allow them to operate appliances within the house autonomously (Grill-Spector, 2003; Edlinger et al., 2009). Studies with disabled users are just as rare as studies in realistic smart homes (with healthy subjects or otherwise). However, the expectations and needs of healthy subjects are biased, as they cannot fully conceive of the difficulties disabled people face and thus of their needs, so performing experiments with both healthy and disabled subjects is of interest for smart home research.

Smart Homes and BCIs

There are several works that use BCIs in smart home environments. A good part of them take place in virtual smart home environments as opposed to a real or prototyped smart home. This can be problematic, as it is difficult to recreate in situ conditions in vitro (Kjeldskov and Skov, 2007). There are several types of BCI control:

- Navigation (virtual reality only): The BCI issues continuous or discrete commands that make the user's avatar move through the virtual home. Motor imagery (MI), where users imagine moving one or more limbs to generate continuous control, is the most commonly used BCI paradigm for navigation.

- Trigger/Toggle: The BCI issues a one-off command that triggers a particular action or toggles the state of an object in the house (e.g., looking at a blinking LED on the wall to turn the light on). Many paradigms can be used for such actions:

  - P300: The user looks at successively flashing items to choose from. When the desired item flashes, the brain produces a characteristic signal (P300) that can be detected.

  - Steady-State Visually Evoked Potentials (SSVEP): The user looks at targets flickering at different frequencies. We can detect which target the user is looking at and trigger the corresponding action.

  - Facial Expression BCI: The user's facial expressions are detected through their EEG signals.
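As an illustration of the SSVEP principle only (the work described here uses mental imagery, not SSVEP), the sketch below picks the flicker frequency whose spectral bin carries the most power, using the Goertzel algorithm to evaluate just the candidate bins. The sampling rate, target frequencies and synthetic single-channel "EEG" are assumptions; practical SSVEP systems filter multi-channel EEG and often use more robust detectors such as canonical correlation analysis (CCA).

```python
import math


def goertzel_power(signal, fs, freq):
    """Power of `signal` at `freq` Hz via the Goertzel algorithm (a single DFT bin)."""
    n = len(signal)
    k = round(n * freq / fs)  # nearest DFT bin
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in signal:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2


def detect_ssvep_target(eeg, fs, target_freqs):
    """Return the flicker frequency whose bin carries the most power."""
    return max(target_freqs, key=lambda f: goertzel_power(eeg, fs, f))


FS = 256                           # assumed sampling rate (Hz)
TARGETS = [8.0, 10.0, 12.0, 15.0]  # hypothetical flicker frequencies (Hz)
t = [n / FS for n in range(2 * FS)]  # 2-second analysis window
# Synthetic signal: a 12 Hz response plus 50 Hz mains interference
eeg = [math.sin(2 * math.pi * 12.0 * x) + 0.3 * math.sin(2 * math.pi * 50.0 * x) for x in t]
print(detect_ssvep_target(eeg, FS, TARGETS))
```

Choosing the window length so each target falls exactly on a DFT bin (here 2 s at 256 Hz gives 0.5 Hz resolution) keeps the candidate bins from leaking into one another.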

In this work we use only trigger-type controls. Most BCIs applied to smart homes use paradigms (P300, SSVEP) that require a display with flashing targets that the user must look at. Here, by contrast, we use mental imagery, which requires no external stimuli. Mental imagery has never been applied to any practical control application, let alone smart homes. With conceptual imagery, the semantics of the interaction are compatible with the semantics of the task. The principle is that users imagine the concept of a lamp (e.g., by visualizing a lamp in their mind), and the BCI recognizes the concept and triggers a command that turns on the light. No movement or voice command is needed from the person, only their brain activity.

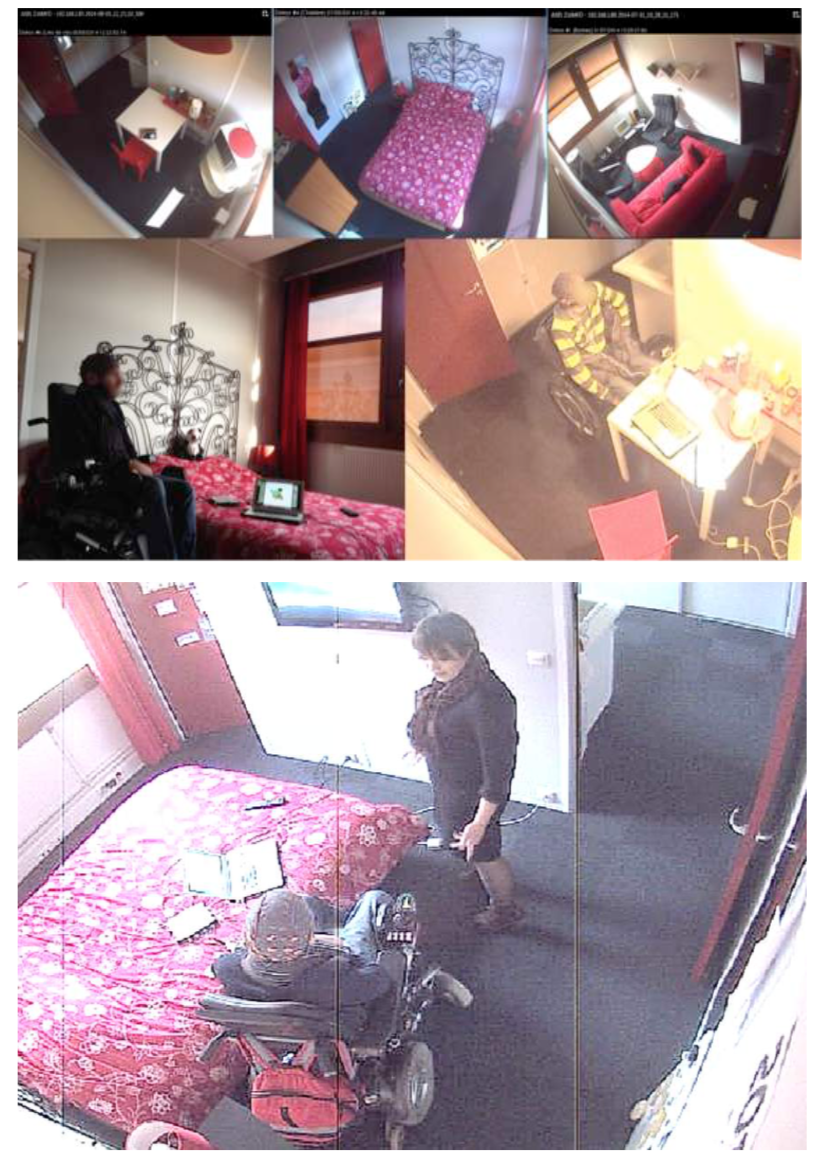


Image of the participant in the wheelchair performing a test in the smart home. The image quality is reduced due to the cameras currently installed in the smart home. Please contact nkosmyna AT mit DOT edu if you want to re-use the image.

All the citations will be provided in Part 3 of this log post, but if you want to check them out now, please go here to get an open-access, free copy of the full, in-depth article of mine!

-

A previous solution

07/07/2020 at 17:26

Before joining the Hackaday Prize Dream Team, I had already built a simple universal TV remote to assist those with limited dexterity.

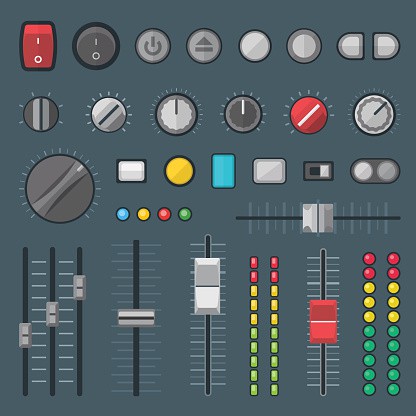


You can read all about it here: https://rubenfixit.com/2016/08/20/megabutton-universal-remote-mk-ii/

Essentially, I created a custom box with recessed arcade buttons and wired it to an existing universal remote. While this works, it has its limitations, and the current version can no longer be programmed because the Logitech universal remote it uses has reached end of life.

-

Exploring Alternative Computer Input Devices: IMU Joysticks

07/06/2020 at 02:05

For this project, we first want to brainstorm and explore different input devices that can be used as universal remote controllers. For individuals with cerebral palsy, mechanical joysticks are commonly used to control motorized wheelchairs. For the first proof of concept, I wanted to explore joysticks as an input controller. Individuals with CP have difficulty using a mouse and keyboard, so I tried to use joysticks to control my computer.

Since I didn't have a joystick, I used inertial measurement unit (IMU) sensors to act as "floating" joysticks. I had a few microcontrollers/IMU sensors lying around, so I wanted to do a simple demo using these IMUs to draw in the computer program Paint.

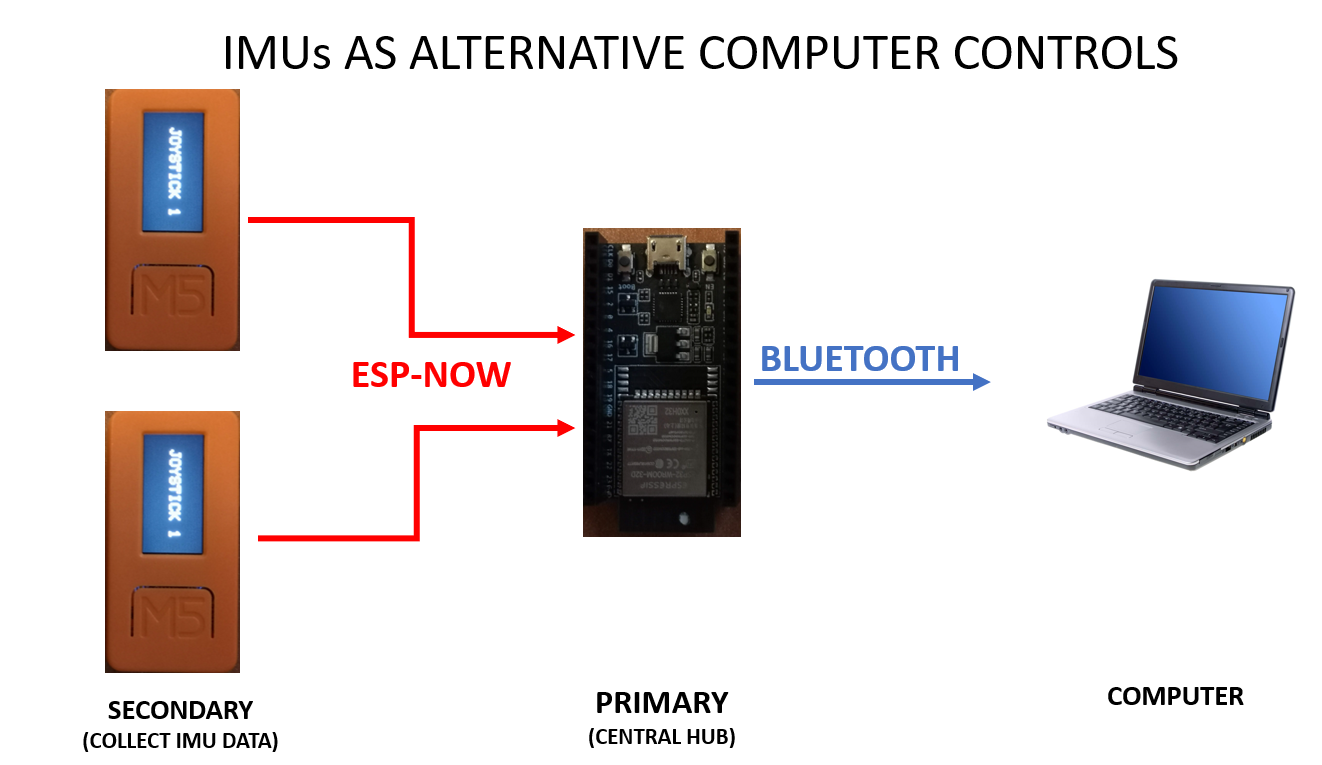

Below is a high-level overview of the architecture. Two M5StickC watches were used as the inputs. An ESP32-WROOM dev board served as the primary communication hub: it received IMU data from the M5StickC watches over the ESP-NOW protocol, translated it into mouse and keyboard data, and relayed that to the computer via Bluetooth.
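To show what travels over ESP-NOW between a watch and the hub, here is a sketch of one possible message layout, written with Python's `struct` module for readability. The layout (a 1-byte watch ID, three float32 accelerations in g, and a 1-byte button flag, little-endian) is hypothetical and not the author's firmware; on the ESP32 side the same layout would be expressed as a packed C struct.

```python
import struct

# Hypothetical layout: little-endian, uint8 watch ID, 3 x float32 acceleration (g), uint8 button flag
PACKET_FORMAT = "<B3fB"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 14 bytes, well under ESP-NOW's 250-byte payload limit


def pack_imu(watch_id: int, ax: float, ay: float, az: float, button: bool) -> bytes:
    """Serialize one IMU reading as a watch might send it to the hub."""
    return struct.pack(PACKET_FORMAT, watch_id, ax, ay, az, int(button))


def unpack_imu(payload: bytes) -> dict:
    """Decode a payload on the hub side, rejecting payloads of the wrong size."""
    if len(payload) != PACKET_SIZE:
        raise ValueError(f"expected {PACKET_SIZE} bytes, got {len(payload)}")
    watch_id, ax, ay, az, button = struct.unpack(PACKET_FORMAT, payload)
    return {"watch": watch_id, "accel": (ax, ay, az), "button": bool(button)}


msg = pack_imu(1, 0.25, -0.5, 1.0, False)
print(PACKET_SIZE, unpack_imu(msg))
```

Sending compact binary readings rather than text keeps each message small and fixed-size, which makes validation on the hub trivial (a wrong length means a malformed packet).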

Most of these components were used out of the box with no soldering/wiring (except for adding an input button to disable the joystick controls). Below are the 3 controllers.


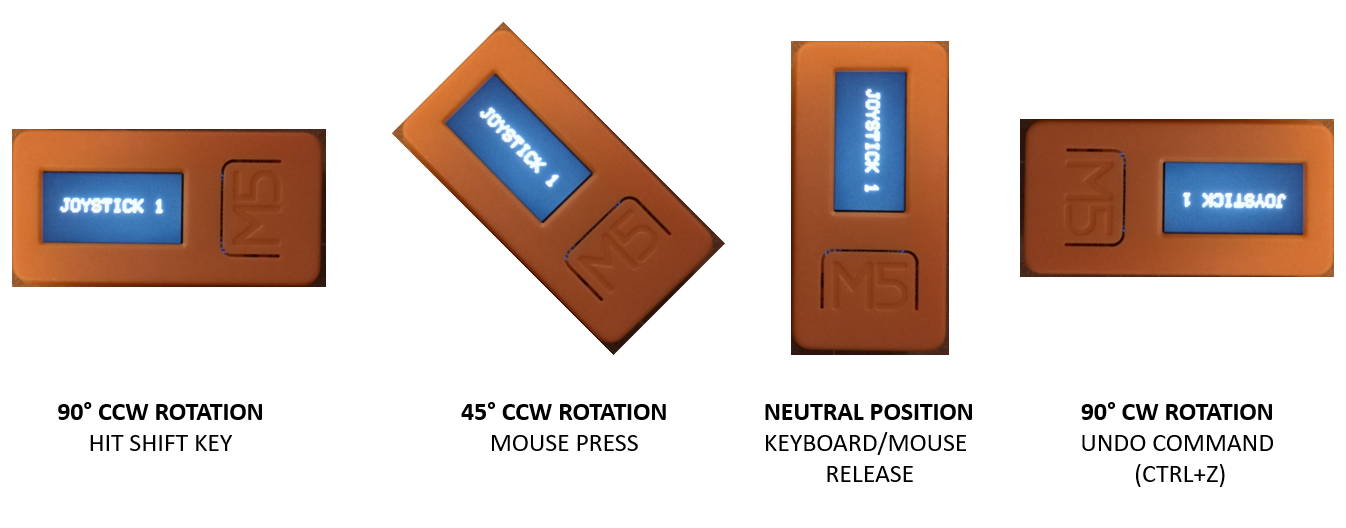

To briefly summarize how this works: Joystick 1 controls mouse movement. I only used the accelerometer data to detect the tilt angle in two directions (forward-backward and left-right). Similarly, for Joystick 2, I mapped a few mouse/keyboard commands to hotkeys triggered by tilting the IMU in a particular direction. Below is an image showing the different angles of Joystick 2 mapped onto different mouse/keyboard commands.
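The tilt-to-mouse idea can be sketched as follows, assuming accelerometer readings in g. Everything numeric here is a hypothetical tuning choice: a dead zone so a resting hand or head does not make the cursor drift, a linear ramp up to a maximum tilt, and a capped speed. The hotkey names for Joystick 2 are also placeholders, and the sign of "forward" depends on how the watch is mounted.

```python
import math

DEAD_ZONE_DEG = 10.0  # hypothetical: ignore small tilts so the cursor doesn't drift
MAX_TILT_DEG = 45.0   # hypothetical: tilt at which the cursor reaches full speed
MAX_SPEED_PX = 20     # hypothetical: pixels per update at full tilt


def tilt_angles(ax: float, ay: float, az: float):
    """Forward-backward (pitch) and left-right (roll) tilt in degrees, from accelerometer data alone."""
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def axis_speed(angle: float) -> int:
    """Map one tilt angle to a signed cursor step with a dead zone and a linear ramp."""
    magnitude = abs(angle)
    if magnitude < DEAD_ZONE_DEG:
        return 0
    fraction = min((magnitude - DEAD_ZONE_DEG) / (MAX_TILT_DEG - DEAD_ZONE_DEG), 1.0)
    return int(math.copysign(round(fraction * MAX_SPEED_PX), angle))


def mouse_delta(ax: float, ay: float, az: float):
    """Joystick 1: left-right tilt moves the cursor horizontally, forward-backward vertically."""
    pitch, roll = tilt_angles(ax, ay, az)
    return axis_speed(roll), axis_speed(pitch)


# Joystick 2: hypothetical hotkey table, selected by the dominant tilt direction
HOTKEYS = {"forward": "left_click", "back": "right_click", "left": "undo", "right": "redo"}


def joystick2_command(ax: float, ay: float, az: float, threshold_deg: float = 30.0):
    """Return the hotkey for the dominant tilt direction, or None below the threshold."""
    pitch, roll = tilt_angles(ax, ay, az)
    if max(abs(pitch), abs(roll)) < threshold_deg:
        return None
    if abs(pitch) >= abs(roll):
        direction = "forward" if pitch > 0 else "back"  # assumed sign convention
    else:
        direction = "right" if roll > 0 else "left"
    return HOTKEYS[direction]


print(mouse_delta(0.0, 0.5, math.sqrt(3) / 2))  # watch tilted about 30 degrees to one side
```

The dead zone and speed cap are the knobs most likely to need per-user tuning, especially when the "joystick" is worn on the head rather than held in the hand.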

With this setup, I attempted to draw a few images using the Paint program on my laptop. The first one was the Supplyframe logo operating both joysticks with my hands.

Next, I tried to control Joystick 1 (mouse movement) with my head. I wore a hat and placed the joystick in it.

This was much more difficult to control, and after about 10 minutes my neck was sore. This exercise helped me put myself in the shoes of others and experience using input controllers without hands.

2020 HDP Dream Team: UCPLA

The 2020 HDP Dream Teams are participating in a two month engineering sprint to address their nonprofit partner. Follow their journey here.

Supplyframe DesignLab
