How it'll work:

- Install receivers on monitors, smartphones, laptops, tablets, etc.

- Put on the headset and look at a device.

- The onboard IR receiver identifies the device you're looking at.

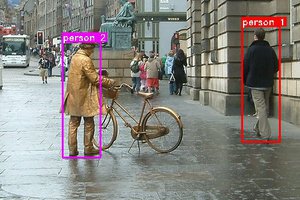
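The write-up doesn't say what the receivers actually send over IR. Here is a minimal sketch of one option. It assumes each receiver periodically broadcasts a small beacon with a device ID and its screen resolution, and that the headset validates and parses that beacon. The packet layout, `BEACON_MAGIC`, and the `DeviceBeacon` / `encode_beacon` / `decode_beacon` names are hypothetical. The CRC-32 is only there to catch corrupted IR reads.

```python
import struct
import zlib
from dataclasses import dataclass
from typing import Optional

# Hypothetical beacon layout (big-endian), NOT the project's documented protocol:
#   magic (uint8) | device_id (uint16) | width_px (uint16) | height_px (uint16) | crc32 (uint32)
BEACON_MAGIC = 0xA5
_BODY = struct.Struct(">BHHH")
_CRC = struct.Struct(">I")


@dataclass(frozen=True)
class DeviceBeacon:
    device_id: int
    width_px: int
    height_px: int


def encode_beacon(beacon: DeviceBeacon) -> bytes:
    """Serialize a beacon the way a receiver might broadcast it over IR."""
    body = _BODY.pack(BEACON_MAGIC, beacon.device_id, beacon.width_px, beacon.height_px)
    # Append a CRC-32 of the body so the headset can reject corrupted reads.
    return body + _CRC.pack(zlib.crc32(body))


def decode_beacon(packet: bytes) -> Optional[DeviceBeacon]:
    """Parse a received packet; return None if it is the wrong size or fails a check."""
    if len(packet) != _BODY.size + _CRC.size:
        return None
    body, crc_bytes = packet[:_BODY.size], packet[_BODY.size:]
    (crc,) = _CRC.unpack(crc_bytes)
    if zlib.crc32(body) != crc:
        return None
    magic, device_id, width_px, height_px = _BODY.unpack(body)
    if magic != BEACON_MAGIC:
        return None
    return DeviceBeacon(device_id, width_px, height_px)
```

Anything that fails the length, CRC, or magic-byte check comes back as `None`. That lets the headset ignore garbled reads instead of acting on them.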

- Computer vision determines the orientation of your head relative to the device's screen.

- Computer vision determines the direction of your gaze.

- Combine 'em to work out where on the device's screen you're looking. That's the cursor!

- Blink to click. Squint to drag. Blink twice to scroll.

- The headset transmits your cursor position and clicks to the receiver on the device.

- The device sees the receiver as a touchscreen and accepts its touch input!
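Combining head orientation with gaze direction comes down to intersecting the gaze ray with the plane of the screen. Here is a minimal sketch. It assumes the forehead camera already gives the screen's top-left corner plus unit vectors along its right and down edges. It also assumes these share a head-fixed frame (in meters) with the eye position and gaze vector from the eyepiece camera. The function name, its parameters, and that shared frame are assumptions, not the project's actual code.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def gaze_to_pixel(
    eye: Vec3,
    gaze_dir: Vec3,
    screen_corner: Vec3,
    screen_right: Vec3,
    screen_down: Vec3,
    screen_size_m: Tuple[float, float],
    screen_size_px: Tuple[int, int],
) -> Optional[Tuple[int, int]]:
    """Intersect the gaze ray with the screen plane; return the pixel hit, or None.

    Assumes screen_right and screen_down are orthonormal unit vectors and that every
    point and vector is expressed in the same head-fixed frame, in meters.
    """
    normal = _cross(screen_right, screen_down)
    denom = _dot(gaze_dir, normal)
    if abs(denom) < 1e-9:
        return None  # gaze runs parallel to the screen
    t = _dot(_sub(screen_corner, eye), normal) / denom
    if t <= 0:
        return None  # screen plane is behind the eye
    hit = (eye[0] + t * gaze_dir[0], eye[1] + t * gaze_dir[1], eye[2] + t * gaze_dir[2])
    # Project the hit point onto the screen's own axes to get physical offsets.
    rel = _sub(hit, screen_corner)
    u, v = _dot(rel, screen_right), _dot(rel, screen_down)
    width_m, height_m = screen_size_m
    if not (0.0 <= u <= width_m and 0.0 <= v <= height_m):
        return None  # looking toward the device, but off the display area
    width_px, height_px = screen_size_px
    # Scale to pixels, clamping the far edge onto the last valid pixel.
    x = min(int(u / width_m * width_px), width_px - 1)
    y = min(int(v / height_m * height_px), height_px - 1)
    return (x, y)
```

The gaze vector doesn't need to be normalized, because the ray parameter `t` absorbs its length. The result also doesn't depend on which way the plane normal points.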
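The blink and squint gestures map onto a small classifier. Here is a minimal sketch. It assumes a lower-level detector, for example one fed by the IR reflectance sensor, has already turned raw readings into timestamped blink and squint episodes. `EyeEvent`, both thresholds, and the gesture names are hypothetical and would need per-user tuning.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Assumed thresholds (hypothetical); real values would need per-user tuning.
DOUBLE_BLINK_GAP_S = 0.4  # max gap between two blinks that still counts as a double blink
MIN_SQUINT_S = 0.3        # squints shorter than this are treated as noise


@dataclass(frozen=True)
class EyeEvent:
    kind: str     # "blink" or "squint", from a hypothetical lower-level detector
    start: float  # seconds
    end: float    # seconds


def classify(events: Iterable[EyeEvent]) -> List[str]:
    """Map blink/squint episodes to gestures: click, scroll (double blink), or drag."""
    gestures: List[str] = []
    pending: Optional[EyeEvent] = None  # a blink that might still become a double blink
    for ev in sorted(events, key=lambda e: e.start):
        if ev.kind == "blink":
            if pending is not None and ev.start - pending.end <= DOUBLE_BLINK_GAP_S:
                gestures.append("scroll")  # second blink arrived in time
                pending = None
            else:
                if pending is not None:
                    gestures.append("click")  # earlier blink was a lone blink
                pending = ev
        elif ev.kind == "squint":
            if pending is not None:
                gestures.append("click")  # a squint ends any pending double-blink window
                pending = None
            if ev.end - ev.start >= MIN_SQUINT_S:
                gestures.append("drag")
    if pending is not None:
        gestures.append("click")  # trailing lone blink
    return gestures
```

A single blink might turn out to be the first half of a double blink. So a click can only be committed once the double-blink window has passed. This batch version sidesteps that, but a real-time loop would need a timer to flush the pending blink.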

Onboard hardware:

- Core unit
  - Raspberry Pi Compute Module 3+
  - StereoPi carrier board
  - Custom interface board
  - Quick-disconnect magnetic power jack
  - Adorable teeny-weeny heatsink and fan
- Eyepiece
  - CSI infrared camera, modified for super-macro
  - IR reflectance sensor, to detect high-speed blinks
  - IR emitters to triangulate the cornea
  - Indicator LEDs for user feedback
- Forehead piece
  - CSI full-color camera to triangulate position relative to target devices
  - IR transceiver to identify the target device and send gaze position to its receiver
  - Warning LED when the forehead camera is active
- Receivers (not shown, mounted to devices)
  - LED indicators to aid positioning
  - IR transceiver to transmit device information and receive gaze data
  - Bluetooth or USB connection to the target device (enumerates as a touchscreen)

Zack Freedman

AIRPOCKET

JVS

robheffo

Hello, cool accessibility project! Please post any trouble you're having with the design, software, or computer vision / OpenCV. The community will surely have input! Cheers on a really cool HCI accessibility design.