-

1Choosing a Sensor

To briefly explain my sensor choices.

Lidar: I've used "lidar" Time of flight sensors to detect high fives, in the previous version of my wearable. However, it cannot detect arm gestures without complex mounting a ton of them all over one arm.

Stain Sensor: Using the change in resistance stretch rubbers I can get a sense of what muscles I'm actuating or general shape of my arm. However, they are easily damaged and wear with use.

Muscle Sensors: Something like an MYO armband can determine hand gestures, but require a lot of processing overhead for my use case. They are also quite expensive.

IMU: Acceleration and gyroscope sensors are cheap and do not wear out over time. However, determining a gesture from the data output of the sensor requires a lot of manual thresholding and timing to determine anything useful. Luckily machine learning can determine relationships in the data and even can be implemented on a microcontroller with tflite and TinyML.

I chose to go forward with an IMU sensor and Machine Learning. My sensor is the LSM6DSOX from ST on an Adafruit Qwiic board.

-

2Arduino Setup

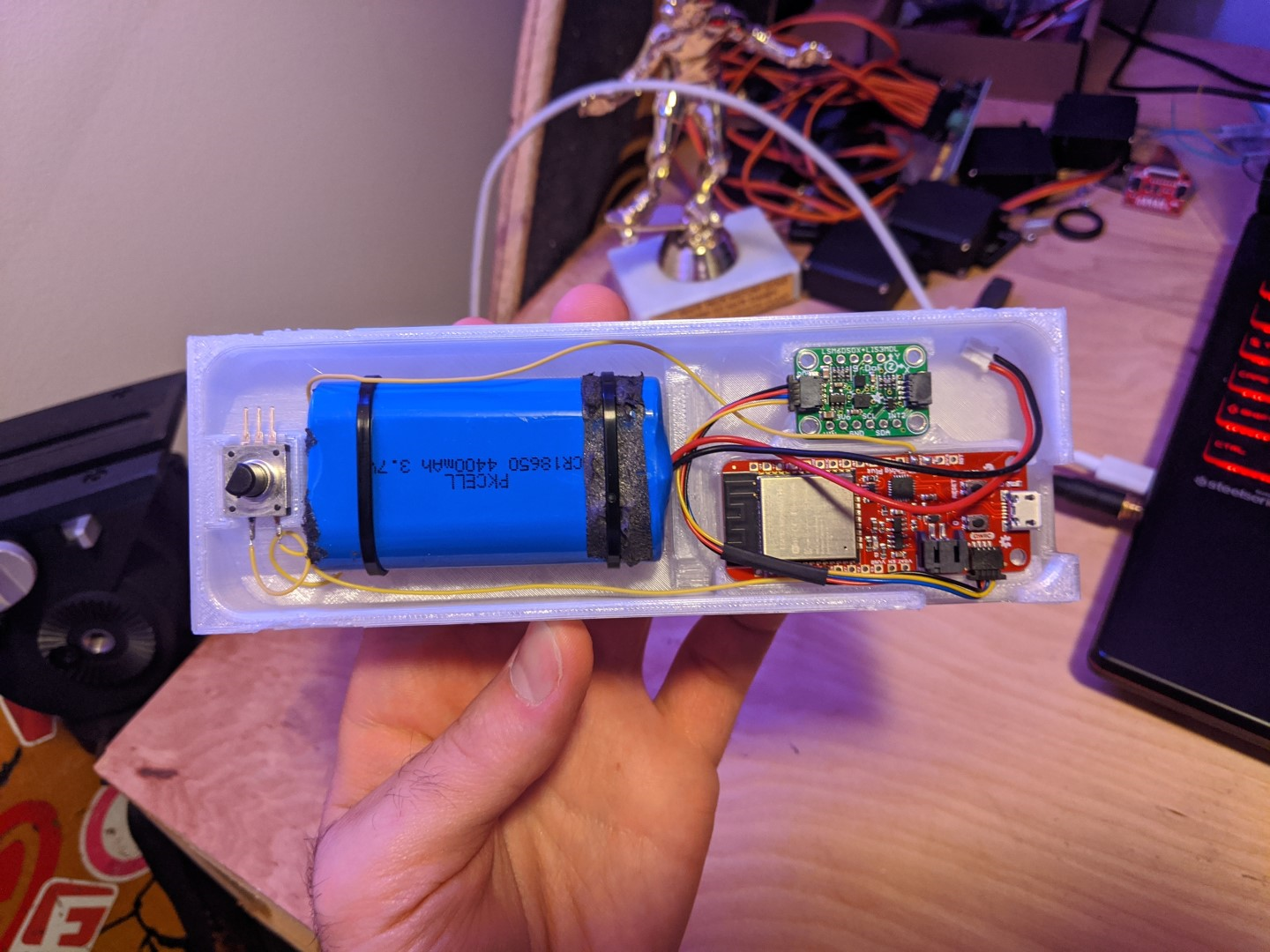

In the Arduino_Sketch folder of this projects Github repo is the AGRB-Traning-Data-Capture.ino. A script to pipe acceleration and gyroscope data from an Adafruit LSM6DSOX 6 dof IMU out of the USB serial port. An ESP32 Thingplus by SparkFun is the board I've chosen to use due to the Qwiic connector support between this board and the Adafruit IMU. A push-button is connected between ground and pin 33 with the internal pull-up resistor on. Eventually, I plan to deploy a tflite model on the esp32, so I've included a battery.

Every time the push-button is pressed, the esp32 sends out 3 seconds of acceleration and gyroscope data over usb.

![]()

-

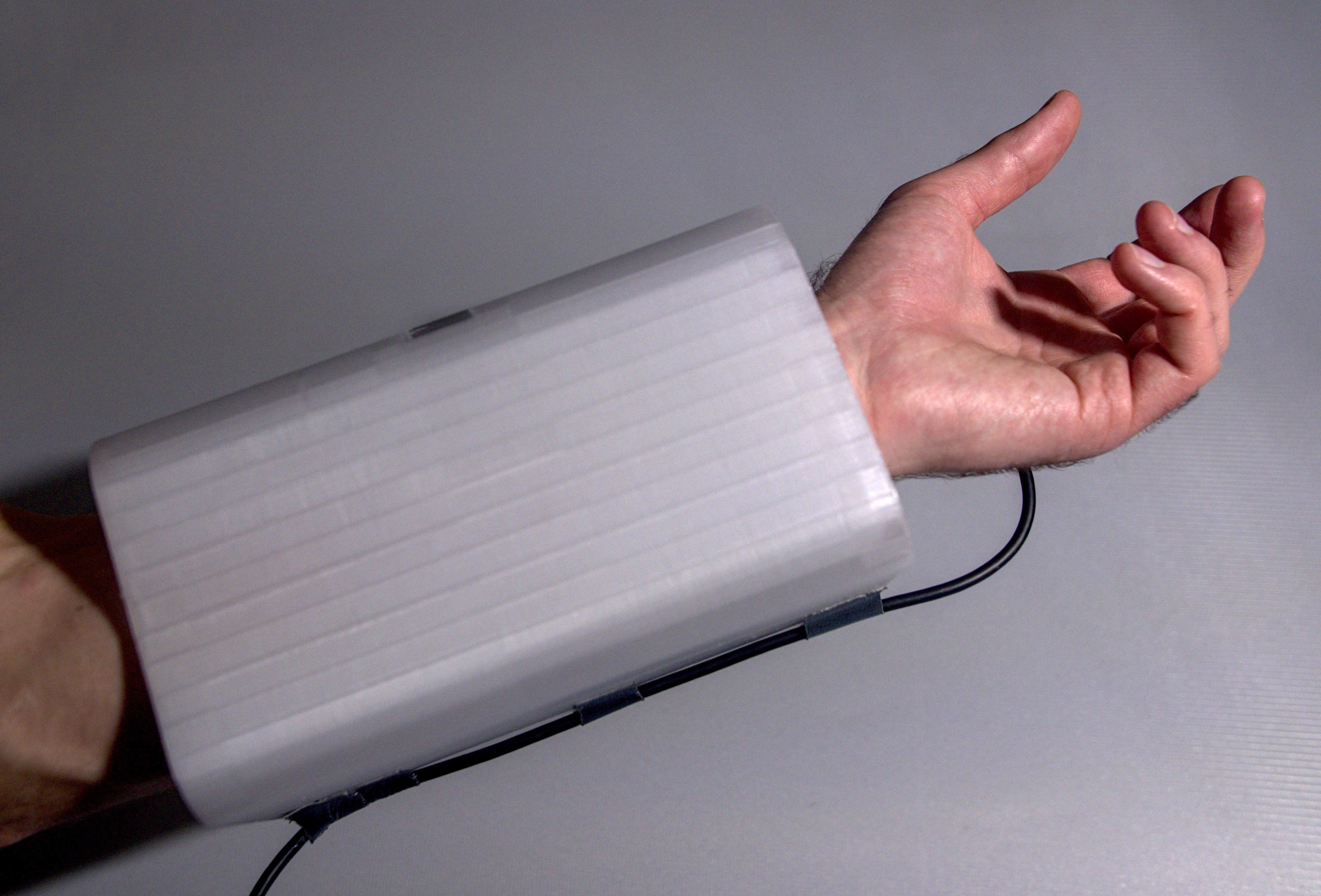

3Build A Housing

I used fusion 360 to model my arm and and a square case like housing. It took some revisions to get it to fit perfect and is actually rather comfortable even on a hot day in Hotlanta. All the cad files are in the linked github on this project.

![]()

-

4Data Collection

In the Training_Data folder of my github repo, locate the CaptureData.py script. With the Arduino loaded with the AGRB-Traning-Data-Capture.ino script and connected to the capture computer with a USB cable, run the CaptureData.py script. It'll ask for the name of the gesture you are capturing. Then when you are ready to perform the gesture press the button on the Arduino and perform the gesture within 3 seconds. When you have captured enough of one gesture, stop the python script. Rinse and Repeat.

![]()

I choose 3 seconds of data collection or roughly 760 data points mainly because I wasn't positive how long each gesture would take to be performed. Anywho, more data is better right?

-

5Creating a Docker File

Why create a docker container? in this project, I'll be exploring data and building models. To allow you to build a model that is close to the one I create and instead of listing out all of the required libraries and packages, you can build this docker container and run it! Docker is awesome for sharing projects and knowing your project will run wherever your container is.

#define base container ARG BASE_CONTAINER=jupyter/scipy-notebook:04f7f60d34a6 FROM $BASE_CONTAINER #create some metadata for the image LABEL maintainer = "Nate Damen <nate.damen@gmail.com>" \ version="0.1" \ description = " Notebooks and data for creating Tensorflow lite model of arm gesture recognition from Accl and Gyro data" #make a working directory WORKDIR /app COPY . /app #mount point VOLUME /app/data #expose the port EXPOSE 8888 USER root RUN apt-get update && apt-get -qq install xxd #install tensorflow 2.2 RUN pip install --quiet --no-cache-dir \ 'tensorflow==2.2.0' && \ fix-permissions "${CONDA_DIR}" && \ fix-permissions "/home/${NB_USER}" #run notebook upon container launch CMD ["jupyter","notebook","--ip='*'","--port=8888","--no-browser","--allow-root","--password=password"] #to build the docker file, cd to the appropriate directory and copy past the following into the terminal: docker build -t atltvhead/gesture_bracer . #to run the this docker file use the following: docker run -e PASSWORD=password -p 10000:8888 -v "C:/Users/nated/Documents/Python Scripts/Atltvhead-Gesture-Recognition-Bracer:/app/data" --name bracer atltvhead/gesture_bracer:latest #to find out what the token is use the following docker exec bracer jupyter notebook list #when restarting the docker container use http://localhost:10000/tree? to go to the notebook #when needing to add software or access the sudo command line for the docker use the following command in a windows cmd: docker exec -it -u root bracer bash -

6Data Exploration

The following conclusions and findings are found in Jypter_Scripts/Data_Exploration.ipynb file:

- he first exploration task I conducted was to use seaborn's pair plot to plot all variables against one another for each different type of gesture. I was looking to see if there was any noticeable outright linear or logistic relationships between variables. For the fist pump data there seem to be some possible linear effects between the Y and Z axis, but not enough to make me feel confident in them.

![Fist Pump Pairplot]()

-

Looking at the descriptions, I noticed that each gesture sampling had a different number of points, and are not consistent between samples of the same gesture.

-

Each gesture's acceleration data and gyroscope data is pretty unique when looking at time series plots. With fist-pump mode and speed mode looking the most similar and will probably be the trickiest to differentiate from one another.

![Gesture Acclerations]()

![Gesture Gyroscopes]()

-

Conducting a PCA of the different gestures yielded that the most "important" type of raw data is acceleration. However, when conducting a PCA with min/max normalized acceleration and gyroscope data, the most important feature became the normalized gyroscope data. Specifically, Gyro_Z seems to contribute the most to the first principal component, across all gestures.

![PCA's]()

-

So now the decision. The PCA of Raw Data says that accelerations work. The PCA of Normalized Data seems to conclude that gyroscope data works. Since I'd like to eventually move this project over to the esp32, less pre-processing will reduce processing overhead on the micro. So let's try just using the raw acceleration data first. If that doesn't work, I'll add in the raw gyroscope data. If none of those work well, I'll normalize the data.

- he first exploration task I conducted was to use seaborn's pair plot to plot all variables against one another for each different type of gesture. I was looking to see if there was any noticeable outright linear or logistic relationships between variables. For the fist pump data there seem to be some possible linear effects between the Y and Z axis, but not enough to make me feel confident in them.

-

7Data Augmentation

Since I was collecting the data myself, I have a super small data set. Meaning I will most likely overfit my model. To overcome this I implemented augmentation techniques to my data set.

The augmentation techniques used are as follows:

- Increase and decrease the peaks of the XYZ data

shiftingFractions=[(3, 2), (5, 3), (2, 3), (3, 4), (9, 5), (6, 5), (4, 5)] #Augment the training set of data def gestureMagnitudeShifting(training,accel_sets, fract): magnitudedf=pd.DataFrame(columns=['gesture','acceleration']) for idx1, aset in enumerate(accel_sets): for molecule, denominator in fract: magSeries = pd.Series(data={'gesture': training['gesture'][idx1], 'acceleration':(np.array(aset, dtype=np.float32) * molecule / denominator).tolist()}) magnitudedf_temp=pd.DataFrame([magSeries]) magnitudedf=pd.concat([magnitudedf,magnitudedf_temp], ignore_index=True) return magnitudedf - Shift the data to complete faster or slower. Time stretch and shrink.

# Time stretch and shrink def time_wrapping(molecule, denominator, data): """Generate (molecule/denominator)x speed data.""" tmp_data = [[0 for i in range(len(data[0]))] for j in range((int(len(data) / molecule) - 1) * denominator)] for i in range(int(len(data) / molecule) - 1): for j in range(len(data[i])): for k in range(denominator): tmp_data[denominator * i + k][j] = (data[molecule * i + k][j] * (denominator - k) + data[molecule * i + k + 1][j] * k) / denominator return tmp_data def gestureStretchShrink(train_set, accel_sets, fract): timedf=pd.DataFrame(columns=['gesture','acceleration']) for idx1, aset in enumerate(accel_sets): shiftedAccels =[] for molecule, denominator in fract: shiftedAccels=time_wrapping(molecule, denominator, aset) timeSeries = pd.Series(data={'gesture': train_set['gesture'][idx1], 'acceleration':shiftedAccels}) timedf_temp=pd.DataFrame([timeSeries]) timedf=pd.concat([timedf,timedf_temp], ignore_index=True) return timedf - Add noise to the data points

# Add Noise def gestureWithNoise(train_set,accel_sets): noisedf=pd.DataFrame(columns=['gesture','acceleration']) for idx1, aset in enumerate(accel_sets): for t in range(5): tmp_data = [[0 for i in range(len(aset[0]))] for j in range(len(aset))] for q in range(len(aset)): for j in range(len(aset[q])): tmp_data[q][j] = aset[q][j] + 4 * random.random() noiseSeries = pd.Series(data={'gesture': train_set['gesture'][idx1], 'acceleration':tmp_data}) noisedf_temp=pd.DataFrame([noiseSeries]) noisedf=pd.concat([noisedf,noisedf_temp], ignore_index=True) return noisedf - Increase and decrease the magnitude the XYZ data uniformly

# Shift data uniformily up or down in mag def gestureTimeShift(train_set, accel_sets): shiftdf=pd.DataFrame(columns=['gesture','acceleration']) for idx1, aset in enumerate(accel_sets): for i in range(5): shiftSeries = pd.Series(data={'gesture': train_set['gesture'][idx1], 'acceleration':(np.array(aset, dtype=np.float32)+ ((random.random()- 0.5)*50)).tolist()}) shiftdf_temp=pd.DataFrame([shiftSeries]) shiftdf=pd.concat([shiftdf,shiftdf_temp], ignore_index=True) return shiftdf - Shift the snapshot window around the time series data, making the gesture start sooner or later

def pad(data, seq_length, dim): """Get neighbour padding.""" noise_level = 1 padded_data = [] # Before- Neighbour padding tmp_data = (np.random.rand(seq_length, dim) - 0.5) * noise_level + data[0] tmp_data[(seq_length - min(len(data), seq_length)):] = data[:min(len(data), seq_length)] padded_data.append(tmp_data) # After- Neighbour padding tmp_data = (np.random.rand(seq_length, dim) - 0.5) * noise_level + data[-1] tmp_data[:min(len(data), seq_length)] = data[:min(len(data), seq_length)] padded_data.append(tmp_data) return padded_data def dataToLength(data_set,seq_length,dim): proc_acc = data_set['acceleration'].to_numpy() pad_train_df = pd.DataFrame(columns=['gesture','acceleration']) for idx4, proacc in enumerate(proc_acc): pad_acc = pad(proacc,seq_length,dim) for half in pad_acc: padSeries = pd.Series(data={'gesture': data_set['gesture'][idx4], 'acceleration': half.tolist()}) paddf_temp=pd.DataFrame([padSeries]) pad_train_df=pd.concat([pad_train_df,paddf_temp], ignore_index=True) return pad_train_df

To address the number of data points inconsistency, I found that 760 data points per sample was the average. I then implemented a script that cut off the front and end of my data by a certain number of samples depending on the length. Saving the first half and the second half as two different samples, to keep as much data as possible. This cut data had a final length of 760 for each sample.

Before Augmenting I had 168 samples in my training set, 23 in my test set, and 34 in my validation set. After I augmenting I ended up with 8400 samples in my training set, 46 in my test set, and 68 in my validation set. Still small, but way better than before.

- Increase and decrease the peaks of the XYZ data

-

8Model Building

As said in the TLDR, the ModelPipeline.py script, found in the Python_Scripts folder, will import all finalized data from the finalized CSVs, create 2 different models an LSTM and CNN, compare the models' performances, and save all models. Note the LSTM will not have a size optimized tflite model.

For which model to use, I want to eventually deploy on the esp32 with TinyML. That limits us to using Tensorflow. I am dealing with time-series data, meaning each data point in a sample is not independent of one another. Since RNN's and LSTM's assume there are relationships between data points and take sequencing into account, they are a good choice for modeling our data. A CNN can also extract features from a time series, however, it needs to be presented with the entire time sequence of data because of how it handles the sequence order of data.

CNN I made a 10 layer CNN. The first layer being a 2D convolution layer, going into a maxpool, dropout, another 2D convolution, another maxpool, another dropout, a flattening, a dense, a final dropout, and a dense output layer for the 4 gestures. See

for mode details.

After tuning hyperparameters, I ended up with a batch size of 192, 300 steps per epoch, and 20 epochs. I optimized with an adam optimizer and used sparse categorical cross-entropy for my loss, having accuracy as the metric to measure.

LSTM Using Tensorflow I made a sequential LTSM model with 22 bidirectional layers and a dense output layer classifying to my 4 gestures.

After tuning hyperparameters, I ended up with a batch size of 64, 200 steps per epoch, and 20 epochs. I optimized with an adam optimizer and used sparse categorical cross-entropy for my loss, having accuracy as the metric to measure.

Model Selection Both the CNN and LSTM perfectly predicted the gestures of the training set. The LSTM with a loss of 0.04 and the CNN with a loss of 0.007 during the test.

Next, I looked at the Training Validation loss per epoch of training. From the look of it, the CNN with a batch size of 192 is pretty close to being fit correctly. The CNN batch size of 64 and the LSTM both seem a little overfit.

![Training Validation Loss]()

![Training Validation Accuracy]()

I also looked at the size of the model. The h5 filesize of the LSTM is 97KB and the size of the CNN is 308KB. However, when comparing their tflite models, the CNN came in at 91KB and the LSTM grew to 119KB. On top of that, the quantized tflite CNN shrank to 28KB. I was unable to quantize the LSTM for size, so the CNN seems to be the winner. One last comparison when converting the tflite model to C++ for use on my microcontroller revealed that both models increased in size. The CNN 167KB and the LSTM to 729KB.

EDIT After some more hyperparameter tweaking (cnn_model3), I shrank the CNN optimized model. The C++ implementation of this model is down to 69KB and the tflight implementation is down to 12KB. The Loss 0.015218148939311504, Accuracy 1.0 for model 3.

So I chose to proceed with the CNN model, trained with a batch size of 192. I saved the model, as well as saved a tflite version of the model optimized for size in the model folder.

# Copyright 2020 Nate Damen # # Licensed under the Apache License, Version 2.0 (the "License"); # you may not use this file except in compliance with the License. # You may obtain a copy of the License at # # http://www.apache.org/licenses/LICENSE-2.0 # # Unless required by applicable law or agreed to in writing, software # distributed under the License is distributed on an "AS IS" BASIS, # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. # See the License for the specific language governing permissions and # limitations under the License. import numpy as np import pandas as pd import datetime import re import os, os.path import time from sklearn.model_selection import train_test_split import random import tensorflow as tf def calculate_model_size(model): print(model.summary()) var_sizes = [ np.product(list(map(int, v.shape))) * v.dtype.size for v in model.trainable_variables ] print("Model size:", sum(var_sizes) / 1024, "KB") def makeModels(modelType,samples): if modelType =='lstm': model = tf.keras.Sequential([ tf.keras.layers.Bidirectional( tf.keras.layers.LSTM(22), input_shape=(samples, 3)), # output_shape=(batch, 253) tf.keras.layers.Dense(4, activation="sigmoid") # (batch, 4) ]) elif modelType == 'cnn': model = tf.keras.Sequential([ tf.keras.layers.Conv2D(8, (4, 3),padding="same",activation="relu", input_shape=(samples, 3, 1)), # output_shape=(batch, 760, 3, 8) tf.keras.layers.MaxPool2D((3, 3)), # (batch, 253, 1, 8) tf.keras.layers.Dropout(0.1), # (batch, 253, 1, 8) tf.keras.layers.Conv2D(16, (4, 1), padding="same",activation="relu"), # (batch, 253, 1, 16) tf.keras.layers.MaxPool2D((3, 1), padding="same"), # (batch, 84, 1, 16) tf.keras.layers.Dropout(0.1), # (batch, 84, 1, 16) tf.keras.layers.Flatten(), # (batch, 1344) tf.keras.layers.Dense(16, activation="relu"), # (batch, 16) tf.keras.layers.Dropout(0.1), # (batch, 16) tf.keras.layers.Dense(4, activation="softmax") # (batch, 4) ]) return model def train_lstm(model, epochs_lstm, batch_size, tensor_train_set, tensor_val_set, tensor_test_set, val_set,test_set): tensor_train_set_lstm = tensor_train_set.batch(batch_size).repeat() tensor_val_set_lstm = tensor_val_set.batch(batch_size) tensor_test_set_lstm = tensor_test_set.batch(batch_size) model.fit( tensor_train_set_lstm, epochs=epochs_lstm, validation_data=tensor_val_set_lstm, steps_per_epoch=200, validation_steps=int((len(val_set) - 1) / batch_size + 1)) loss_lstm, acc_lstm = model.evaluate(tensor_test_set_lstm) pred_lstm = np.argmax(model.predict(tensor_test_set_lstm), axis=1) confusion_lstm = tf.math.confusion_matrix( labels=tf.constant(test_set['gesture'].to_numpy()), predictions=tf.constant(pred_lstm), num_classes=4) #print(confusion_lstm) #print("Loss {}, Accuracy {}".format(loss_lstm, acc_lstm)) #model.save('lstm_model.h5') return model, confusion_lstm, loss_lstm, acc_lstm """---------------------------------------------------------------------------""" def reshape_function(data, label): reshaped_data = tf.reshape(data, [-1, 3, 1]) return reshaped_data, label def train_CNN(model, epochs_cnn, batch_size, tensor_train_set, tensor_test_set, tensor_val_set, val_set, test_set): tensor_train_set_cnn = tensor_train_set.map(reshape_function) tensor_test_set_cnn = tensor_test_set.map(reshape_function) tensor_val_set_cnn = tensor_val_set.map(reshape_function) tensor_train_set_cnn = tensor_train_set_cnn.batch(batch_size).repeat() tensor_test_set_cnn = tensor_test_set_cnn.batch(batch_size) tensor_val_set_cnn = tensor_val_set_cnn.batch(batch_size) model.fit( tensor_train_set_cnn, epochs=epochs_cnn, validation_data=tensor_val_set_cnn, steps_per_epoch=300, validation_steps=int((len(val_set) - 1) / batch_size + 1)) loss_cnn, acc_cnn = cnn_model.evaluate(tensor_test_set_cnn) pred_cnn = np.argmax(cnn_model.predict(tensor_test_set_cnn), axis=1) confusion_cnn = tf.math.confusion_matrix( labels=tf.constant(test_set['gesture'].to_numpy()), predictions=tf.constant(pred_cnn), num_classes=4) #print(confusion_cnn) #print("Loss {}, Accuracy {}".format(loss_cnn, acc_cnn)) #model.save('cnn_model.h5') return model, confusion_cnn, loss_cnn, acc_cnn def convertToTFlite(model, modelName): converter = tf.lite.TFLiteConverter.from_keras_model(model) tflite_model = converter.convert() # Save the model to disk open(modelName+'.tflite', "wb").write(tflite_model) # Optimize model for size converter = tf.lite.TFLiteConverter.from_keras_model(model) converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE] opt_tflite_model = converter.convert() # Save the model to disk open(modelName+'_optimized.tflite', "wb").write(opt_tflite_model) basic_model_size = os.path.getsize(modelName+'.tflite') print("Basic model is %d bytes" % basic_model_size) quantized_model_size = os.path.getsize(modelName+'_optimized.tflite') print("Quantized model is %d bytes" % quantized_model_size) difference = basic_model_size - quantized_model_size print("Difference is %d bytes" % difference) if __name__=='__main__': #load in data sets trainingData = pd.read_csv('processed_train_set.csv',converters={'acceleration': eval}) testingData = pd.read_csv('processed_test_set.csv',converters={'acceleration': eval}) validationData = pd.read_csv('processed_val_set.csv',converters={'acceleration': eval}) #Lets make the models sample_len = len(trainingData['acceleration'][0]) lstm_model = makeModels('lstm',sample_len) cnn_model = makeModels('cnn',sample_len) #convert the datasets into tensors tensor_train_set = tf.data.Dataset.from_tensor_slices( (np.array(trainingData['acceleration'].tolist(),dtype=np.float64), trainingData['gesture'].tolist())) tensor_test_set = tf.data.Dataset.from_tensor_slices( (np.array(testingData['acceleration'].tolist(),dtype=np.float64), testingData['gesture'].tolist())) tensor_val_set = tf.data.Dataset.from_tensor_slices( (np.array(validationData['acceleration'].tolist(),dtype=np.float64), validationData['gesture'].tolist())) epochs = 20 batch_size = 192 calculate_model_size(lstm_model) calculate_model_size(cnn_model) lstm_model.compile( optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"]) cnn_model.compile( optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"]) lstm_model, confusion_lstm, loss_lstm, acc_lstm = train_lstm(lstm_model, epochs, batch_size, tensor_train_set, tensor_val_set, tensor_test_set, validationData, testingData) cnn_model, confusion_cnn, loss_cnn, acc_cnn = train_CNN(cnn_model, epochs, batch_size, tensor_train_set, tensor_test_set, tensor_val_set, validationData, testingData) print(confusion_lstm) print("Loss {}, Accuracy {}".format(loss_lstm, acc_lstm)) print(confusion_cnn) print("Loss {}, Accuracy {}".format(loss_cnn, acc_cnn)) lstm_model.save('lstm_model.h5') cnn_model.save('cnn_model.h5') convertToTFlite(cnn_model, 'cnn_model') try: #had some problems with the optimized LSTM model convertToTFlite(lstm_model, 'lstm_model') except Exception: print(Exception) # Convert models to a cc file for use with arduino tflite on linux ## Install xxd if it is not available #!apt-get -qq install xxd #Downloaded a windows port of xxd at https://userweb.weihenstephan.de/syring/win32/UnxUtilsDist.html or use the format-hex in windows ## Save the file as a C source file #!xxd -i cnn_model_quantized.tflite > cnn_opt_model.cc ## Print the source file #!cat /cnn_opt_model.cc #could use the type command on windows to achieve similar -

9Raspberry Pi Deployment

I used a raspberry pi 4 for my current deployment since it was already in a previous tvhead build, has the compute power gfor model inference, and can be powered by a battery.

The pi is in a backpack with a display. On the display is a positive message that changes based on what is said in my Twitch chat, during live streams. I used this same script but added the TensorFlow model gesture prediction components from the Predict_Gesture_Twitch.py script to create the PositivityPack.py script.

To infer gestures and send them to Twitch, use the PositivityPack.py or the Predict_Gesture_Twitch.py. They run the heavy .h5 model file. To run the tflite model on the raspberry pi run the Test_TFLite_Inference_Serial_Input.py script. You'll need to connect the raspberry pi with the ESP32 in the arm attachment using a USB cable. Press the button the arm attachment to send data and predict gesture. Long press the button to continually detect gestures, continuous snapshot mode.

Note: When running the scripts that communicate with Twitch you'll need to follow Twitch's chatbot development documentation for creating your chatbot and authenticating it.

# Predict_Gesture_Twitch.py # Description: Recieved Data from ESP32 Micro via the AGRB-Training-Data-Capture.ino file, make gesture prediction and tell it to twitch # Written by: Nate Damen # Created on July 13th 2020 import numpy as np import pandas as pd import datetime import re import os, os.path import time import random import tensorflow as tf import serial import socket import cfg PORT = "/dev/ttyUSB0" #PORT = "/dev/ttyUSB1" #PORT = "COM8" serialport = None serialport = serial.Serial(PORT, 115200, timeout=0.05) #load Model model = tf.keras.models.load_model('../Model/cnn_model.h5') #Creating our socket and passing on info for twitch sock = socket.socket() sock.connect((cfg.HOST,cfg.PORT)) sock.send("PASS {}\r\n".format(cfg.PASS).encode("utf-8")) sock.send("NICK {}\r\n".format(cfg.NICK).encode("utf-8")) sock.send("JOIN {}\r\n".format(cfg.CHAN).encode("utf-8")) sock.setblocking(0) #handling of some of the string characters in the twitch message chat_message = re.compile(r"^:\w+!\w+@\w+.tmi.twitch.tv PRIVMSG #\w+ :") #Lets create a new function that allows us to chat a little easier. Create two variables for the socket and messages to be passed in and then the socket send function with proper configuration for twitch messages. def chat(s,msg): s.send("PRIVMSG {} :{}\r\n".format(cfg.CHAN,msg).encode("utf-8")) #The next two functions allow for twitch messages from socket receive to be passed in and searched to parse out the message and the user who typed it. def getMSG(r): mgs = chat_message.sub("", r) return mgs def getUSER(r): try: user=re.search(r"\w+",r).group(0) except AttributeError: user ="tvheadbot" print(AttributeError) return user #Get Data from imu. Waits for incomming data and data stop def get_imu_data(): global serialport if not serialport: # open serial port serialport = serial.Serial(PORT, 115200, timeout=0.05) # check which port was really used print("Opened", serialport.name) # Flush input time.sleep(3) serialport.readline() # Poll the serial port line = str(serialport.readline(),'utf-8') if not line: return None vals = line.replace("Uni:", "").strip().split(',') if len(vals) != 7: return None try: vals = [float(i) for i in vals] except ValueError: return ValueError return vals # Create Reshape function for each row of the dataset def reshape_function(data): reshaped_data = tf.reshape(data, [-1, 3, 1]) return reshaped_data # header for the incomming data header = ["deltaTime","Acc_X","Acc_Y","Acc_Z","Gyro_X","Gyro_Y","Gyro_Z"] #Create a way to see the length of the data incomming, needs to be 760 points. Used for testing incomming data def dataFrameLenTest(data): df=pd.DataFrame(data,columns=header) x=len(df[['Acc_X','Acc_Y','Acc_Z']].to_numpy()) print(x) return x #Create a pipeline to process incomming data for the model to read and handle def data_pipeline(data_a): df = pd.DataFrame(data_a, columns = header) temp=df[['Acc_X','Acc_Y','Acc_Z']].to_numpy() tensor_set = tf.data.Dataset.from_tensor_slices( (np.array([temp.tolist()],dtype=np.float64))) tensor_set_cnn = tensor_set.map(reshape_function) tensor_set_cnn = tensor_set_cnn.batch(192) return tensor_set_cnn #define Gestures, current data, temp data holder gest_id = {0:'wave_mode', 1:'fist_pump_mode', 2:'random_motion_mode', 3:'speed_mode'} data = [] dataholder=[] dataCollecting = False gesture='' old_gesture='' #flush the serial port serialport.flush() while(1): try: response = sock.recv(1024).decode("utf-8") except: dataholder = get_imu_data() if dataholder != None: dataCollecting=True data.append(dataholder) if dataholder == None and dataCollecting == True: if len(data) == 760: prediction = np.argmax(model.predict(data_pipeline(data)), axis=1) gesture=gest_id[prediction[0]] if gesture != old_gesture: chat(sock,'!' + gesture) print(gesture) data = [] dataCollecting = False old_gesture=gesture else: if len(response)==0: print('orderly shutdown on the server end') sock = socket.socket() sock.connect((cfg.HOST,cfg.PORT)) sock.send("PASS {}\r\n".format(cfg.PASS).encode("utf-8")) sock.send("NICK {}\r\n".format(cfg.NICK).encode("utf-8")) sock.send("JOIN {}\r\n".format(cfg.CHAN).encode("utf-8")) sock.setblocking(0) else: print(response) #pong the pings to stay connected if response == "PING :tmi.twitch.tv\r\n": sock.send("PONG :tmi.twitch.tv\r\n".encode("utf-8")) -

10Replication

Instructions on how you can build your own gesture detection glove!

- Get started by uploading the

in the Arduino_Sketch folder onto an Arduino of your choice with a button and Adafruit LSM6DSOX 9dof IMU.

- Use the

in the Training_Data folder. Initiate this script, type in the gesture name, start recording the motion data by pressing the button on the Arduino

- After several gestures were recorded change to a different gesture and do it again. I tried to get 50 motion recordings of each gesture, you can try less if you like.

- Once all the data is collected, navigate to the Python Scripts folder and run

and

in that order. Models are trained here and can time some time.

- Run

and press the button on the Arduino to take a motion recording and see the results printed out.

- Get started by uploading the

Gesture Recognition Wearable

Using Machine Learning to simplify Twitch Chat interface for my tvhead and OBS Scene Control

atltvhead

atltvhead

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.