As explained, edog will participate in the Eurobot cup.

To do so, I need to know the robot's (x, y) position on the table. But I can't use an embedded lidar or triangulation, because the robot is small and cannot carry heavy components.

So what I will attempt is to use a beacon with a camera and estimate the position from its video stream.

This beacon is composed of an NVIDIA Jetson Nano 2GB board, a TP-Link Nano router, a 7-inch touchscreen and a DC converter (5 V, 5 A) that allows a 3S to 6S LiPo battery to power the system.

Here is an example from the team ARIG Robotique (more info here)

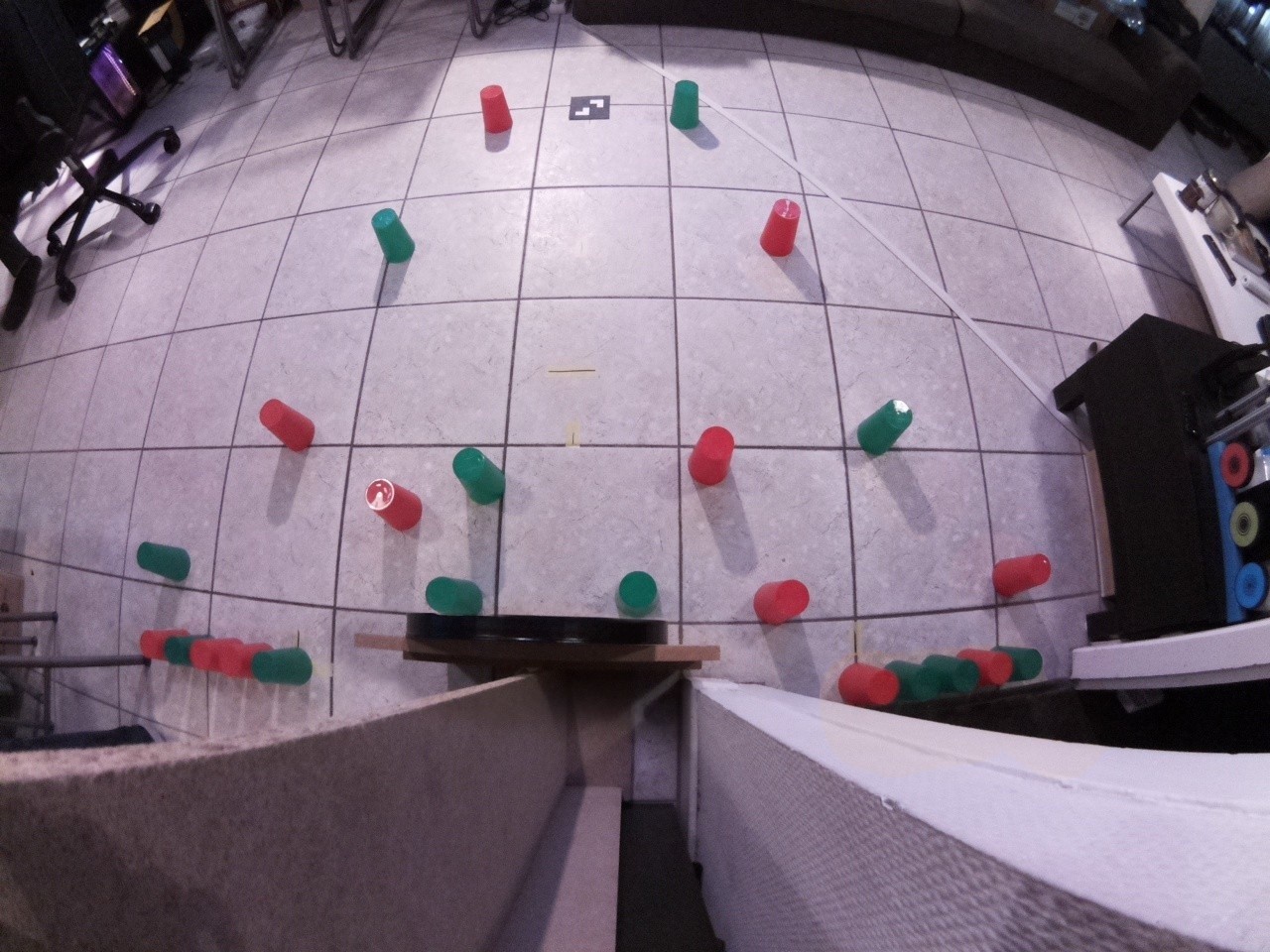

Step #1: original video

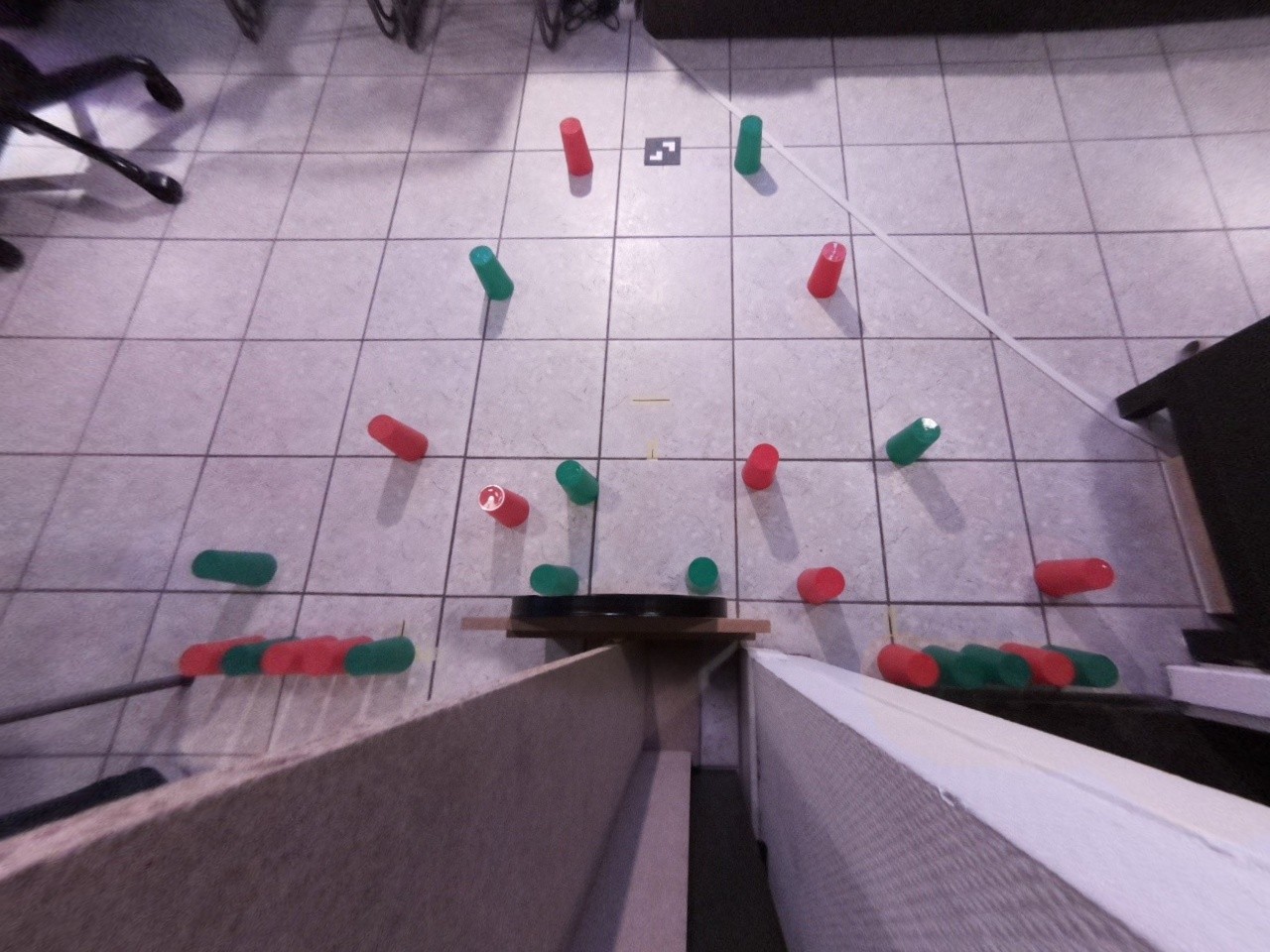

Step #2: Optical distortion correction using OpenCV in Python

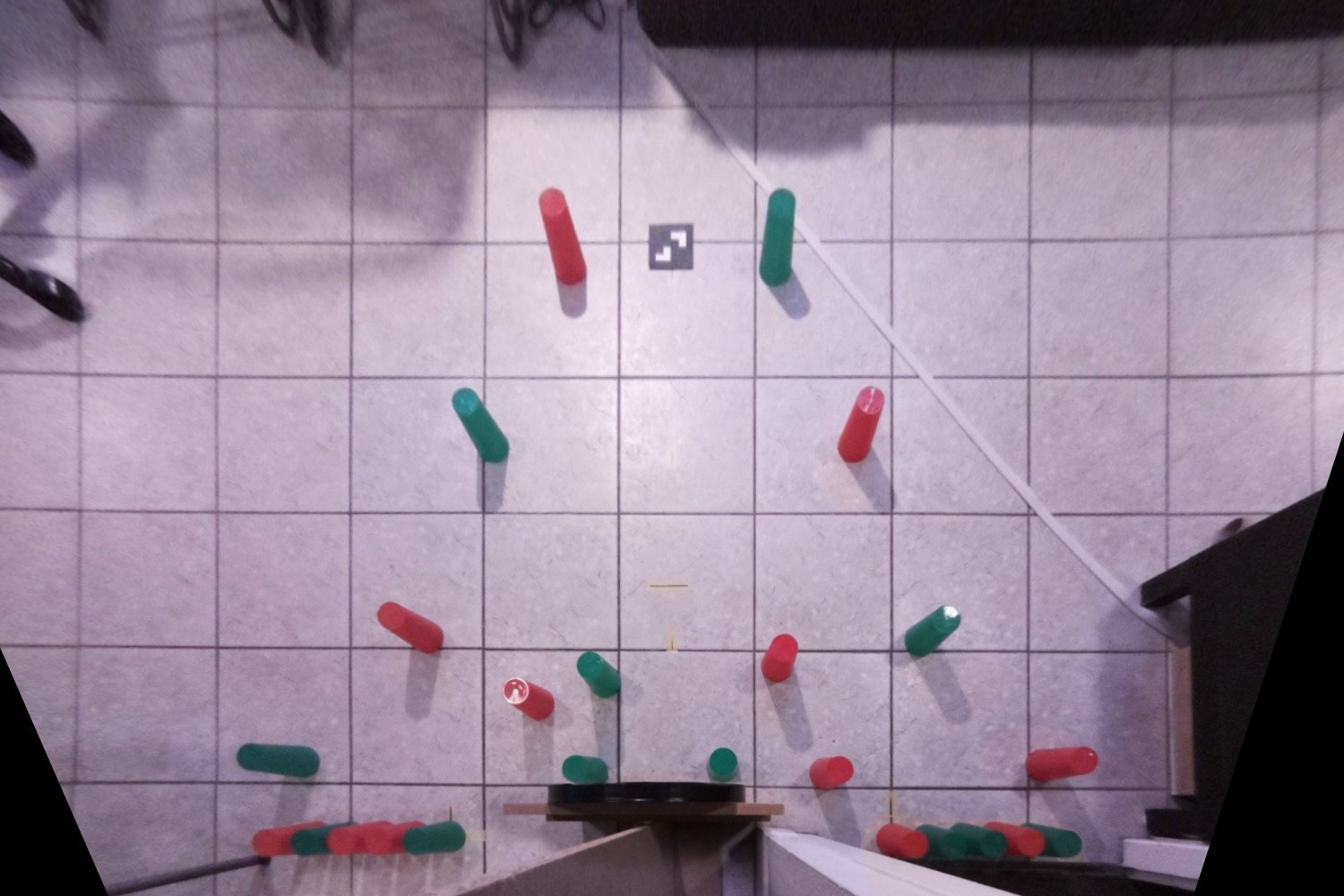

Step #3: projection in an orthonormal coordinate system

Gaultier Lecaillon
