-

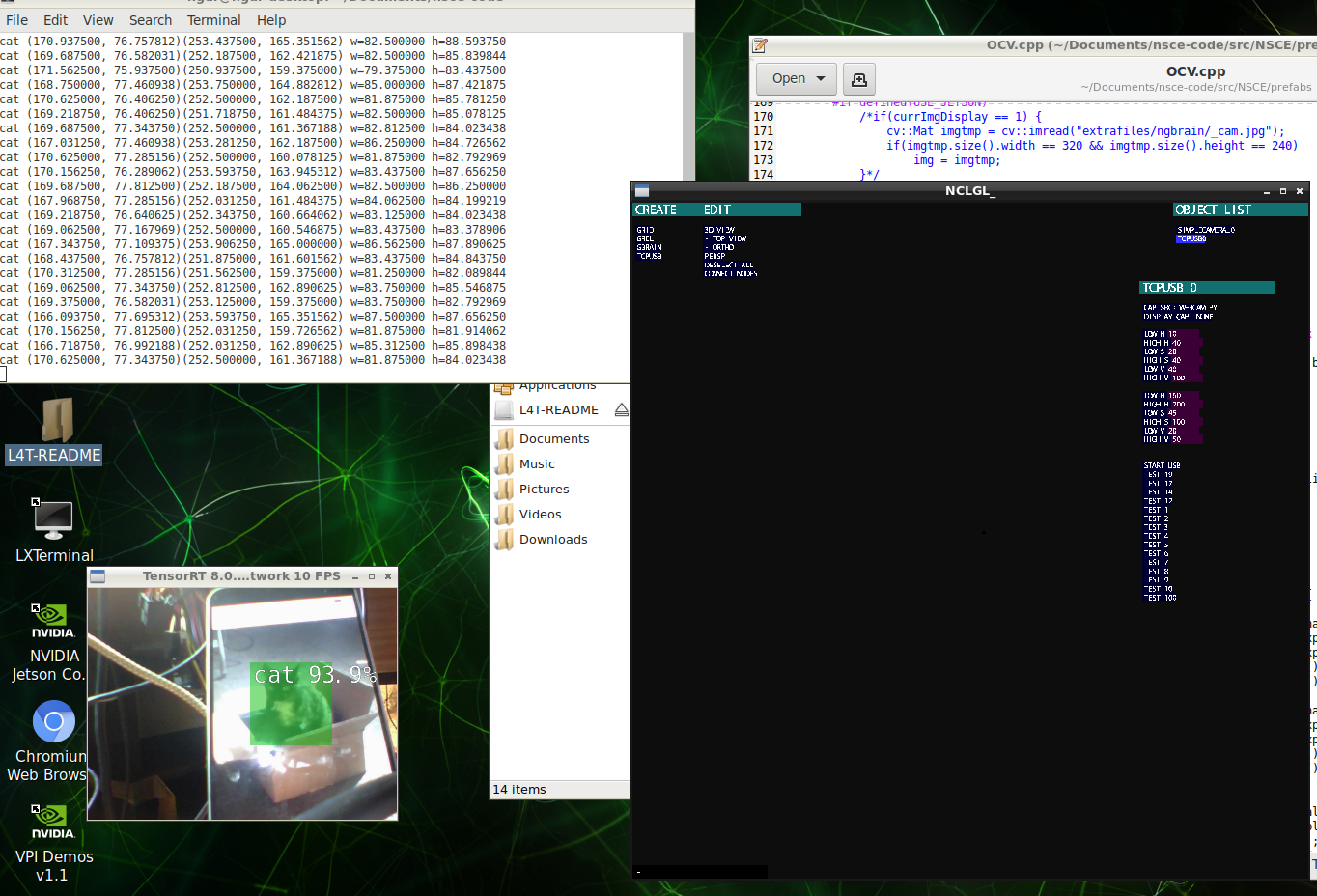

obtained bounding boxes with detectNet

01/08/2023 at 20:29 • 0 comments

I have now obtained bounding boxes of cats from detectNet.


-

USBTCP deterministic class

01/07/2023 at 01:12 • 0 comments

I made a simple USBTCP class that works without the neural network but still uses the OpenCV part.

I can also select the capture source for the OpenCV image (desktop or webcam) and which communication channel to use (USB or TCP).

For example, I can use the NGBrain class with OpenCV in desktop mode and receive, over TCP from Blender, the number of sensors and actions, so I can create the corresponding neurons and read their data. Then I send the actions back to Blender over TCP as well.

Or I can use the NGBrain class with OpenCV in webcam mode and receive, over USB from the master MCUs, the number of sensors and actions, so I can create the corresponding neurons and read their data. Then I send the actions back to the MCUs over USB as well.

The other combinations work too: OpenCV webcam mode with TCP, or OpenCV desktop mode with USB.

With the USBTCP class I don't receive data (over TCP or USB) to create neurons, and I don't use the neural network. It takes the image from OpenCV in desktop or webcam mode and receives/sends over TCP or USB using deterministic code.
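To make the four combinations concrete, here is a minimal Python sketch of the idea: pick a capture source, pick a link, and run a fixed rule between them with no neurons involved. Every name in it (`CaptureSource`, `Link`, `DeterministicLoop`, `grab`, `decide`, `send`) is hypothetical and only illustrates the structure; it is not the real USBTCP class, and the frame and the link are faked so the example runs without OpenCV or hardware.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product
from typing import Callable


class CaptureSource(Enum):
    DESKTOP = "desktop"
    WEBCAM = "webcam"


class Link(Enum):
    USB = "usb"
    TCP = "tcp"


@dataclass
class DeterministicLoop:
    """Hypothetical stand-in: image in, command out, no neural network."""
    source: CaptureSource
    link: Link
    grab: Callable[[], object]          # returns a frame (e.g. from OpenCV)
    decide: Callable[[object], bytes]   # deterministic rule: frame -> command
    send: Callable[[bytes], None]       # writes the command to USB or TCP

    def step(self) -> bytes:
        frame = self.grab()
        command = self.decide(frame)
        self.send(command)
        return command


sent = []  # fake link: commands are just collected in a list
loop = DeterministicLoop(
    source=CaptureSource.WEBCAM,
    link=Link.USB,
    grab=lambda: [[0, 255], [255, 0]],                   # fake 2x2 "frame"
    decide=lambda frame: b"L" if frame[0][0] else b"R",  # toy rule
    send=sent.append,
)
loop.step()

# All source/link pairs mentioned above.
combos = list(product(CaptureSource, Link))
```

Passing `grab` and `send` as plain callables keeps the deterministic rule independent of where the image comes from and where the command goes.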

I'm already using the USBTCP class to receive the OpenCV image from a webcam connected to a Jetson Nano and then drive the two wheels of a car (the Jetson sits on top of it) to follow a moving double-triangle marker.
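A deterministic rule for following the marker with two wheels could look like the sketch below. It assumes the marker's horizontal position in the image is already known, and the function name, the `base` speed and the `gain` are made-up values for illustration, not the ones running on the car.

```python
def wheel_speeds(marker_x, frame_width, base=0.5, gain=0.5):
    """Return (left, right) wheel speeds in [-1, 1] steering toward the marker."""
    # Horizontal error in [-1, 1]: negative means the marker is left of center.
    error = (marker_x - frame_width / 2) / (frame_width / 2)
    turn = gain * error

    def clamp(v):
        return max(-1.0, min(1.0, v))

    # Marker to the right -> left wheel faster -> the car turns right.
    return clamp(base + turn), clamp(base - turn)


centered = wheel_speeds(320, 640)    # marker in the middle: go straight
far_right = wheel_speeds(640, 640)   # marker at the right edge: turn right
```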

Now I'm going to use the Jetson Python code to run detectNet so that the car chases cats (or other things) instead of the double triangle. Like a M3gan ñ_ñ
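Switching from the marker to detectNet mostly means choosing which bounding box to chase. The sketch below assumes each detection carries a class label, a confidence and the box corners; the `Detection` class is only a stand-in written for this example, not the real jetson-inference type, and `pick_target` and its thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Detection:
    """Stand-in for one detection; not the real jetson-inference type."""
    label: str          # class name, e.g. "cat" (assumed already resolved)
    confidence: float   # 0.0 to 1.0
    left: float
    top: float
    right: float
    bottom: float

    @property
    def area(self) -> float:
        return max(0.0, self.right - self.left) * max(0.0, self.bottom - self.top)

    @property
    def center_x(self) -> float:
        return (self.left + self.right) / 2


def pick_target(detections: Iterable[Detection], wanted: str = "cat",
                min_confidence: float = 0.5) -> Optional[Detection]:
    """Pick the largest confident box of the wanted class, or None."""
    candidates = [d for d in detections
                  if d.label == wanted and d.confidence >= min_confidence]
    return max(candidates, key=lambda d: d.area, default=None)


boxes = [
    Detection("cat", 0.9, 100, 100, 200, 200),   # small cat
    Detection("cat", 0.8, 300, 50, 500, 350),    # biggest confident cat
    Detection("dog", 0.95, 0, 0, 640, 480),      # ignored: wrong class
    Detection("cat", 0.2, 0, 0, 640, 480),       # ignored: low confidence
]
target = pick_target(boxes)
```

The `center_x` of the chosen box can then take the place of the marker position in whatever steering rule the car already uses.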


-

Learning achieved!!

12/14/2022 at 02:11 • 0 comments

I hope fun things will come from this point on :D

-

project unpaused

10/25/2022 at 18:17 • 0 comments

Finally, after 31 house moves, I managed to leave the callous and stressful city and the shared flats, and I'm back in the countryside, in the company of birds, cats, sheep and friendly chickens. I like the city less every time.

I have also recovered almost all my things from the storage room.

Now I am on a super large farm with no disturbances of any kind :D.

About the project: I'm having problems getting backpropagation to work properly, and I'm currently preparing another class for reinforcement learning using Q-tables.
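For reference, this is the standard tabular Q-learning update in a few lines of Python. It is a generic sketch, not the class being prepared for this project; the state and action names and the values of `alpha`, `gamma` and `epsilon` are placeholders.

```python
import random
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """One Q-learning step: move Q(s, a) toward reward + gamma * max Q(s', a')."""
    best_next = max(q[(next_state, a)] for a in actions)
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])


def choose_action(q, state, actions, epsilon=0.1, rng=random):
    """Epsilon-greedy: explore with probability epsilon, else take the best action."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


q = defaultdict(float)          # the Q-table, every entry starts at 0.0
actions = ["left", "right"]
q_update(q, "s0", "right", reward=1.0, next_state="s1", actions=actions)
best = choose_action(q, "s0", actions, epsilon=0.0)
```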

-

project paused

07/19/2022 at 14:17 • 0 comments

I have had serious problems finding a peaceful home lately. I moved to a place that had been agreed on, but there were big setbacks, and I'm currently sharing a house after even bigger problems that followed leaving that house (a lodging house, sleeping in my ancient car with my belongings, no air conditioning during a heat wave, etc.). It's been crazy.

I don't want to be in a shared house, but the problems really could have been worse. I have received a lot of help from my job and I'm very grateful to them for that.

I had no choice and had to put all my belongings in a storage room :(. Currently I only have my clothes and my PC. It's a long story, the kind that keeps you awake.

I hope to be able to have everything back with me and continue with my normal life.

I don't know when that will be, but I hope soon.

I already have 27 house moves in my life :S. Always forward ;)

-

graphics card decay

06/22/2022 at 14:13 • 0 comments

I'm trying to continue with the project, but I'm having some problems with the GT220 now (it was about time). I think I should put this project on hold a little longer, also because I can see that I can't keep up my habits lately.

-

quick projects

04/07/2022 at 21:22 • 0 comments

This project is somewhat delayed because I'm still setting up my new cave and tools. And working for my job :)

I have also finished some quick projects first.

- a tester to measure kilovolts.

- a controller for the shaker and the resin scatter.

- revisiting BJT amplifiers (pre and power) a little and learning about speakers, triodes and pentodes.

- welded a frame to mount a three-phase motor and a grinder.


-

Move finished

03/08/2022 at 17:26 • 0 comments

Finally in my new house/laboratory, but one week without internet because of the internet companies (now Movistar).

-

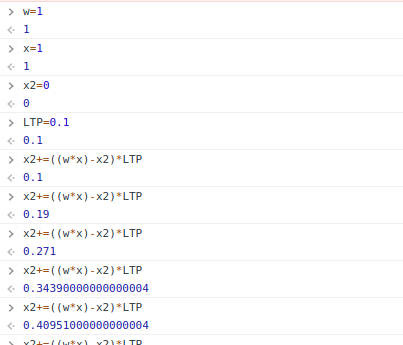

LTP & LTD

02/17/2022 at 18:32 • 0 comments

I'm still a neophyte regarding LTP, and I'm currently reading and investigating. This is for things other than backprop and the robot part I'm currently working on, and it is not related to them "for the moment".

About LTP, I previously commented about a filter on the weights, but after reading some more, what I said was not well oriented.

Long-term potentiation happens with high-frequency pre- and postsynaptic activity.

Long-term depression happens with low-frequency pre- and postsynaptic activity.

I will simply need to detect high or low pre-post activity and its frequency value:

10Hz = 0.1 = LTD

100Hz = 1.0 = LTP

Then just multiply this delta value by the weight value (value += inputNeuron*weight*0.1) for a controlled value propagation, like momentum or a capacitor (low farads = voltage increases more quickly = LTP; high farads = voltage increases more slowly = LTD), starting at 0.5 I think, and getting back to 0.5 "with time" :)
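A small Python sketch of that rule, under some assumptions: the delta is taken as the frequency divided by 100 and clamped to [0, 1] (which gives 0.1 at 10 Hz and 1.0 at 100 Hz), and "getting back to 0.5" is modeled as a slow exponential return to a resting value. The function names and the `rate` are invented for the example.

```python
def plasticity_from_frequency(freq_hz):
    """Map pre/post activity frequency to a delta: 10 Hz -> 0.1, 100 Hz -> 1.0."""
    return max(0.0, min(1.0, freq_hz / 100.0))


def relax(delta, rest=0.5, rate=0.1):
    """Let the delta drift back toward its resting value over time."""
    return delta + rate * (rest - delta)


def propagate(value, input_neuron, weight, delta):
    """The update from the text: value += inputNeuron * weight * delta."""
    return value + input_neuron * weight * delta


ltd = plasticity_from_frequency(10)    # low frequency: small delta (LTD side)
ltp = plasticity_from_frequency(100)   # high frequency: large delta (LTP side)

slow = propagate(0.0, 1.0, 0.8, ltd)   # value rises slowly, like a big capacitor
fast = propagate(0.0, 1.0, 0.8, ltp)   # value rises quickly, like a small one

delta = ltp
for _ in range(100):                   # with no activity, drift back toward 0.5
    delta = relax(delta)
```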


I will use this thread for updates about this. :D

-

manual plasticity

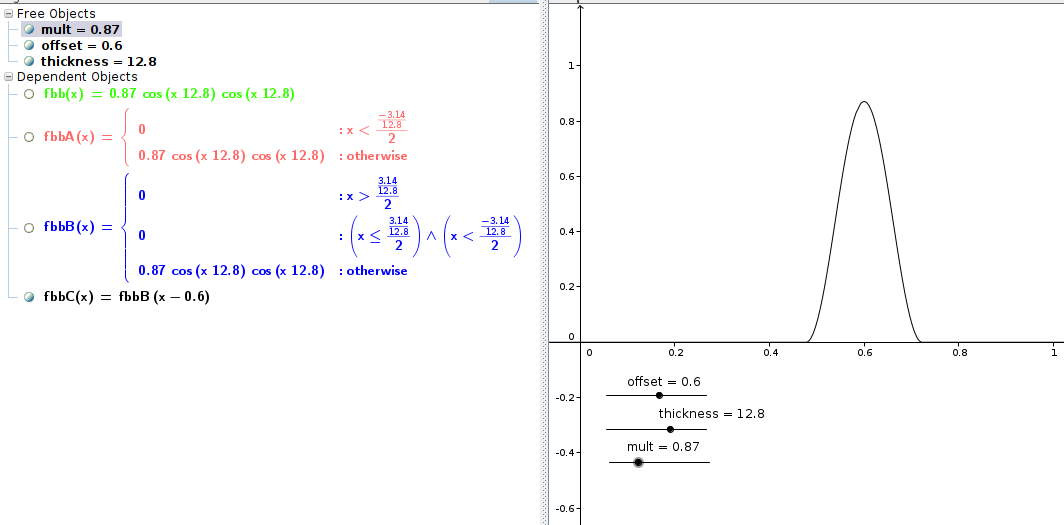

02/13/2022 at 05:43 • 0 comments

For future experiments on the way to achieving some kind of LTP system.

Neurons can join or leave dynamically according to things I'm going to test in this mode...

Only hidden neurons can "explore". When one is allowed to join and it is near some input neuron, it acts as "parent"; otherwise it acts as child.

As a note: I have an ancient GT220 (3 displays)...

Maybe I can add some value, like a band-pass filter, that adapts over time and shifts toward the value/range that is habitually used most (according to amplitude, variations over time...), to be multiplied afterwards with a neuron output or weight.

I think storing this value along with the weight is more adequate if this only happens with presynaptic and postsynaptic activity at the same time, something only detectable through the connection. Updating this value would create a kind of complete route from some input value to some output, in order to sensitize it to a past/specific input type.

It stays frozen, only amplifying the specific range of input values that was used most, so it works like a type of memory for a specific input or task without needing to disconnect weights for a new task, and it would have much less effect on other neurons that have weights trained for multiple tasks.


https://www.geogebra.org/m/kd5w84bt

The thickness would narrow over time, making each displacement more difficult. This allows more refinement, force, contrast and differentiation for that route or input state, and allows putting new neurons into the net for this or other tasks (with wide thickness and starting with low amplitude), because the differentiated neuron now only accepts specific weight values and is transparent to other values: its thickness is already narrow, magnifying only where gradient descent led it.

I also want to test what happens if these differentiated weights are detected by the neuron, which could then hold its output for longer according to how many of them there are.
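One possible way to play with this idea in Python is a Gaussian-shaped window stored next to each weight. This is only a sketch under my own assumptions: the Gaussian shape, the class name `AdaptiveBand` and all the rates are invented for the experiment, and a gain of 1.0 outside the band stands for "transparent".

```python
import math
from dataclasses import dataclass


@dataclass
class AdaptiveBand:
    """Hypothetical band-pass-like gain stored alongside one weight."""
    center: float = 0.0
    width: float = 1.0        # "thickness": wide at the start
    amplitude: float = 0.1    # new neurons start with low amplitude
    min_width: float = 0.05

    def gain(self, x):
        # 1.0 (transparent) far from the band, amplified near the center.
        bump = math.exp(-((x - self.center) ** 2) / (2 * self.width ** 2))
        return 1.0 + self.amplitude * bump

    def adapt(self, x, shrink=0.9, grow=0.1):
        # Shift toward the value in use; a narrow band moves less each time.
        self.center += 0.5 * min(1.0, self.width) * (x - self.center)
        # Narrow the band and raise its amplitude as it specializes.
        self.width = max(self.min_width, self.width * shrink)
        self.amplitude += grow * (1.0 - self.amplitude)


band = AdaptiveBand()
for _ in range(30):           # the same input range is used again and again
    band.adapt(2.0)

before = band.center
band.adapt(-2.0)              # a different input barely displaces it now
late_shift = abs(band.center - before)
```

After the repeated inputs the band sits near 2.0 with a narrow width, so that range is amplified while other values pass through unchanged, and a later displacement is much smaller than the first one was.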

3drobert
