Introduction

There is a huge demand for fast and effective detection of COVID-19. Many studies suggest that respiratory sounds, such as coughing and breathing, are among the most effective sources of data for detecting COVID-19. The aim of this project is to show how a common respiratory symptom such as a cough can be detected from audio data and distinguished from healthy breathing. The same technique could be extended to identify serious respiratory diseases such as pneumonia and COVID-19. Several published studies report that COVID-19 can be identified effectively from cough recordings. Some of these findings are listed below:

- Artificial intelligence model detects asymptomatic Covid-19 infections through cellphone-recorded coughs

- COVID-19 Artificial Intelligence Diagnosis Using Only Cough Recordings

- AI4COVID-19: AI enabled preliminary diagnosis for COVID-19 from cough samples via an app

- Identifying COVID-19 by using spectral analysis of cough recordings: a distinctive classification study

- Robust Detection of COVID-19 in Cough Sounds: Using Recurrence Dynamics and Variable Markov Model

- COVID-19 cough classification using machine learning and global smartphone recordings

- COVID-19 Detection in Cough, Breath and Speech using Deep Transfer Learning and Bottleneck Features

These are just a few of the published findings. In this project, I have developed a machine learning (ML) model using Edge Impulse Studio that uses mel-frequency cepstral coefficients (MFCC) to extract features from sound recordings and classify them as healthy breathing or cough. Building an ML model that detects COVID-19 itself requires a large amount of high-quality, accurately labelled voice recordings, which are currently not available. However, this project shows that such a system could be built using the technique described here. The data for building such a system can be collected using the technique described in the following article:

Utilize the Power of the Crowd for Data Collection

Crowdsourced data collection, however, also requires a lot of time and contributions from many people. Apart from analyzing sound data, people also need to maintain a proper distance in public places to minimize the spread of respiratory infections. The sensor node in this project therefore also alerts its user to keep a proper distance when they come close to other people in public. In this way, the system helps create and maintain Healthy Spaces.
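The distancing alert described above can be sketched as a small piece of decision logic. This is a minimal Python sketch under stated assumptions, not the project's firmware: it assumes the PIR sensor is polled at a fixed interval and read as a boolean (motion detected or not), and the names `proximity_alerts` and `ALERT_HOLD_SAMPLES` are hypothetical. Because a PIR sensor reports infrared motion within its field of view rather than a measured distance, the effective alert range depends on the sensor's detection range and placement.

```python
from typing import Iterable, List

# Hypothetical parameter: number of samples (counted from the most recent PIR
# trigger) during which the alert stays on, so it does not flicker off between
# consecutive motion detections.
ALERT_HOLD_SAMPLES = 3


def proximity_alerts(pir_samples: Iterable[bool],
                     hold: int = ALERT_HOLD_SAMPLES) -> List[bool]:
    """Map raw PIR readings (True = motion detected) to alert on/off states."""
    alerts: List[bool] = []
    remaining = 0  # samples left before the alert is cleared
    for triggered in pir_samples:
        if triggered:
            remaining = hold  # (re)start the hold window on every trigger
        alerts.append(remaining > 0)
        if remaining > 0:
            remaining -= 1
    return alerts


if __name__ == "__main__":
    readings = [False, True, False, False, False, False, True, False]
    for sample, alert in zip(readings, proximity_alerts(readings)):
        print(f"PIR={int(sample)} -> {'KEEP DISTANCE' if alert else 'ok'}")
```

Holding the alert for a few samples is a design choice: a PIR sensor may stop reporting a person who stands still, so a short hold keeps the on-screen warning from flickering. With the example readings and `hold=3`, each trigger keeps the alert on for three samples, including the trigger itself.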

The Project Name

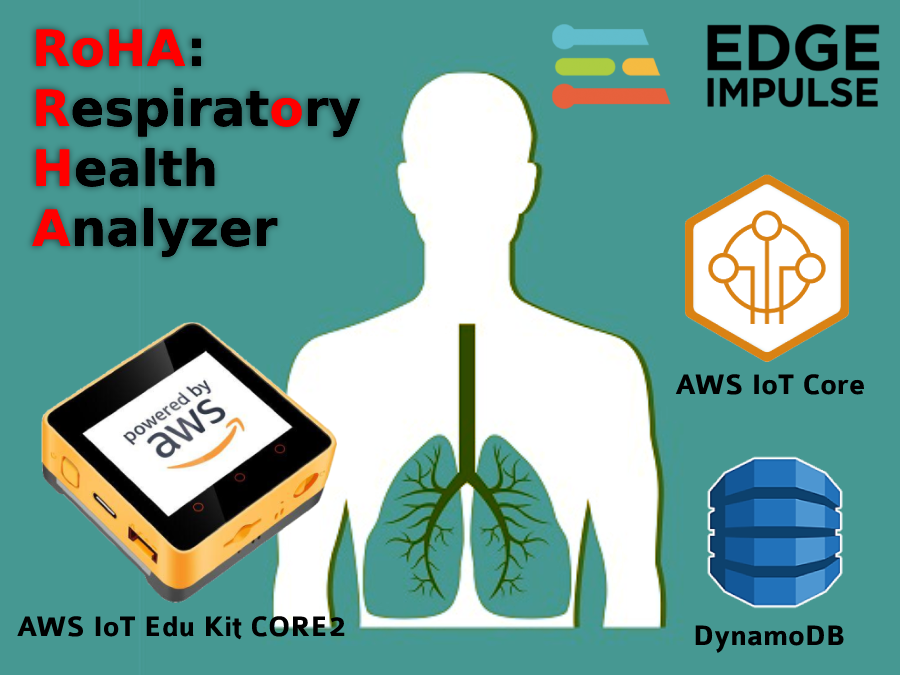

The name of the project is Respiratory Health Analyzer, abbreviated as RoHA. RoHA is an Urdu word meaning soul or life. The aim of this project is also to help save human lives by creating Healthy Spaces.

The Architecture

The following figure shows the architecture of the RoHA project. The tinyML model is developed using Edge Impulse. It processes the audio using MFCC to extract features, which are then used to analyze patterns in the sound data. The model takes its input from the built-in microphone on the AWS IoT EduKit and analyzes respiratory health based on the sound recording. The inference result is displayed on the sensor node and is also sent to AWS IoT Core using the MQTT protocol. From there, the data is forwarded to DynamoDB for permanent storage. A custom web app built using PHP gathers the data from DynamoDB and displays the respiratory health status, the cough count, and the healthy count. The sensor node also uses a PIR sensor to detect when people come too close and alerts the user on the sensor node's screen.

RoHA Architecture
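The MFCC front end in this architecture can be illustrated with a minimal, dependency-free Python sketch of the textbook pipeline for a single audio frame: Hamming window, power spectrum, triangular mel filter bank, logarithm, and DCT-II. It is not Edge Impulse's implementation, whose MFCC block has its own parameters and code; the 16 kHz sample rate, 512-point transform, 26 filters, and 13 coefficients used here are illustrative assumptions, and a naive DFT stands in for an FFT to keep the example self-contained. Vectors like these, computed frame by frame, are the kind of features the classifier uses to tell a cough from healthy breathing.

```python
import cmath
import math
from typing import List


def hz_to_mel(f: float) -> float:
    """HTK-style mel scale: m = 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def power_spectrum(frame: List[float], n_fft: int) -> List[float]:
    """Hamming-window the frame, zero-pad to n_fft, return |DFT|^2 / n_fft
    for bins 0..n_fft/2 (naive DFT, O(n_fft^2), for illustration only)."""
    n = len(frame)
    if n < 2 or n > n_fft:
        raise ValueError("need 2 <= len(frame) <= n_fft")
    padded = [x * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)))
              for i, x in enumerate(frame)] + [0.0] * (n_fft - n)
    spectrum = []
    for k in range(n_fft // 2 + 1):
        acc = sum(padded[t] * cmath.exp(-2j * math.pi * k * t / n_fft)
                  for t in range(n_fft))
        spectrum.append(abs(acc) ** 2 / n_fft)
    return spectrum


def mel_filterbank(n_filters: int, n_fft: int,
                   sample_rate: int) -> List[List[float]]:
    """Triangular filters spaced evenly on the mel scale from 0 Hz to Nyquist."""
    top = hz_to_mel(sample_rate / 2)
    mel_points = [top * i / (n_filters + 1) for i in range(n_filters + 2)]
    bins = [math.floor((n_fft + 1) * mel_to_hz(m) / sample_rate)
            for m in mel_points]
    bank = []
    for j in range(1, n_filters + 1):
        left, center, right = bins[j - 1], bins[j], bins[j + 1]
        weights = [0.0] * (n_fft // 2 + 1)
        for k in range(left, center):       # rising edge
            weights[k] = (k - left) / (center - left)
        for k in range(center, right):      # falling edge (peak 1.0 at center)
            weights[k] = (right - k) / (right - center)
        bank.append(weights)
    return bank


def mfcc(frame: List[float], sample_rate: int = 16000, n_fft: int = 512,
         n_filters: int = 26, n_coeffs: int = 13) -> List[float]:
    """MFCCs of one frame: power spectrum -> mel energies -> log -> DCT-II."""
    spectrum = power_spectrum(frame, n_fft)
    log_energies = []
    for weights in mel_filterbank(n_filters, n_fft, sample_rate):
        energy = sum(w * p for w, p in zip(weights, spectrum))
        log_energies.append(math.log(max(energy, 1e-10)))  # floor avoids log(0)
    # Unnormalised DCT-II decorrelates the log filter-bank energies.
    return [sum(e * math.cos(math.pi * c * (j + 0.5) / n_filters)
                for j, e in enumerate(log_energies))
            for c in range(n_coeffs)]


if __name__ == "__main__":
    sr = 16000
    # One 25 ms frame (400 samples) of a 1 kHz tone as stand-in audio.
    frame = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(400)]
    print([round(c, 2) for c in mfcc(frame, sample_rate=sr)])
```

The mel scale compresses high frequencies, so the filters are narrow at low frequencies and wide at high ones; the final DCT keeps only a handful of coefficients, which makes the feature vector small enough for a microcontroller-sized classifier.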

The Steps

The following steps are involved in developing this project:

- Create a tinyML model using Edge Impulse

- Set up the environment for the AWS IoT EduKit

- Configure AWS IoT Core

- Configure IAM

- Configure the DynamoDB service

- Create an AWS IoT rule for DynamoDB

- Test the AWS IoT rule and DynamoDB table...
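The IAM, DynamoDB, and AWS IoT rule steps listed above come down to a few small JSON documents. The Python sketch below only builds them and sends nothing to AWS. The topic `roha/health`, table `RoHAData`, role name, account ID, region, and message fields are all hypothetical placeholders rather than the project's actual configuration, and the table is assumed to use `device_id` as its partition key and `ts_ms` as its sort key. The rule payload follows the shape accepted by boto3's `iot.create_topic_rule(ruleName=..., topicRulePayload=...)`, and the policies let AWS IoT assume a role that may call `dynamodb:PutItem` on that table.

```python
import json
import time
from typing import Any, Dict

# Hypothetical placeholders, not the project's real configuration.
REGION = "us-east-1"
ACCOUNT_ID = "123456789012"
TOPIC = "roha/health"
TABLE_NAME = "RoHAData"  # assumed keys: device_id (partition), ts_ms (sort)
ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/RoHAIoTToDynamoDB"


def make_message(device_id: str, health_status: str,
                 cough_count: int, healthy_count: int) -> str:
    """JSON payload the sensor node could publish to TOPIC after an inference."""
    return json.dumps({
        "device_id": device_id,
        "ts_ms": int(time.time() * 1000),  # milliseconds since the epoch
        "health_status": health_status,    # e.g. "cough" or "healthy"
        "cough_count": cough_count,
        "healthy_count": healthy_count,
    })


def topic_rule_payload() -> Dict[str, Any]:
    """AWS IoT rule copying every message on TOPIC into TABLE_NAME.

    The dynamoDBv2 action writes each top-level JSON attribute as its own
    column, so every message must carry the table's key attributes.
    """
    return {
        "sql": f"SELECT * FROM '{TOPIC}'",
        "awsIotSqlVersion": "2016-03-23",
        "ruleDisabled": False,
        "actions": [{
            "dynamoDBv2": {
                "roleArn": ROLE_ARN,
                "putItem": {"tableName": TABLE_NAME},
            }
        }],
    }


def role_policies() -> Dict[str, Dict[str, Any]]:
    """IAM trust policy (AWS IoT may assume the role) and permission policy."""
    trust = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "iot.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }
    permissions = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "dynamodb:PutItem",
            "Resource": f"arn:aws:dynamodb:{REGION}:{ACCOUNT_ID}:table/{TABLE_NAME}",
        }],
    }
    return {"trust": trust, "permissions": permissions}


if __name__ == "__main__":
    print(make_message("edukit-01", "cough", 3, 12))
    print(json.dumps(topic_rule_payload(), indent=2))
    print(json.dumps(role_policies(), indent=2))
    # With boto3 installed and credentials configured, the rule could be created:
    #   boto3.client("iot").create_topic_rule(
    #       ruleName="RoHA_to_DynamoDB", topicRulePayload=topic_rule_payload())
```

To test the rule, a message like the one printed by `make_message` can be published from the MQTT test client in the AWS IoT console; if the rule and role are set up correctly, a matching item should appear in the table, where the web app can read the status and counts. The attribute names avoid `status` and `timestamp` because both are DynamoDB reserved words.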

timothy.malche

IOTMCU

vivek gupta

Salvador Mendoza

Anmol Agrawal
