-

CONTEST FINAL SUBMISSION

04/05/2022 at 22:02

The contest may have ended, but I will continue to work on this project and update you on my progress periodically. Remember that all the code for this project, including the OAK-D Lite blob file, is available in this project's GitHub repository. Thanks to those who have helped me along the way. This project was challenging, and I learned a lot about OpenVINO and DepthAI in the process. It was quite the journey, and we are so glad that it is now an operational Livestock and Pet GeoFencing System. As we came to the end of our journey, it was incredibly rewarding to use it at home with my own pets. I have been in contact with a local livestock ranch, and we look forward to trying it out with a larger range of animals. We had great fun with the optional animal classification and look forward to trying it on a much larger scale soon. We are deeply grateful to OpenCV and the Spatial AI Contest partners and look forward to seeing what the other teams have accomplished.

-

Animal Detection and Classification

04/05/2022 at 21:54

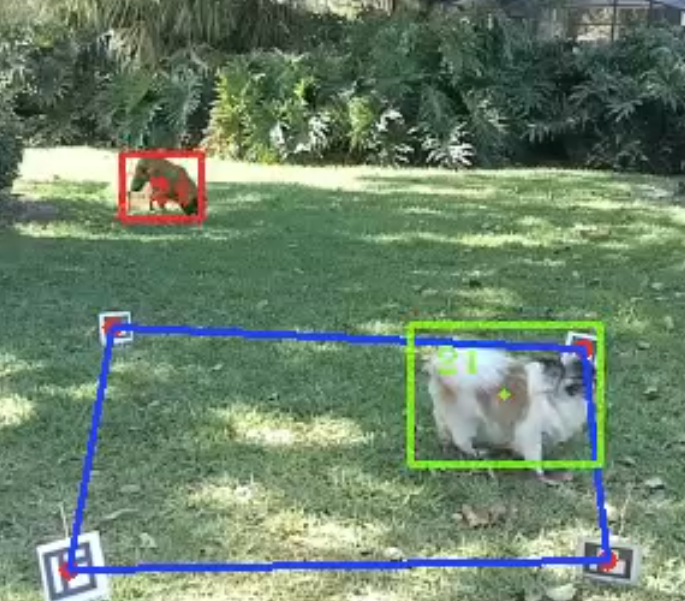

As mentioned in a previous post, we used OpenVINO's SSDLite MobileNet V2 Intermediate Representation and converted it into a blob file for our OAK-D Lite camera. This model works well as a detector but often misclassifies animals. Sheldon, the Tibetan Spaniel from the previous post, is consistently identified as a cat, sheep, or horse, while the bulldog is consistently classified as a dog or a horse. This consistent misidentification made us scale back our original plan to include a predator warning feature in our autonomous system.
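Because geofencing only needs to know that some animal is present, one way to live with the cat/sheep/horse/dog confusion is to collapse every animal class into a single "animal" result. The sketch below assumes the 91-class COCO label map (background at index 0) that SSD-style COCO models commonly use; check the IDs against your model's own label file before relying on them. The `Detection` and `AnimalHit` types and the 0.5 threshold are hypothetical, not part of the project code.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Assumed 91-class COCO label IDs for animals (verify against your label file).
COCO_ANIMAL_LABELS: Dict[int, str] = {
    16: "bird", 17: "cat", 18: "dog", 19: "horse", 20: "sheep",
    21: "cow", 22: "elephant", 23: "bear", 24: "zebra", 25: "giraffe",
}

BBox = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


@dataclass
class Detection:
    """Hypothetical container for one raw detector output."""
    label: int
    confidence: float
    bbox: BBox


@dataclass
class AnimalHit:
    """A detection treated as a generic animal; raw_name is kept only for logging."""
    confidence: float
    bbox: BBox
    raw_name: str


def animals_only(detections: List[Detection], min_confidence: float = 0.5) -> List[AnimalHit]:
    """Keep confident animal detections and ignore the (unreliable) species label."""
    hits = []
    for det in detections:
        name = COCO_ANIMAL_LABELS.get(det.label)
        if name is not None and det.confidence >= min_confidence:
            hits.append(AnimalHit(det.confidence, det.bbox, name))
    return hits
```

With this approach, Sheldon showing up as a "cat" or a "sheep" simply counts as an animal, which is all the fence check needs.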

-

I can see ArUco markers!

04/05/2022 at 19:45

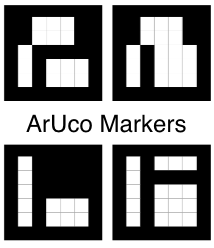

What is there to say about ArUco markers? They are about the least informative square markers there are; even a barcode gives you more information. But they serve a purpose: helping computer vision systems locate a specific point in space. ArUco markers and similar fiducials are all around us, from shopping malls to NASA space equipment.

They come in different grid sizes, and I finally settled on a 7x7 grid. Printed on half- to full-size letter paper, they can easily mark the edges of a good-sized backyard, and that is exactly how we used them.
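Using markers to outline the yard reduces the geofence check to a point-in-polygon test in image coordinates. This is a minimal sketch, not the project's code: it assumes the camera is held still while checking, that the boundary markers and the animal are visible in the same frame, and that the animal's position is the bottom center of its bounding box. Marker corners are expected as four (x, y) points per marker; with OpenCV's ArUco detector that is `corners[i][0]`. Ordering the marker centers by angle only gives a correct outline for convex layouts, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def marker_center(corners: Sequence[Point]) -> Point:
    """Average the four corner points of one detected marker."""
    xs = [p[0] for p in corners]
    ys = [p[1] for p in corners]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def fence_polygon(marker_corners: Sequence[Sequence[Point]]) -> List[Point]:
    """Order marker centers by angle around their centroid (valid for convex yards)."""
    centers = [marker_center(c) for c in marker_corners]
    cx = sum(p[0] for p in centers) / len(centers)
    cy = sum(p[1] for p in centers) / len(centers)
    return sorted(centers, key=lambda p: math.atan2(p[1] - cy, p[0] - cx))


def point_in_polygon(pt: Point, poly: Sequence[Point]) -> bool:
    """Even-odd ray casting: count edge crossings of a ray going right from pt."""
    x, y = pt
    inside = False
    for i in range(len(poly)):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % len(poly)]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def animal_inside(bbox: Tuple[float, float, float, float],
                  marker_corners: Sequence[Sequence[Point]]) -> bool:
    """Use the bottom center of (xmin, ymin, xmax, ymax) as the animal's ground point."""
    xmin, _ymin, xmax, ymax = bbox
    foot = ((xmin + xmax) / 2, ymax)
    return point_in_polygon(foot, fence_polygon(marker_corners))
```

Using the bottom of the box rather than its center is a design choice: it approximates where the animal touches the ground, which is what the markers on the ground outline.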


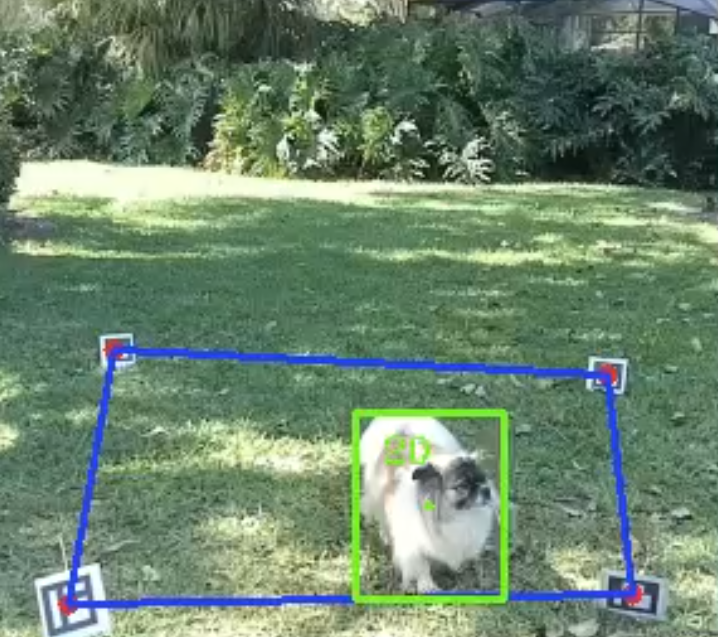

During testing, we put them through their paces. We started with markers about an inch in size and tested them in different configurations and lighting conditions. Markers with lower ID numbers seemed to be detectable from farther away than those with higher IDs. The markers pictured are slightly smaller than a half sheet of letter-size paper (about 5 inches).


These markers worked out really well. Sheldon, the Tibetan Spaniel pictured above, was given treats for being such a good model. The picture is a frame from the OAK-D Lite camera; the frame is 300x300 pixels. We use a smaller image to speed up detection and classification.

-

It's all about that base!

04/05/2022 at 17:46

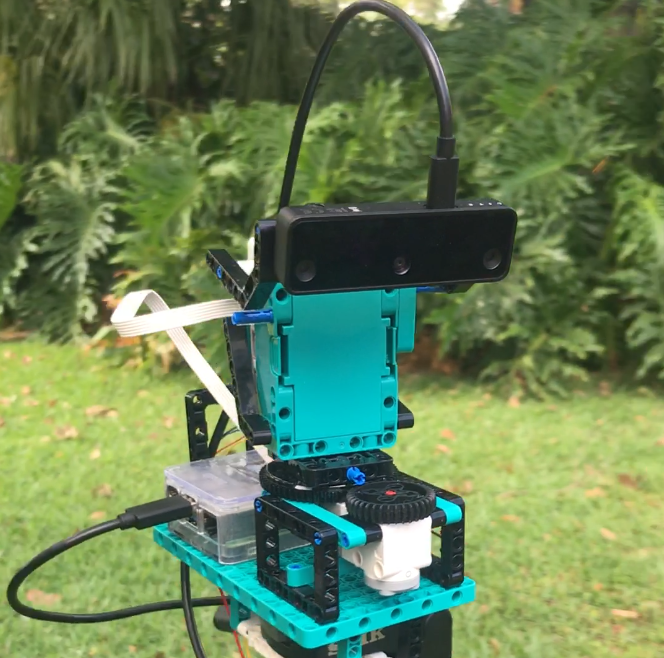

The first design, prior to the LEGO kit arriving.

First major LEGO version.


Current LEGO version.

The pan and tilt base went through several revisions before reaching its present form. In the current LEGO revision, the camera was flipped so that the cable does not catch on the base while rotating. The tilt motor was removed and replaced by a pin that can be manually raised or lowered. The gear ratio was changed from roughly 4:1 to 1.6:1. A special holder was built so that the base can be mounted on a tripod, and a cable management bracket was added at the back. An IMU holder was placed on the side of the hub, and cables were routed out the top and through the cable management bracket. The result is simple and efficiently meets our needs.
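To see what the change from roughly 4:1 to 1.6:1 means in practice, assume the ratio is motor turns per camera turn and that the hub reports motor position in whole degrees (both are assumptions, not measurements from this build). The required motor rotation then scales with the ratio, and the smallest camera step is one encoder degree divided by the ratio. The function names are hypothetical.

```python
def motor_degrees(pan_deg: float, ratio: float) -> float:
    """Motor rotation needed to pan the camera by pan_deg (ratio = motor:camera turns)."""
    return pan_deg * ratio


def pan_step_deg(ratio: float, encoder_step_deg: float = 1.0) -> float:
    """Smallest camera pan step produced by a one-step change in motor position."""
    return encoder_step_deg / ratio


for ratio in (4.0, 1.6):
    print(f"{ratio}:1 -> 90 deg pan needs {motor_degrees(90, ratio):.0f} motor deg, "
          f"finest step {pan_step_deg(ratio):.3f} deg")
```

Under these assumptions, a 90-degree pan needs 144 motor degrees at 1.6:1 instead of 360 at 4:1. The lower ratio pans faster for a given motor speed, at the cost of coarser positioning and less torque multiplication.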

-

Hub2Hub Bluetooth or Hardwired Serial Connection

04/01/2022 at 04:52

I have been racking my brain over what to do next. It is a tale as old as time: someone creates a fantastic Python program that looks like it will solve all your woes, but it turns out to be a headache to implement for one reason or another. The Hub2Hub repository was one such headache. It is filled with a lot of great information, but unfortunately, I could not get the demo that uses the LEGO Hub and a Raspberry Pi Zero to work.

Later, I decided to establish a serial connection between the LEGO Hub and a Raspberry Pi Zero. I found a great website that outlined what each pin in the LEGO Hub ports was for. I was able to establish a connection, but data was not being transmitted and received correctly. I tried everything I could think of to fix the problem, but this seems to be a dead end for now.
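When a link connects but the bytes arrive garbled, a mismatched baud rate or framing setting on one side is a common culprit. One way to tell corruption apart from silence is to wrap each message in a small frame with a checksum. The sketch below is hypothetical and not something the LEGO hub firmware provides: an ASCII frame of the form `<payload*XX>` followed by a newline, where `XX` is the XOR of the payload bytes in hex (the same idea NMEA sentences use).

```python
from typing import Optional


def checksum(data: bytes) -> int:
    """XOR of all payload bytes."""
    value = 0
    for byte in data:
        value ^= byte
    return value


def encode_frame(payload: str) -> bytes:
    """Frame a message as <payload*XX> plus a newline, ready to write to the port."""
    data = payload.encode("ascii")
    if b"*" in data or b">" in data:
        raise ValueError("payload may not contain '*' or '>'")
    return b"<" + data + b"*" + f"{checksum(data):02X}".encode("ascii") + b">\n"


def decode_frame(line: bytes) -> Optional[str]:
    """Return the payload if the frame is intact, or None if it is malformed or corrupted."""
    line = line.strip()
    if not (line.startswith(b"<") and line.endswith(b">")):
        return None
    data, sep, check = line[1:-1].rpartition(b"*")
    if not sep:
        return None
    try:
        expected = int(check, 16)
        if checksum(data) != expected:
            return None
        return data.decode("ascii")
    except (ValueError, UnicodeDecodeError):
        return None
```

On the Pi side, the encoded bytes could be written with pyserial, for example `serial.Serial("/dev/serial0", baudrate=115200).write(frame)`, and incoming lines read with `readline()`; the 115200 baud rate is a placeholder that must match whatever the hub side uses. If `decode_frame` keeps returning None while bytes are arriving, the two ends probably disagree on baud rate or framing.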

I will look for another solution.

-

Know When It is Time to Change

04/01/2022 at 04:39

After many weeks of trying to get Microsoft's MegaDetector to run on the OAK-D Lite, I have switched to OpenVINO's SSDLite MobileNet V2 blob. During testing, it did not consistently give animals their correct label, but it did identify them as animals. This isn't ideal, but it will work as a proof of concept.

Converting OpenVINO's Intermediate Representation to a blob was a breeze. I did it without any issues using the Luxonis MyriadX Blob Converter. I also tested converting the IR files with the BlobConverter command-line interface, and it worked just as well.
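The same conversion can be scripted with the blobconverter Python package, which sends the IR to Luxonis' online conversion service and returns the path of the downloaded blob, so it needs network access. This is a sketch: the FP16 data type and 6 SHAVEs are assumed values, not the settings used for this project's blob, and `convert_ir_to_blob` is a hypothetical helper.

```python
from pathlib import Path


def convert_ir_to_blob(xml_path: str, shaves: int = 6, data_type: str = "FP16") -> Path:
    """Compile an OpenVINO IR (.xml plus matching .bin) into a MyriadX .blob."""
    xml = Path(xml_path)
    bin_file = xml.with_suffix(".bin")
    # Fail early with a clear message instead of a remote conversion error.
    for path in (xml, bin_file):
        if not path.is_file():
            raise FileNotFoundError(f"missing IR file: {path}")

    import blobconverter  # imported here so the file checks work without the package

    blob_path = blobconverter.from_openvino(
        xml=str(xml),
        bin=str(bin_file),
        data_type=data_type,
        shaves=shaves,
    )
    return Path(blob_path)
```

The returned path can then be handed to a DepthAI neural network node through its `setBlobPath()` method.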

I'm excited to see some real progress after feeling stuck for so long.

-

Lack of Updates

03/31/2022 at 19:53

Sorry for not posting these past several weeks. During spring break, I went to help my in-laws rebuild their house. It was knocked down during a hurricane, and rebuilding has taken quite a bit of time; we are probably a year away from restoring it to its former glory. While there, I injured myself and have been out of commission. The following posts are the updates you would have received.

-

Three Blobs in the Bush and Nothing in the Hand.

02/25/2022 at 06:33

I have racked my brain in search of a way to get the blobs to run on the OAK-D Lite. I tried both the DepthAI SDK and the DepthAI API. The code I used is available on our project's GitHub repository, as are the Intermediate Representations (IRs) and blobs. Files too large to upload to GitHub have been placed on our Google Drive, and a link to it is posted on the repository.

Our main issue is figuring out what is causing the following errors.

SDK Output:

[1844301091F6B11200] [96.434] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.464] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.497] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.533] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
Starting Loop
[1844301091F6B11200] [96.566] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.598] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.633] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.662] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.696] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.732] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.763] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.793] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.830] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
[1844301091F6B11200] [96.859] [ImageManip(5)] [error] Output image is bigger (1080000B) than maximum frame size specified in properties (1048576B) - skipping frame
Traceback (most recent call last):
  File "Desktop/opencv_comp/rgb_md_api.py", line 88, in <module>
    in_rgb = q_rgb.tryGet()
RuntimeError: Communication exception - possible device error/misconfiguration. Original message 'Couldn't read data from stream: 'rgb' (X_LINK_ERROR)'

API Output:

Traceback (most recent call last):
  File "Desktop/opencv_comp/rgb_md_api.py", line 89, in <module>
    in_rgb = q_rgb.tryGet()
RuntimeError: Communication exception - possible device error/misconfiguration. Original message 'Couldn't read data from stream: 'rgb' (X_LINK_ERROR)'

Back to the grindstone. I have one more possible solution. If it doesn't work, I will switch models. This is not what I want, but it might be what I must do.
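The numbers in the SDK log point at one likely cause of the skipped frames: 1080000 bytes is exactly 600 × 600 × 3, the size of a 600x600 three-channel image, while the limit reported in the log is 1048576 bytes (1 MiB). DepthAI's ImageManip node has a `setMaxOutputFrameSize()` method for raising that limit. The sketch below assumes a 600x600 resize target with 3 bytes per pixel (BGR888); it accepts any object with the ImageManip interface, so the size arithmetic can be checked without a camera attached. It does not address the X_LINK_ERROR, which may have a separate cause.

```python
def frame_bytes(width: int, height: int, channels: int = 3) -> int:
    """Size of one 8-bit image frame (e.g. BGR888) in bytes."""
    return width * height * channels


def configure_resize(manip, width: int, height: int, channels: int = 3) -> int:
    """Set the resize target on an ImageManip node and raise its output limit to fit.

    `manip` is expected to be a DepthAI ImageManip node,
    e.g. pipeline.create(dai.node.ImageManip).
    """
    needed = frame_bytes(width, height, channels)
    manip.initialConfig.setResize(width, height)
    manip.setMaxOutputFrameSize(needed)
    return needed
```

Calling `configure_resize(manip, 600, 600)` while building the pipeline would request 1080000 bytes, just above the 1048576-byte limit shown in the log.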

-

It's raining blobs!

02/25/2022 at 06:16

After starting from scratch a second time, I finally have three blobs. Why three, you might ask? Because I could not figure out which version of TensorFlow Microsoft CameraTraps' MegaDetector was trained with.

There are seven Faster R-CNN transformation config files to choose from. Since I am not sure which version of TensorFlow was used, I am testing each one. The following is from OpenVINO's documentation:

faster_rcnn_support.json for Faster R-CNN topologies from the TF 1.X models zoo trained with TensorFlow* version up to 1.6.X inclusively

faster_rcnn_support_api_v1.7.json for Faster R-CNN topologies trained using the TensorFlow* Object Detection API version 1.7.0 up to 1.9.X inclusively

faster_rcnn_support_api_v1.10.json for Faster R-CNN topologies trained using the TensorFlow* Object Detection API version 1.10.0 up to 1.12.X inclusively

faster_rcnn_support_api_v1.13.json for Faster R-CNN topologies trained using the TensorFlow* Object Detection API version 1.13.X

faster_rcnn_support_api_v1.14.json for Faster R-CNN topologies trained using the TensorFlow* Object Detection API version 1.14.0 up to 1.14.X inclusively

faster_rcnn_support_api_v1.15.json for Faster R-CNN topologies trained using the TensorFlow* Object Detection API version 1.15.0 up to 2.0

faster_rcnn_support_api_v2.0.json for Faster R-CNN topologies trained using the TensorFlow* Object Detection API version 2.0 or higher

I successfully created blobs using faster_rcnn_support.json, faster_rcnn_support_api_v1.7.json, and faster_rcnn_support_api_v1.10.json. The rest were incompatible.
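The version ranges listed above can be turned into a small lookup that picks the transformations file (the one passed to Model Optimizer's `--transformations_config` option) from a known TensorFlow Object Detection API version. This is a hypothetical helper, not part of OpenVINO. It treats the TensorFlow version and the Object Detection API version as interchangeable for the oldest file, and it sends 2.0 itself to the v2.0 file, since the documented "up to 2.0" and "2.0 or higher" ranges overlap at that point.

```python
from typing import Tuple


def _major_minor(version: str) -> Tuple[int, int]:
    """Parse '1.12.0' into (1, 12); a missing minor part counts as 0."""
    parts = version.strip().split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    return major, minor


def faster_rcnn_config(api_version: str) -> str:
    """Pick the Faster R-CNN transformations config for a given API version."""
    major, minor = _major_minor(api_version)
    if major >= 2:
        return "faster_rcnn_support_api_v2.0.json"
    if major != 1:
        raise ValueError(f"unsupported version: {api_version}")
    if minor <= 6:
        return "faster_rcnn_support.json"
    if minor <= 9:
        return "faster_rcnn_support_api_v1.7.json"
    if minor <= 12:
        return "faster_rcnn_support_api_v1.10.json"
    if minor == 13:
        return "faster_rcnn_support_api_v1.13.json"
    if minor == 14:
        return "faster_rcnn_support_api_v1.14.json"
    return "faster_rcnn_support_api_v1.15.json"
```

When the training version cannot be pinned down, as with MegaDetector here, trying each file in turn remains the fallback.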

-

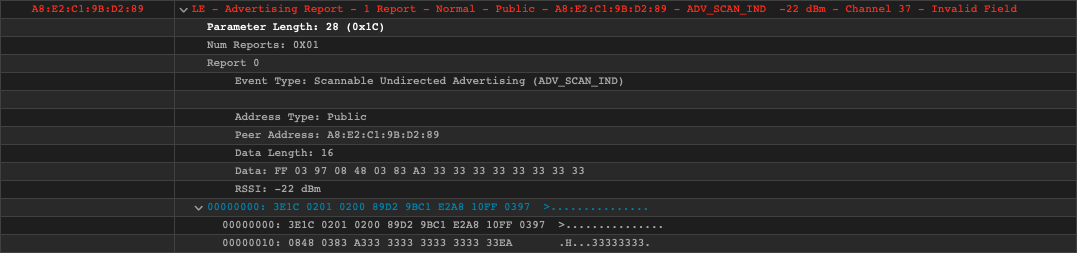

Hub2Hub Communication

02/17/2022 at 05:11

I managed to get two-way communication working between the hub and a computer, using the following resources:

https://github.com/bricklife/LEGO-Hub2Hub-Communication-Hacks

https://hubmodule.readthedocs.io/en/latest/hub2hub/


I am still working on getting Hub2Hub to work with a Raspberry Pi.

Livestock and Pet Geofencing

An autonomous system that monitors animals within a zone.

JT
