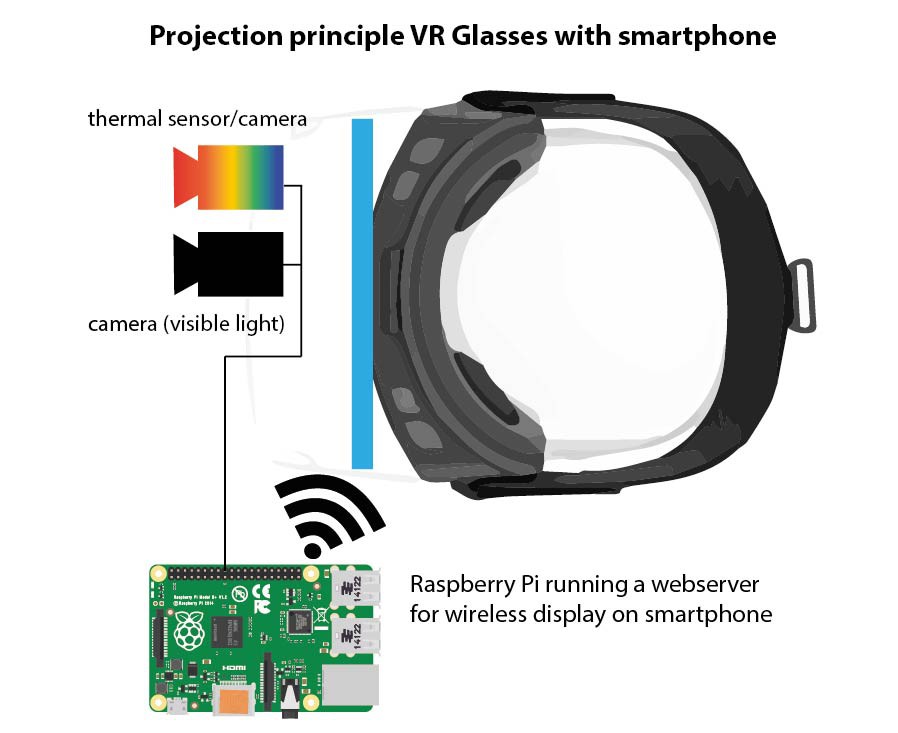

The last prototype we discuss is the VR setup, which uses existing products such as cardboard (or better) goggles combined with a smartphone acting as the display:

This time we can't use optics to blend the thermal image with the visible environment, because the user is completely "shielded" from the environment and sees only the pictures on the smartphone (well, yes, that's the plan with VR setups!) ;-)

Therefore, we need to capture both the visible-light image and the thermal image, combine them in software, and display the result twice on the smartphone screen, once for each eye. As already discussed, to save money and parts we designed the Raspberry Pi case for the AR headset with a "normal" visible-light camera built in, so the same unit can serve the AR headset and the VR one at the same time :-)
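To make the "combine them in software and display the result twice" step a bit more concrete, here is a minimal sketch in plain Python. It is not the code running on our Raspberry Pi: the helper names `blend_frames` and `side_by_side` are made up for illustration, frames are assumed to be grayscale nested lists, and the thermal frame is assumed to be already scaled to the size of the visible one.

```python
# Illustrative sketch only: frames are nested lists of 0-255 grayscale values.
# blend_frames and side_by_side are hypothetical helper names.

def blend_frames(visible, thermal, alpha=0.5):
    """Alpha-blend two equally sized frames; alpha is the thermal weight."""
    same_shape = len(visible) == len(thermal) and all(
        len(v_row) == len(t_row) for v_row, t_row in zip(visible, thermal)
    )
    if not same_shape:
        raise ValueError("frames must have the same dimensions")
    return [
        [round((1 - alpha) * v + alpha * t) for v, t in zip(v_row, t_row)]
        for v_row, t_row in zip(visible, thermal)
    ]


def side_by_side(frame):
    """Duplicate a frame horizontally, one copy per eye."""
    return [row + row for row in frame]


# Tiny 2x2 example frames (assumed values, not real sensor data).
visible = [[0, 100], [200, 50]]
thermal = [[250, 100], [0, 150]]

combined = blend_frames(visible, thermal, alpha=0.5)
stereo = side_by_side(combined)
print(stereo)  # [[125, 100, 125, 100], [100, 100, 100, 100]]
```

In a real pipeline you would most likely do the same arithmetic with an image library on full camera frames; the point here is only the order of operations: blend first, then duplicate for the two eyes.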

As shown in the projection principle, we run a small web server on the Raspberry Pi that simply serves the captured and combined pictures (while at the same time sending the thermal-only image to the TFT screen for the AR headset).

See, no wires! At least not between the Raspberry Pi and the smartphone in this setup :-)
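For readers who want to try something similar, the small web server mentioned above could look roughly like the following sketch, which uses only Python's standard library. It is an assumption about how such a server can be built, not our actual implementation: the names `FrameHandler`, `make_server`, the `/frame.jpg` path, the port, and the 200 ms refresh interval are all hypothetical. The page shows the same picture twice, side by side, and reloads it periodically.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# HTML page showing the same frame twice (left eye / right eye).
# The script re-requests the frame every 200 ms (assumed interval).
PAGE = b"""<!DOCTYPE html>
<html><body style="margin:0;background:#000">
<img class="eye" src="/frame.jpg" style="width:50%;float:left">
<img class="eye" src="/frame.jpg" style="width:50%;float:left">
<script>
setInterval(function () {
  var src = "/frame.jpg?t=" + Date.now();
  document.querySelectorAll(".eye").forEach(function (img) { img.src = src; });
}, 200);
</script>
</body></html>
"""


class FrameHandler(BaseHTTPRequestHandler):
    # Hypothetical hook: the capture loop would store the latest combined
    # JPEG here, e.g. FrameHandler.latest_jpeg = encoded_bytes
    latest_jpeg = b""

    def do_GET(self):
        if self.path == "/":
            self._send(200, "text/html", PAGE)
        elif self.path.startswith("/frame.jpg"):
            self._send(200, "image/jpeg", type(self).latest_jpeg)
        else:
            self._send(404, "text/plain", b"not found")

    def _send(self, status, content_type, body):
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.send_header("Cache-Control", "no-store")
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the console quiet


def make_server(port=8000):
    """Create (but do not start) the server on all interfaces."""
    return ThreadingHTTPServer(("", port), FrameHandler)


# To run it on the Pi: make_server(8000).serve_forever()
```

The smartphone then only needs a browser pointed at the Raspberry Pi's address on the same network, which is what keeps this setup wireless.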

Johann Elias Stoetzer

