Firstly, I want to address the issue of my spelling - I'm dyslexic and while I rely on assistive tech to perform read-aloud and spell check, it is not perfect. So please accept my apologies for poorly worded sentences or mistakes.

The #AssistiveTech solution I am presenting was initially designed for a specific client and started as a large joystick with 8 possible positions, connected to an Arduino that played pre-recorded MP3 messages. A second client learnt of this and wanted one with 4 large push buttons instead of the joystick. Talking to various carers of clients with degenerative muscular disorders, it became apparent that there is a need for this kind of support device, but that it must be easily adapted to each individual's movement capabilities. To accommodate this, the voice control board was made independent of the input sensing board, so that variations can be produced more readily.

Planned Capacitive Touch Version

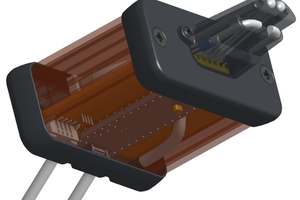

The currently planned design uses capacitive touch sensors that can sense through a 3D printed case customised to the client's requirements. Initial tests were performed using off-the-shelf touch sensors, but their sensitivity varied, so a custom PCB was designed with 14 buttons. The number of buttons was based on the inputs available on the Microchip MTCH108-I/SS sensing chip (7 inputs).

The sensitive areas were made large enough to contact easily, even with limited movement control. To aid finger placement, 3D printed guides were designed in Fusion 360, as shown below. While all 14 positions can play a custom message, the larger positions are intended for common answers such as yes/no.

All messages are recorded to match the user's specific requirements and can be in a voice and language to suit. In some cases, the message is repeated in two languages, one for the user and the other for the carer, which aids both parties.

The device is housed in an enclosure with interchangeable finger guides, allowing easy modification to meet the user's needs.

Currently, the electronics operate from a battery that lasts around 4 days between charges. There is a plan to incorporate wireless charging, which would make charging easier for a mobility-impaired person by avoiding the need to handle small plugs. This will also improve the splash resistance and cleaning of the device.

Messages are recorded in MP3 format, which can be done on many recording devices, including a mobile phone. Messages can be any length, but less than 10 seconds has been found to be ideal. Once recorded, the MP3 files are named numerically to correspond to the button positions and uploaded from a computer, where the processor is seen as a USB flash drive.
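Before copying recordings to the device, it can help to check that every button has a matching file. The sketch below is a hypothetical host-side helper, not part of the project: it assumes files are named with the button number alone (for example `3.mp3` or `03.mp3`) and that buttons are numbered from 1 to 14. The exact naming the voice board expects is not stated here, so treat the pattern as an assumption.

```python
from pathlib import PurePath

BUTTON_COUNT = 14  # from the text: the custom PCB has 14 buttons


def button_for_file(filename):
    """Return the button number encoded in a name like '3.mp3', else None.

    Assumption: the file stem is just the button number, with optional
    leading zeros. The real naming scheme may differ.
    """
    path = PurePath(filename)
    stem = path.stem
    if path.suffix.lower() != ".mp3":
        return None
    if not (stem.isascii() and stem.isdigit()):
        return None
    return int(stem)


def missing_buttons(filenames, button_count=BUTTON_COUNT):
    """List button numbers (1..button_count) that have no recording."""
    found = {button_for_file(name) for name in filenames}
    return [n for n in range(1, button_count + 1) if n not in found]
```

For example, `missing_buttons(["1.mp3", "2.mp3"], button_count=3)` reports that button 3 still needs a recording.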

Because the target user often has poor movement control, the message will only play once per button every 15 seconds, avoiding repeat messages if they hold their finger on the button or press multiple times.
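The lockout described above can be sketched as a small per-button timer. This is a minimal illustration in Python rather than the firmware itself; the class name `ButtonLockout` and its methods are hypothetical, and only the 15 second window comes from the text. The clock is passed in so the logic can be tried without hardware.

```python
import time

LOCKOUT_SECONDS = 15.0  # from the text: one play per button every 15 seconds


class ButtonLockout:
    """Decide whether a button press should trigger its message."""

    def __init__(self, lockout=LOCKOUT_SECONDS, clock=time.monotonic):
        self._lockout = lockout
        self._clock = clock
        self._last_played = {}  # button number -> time of last accepted press

    def should_play(self, button):
        """Return True and start the lockout if the button is not locked out."""
        now = self._clock()
        last = self._last_played.get(button)
        if last is not None and now - last < self._lockout:
            return False  # held or repeated press inside the window: ignore
        self._last_played[button] = now
        return True
```

Each button has its own timer, so a different button can still be played while one is locked out. In this sketch, ignored presses do not extend the window, so a finger held down for longer than 15 seconds would trigger the message again; whether the real device behaves that way is not stated.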

For the prototype, several shortcuts have been taken, including:

- The processor board currently uses discrete inputs for each channel, but is planned to move to SPI

- The voice board is an off-the-shelf unit from DFRobot


In 2022 I was asked by the carer of a person with a muscular disorder, which prevented them from talking and limited their hand control to basic movements, to give them a way of communicating responses to questions.

To accomplish this, a joystick was interfaced to an Arduino to detect up to 8 positions, each of which would trigger a voice module to speak a phrase or word such as 'Yes', 'No', 'Toilet' or 'Drink'.
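One way 8 positions can be read from a joystick is sketched below. It assumes an arcade-style stick with four direction switches (up, down, left, right), where diagonals close two switches at once. The original wiring is not described, so this is only an illustration, written in Python rather than Arduino code, and the track numbering 1 to 8 is hypothetical.

```python
# Assumption: four direction switches, read as booleans (True = pressed).
# Keys are (up, down, left, right); values are hypothetical track numbers.
POSITIONS = {
    (True, False, False, False): 1,   # up
    (True, False, False, True): 2,    # up-right
    (False, False, False, True): 3,   # right
    (False, True, False, True): 4,    # down-right
    (False, True, False, False): 5,   # down
    (False, True, True, False): 6,    # down-left
    (False, False, True, False): 7,   # left
    (True, False, True, False): 8,    # up-left
}


def decode_position(up, down, left, right):
    """Return a track number 1-8, or None when centred or in an invalid state."""
    return POSITIONS.get((bool(up), bool(down), bool(left), bool(right)))
```

The centre position and contradictory states such as up and down together return `None`, so no message would be triggered for them.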

This worked extremely well for a time, but as the person's disorder worsened, new input methods were needed. This led to the development of a solution that can be adapted to multiple trigger requirements.