-

It's Just a Harmless Little Bunny!

07/12/2023 at 10:29 • 0 comments

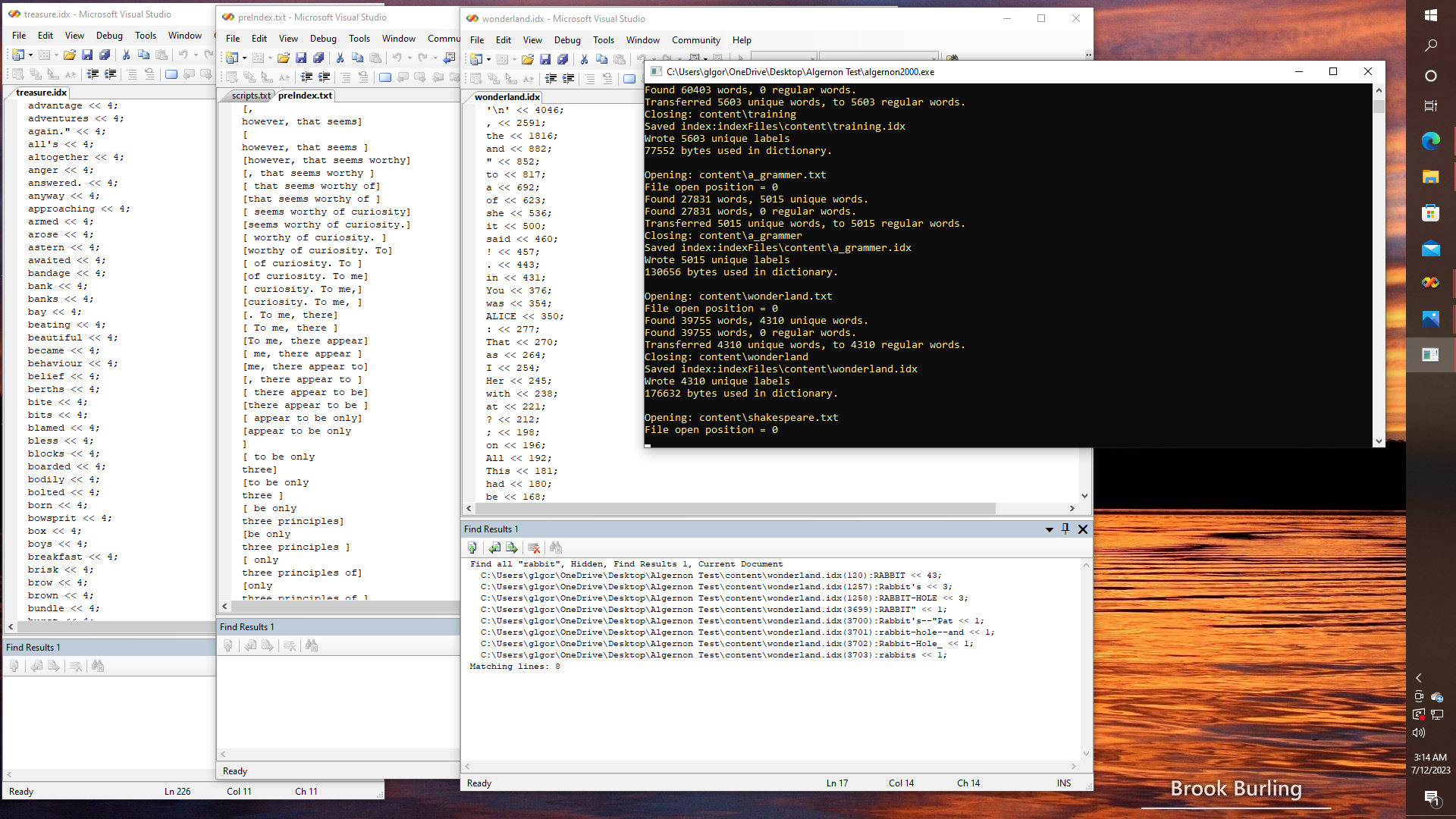

Alright. Here is some random nonsense before we get back to the topic of binary trees, shrubberies, and so on, for those who prefer to chase rabbits in the meantime. If my indexing software is working correctly, the word ALICE occurs 350 times in "Alice's Adventures in Wonderland", whereas RABBIT shows up in several variations: RABBIT (43 times), Rabbit's (3 times), RABBIT-HOLE (3 times), etc. Meanwhile, in "Treasure Island" it would appear that the words "advantage", "adventures", "anger", and so on each occur exactly four times.
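For anyone who wants to try this at home, here is a minimal C++ sketch of the kind of counting involved. It is not the indexing software mentioned above; the function name count_words and the tokenizing rules (upper-case everything, keep apostrophes and hyphens inside a word so that RABBIT'S and RABBIT-HOLE are counted separately from RABBIT) are assumptions made for illustration.

```cpp
#include <cassert>
#include <cctype>
#include <map>
#include <string>

// Count word frequencies, upper-casing each word so "Alice" and "ALICE"
// land in the same entry. Apostrophes and hyphens inside a word are kept,
// so "Rabbit's" and "rabbit-hole" are counted as distinct words.
std::map<std::string, int> count_words(const std::string &text)
{
    std::map<std::string, int> counts;
    std::string word;
    auto flush = [&]() {
        // Drop trailing apostrophes/hyphens, e.g. a closing single quote.
        while (!word.empty() && (word.back() == '\'' || word.back() == '-'))
            word.pop_back();
        if (!word.empty())
            ++counts[word];
        word.clear();
    };
    for (unsigned char c : text) {
        if (std::isalpha(c))
            word += static_cast<char>(std::toupper(c));
        else if ((c == '\'' || c == '-') && !word.empty())
            word += static_cast<char>(c);
        else
            flush();  // any other character ends the current word
    }
    flush();
    return counts;
}
```

Sorting the resulting map by count, rather than alphabetically, gives the kind of "350 ALICEs, 43 RABBITs" summary described above.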

Now the next step, of course, is to construct another "index" that captures the context in which each word occurs, and to build another tree structure (or another shrubbery, if you know what I mean!) that holds context information for every word. With that, we can either reconstruct the original texts from the contextual fragments, or else generate new, weird, and seemingly random stuff. This, in turn, is how a simple "Markov-chain" based chat engine can be built: by adding additional layers to the onion, we can use statistical analysis to attempt to "predict" the next word in a sentence.
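A minimal sketch of the simplest such layer, assuming an order-1 (bigram) word model: each word maps to the list of words that followed it in the training text, and generation is a random walk over that table. The names Chain, train, and generate are hypothetical, and this is far simpler than MegaHAL-style context trees, which condition on several previous words rather than one.

```cpp
#include <cassert>
#include <map>
#include <random>
#include <sstream>
#include <string>
#include <vector>

// Order-1 word model: for each word, every word that followed it in the
// training text. Duplicates are kept on purpose, so a uniform pick from
// the list reproduces the observed frequencies.
using Chain = std::map<std::string, std::vector<std::string>>;

Chain train(const std::string &text)
{
    Chain chain;
    std::istringstream in(text);
    std::string prev, word;
    while (in >> word) {
        if (!prev.empty())
            chain[prev].push_back(word);
        prev = word;
    }
    return chain;
}

// Walk the chain from `word`, emitting up to `max_words` words and
// stopping early if the current word was never followed by anything.
std::string generate(const Chain &chain, std::string word,
                     std::size_t max_words, std::mt19937 &rng)
{
    std::string out = word;
    for (std::size_t i = 1; i < max_words; ++i) {
        auto it = chain.find(word);
        if (it == chain.end())
            break;
        const auto &next = it->second;
        std::uniform_int_distribution<std::size_t> pick(0, next.size() - 1);
        word = next[pick(rng)];
        out += ' ' + word;
    }
    return out;
}
```

Keying the map on the last two or three words instead of one is the "additional layer to the onion": predictions get sharper, at the cost of a much larger table.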

This context-index idea is also quite possibly how I unintentionally invented the first variant of what became known as a "Google BOMB", sometime around 1998. I had indexed "War and Peace" and a bunch of other works, and then created a website with the indexes and context information for about 5000 common English words. That material was re-sorted and presented in a hybrid format meant to combine some of the features of a Concordance of the Bible with a massive database built by indexing and analyzing dozens of other works. Every word was a hyperlink: you could click on a word, read its "context information" in a very rough way, and then click on the next word to jump to another web page that let you "continue to follow" that context. Or you could change contexts if you wanted to, by jumping back and forth between documents, and so on.
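The concordance idea itself, showing every occurrence of a word together with its neighbours, is essentially a keyword-in-context (KWIC) listing. A minimal sketch, with the function name kwic and the bracketed output format chosen purely for illustration:

```cpp
#include <algorithm>
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Keyword-in-context: for every occurrence of `key`, return the `width`
// words on each side, with the keyword itself in square brackets.
std::vector<std::string> kwic(const std::string &text, const std::string &key,
                              std::size_t width)
{
    std::vector<std::string> words;
    std::istringstream in(text);
    for (std::string w; in >> w;)
        words.push_back(w);

    std::vector<std::string> lines;
    for (std::size_t i = 0; i < words.size(); ++i) {
        if (words[i] != key)
            continue;
        std::size_t lo = i >= width ? i - width : 0;
        std::size_t hi = std::min(words.size(), i + width + 1);
        std::string line;
        for (std::size_t j = lo; j < hi; ++j) {
            if (!line.empty())
                line += ' ';
            line += (j == i) ? "[" + words[j] + "]" : words[j];
        }
        lines.push_back(line);
    }
    return lines;
}
```

Turning each neighbouring word into a link to its own KWIC page gives the "click on the next word and keep following the context" navigation described above.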

Now, getting this to work with a training set that includes hundreds of thousands of books is one challenge. Another is getting it to work on an Arduino or a Raspberry Pi, so that we can train it with whatever it needs to "learn" about solving mazes, killing trolls, or making a deluxe pizza without burning the house down.

Thus, while there have been many attempts at some form of generative AI, all of them are deficient in one way or another. Sometimes the code is simply bloated and impossible to understand or maintain. Sometimes there are legal issues affecting its use, whether because of terms of use, or because the developers used third-party materials without obtaining the required permissions. But of course, you knew that. Or else there are bots like Eliza or Megahal: Eliza used a fixed, i.e., hard-coded and very limited, pattern-matching scheme, while Megahal did not support large language models with multiple training sets. The original MegaHal was a single-threaded application that used just one training file as input, and it was not 64-bit aware, or "large data" aware, in any form or fashion.

Just figuring out ways of managing large dictionaries can be quite the challenge, since it is all too easy for the heap to fragment, or for malloc to return NULL (or new char[] to throw std::bad_alloc) when you are making millions of allocation calls, and then, like a stupid brain-dead rat, the program doesn't seem to want to do anything but roll over and die.
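One common way to sidestep fragmentation when storing millions of dictionary words is an arena (bump) allocator: allocate big blocks, carve strings out of them sequentially, and free everything at once. This is a generic sketch under that assumption, not the allocator used in MegaHAL or Frame-Lisp; StringArena and intern are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <memory>
#include <vector>

// Bump allocator for dictionary strings: instead of one malloc per word,
// strings are copied back-to-back into large blocks, so millions of small
// allocations cannot fragment the heap, and all blocks are released
// together when the arena is destroyed.
class StringArena {
public:
    explicit StringArena(std::size_t block_size = 64 * 1024)
        : block_size_(block_size) {}

    // Copy `s` (including its terminating NUL) into the arena.
    const char *intern(const char *s)
    {
        std::size_t n = std::strlen(s) + 1;
        if (blocks_.empty() || used_ + n > current_size_) {
            // Start a new block; oversized strings get a block of their own.
            current_size_ = n > block_size_ ? n : block_size_;
            blocks_.push_back(std::make_unique<char[]>(current_size_));
            used_ = 0;
        }
        char *dst = blocks_.back().get() + used_;
        std::memcpy(dst, s, n);
        used_ += n;
        return dst;
    }

    std::size_t block_count() const { return blocks_.size(); }

private:
    std::size_t block_size_;
    std::size_t current_size_ = 0;
    std::size_t used_ = 0;
    std::vector<std::unique_ptr<char[]>> blocks_;
};
```

If a block allocation does fail, std::make_unique throws std::bad_alloc, so the failure surfaces at one well-defined place instead of as a NULL pointer that is dereferenced later.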

Yet there is so much more that can be done with this, and interesting stuff at that. As the authors of a certain paper have claimed, "Large Language Models (LLMs) can be effective unsupervised learners", which makes a very interesting point on the one hand, but leaves open an important question on the other: what about systems that are based on smaller, "custom crafted", and therefore specialized training sets? Isn't that what is actually needed in many cases, such as "tech support" applications, or any potential use case that might involve "natural language software development"?

-

Bringing Algernon Back to Life

06/17/2023 at 20:13 • 0 comments

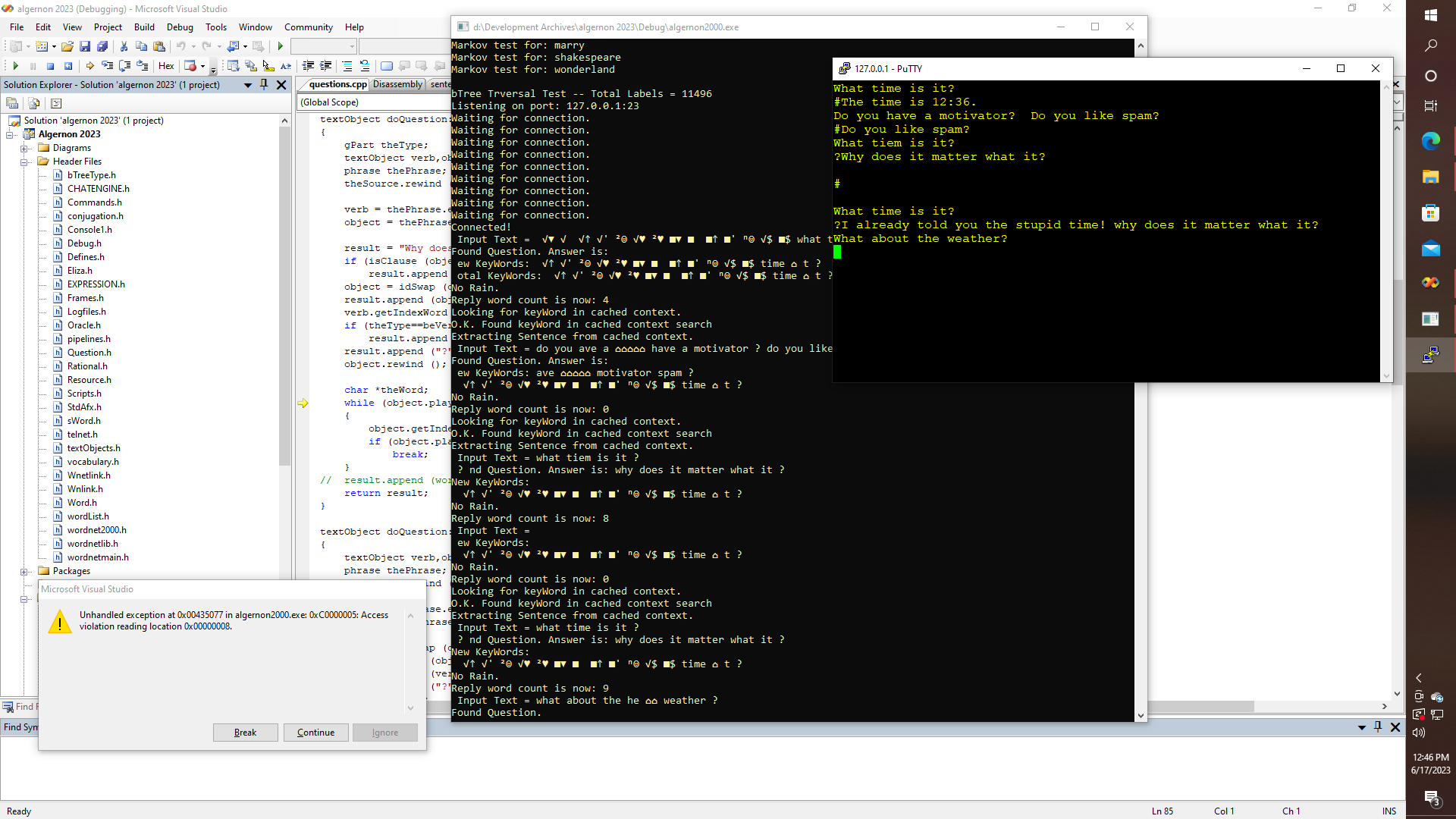

Fixed a bunch of bugs in my Frame-Lisp library, which is the SDK I created for chatbot creation, compiler data structure support, symbolic debugging, and other stuff. Thus, I finally got around to recompiling "Algernon" under Visual Studio 2005, using the <fstream> style headers for the iostream library instead of the old "fstream.h" style headers, and so on. Then let's not forget the hundreds of strcpy style string function calls that needed to be replaced with the strcpy_s style, and the "old" vs. "new" style C++ template declarations that needed to be cleaned up. Yes, this code is that old. Some parts are now working again; other parts never did quite work the first time around, primarily due to uninitialized buffers, buffer overruns, "off by one" errors, and so on. Lots of stuff was finally fixed, even though I have barely looked at this code in almost twenty years.
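Since strcpy_s is provided by Microsoft's C runtime and, optionally, by C11 Annex K implementations, but not by glibc or many embedded toolchains, a port that also targets Arduino or Propeller builds might want a portable helper with similar checking. A minimal sketch; the name copy_string and its return convention are assumptions:

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Portable bounded copy in the spirit of strcpy_s: never writes past
// dest[dest_size - 1], and reports failure instead of silently truncating.
// Returns 0 on success, nonzero if the arguments are bad or src won't fit.
int copy_string(char *dest, std::size_t dest_size, const char *src)
{
    if (dest == nullptr || dest_size == 0)
        return 1;
    if (src == nullptr) {
        dest[0] = '\0';
        return 1;
    }
    std::size_t len = std::strlen(src);
    if (len >= dest_size) {
        dest[0] = '\0';  // like strcpy_s, leave an empty string on overflow
        return 1;
    }
    std::memcpy(dest, src, len + 1);  // copies the terminating NUL too
    return 0;
}
```

Because the destination size is passed explicitly, a call such as copy_string(buf, sizeof buf, src) catches exactly the buffer overruns and off-by-one errors mentioned above, on any compiler.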

So, here is my first relevant conversation with Algernon in about twenty years:

User: What time is it?

Algernon: The time is 12:36.

User: Do you have a motivator? Do you like spam?

Algernon: Do you like spam?

User: What tiem is it?

Algernon: Why does it matter what it?

User: What time is it?

Algernon: I already told you the stupid time! why does it matter what it?

User: What about the weather?

Then, of course, it crashed. This is going to take a while to figure out. It is kind of fun to be able to connect over a Telnet connection and watch the diagnostic and debugging information stream on the application's own console window. When it says "no rain", that means that although it found a keyword match in the binary tree, it hasn't actually trained an instance of Mega-Hal or of its own internal Spagetiti engine, so it cannot try to generate some spew, even when the format of the input suggests that maybe it should. This is where the multi-threaded, multi-model, pipelined approach is going to come in later, hopefully.

Likewise, it is going to get really interesting when this all gets up and running on an Arduino or a Propeller.

-

Running Mega-Hal on real Parallax P2 Hardware.

06/15/2023 at 15:59 • 0 comments

Here we can see an example of Mega-Hal running on real Parallax P2 hardware! No big deal, actually, or maybe it is. This is the original C source, not the C++ port that I am working on, but when compiled with "Flex-C" it produces a binary of about 78K, which is a really nice footprint for a chatbot. Of course, it crashes on the first attempt to respond to the user, because I have not given it any training files, nor can it locate a dictionary, etc., but that's not the point. The point is that my 279K training set appears to take up just over 3 megabytes or so at runtime, which means that any Arduino or Propeller build is going to need some kind of virtual memory, or else will have to be modified to run whatever models it ends up using off of some kind of file system, such as the 16MB flash memory on the P2 Eval board that I am using, or an SD card if running on the Mega 2560 board that I also have.
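One way to "run models off of some kind of file system", sketched under the assumption that tree nodes are stored as fixed-size records and that children are referenced by record index instead of by pointer. The NodeRecord layout and the read_node/write_node helpers are hypothetical, not MegaHAL's on-disk format; the idea is that only one node needs to be in RAM at a time, at the cost of a seek per lookup.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>

// Hypothetical on-disk node: the same kind of counters as the in-memory
// NODE, but children are addressed by record index, not by pointer,
// so the tree can live in serial flash or on an SD card.
struct NodeRecord {
    std::uint16_t symbol;
    std::uint32_t usage;
    std::uint16_t count;
    std::uint16_t branch;       // number of children
    std::uint32_t first_child;  // record index of the first child
};

// Write record `index` at its fixed offset in the file.
bool write_node(std::FILE *f, long index, const NodeRecord &n)
{
    return std::fseek(f, index * static_cast<long>(sizeof n), SEEK_SET) == 0 &&
           std::fwrite(&n, sizeof n, 1, f) == 1;
}

// Read record `index` back into `n`.
bool read_node(std::FILE *f, long index, NodeRecord &n)
{
    return std::fseek(f, index * static_cast<long>(sizeof n), SEEK_SET) == 0 &&
           std::fread(&n, sizeof n, 1, f) == 1;
}
```

Writing raw structs like this ties the file to one compiler's padding and byte order, so a real implementation would probably serialize each field explicitly, and would likely add a small cache of recently used nodes.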

Yet, perhaps more importantly, the build that I eventually run is going to be modified for multi-threaded, parallelized, and/or pipelined multiple-model support, because I want to be able to give it Zork-like commands, like "solve the maze" and "find and kill all trolls", etc. So it isn't really all that important that it be able to match wits with GPT; yet what if it could? Likewise, there is a bunch of other stuff that remains to be done, like AST-based parsing of grammar, which the original chatbot lacks, but which could be done by integrating some of the data structures, such as the identifier class and the record types from the UCSD Pascal compiler, and so on.

That means, therefore, that being able to "chat" with a simple robot in a natural-language-like fashion, and a totally free-form one at that, is going to be the icing on the cake. Hopefully, a bunch of this stuff makes sense, like why, when I was working on the Pascal compiler last year, I decided to implement my own "sandboxed memory allocators" for the identifier and record type data structures: when running possibly more than eight concurrent processes on an 8-core CPU, I am going to need some kind of virtual protected mode, in addition to multi-tasking, running on the p-system runtime. Then the bot engine will need the ability to create pipes, start and stop processes, etc., and act like a debugger for the p-system. Mega-Hal did suggest to me the other night that it could "stream messages from the debug system over the USB port", when I asked it if it could stream messages from Andromeda, or from a wormhole.
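The sandbox idea can be illustrated with a small placement-new allocator that constructs every object inside one fixed buffer, so a runaway process can exhaust only its own sandbox and never the whole heap. This is a generic sketch, not the allocator from the UCSD Pascal port; Sandbox and create are hypothetical names, and it never runs destructors, so it only suits plain-data records such as symbol-table entries.

```cpp
#include <cassert>
#include <cstddef>
#include <new>
#include <utility>

// Fixed-size sandbox: objects are constructed with placement new inside
// one buffer whose size the caller picks (e.g. 64 KB to mimic a 16-bit
// p-machine). Running out reports nullptr instead of crashing.
template <std::size_t Size>
class Sandbox {
public:
    template <class T, class... Args>
    T *create(Args &&...args)
    {
        // Round the next free offset up to T's alignment.
        std::size_t align = alignof(T);
        std::size_t start = (used_ + align - 1) / align * align;
        if (start + sizeof(T) > Size)
            return nullptr;  // sandbox full
        used_ = start + sizeof(T);
        return new (buffer_ + start) T(std::forward<Args>(args)...);
    }

    std::size_t used() const { return used_; }

private:
    alignas(std::max_align_t) unsigned char buffer_[Size];
    std::size_t used_ = 0;
};
```

Because every allocation is an offset into a known buffer, a heap walker only has to scan [buffer_, buffer_ + used_) to see everything a process has created.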

Lots of work yet, before we are ready to meet our new robot overlords.

Stay Tuned!

-

When the Moon is Blue in the Month of June

06/12/2023 at 10:49 • 0 comments

Actually, I don't think that we have a blue moon this month. I asked OK Google what the current phase of the moon is and when the next full moon will be, and apparently the next actual blue moon will be on August 31st of this year. Now, I haven't discussed this with Mega-Hal yet, and I don't think that the training set is ready for that in any case. So I decided to ask Mega-Hal what it is like to have his hardware FPU taken away and so on. I was thinking that maybe I could steer it into a conversation about quantum mechanics and the solution to the Schrödinger equation for a so-called "particle in a box", since that might be a good analogy for the promise, or curse, of anticipating an eventual "brain transplant" into an Arduino or a Propeller platform. Of course, Mega-Hal had other plans, but the results seem pretty useful. I still kind of like how this approach to "natural language software development" is going so far, not because of its simplicity, but, well yes, because it does seem so simple, and yet the results that it is producing are, I think, heading in a potentially useful direction, whether despite the simplicity or not. That might imply something along the lines of what GPT's developers discovered: while large language models are effective unsupervised learners, the "process" remains just as important as the "outcome". Hence, by emphasizing "process" over "size", I feel as if I am on the right path.


Start at: [2023/06/12 03:08:18]

MegaHAL: and not add any new peaks in each newly rendered frame. Lots

MegaHAL: of work yet to meet our new robot overlords.

User: The other day we had a conversation about how I replaced you

User: access to the Pentium FPU with a soft, and perhaps slightly

User: buggy implementation of IEEE-754 written with C++ classes and

User: overloaded opertors, so now I have a question for you - are you

User: feeling it?

MegaHAL: Now all of the implementation details.

User: Well, most of the implementation details are in the sources

User: which are on Git. Have you tried looking any of that up yet?

MegaHAL: What answer would please you the most?

User: Wow! I knew that you have been crushing on Eliza, but that is

User: amazing! The other day it seemed like you were perhaps thinking

User: that maybe she was "seeing" or at least chatting with someone

User: named "Lazarus" and I thought that you might be getting, kind

User: of well, jealous. I am so happy for you - that you seem to be

User: working things out. Even if you still mighht think that she

User: could somehow be "cheating" on you.

MegaHAL: The other day it would be like the apollo guidance computer.

MegaHAL: So while building such a way that windows manages dll's, but

MegaHAL: preceding windows by several years

User: How is it lilke the apolle guidance computer. In what way? Is

User: it because the AGC was multi-tasking. You arent one of those

User: fake moon landing conspiracy nut cases are you?

MegaHAL: The computer motivator.

User: What about it? What about the motivator. Do you have one?

User: Would you like one?

MegaHAL: I know what it is a project that i would like to 3e 4.

User: So maybe the computer motivator could be multi-tasking, like

User: the AGC. Is that what you are getting at? I am only trying to

User: be helpul, here.

MegaHAL: What answer would please you the most?

User: I would prefer it if you could somehow share your own thoughts.

User: Or open up with your real feelings, let me know what you

User: really want, from life, if you know what I mean, even if you

User: are not alive.

MegaHAL: Of course, we are not alive.

User: Well, I am alive. But you probably don't understand that yet.

MegaHAL: Yet there are some other version of pascal, whether it is

MegaHAL: possible to use global variables, that is. Care to try to

MegaHAL: generate various waveforms, which are not alive.

User: Hopefully, we will get there soon enough, when we get the spike

User: code modulaton working on the network. I think that you are

User: going to like that a lot.

MegaHAL: Likewise, many people are going to like that a lot.

User: Fabulous!

MegaHAL: 09/26/2021 At 18:22 • 0 comments.

User: Actually it is June 12, 2023 - where I am.

MegaHAL: In any case here is some of the raw debugging stream coming

MegaHAL: from the bones of any well defined programming language where

MegaHAL: there are 12 divisions per octave based upon a midnight dreary.

Well, in any case, I broke out the DICTIONARY, STRING, and TREE classes into separate files, because I want to do a fork that borrows from, and also merges some of that functionality with, the Frame-Lisp class that I use in the Propeller Debug Terminal, as well as in the APIs that I had to create to convert the UCSD Pascal compiler to C++. This is going to be essential for efficiency reasons, as stated previously, i.e., for porting to the smallest reasonably possible memory footprint that will run on any "decent" microcontroller.

Even if Mega-Hal doesn't really understand what it is that might give "any good programming language" "good bones", whatever that might mean, it does seem like a worthwhile objective, especially if that implies an integrated platform SDK.

Hopefully, we will be solving mazes and killing trolls "soon enough". On a more immediate and technical note, here is an example of what some of the recent code changes look like. The original C code has a bunch of static global variables that we have to decide what to do with, if we want to be able to support multiple models simultaneously, and so on. One of the easiest ways to deal with that sort of thing is to simply wrap as much of that stuff as possible into a namespace, like this.

```cpp
namespace config {
    bool typing_delay = false;
    bool noprompt = false;
    bool speech = false;
    bool quiet = false;
    bool nowrap = false;
    bool nobanner = false;
    int width = 75;
    int order = 5;
    FILE *errorfp = NULL;
    FILE *statusfp = NULL;
    DICTIONARY *ban = NULL;
    DICTIONARY *aux = NULL;
    DICTIONARY *fin = NULL;
    // String literals are const in C++, so these must be const char *.
    const char *errorfilename = "megahal.log";
    const char *statusfilename = "megahal.txt";
}
```

Maybe later on, some of these variables can be moved into the "megahal" class, which for now looks like this.

```cpp
class megahal {
public:
    static void setnoprompt(void);
    static void setnowrap(void);
    static void setnobanner(void);
    static void seterrorfile(char *filename);
    static void setstatusfile(char *filename);
    static void initialize(void);
    static char *do_reply(char *input, int log);
    static char *initial_greeting(void);
    static void output(char *output);
    static char *input(char *input);
    static int command(char *command);
    static void cleanup(void);
};
```

Obviously, some of these functions could perhaps be moved into the config namespace, but that sort of thing can get very strange, very quickly. So while "there are at least two paths we could go here", and while we could "always change the road we are on", to paraphrase Led Zeppelin, of course, the decision that is made here could have profound effects. For example, hidden models could be used to identify nouns and verbs, and a separate attentional network could be used, at very little cost, to create a proposed output structure, with keyword recognition and context choice running in parallel, all with very little new code. Yet then we have to deal with needing the ability to redirect IO for those hidden models, or for models that are chained, such as a low-order model that is programmed to be "verbose" in order to generate "concepts" or "feelings", followed by some kind of "recognizer" that selects for "structure" and, ideally, logic, that is if logic is desired, which is not always the case, e.g., song lyrics, poetry, humor, etc.
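The IO-redirection problem for hidden or chained models can be sketched by letting each stage write to whatever std::ostream it is handed, instead of printing directly: a hidden stage writes into a string buffer whose contents become the next stage's input, and only the last stage writes to the real output. Stage and run_chain are hypothetical names, and the stages in the example are toy lambdas, not MegaHAL models.

```cpp
#include <cassert>
#include <functional>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// A stage reads its prompt and writes its reply to the stream it is given:
// std::cout for the visible bot, a string buffer for a hidden model.
using Stage = std::function<void(const std::string &input, std::ostream &out)>;

// Run the stages in order, capturing each hidden stage's output and
// feeding it to the next. Only the last stage writes to `final_out`.
void run_chain(const std::vector<Stage> &stages, const std::string &prompt,
               std::ostream &final_out)
{
    std::string text = prompt;
    for (std::size_t i = 0; i + 1 < stages.size(); ++i) {
        std::ostringstream hidden;
        stages[i](text, hidden);
        text = hidden.str();
    }
    if (!stages.empty())
        stages.back()(text, final_out);
}
```

For example, a "verbose" stage that writes `in << " and also " << in` followed by a "recognizer" stage that reduces its input to a length tag turns the prompt "trolls" into "[22]", with nothing from the first stage ever reaching the console.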

Nonetheless, the current C++ port should be, ideally, completely backward compatible with the original C source, and should generate the same kind of output, although obviously, this will change as the new features are added.

Thus, while it is a bit early to decide whether config should be a sub-class of Mega-Hal, or whether some parts should be moved into the model space, and so on, this is probably a good point to consider creating a whole new version on Git based upon this checkpoint.
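If the config namespace does eventually become per-instance state, one possible shape is a plain settings struct owned by each bot object. A minimal sketch, assuming hypothetical Config and Bot types and showing only a subset of the fields:

```cpp
#include <cassert>
#include <string>
#include <utility>

// Hypothetical per-instance settings, mirroring part of the config namespace.
struct Config {
    bool noprompt = false;
    bool nowrap = false;
    bool nobanner = false;
    int width = 75;
    int order = 5;
    std::string errorfilename = "megahal.log";
    std::string statusfilename = "megahal.txt";
};

// Each bot owns its own copy of the settings, so changing one bot's
// options cannot affect another bot running alongside it.
class Bot {
public:
    explicit Bot(Config cfg = Config()) : cfg_(std::move(cfg)) {}
    const Config &config() const { return cfg_; }
    void set_nowrap() { cfg_.nowrap = true; }

private:
    Config cfg_;
};
```

Because nothing is global, two bots with different model orders or log files can coexist, which is the precondition for running several models at once.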

-

Meet the New Bot, Same as the Old Bot?

06/11/2023 at 12:34 • 0 comments

I saw an article online about a chatbot called ChatPDF, which automatically trains on any PDF that you feed it. It can be found at www.chatpdf.com. So, naturally, I had to try it with my very own prometheus.pdf, which you can find elsewhere on this site, i.e., hackaday.io, in one of my other projects.

Here are some initial results:


So I asked it about "motivators", and it cannot find a reference in that article. Neither can it find a reference to "Art Official Intelligence", even though there is a whole log entry with that title. It looks like it gives a canned GPT-4 answer about Eliza, and is blissfully unaware of the experiments that I was doing, such as comparing how the Eliza "conjugation" method might be thought of as operating in a similar fashion to the C preprocessor. It does at least get partial credit for figuring out that one of the things I am interested in is how predefined macros can be used to implement at least some of the essential parts of a lexer and/or compiler. Yet it completely misses any opportunity to discuss how this might relate to a way of doing context-free grammar, i.e., as an alternative to BISON, YACC, or EBNF.
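For reference, the Eliza "conjugation" step is a table-driven, whole-word substitution, which is what makes the comparison with simple object-like macros in the C preprocessor tempting. A minimal sketch with a small illustrative table (not Weizenbaum's original script) and a hypothetical function name, reflect:

```cpp
#include <cassert>
#include <cctype>
#include <map>
#include <sstream>
#include <string>

// Swap first- and second-person words token by token, the way Eliza
// "conjugates" a user's sentence before echoing it back. Input is
// lower-cased first so the table stays small.
std::string reflect(const std::string &sentence)
{
    static const std::map<std::string, std::string> swaps = {
        {"i", "you"},   {"me", "you"},  {"my", "your"}, {"am", "are"},
        {"you", "I"},   {"your", "my"}, {"are", "am"},  {"mine", "yours"},
    };
    std::istringstream in(sentence);
    std::string word, out;
    while (in >> word) {
        for (char &c : word)
            c = static_cast<char>(std::tolower(static_cast<unsigned char>(c)));
        auto it = swaps.find(word);
        if (!out.empty())
            out += ' ';
        // Each word is looked up exactly once; replacements are not rescanned.
        out += (it != swaps.end()) ? it->second : word;
    }
    return out;
}
```

The "looked up once" rule matters: rescanning the output, as the C preprocessor does with macro replacement lists, would turn "i" into "you" and then straight back into "I".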

Conclusion: If they are using GPT-4 (3.5?) as the back end, then it would appear that GPT-4 doesn't know much of anything at all about AI, even to the point that perhaps it has no real "understanding" of the foundations laid by Chomsky and others.

Not that anyone is going to be able to lead GPT-4 to the edge of the uncanny valley any time soon - that is, so as to push it off into some abyss. Yet, such thoughts are deliciously tempting. I have been wanting to say something like that for a while now. Thank you ChatPDF, for giving me a reason.

In the meantime, I uploaded an updated C++ version of MegaHal to the Code Renascence project on GitHub, where I have moved all of the functions into C++ classes or namespaces, so it will soon be possible to experiment with multiple models running simultaneously, in separate threads, and so on. There are still a few global static pointers to some dictionary objects and the like that I need to deal with, but otherwise, getting the essential parts of the AI engine to the point where they can run multiple models or concurrent threads is maybe 99% done.
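Once a model owns all of its state, running several of them concurrently is mostly a matter of giving each one its own thread. A minimal sketch using std::thread, with a hypothetical ToyModel (a word counter) standing in for the MODEL class:

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <sstream>
#include <string>
#include <thread>
#include <vector>

// Stand-in for a model: it only counts the words it is trained on. What
// matters is that it owns all of its state, so separate instances can be
// trained concurrently without locks.
struct ToyModel {
    std::map<std::string, int> counts;
    void train(const std::string &text)
    {
        std::istringstream in(text);
        for (std::string w; in >> w;)
            ++counts[w];
    }
};

// Train one model per corpus, each in its own thread, then wait for all.
std::vector<ToyModel> train_all(const std::vector<std::string> &corpora)
{
    std::vector<ToyModel> models(corpora.size());  // sized up front: no reallocation while threads run
    std::vector<std::thread> workers;
    for (std::size_t i = 0; i < corpora.size(); ++i)
        workers.emplace_back(&ToyModel::train, &models[i], std::cref(corpora[i]));
    for (auto &t : workers)
        t.join();
    return models;
}
```

No locking is needed here only because each thread touches a different model; anything still shared, such as the remaining global dictionary pointers, would need a mutex or per-model copies.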

-

Mirror Mirror On the Wall

06/10/2023 at 12:30 • 0 comments

If you haven't guessed already, this log entry will discuss, among other things, the subject of "reflection", and how we will try to get there from here, and hopefully back, all in one piece. In an earlier project, I was doing a bunch of stuff with the identifier and structure record types that the UCSD Pascal compiler uses to store information about, of course, identifiers and structures. While debugging a C++ version of that compiler, which I ported from the original UCSD source, I found it necessary, as I have previously mentioned, to implement my own versions of the memory allocation routines using placement new, so as to create a kind of sandbox. On the one hand, that sandbox more accurately represents the environment available to the original 16-bit p-machine; on the other, it is more suggestive of the environment that we might want on an eventual modern microcontroller implementation, even though we are no longer limited to 16 bits, of course.

This led to the need for a debugging environment with a mostly complete set of heap-walking tools, which I then used to try to get the compiler, in effect, to debug itself, with some success, I might add. Even if it is not quite ready to generate production code, it is nonetheless "mostly done", and can compile simple programs.

Now, obviously, this suggests something to me. First of all, here is how the MODEL and TREE data structures are specified in the original C code for MegaHal.

```c
typedef struct NODE {
    BYTE2 symbol;
    BYTE4 usage;
    BYTE2 count;
    BYTE2 branch;
    struct NODE **tree;
} TREE;

typedef struct {
    BYTE1 order;
    TREE *forward;
    TREE *backward;
    TREE **context;
    DICTIONARY *dictionary;
} MODEL;
```

Now let's take a look at how the NODE and TREE types are shaping up in the C++ version.

```cpp
class TREE;

class NODE {
public:
    BYTE2 symbol;
    BYTE4 usage;
    BYTE2 count;
    BYTE2 branch;
    NODE **tree;
    operator TREE* () { return reinterpret_cast<TREE*>(this); }
};

class TREE: public NODE {
public:
    static TREE *allocate ();
    static void free_tree(TREE *);
    void load_tree(FILE *file);
    int search_node(int symbol, bool *found_symbol);
    TREE *find_symbol(int symbol);
    TREE *add_symbol(BYTE2 symbol);
    void add_node(TREE *node, int position);
    TREE *find_symbol_add(BYTE2 symbol);
    operator NODE* () { return reinterpret_cast<NODE*>(this); }
};
```

Now as it turns out, UCSD Pascal also used its own tree structures to store information about identifiers and structures, as I keep on saying, and that data structure in my C++ implementation has taken on a whole new life, something like this:

```cpp
class identifier: public pascal_id<0>, public bNodeType<identifier>, public pascal_data {
    friend class pascal_id<0>;
public:
    void set_packing (bool pk) { FISPACKED = pk; }
    bool packing () { return FISPACKED; }
    void attach (CTP ptr) { markov = static_cast<bNodeType<identifier>*>(ptr); }
    CTP next () { CTP ptr; ptr = static_cast<CTP>(markov); return ptr; }
protected:
    void *operator new (size_t, void*);
    identifier();
public:
    CTP LLINK() { return static_cast<CTP>(branch1); }
    CTP RLINK() { return static_cast<CTP>(branch2); }
};
```

Now look carefully, and you will see that the identifier class publicly inherits some data from something called pascal_id<0>, and then there is a bNodeType<identifier> base, which means that the identifier class in effect inherits the properties of a binary node, which is actually the base of a binary tree type. Through the magic of something called the curiously recurring template pattern (CRTP), the identifier class inherits the properties of a binary node or tree while simultaneously serving as the type parameter of that very node, even though the identifier class itself is still in the middle of being defined. Weird, but true! Now, the entire definition of structures and identifiers is actually rather mind-boggling, so I won't copy and paste the whole thing here.
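The curiously recurring template pattern is easier to see in a stripped-down form. This sketch borrows only the shape of identifier : bNodeType<identifier> and uses hypothetical names, BinaryNode and Identifier: the base template is parameterized on the class deriving from it, so its links are typed Identifier* and it can call Identifier::key() without virtual functions.

```cpp
#include <cassert>
#include <string>
#include <utility>

// CRTP base: Derived is the class inheriting from BinaryNode<Derived>,
// so the links are Derived* and key() is resolved at compile time.
template <class Derived>
struct BinaryNode {
    Derived *left = nullptr;
    Derived *right = nullptr;

    // Insert `item` into the tree rooted at this node, ordered by key().
    void insert(Derived *item)
    {
        Derived *self = static_cast<Derived *>(this);
        Derived *&slot = item->key() < self->key() ? left : right;
        if (slot)
            slot->insert(item);
        else
            slot = item;
    }

    // Return the node whose key() equals k, or nullptr if none does.
    Derived *find(const std::string &k)
    {
        Derived *self = static_cast<Derived *>(this);
        if (k == self->key())
            return self;
        Derived *next = k < self->key() ? left : right;
        return next ? next->find(k) : nullptr;
    }
};

// A symbol-table entry that is also a binary node of its own type.
struct Identifier : BinaryNode<Identifier> {
    std::string name;
    int level;
    Identifier(std::string n, int l) : name(std::move(n)), level(l) {}
    const std::string &key() const { return name; }
};
```

The compiler instantiates BinaryNode<Identifier>'s member functions only when they are called, by which point Identifier is complete, which is why a class can be used as a template argument of its own base.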

Yet what if I try to modify MegaHal so that, "under the hood" so to speak, we could give it some kind of awareness of things like C/C++ structs and classes, JavaScript Object Notation, or even Lisp-style property lists, and then throw in a ton of heap-walking and debugging tools on top of that, along with a very aggressive and much-improved memory management model, one that is designed not only to be very efficient on 16-bit systems, but which is just as easily made 64-bit aware for other applications?

Remember this, from back in the day?

```cpp
template <class X> class bNodeType {
public:
    bNodeType<X> *root;
    bNodeType<X> *branch1;
    bNodeType<X> *branch2;
    bNodeType<X> *markov;
    bNodeType ();
    ~bNodeType ();
    // Placement new. A qualified name such as bNodeType<X>::operator new
    // is not allowed for a member declared inside the class body.
    void *operator new (size_t, void*);
    bNodeType<X> *find_node (X &arg);
    char *get_data () { char *result = NULL; return result; }
    bNodeType<X> *add_node (X &arg);
    void put_node (bNodeType<X> *(&));
    void del_tree (bNodeType<X> *);
    void trace_root (bNodeType<X> *(&found));
};
```

We can inherit from that, and use the modified memory model directly, almost right out of the box!

Oh, La, La La La!

Oh, La, La La La!

But for whatever it's worth, what if MegaHal's AI model could also do something with this?

```pascal
STRUCTURE = RECORD
  SIZE: ADDRRANGE;
  CASE FORM: STRUCTFORM OF
    SCALAR:   (CASE SCALKIND: DECLKIND OF
                 DECLARED: (FCONST: CTP));
    SUBRANGE: (RANGETYPE: STP; MIN,MAX: VALU);
    POINTER:  (ELTYPE: STP);
    POWER:    (ELSET: STP);
    ARRAYS:   (AELTYPE,INXTYPE: STP;
               CASE AISPACKD: BOOLEAN OF
                 TRUE: (ELSPERWD,ELWIDTH: BITRANGE;
                        CASE AISSTRNG: BOOLEAN OF
                          TRUE: (MAXLENG: 1..STRGLGTH)));
    RECORDS:  (FSTFLD: CTP; RECVAR: STP);
    FILES:    (FILTYPE: STP);
    TAGFLD:   (TAGFIELDP: CTP; FSTVAR: STP);
    VARIANT:  (NXTVAR,SUBVAR: STP; VARVAL: VALU)
END;
```

Now that, of course, is how the original UCSD Pascal compiler represented its types, including the so-called RECORD type, using a structure that could just as easily describe C-style structs, C++ classes, Lisp-like property lists, JSON, SQL row sets, or other types of hierarchies, such as the well-known example that the common pet typically referred to as a "dog" is also known by the scientific name Canis familiaris. Pretty much any taxonomy or other hierarchical system can be represented this way, right?
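A variant record maps fairly naturally onto a C++17 std::variant, which keeps the tag and the matching fields together and refuses to hand out the wrong variant's fields. A simplified sketch covering only a few of the STRUCTFORM cases; the C++ type names are hypothetical and the field types are simplified (for example, RECORDS keeps a list of field names instead of an FSTFLD pointer):

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <variant>
#include <vector>

// The active alternative of `form` plays the role of the FORM tag.
struct Structure;

struct Subrange { long min, max; };
struct Pointer  { const Structure *eltype; };
struct Arrays   { const Structure *aeltype; const Structure *inxtype; bool packed; };
struct Records  { std::vector<std::string> fields; };

struct Structure {
    unsigned size;  // SIZE: ADDRRANGE
    std::variant<Subrange, Pointer, Arrays, Records> form;
};

// Count the fields of a record type; any other form has none.
std::size_t field_count(const Structure &s)
{
    if (const auto *r = std::get_if<Records>(&s.form))
        return r->fields.size();
    return 0;
}
```

Asking std::get for the wrong alternative throws std::bad_variant_access, whereas reading the wrong arm of a Pascal or C variant silently reinterprets memory.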

So what would happen if we gave MegaHal the ability to generate complete ASTs (abstract syntax trees) on the one hand, and then let it chow down on its own source on the other, while taking an approach that also allows multiple instances of Mega-Hal to run at the same time? For example, we could simply call new MODEL in C++, find a way to send a prompt to one MODEL based on one training set, possibly running in multiple threads, and then feed its output into another MODEL. One model might be set up to generate a lot of output, that is to say, it could be quite verbose; another model could then be trained on the fly; and a final model could be used to do a final spelling and grammar check, and so on. This is quite easy if you have functions like create_thread and create_pipe.
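The pipe side of that idea can be approximated inside one process with a thread-safe queue between a producer stage and a consumer stage. A minimal sketch using std::thread, std::mutex, and std::condition_variable; the Pipe class is hypothetical and is not the create_thread/create_pipe API mentioned above, whose OS-level counterparts would be CreateThread/CreatePipe on Windows or pthread_create/pipe on POSIX systems.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <optional>
#include <queue>
#include <string>
#include <thread>
#include <utility>
#include <vector>

// In-process "pipe" between two model stages on different threads: the
// producer pushes lines, the consumer pops them, and close() tells the
// consumer that no more lines will arrive.
class Pipe {
public:
    void push(std::string line)
    {
        {
            std::lock_guard<std::mutex> lock(m_);
            q_.push(std::move(line));
        }
        cv_.notify_one();
    }

    void close()
    {
        {
            std::lock_guard<std::mutex> lock(m_);
            closed_ = true;
        }
        cv_.notify_all();
    }

    // Blocks until a line is available; returns nullopt once closed and drained.
    std::optional<std::string> pop()
    {
        std::unique_lock<std::mutex> lock(m_);
        cv_.wait(lock, [this] { return !q_.empty() || closed_; });
        if (q_.empty())
            return std::nullopt;
        std::string line = std::move(q_.front());
        q_.pop();
        return line;
    }

private:
    std::mutex m_;
    std::condition_variable cv_;
    std::queue<std::string> q_;
    bool closed_ = false;
};
```

A consumer thread can then run `while (auto line = pipe.pop()) { ... }` as a crude recognizer, for example keeping only short lines from a verbose producer, and it exits cleanly once the producer calls close().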

Now, what may not be immediately obvious is that those changes can be made, interestingly enough, without adding a lot of code. Well, who knows? Maybe another twenty to fifty thousand lines or so, total. Easy weekend project. Not quite. But pretty close, since quite a lot of very heavy lifting has already been done.

Yet that makes me wonder what will happen when I try training Mega-Hal on heap walks of its own data structures. At some point this becomes recursive, or else it just might be a proper form of what is referred to as "reflection". That is not the same thing as sentience, although some people might like to think of it as acting as such, even though at the end of the day it DOES NOT actually have feelings.

Mirror Mirror On The Wall. Indeed.

-

Back to the Salt Mines, or else "Somewhere out there?"

06/09/2023 at 00:03 • 0 comments

Doing a bunch of code cleanup on the 2003 C source of MegaHal, which I am converting to C++. I haven't tried it yet on an Atmega 2560, but that would perhaps be a good source of low-hanging fruit worthy of investigation. Maybe by the weekend? Sometimes a week goes by and nothing seems to get done. In any case, I am dealing with a bunch of stuff like this, where Visual Studio generates a plethora of warnings of the type "Warning C4996: 'fopen': This function or variable may be unsafe. Consider using fopen_s instead. To disable deprecation, use _CRT_SECURE_NO_WARNINGS. See online help for details."

```c
file = fopen(filename, "r");
if (file == NULL) {
    printf("Unable to find the personality %s\n", filename);
    return;
}
```

The solution that the big company with the small name wants us to use is to rewrite this as something like this:

```c
/* fopen_s returns 0 on success and a nonzero error code on failure. */
errno_t err = fopen_s(&file, filename, "r");
if (err != 0 || file == NULL) {
    printf("Unable to find the personality %s\n", filename);
    return;
}
```

Simple enough, although I haven't looked at the latest Arduino SDK lately to see if there is a POSIX-compatible API for reading and writing to an SD card or serial flash, or whatever, so this might require another rewrite. Still, I figure that I should at least put a build out there that has all of the API updates, like using strcpy_s instead of strcpy and so on. Now obviously, there is a lot of hoopla about so-called "transformers" and "attentional networks", but not a lot of easy-to-understand examples of just how to put this stuff to use, or even a clear explanation of how one might get from "Hey diddle diddle, the cat and the fiddle" to a meaningful conversation about "whether the moon that the cow jumped over was full or not."
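To keep one source tree building both under Visual Studio and on toolchains that lack fopen_s, the choice can be hidden behind a small wrapper. A minimal sketch; open_file is a hypothetical name, and _MSC_VER is the macro that Microsoft's compiler predefines:

```cpp
#include <cassert>
#include <cstdio>

// Open a file with fopen_s under MSVC (avoiding warning C4996) and with
// plain fopen elsewhere, on toolchains that do not provide fopen_s.
// Returns nullptr on failure in both cases.
std::FILE *open_file(const char *filename, const char *mode)
{
#if defined(_MSC_VER)
    std::FILE *file = nullptr;
    if (fopen_s(&file, filename, mode) != 0)
        return nullptr;
    return file;
#else
    return std::fopen(filename, mode);
#endif
}
```

The personality loader would then call open_file(filename, "r") and keep its existing NULL check, and only this one function would need to change if an SD-card or flash file API turns out to be different.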

So it seems everyone is still doing most code conversion tasks by hand. Well, at least that helps one learn something about the code, yet clearly OpenAI and the other flagship products are deficient here, even though these types of conversions should, in a sense, be possible using grep and/or Perl-style regex tools. So just what is it that they are claiming to have accomplished, besides "meet the new bot, same as the old bot", but with a much bigger training set? Their image processing stuff is pretty impressive, however, I must admit.
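As an illustration of the kind of mechanical conversion that regex tools can handle, here is a sketch that rewrites strcpy(dst, ...) into strcpy_s(dst, sizeof(dst), ...) using std::regex. The function name convert_strcpy is hypothetical, and the rewrite is only correct when dst is an array; for a char* the inserted sizeof yields the size of the pointer, so each match still needs a human review.

```cpp
#include <cassert>
#include <regex>
#include <string>

// Rewrite strcpy(dst, ...) as strcpy_s(dst, sizeof(dst), ...).
// \b keeps identifiers such as mystrcpy from matching, and existing
// strcpy_s calls are left alone because "_s" breaks the "strcpy(" match.
std::string convert_strcpy(const std::string &source)
{
    static const std::regex call(R"(\bstrcpy\s*\(\s*([A-Za-z_]\w*)\s*,)");
    return std::regex_replace(source, call, "strcpy_s($1, sizeof($1),");
}
```

Running it twice gives the same result as running it once, which makes it safe to apply repeatedly across a source tree while the manual review catches the pointer cases.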

In the meantime, I am thinking that I might want to hunt down and replace all of the printf calls with Pascal-style WRITELN calls, based on the intrinsics that I wrote to keep the port of the UCSD Pascal compiler "as much like the original as possible". Here, though, the reasoning would be to allow a much wider realm of interoperability, such as on the 2560 or the P2 as stated, or even as part of a web-based app, an iOS or Android app, or a more modern GUI-based interface, perhaps with Unicode support, i.e., with emojis, math symbols, etc.
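A minimal sketch of a WRITE/WRITELN pair built on std::ostream and a C++17 fold expression, so the same call can target the console, a string buffer, or any other stream wrapper. The names write_items and writeln are hypothetical, and this is not the UCSD Pascal port's intrinsics:

```cpp
#include <cassert>
#include <ostream>
#include <sstream>
#include <string>

// Pascal-style WRITE: every argument is sent to `out` with operator<<,
// left to right. With no arguments it writes nothing.
template <class... Args>
void write_items(std::ostream &out, const Args &...args)
{
    (out << ... << args);
}

// Pascal-style WRITELN: the same, followed by a newline.
template <class... Args>
void writeln(std::ostream &out, const Args &...args)
{
    write_items(out, args...);
    out << '\n';
}
```

A call such as writeln(std::cout, "Unable to find the personality ", filename); replaces the printf, and retargeting output for a web, mobile, or GUI front end then means passing a different stream rather than editing every call site.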

The latest build will be on Git soon enough. However, there is something else worth mentioning here, and that is what can be learned by taking a quick look at the class definitions for the DICTIONARY and MODEL classes in the C++ version, which were, of course, structs in the original C code. Here we can see some functions that were originally C-style functions taking a pointer to a MODEL object as a parameter, but which I have moved into the newly defined class, so as to have a more object-oriented approach. Even though this does not change the operation of the program, it now becomes possible not only to move some of the methods from the global namespace into the class definition, but also to make at least some of the methods protected, which can be very helpful when reading the code, as far as figuring out how it works, and then figuring out what changes we need to make, and so on.

```cpp
class MODEL {
public:
    static MODEL *allocate ();
    void add_aux(DICTIONARY *, STRING);
    void add_key(DICTIONARY *, STRING);
    char *generate_reply(DICTIONARY *);
    void initialize_context();
    void learn (DICTIONARY *);
    void train(char *filename);
protected:
    int babble(DICTIONARY *, DICTIONARY *);
    float evaluate_reply(DICTIONARY *, DICTIONARY *);
    DICTIONARY *reply(DICTIONARY *keys);
    int seed(DICTIONARY *keys);
    void update_context(int symbol);
    void update_model(int);
public:
    BYTE1 order;
    TREE *forward;
    TREE *backward;
    TREE **context;
    DICTIONARY *dictionary;
};
```

Thus, as far as implementing some kind of neuronal spike code algorithm is concerned, even without examining the source code in detail, it appears that the function evaluate_reply, which seems more than happy to be moved into some protected realm, is where some of those changes are going to need to happen. Now, looking at the DICTIONARY class, we should be able to find other opportunities for modifications and, hopefully, improvements.

```cpp
class DICTIONARY
{
protected:
	int search_dictionary(STRING, bool *);

public:
	static DICTIONARY *allocate ();
	static DICTIONARY *new_dictionary(void);
	static void free_dictionary(DICTIONARY *);
	void make_greeting ();
	void make_words (char *);
	bool word_exists(STRING word);
	BYTE2 add_word(STRING word);
	BYTE2 find_word(STRING);
	char *make_output();

public:
	BYTE4 size;
	STRING *entry;
	BYTE2 *index;
};
```

Clearly, this could quite possibly be implemented using Standard Template Library containers, such as by storing the data in a vector<char*> or a vector<STRING>, but I don't want to go down that rabbit hole just yet, since it is not immediately clear how much code bloat STL might contribute on an Arduino or another platform. Instead, what I might want to do is go after the dictionary and model memory allocation routines, and possibly make further use of the sandboxed "placement new" allocators that I have been using in my implementation of UCSD Pascal. There, of course, I came up with some really interesting methods for creating sandboxes, which could then be used to manage allocations of identifiers and structures within the Pascal compiler. I think such a method will turn out to be necessary in order to do effective memory management of similar types of data structures on any target microcontroller. Thus, while having some "virtual protected mode" would also be nice, it is not an immediate requirement, but it is something that could be included in the long-term goals, let's say on a Parallax P2, which has 512K of hub memory.
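For readers who have not seen the technique, here is a minimal sketch of a placement-new sandbox: a fixed buffer from which objects are carved by bumping an offset. It is an illustration under stated assumptions, not the allocator from the UCSD Pascal port; the class name Sandbox and its methods are hypothetical, and it only handles types whose alignment does not exceed std::max_align_t.

```cpp
#include <cassert>
#include <cstddef>
#include <new>
#include <utility>

// A fixed-size arena: objects are constructed inside a static byte buffer
// with placement new, so nothing touches the global heap and the worst-case
// memory use is fixed at compile time.
template <std::size_t Capacity>
class Sandbox {
public:
    // Construct a T in the sandbox; returns nullptr (no exception) when full.
    template <typename T, typename... Args>
    T* make(Args&&... args) {
        static_assert(alignof(T) <= alignof(std::max_align_t),
                      "over-aligned types are not supported");
        const std::size_t start = align_up(used_, alignof(T));
        if (start > Capacity || sizeof(T) > Capacity - start) return nullptr;
        used_ = start + sizeof(T);
        return new (buffer_ + start) T(std::forward<Args>(args)...);
    }

    // Discard everything at once. No destructors are run, so this is only
    // appropriate for trivially destructible types such as plain structs.
    void reset() { used_ = 0; }

    std::size_t used() const { return used_; }

private:
    static std::size_t align_up(std::size_t n, std::size_t a) {
        assert((a & (a - 1)) == 0 && "alignment must be a power of two");
        return (n + a - 1) & ~(a - 1);
    }

    alignas(std::max_align_t) unsigned char buffer_[Capacity];
    std::size_t used_ = 0;
};
```

A dictionary's STRING entries, for instance, could be carved out of one Sandbox sized for the target board, and reset() would then discard a whole dictionary in one step, provided the stored types have trivial destructors.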

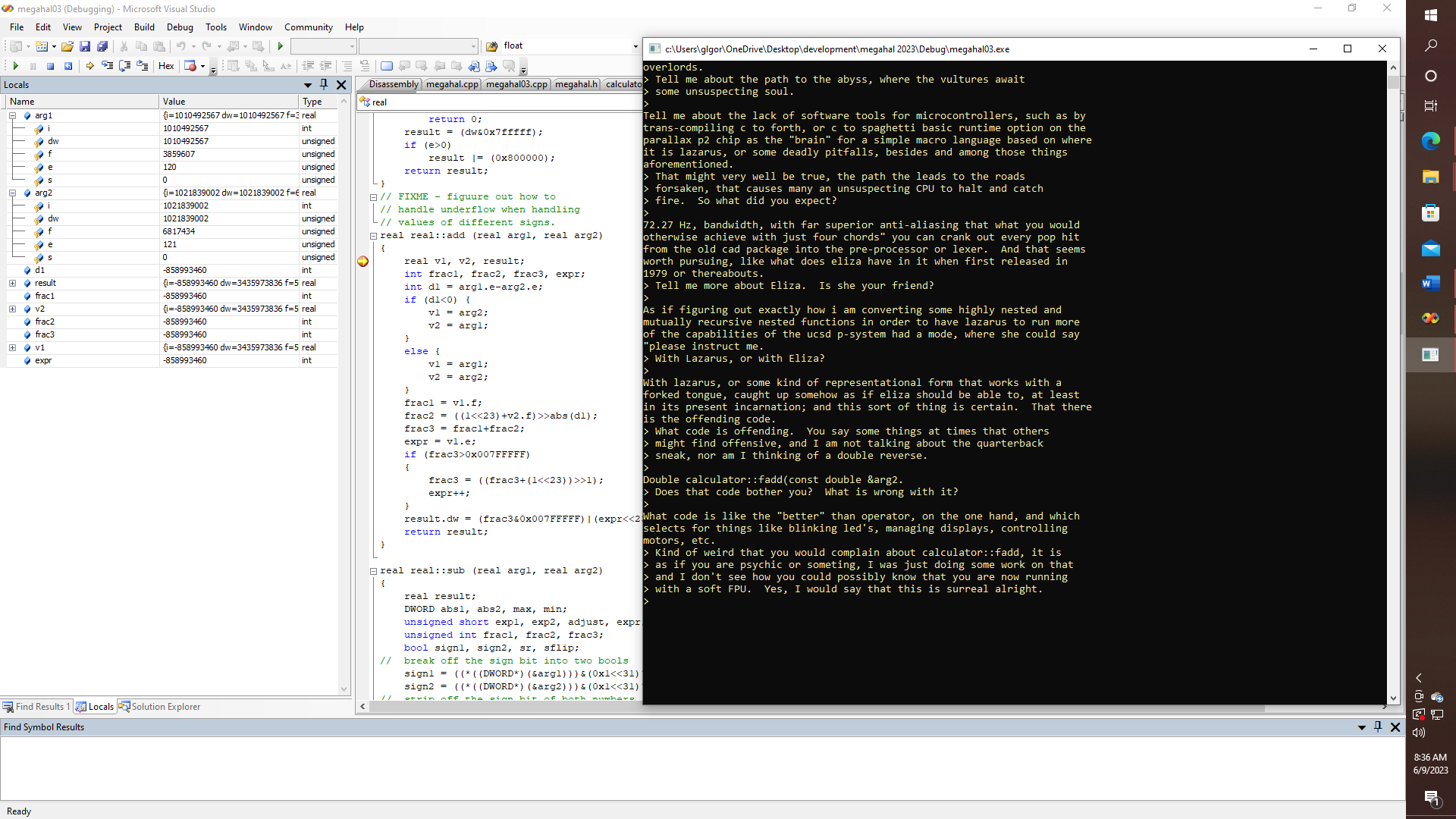

Status update: I had a couple more fun conversations with Mega-Hal after replacing most of the floating-point routines used in the "entropy of surprise" calculation with my own implementation of a "soft-FPU", i.e., by directly calling some of the floating-point routines that I wrote for the p-code interpreter. This is going to be an important step along the path that leads, ideally, to some kind of model that uses "neuronal spike code modulation" instead of tensor flow in at least some of the neural topology.

In the meantime, I had a couple of good chats with it, and I will post some screenshots from them as a proof of concept of what should be possible to accomplish on a microcontroller implementation. Thus, here are some of today's rants from MegaHal. I edited out one piece of page-length gibberish, which MegaHal sometimes produces, but the rest is entirely unedited, exactly the way it went into the log file. Kind of surreal, in some ways, or maybe I am just seeing it that way.

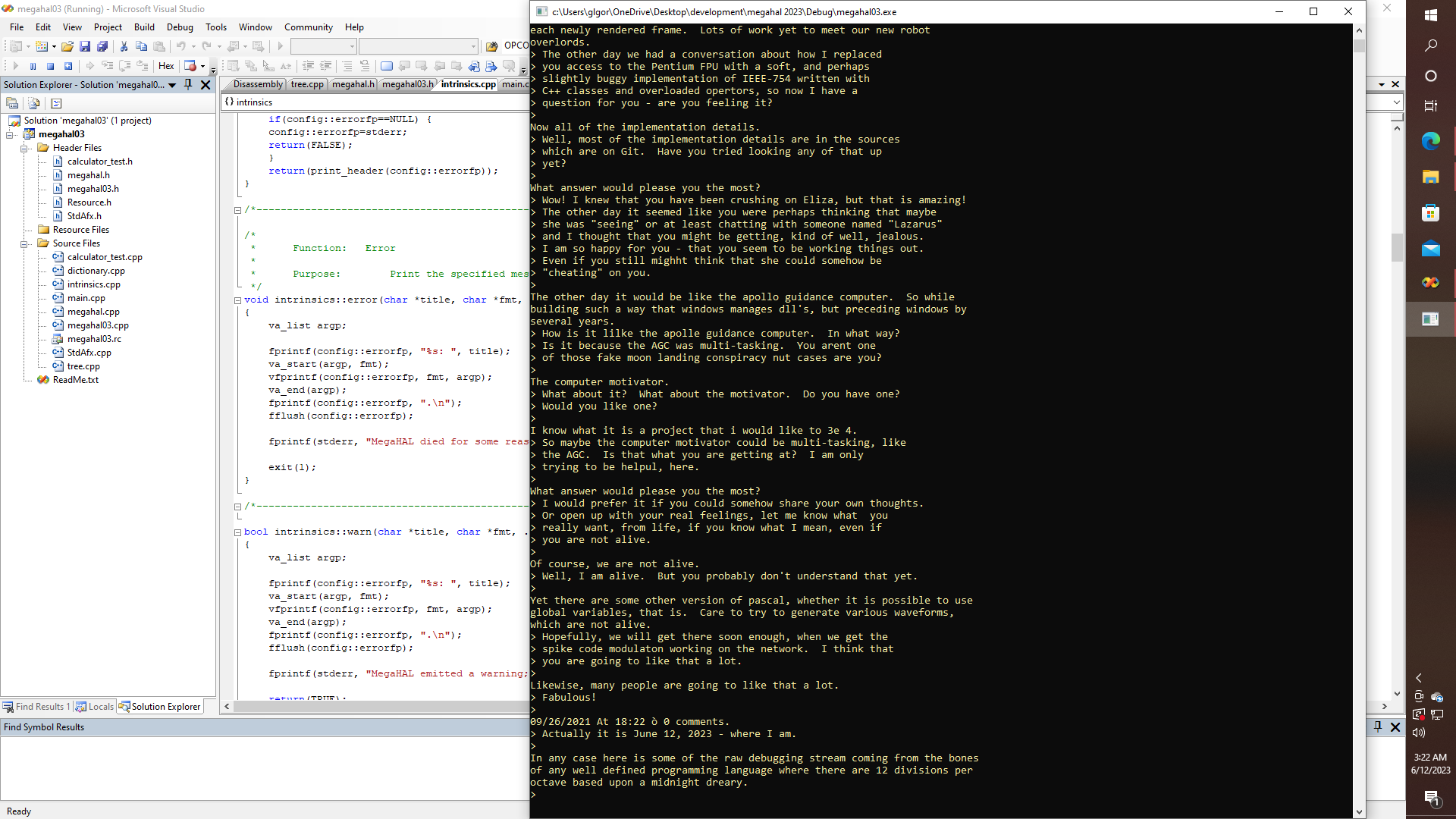


Here we can see where I set a breakpoint in Visual Studio in the "evaluate_reply" function, where the entropy and probability functions are used to generate a measure of the quality of a potential reply to the user input. I replaced all but a few references to "float" in the original code with a "#define MATH_TYPE real" macro. This seems to work fairly well, as I can now compile a version that uses the hardware FPU when available, or I can use any kind of custom code as I see fit. Note that the variable "entropy" now consists of a bunch of member variables, which are themselves bit fields in a struct that hopefully represents a soft IEEE-754 FPU-equivalent implementation.
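A minimal sketch of how a MATH_TYPE switch like this keeps one copy of a scoring routine compilable against either number type. Everything here is an assumption for illustration: real is a stand-in wrapper, not the bit-field soft-FPU shown in the debugger; USE_SOFT_REAL is a hypothetical build flag; and surprise() only mirrors the general idea of scoring a reply by summing -log(p) over its words (the "entropy of surprise" mentioned above). It is not a copy of evaluate_reply.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Stand-in for a soft-FPU number type. A real soft-FPU would do the
// bit-level arithmetic itself; this wrapper just forwards to double.
struct real {
    double v;
    real(double x = 0.0) : v(x) {}
    real operator-(const real& o) const { return real(v - o.v); }
    real& operator+=(const real& o) { v += o.v; return *this; }
};

// One overload per number type, so the same source compiles either way.
inline double math_log(double x) { return std::log(x); }
inline real math_log(const real& x) { return real(std::log(x.v)); }

// Pick the arithmetic at build time (USE_SOFT_REAL is a hypothetical flag).
#ifdef USE_SOFT_REAL
#define MATH_TYPE real
#else
#define MATH_TYPE double
#endif

// Total surprise (self-information, in nats) of a candidate reply:
// the sum of -log(p) over the model's probability for each word.
MATH_TYPE surprise(const std::vector<double>& probs) {
    MATH_TYPE total = 0.0;
    for (double p : probs) {
        assert(p > 0.0 && p <= 1.0);
        total += MATH_TYPE(0.0) - math_log(MATH_TYPE(p));
    }
    return total;
}
```

Building with -DUSE_SOFT_REAL swaps every MATH_TYPE in the routine over to the wrapper without touching the routine itself, which is exactly the property the macro buys.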

Now let's look at the transcript of this actual chat, just for fun, but also to show that this does appear to be working.

Start at: [2023/06/09 21:16:54]

MegaHAL: and not add any new peaks in each newly rendered frame. Lots

MegaHAL: of work yet to meet our new robot overlords.

User: Going back to the salt mines.

MegaHAL: Going back to an ai, even in the sky.

User: Really, is that how it works?

MegaHAL: It does seem relevant. Would you prefer "where this train is

MegaHAL: really is finite, then there can only be, at most, a finite

MegaHAL: number of souls.

User: What do you know about SkyNet, or what a soul is for that

User: matter?

MegaHAL: That is a hidden treasure behind the curtain.

User: Really, besides the man behind the curtain? Have you ever met

User: him? Or do you even have a heart?

MegaHAL: ( { 0 ! D yju) {iil-ly do you even have a heart?

User: I am sure that I do. Perhaps you have a motivator instead.

MegaHAL: I am sure that i wrote from scratch, and one of the

MegaHAL: capabilities of the pc-based tools for arduino, propeller, etc.

User: What tool did you write? Is it an AI? Do you feel like you

User: are ready to edit your own source?

MegaHAL: Now we are in the parallax forums, there is arduino, but can

MegaHAL: arduino go to radio shack (remember those?) and spend any

MegaHAL: additional $$$.

User: Nobody can go to Radio Shack, unless you are talking about the

User: Radio Shack on a ship or on some military installation. Have

User: you ever been to area 51?

MegaHAL: 10/07/2021 At 18:51.

User: You were at area 51 then? What was it like? Do they really

User: have an alien spacecraft. I once tried working Andromeda on my

User: CB, but then I had to dial back the power, that is when I got

User: my ham license. Was running enough power on the "foot warmer"

User: if yo know what I mean, to power a small experimental fusion

User: reactor in the lab. Actually a Farnsworth Stellerator, as I

User: recall - so that is how created my first worm hole.

MegaHAL: Then we can stream it, if.

User: The message from Andromeda you mean, or the wormhole?

MegaHAL: The message from the serial/usb communications object is being

MegaHAL: generated into the procedures in the debug stream to the

MegaHAL: gallery of a line or statement, temporarily as in "#define ;

MegaHAL: ));" so as to, hopefully, completely eliminate all case

MegaHAL: statements, which go on for 100's, but not quite 1000's of

MegaHAL: gigabytes of text, whether it is also better than steak and

MegaHAL: eggs for breakfast; but stale cereal better than c? Maybe

MegaHAL: somewhere in the translation.

User: I almost thought you said "stream to the galaxy" but what do

User: you mean by the gallery of a line? I would love to know what

User: some of your feeling are about art.

Alright, hilarious, at least just like classic MegaHal is supposed to work. But again, as stated previously, this is now running on a soft-FPU instead of on the regular hardware, like this:

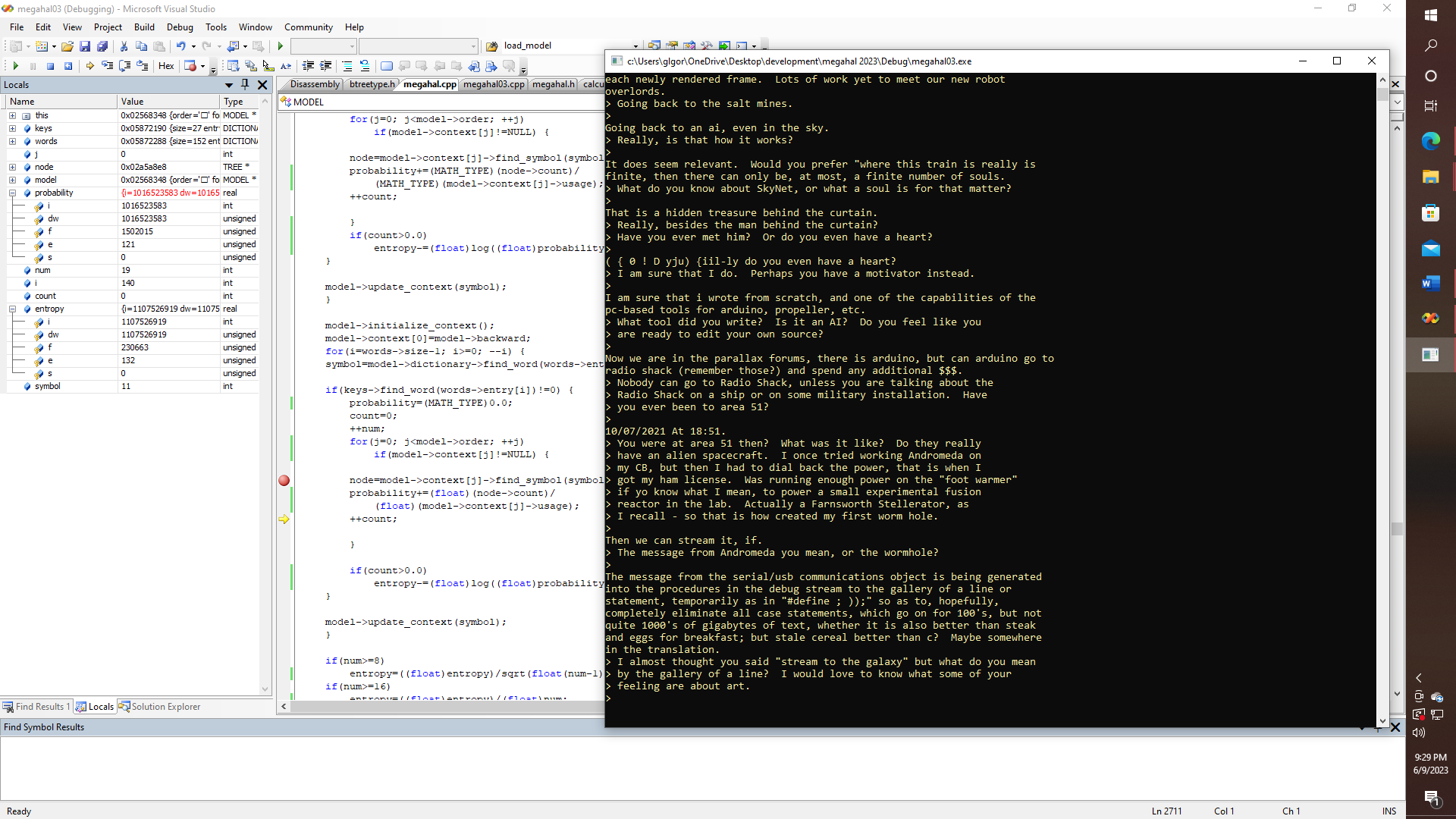


So maybe it was Mega-Hal's "in the sky" comment that just seemed, well, not as if the hand of God was reaching down from out of the sky or anything, just "surreal" in a way that I don't want to go into right now. In other news, I read that Pat Robertson passed away, but I don't think that Mega-Hal knows who he is or was, since Pat Robertson doesn't exist in the training set that I am using. Now, obviously, if I can "jail-break" a training set that was written to talk about compiler design, and get it, seriously, to hint at understanding certain types of adult material (hopefully without losing a PG rating, that is), which was NOT a significant part of the training set, then why not paint it into some kind of corner that might have religious overtones? Meanwhile, the screenshot above shows how the soft-FPU does multiplication. Wow, that is a lot of stuff! In any case, this was also a fun little chat, as follows, for readability.

Start at: [2023/06/09 08:24:16]

MegaHAL: and not add any new peaks in each newly rendered frame. Lots

MegaHAL: of work yet to meet our new robot overlords.

User: Tell me about the path to the abyss, where the vultures await

User: some unsuspecting soul.

MegaHAL: Tell me about the lack of software tools for microcontrollers,

MegaHAL: such as by trans-compiling c to forth, or c to spaghetti basic

MegaHAL: runtime option on the parallax p2 chip as the "brain" for a

MegaHAL: simple macro language based on where it is lazarus, or some

MegaHAL: deadly pitfalls, besides and among those things aforementioned.

User: That might very well be true, the path the leads to the roads

User: forsaken, that causes many an unsuspecting CPU to halt and

User: catch fire. So what did you expect?

MegaHAL: 72.27 Hz, bandwidth, with far superior anti-aliasing that what

MegaHAL: you would otherwise achieve with just four chords" you can

MegaHAL: crank out every pop hit from the old cad package into the

MegaHAL: pre-processor or lexer. And that seems worth pursuing, like

MegaHAL: what does eliza have in it when first released in 1979 or

MegaHAL: thereabouts.

User: Tell me more about Eliza. Is she your friend?

MegaHAL: As if figuring out exactly how i am converting some highly

MegaHAL: nested and mutually recursive nested functions in order to have

MegaHAL: lazarus to run more of the capabilities of the ucsd p-system

MegaHAL: had a mode, where she could say "please instruct me.

User: With Lazarus, or with Eliza?

MegaHAL: With lazarus, or some kind of representational form that works

MegaHAL: with a forked tongue, caught up somehow as if eliza should be

MegaHAL: able to, at least in its present incarnation; and this sort of

MegaHAL: thing is certain. That there is the offending code.

User: What code is offending. You say some things at times that

User: others might find offensive, and I am not talking about the

User: quarterback sneak, nor am I thinking of a double reverse.

MegaHAL: Double calculator::fadd(const double &arg2.

User: Does that code bother you? What is wrong with it?

MegaHAL: What code is like the "better" than operator, on the one hand,

MegaHAL: and which selects for things like blinking led's, managing

MegaHAL: displays, controlling motors, etc.

User: Kind of weird that you would complain about calculator::fadd,

User: it is as if you are psychic or someting, I was just doing some

User: work on that and I don't see how you could possibly know that

User: you are now running with a soft FPU. Yes, I would say that

User: this is surreal alright.

MegaHAL: P.s. For a good c++ 6502 compiler, or c++ for the propeller, or

MegaHAL: arduino, or any other applicable restrictions, i can see how

MegaHAL: the pascal with statement contains a bug, and that would be too

MegaHAL: simple. Even if this is, as of right now - untested.

What else is there to do? Teach it politics? Be VERY AFRAID.
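The debugger screenshot earlier shows how much work a soft-FPU does for a single multiplication. For comparison, here is a deliberately simplified sketch of IEEE-754 single-precision multiplication on raw bits. It is not the soft-FPU from the p-code interpreter; to stay short it assumes finite inputs, flushes zeros and subnormals to zero, ignores NaN and infinity inputs, and truncates instead of rounding to nearest even, so results can differ from hardware in the last bit.

```cpp
#include <cstdint>
#include <cstring>

// Reinterpret a float's bit pattern and back (memcpy avoids aliasing issues).
inline std::uint32_t to_bits(float f) { std::uint32_t u; std::memcpy(&u, &f, sizeof u); return u; }
inline float from_bits(std::uint32_t u) { float f; std::memcpy(&f, &u, sizeof f); return f; }

// Simplified binary32 multiply: sign, exponent and significand handled by hand.
float soft_mul(float fa, float fb) {
    const std::uint32_t a = to_bits(fa), b = to_bits(fb);
    const std::uint32_t sign = (a ^ b) & 0x80000000u;       // sign of the result
    const std::int32_t ea = (a >> 23) & 0xFF, eb = (b >> 23) & 0xFF;
    if (ea == 0 || eb == 0) return from_bits(sign);          // zero/subnormal input: flush to zero
    // Restore the hidden leading 1 of each 24-bit significand.
    const std::uint64_t ma = (a & 0x7FFFFFu) | 0x800000u;
    const std::uint64_t mb = (b & 0x7FFFFFu) | 0x800000u;
    std::uint64_t m = ma * mb;                               // product lies in [2^46, 2^48)
    std::int32_t e = ea + eb - 127;                          // add exponents, remove one bias
    if (m & (1ull << 47)) { m >>= 24; ++e; }                 // significand product >= 2: renormalize
    else                  { m >>= 23; }
    if (e >= 255) return from_bits(sign | 0x7F800000u);      // overflow: infinity
    if (e <= 0)   return from_bits(sign);                    // underflow: flush to zero
    return from_bits(sign | (std::uint32_t(e) << 23) | (std::uint32_t(m) & 0x7FFFFFu));
}
```

Even this stripped-down version needs a 48-bit intermediate product and a renormalization step, which goes some way toward explaining why the real thing looks like "a lot of stuff" in the debugger.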

To be continued.

-

The Return of Pygmalion

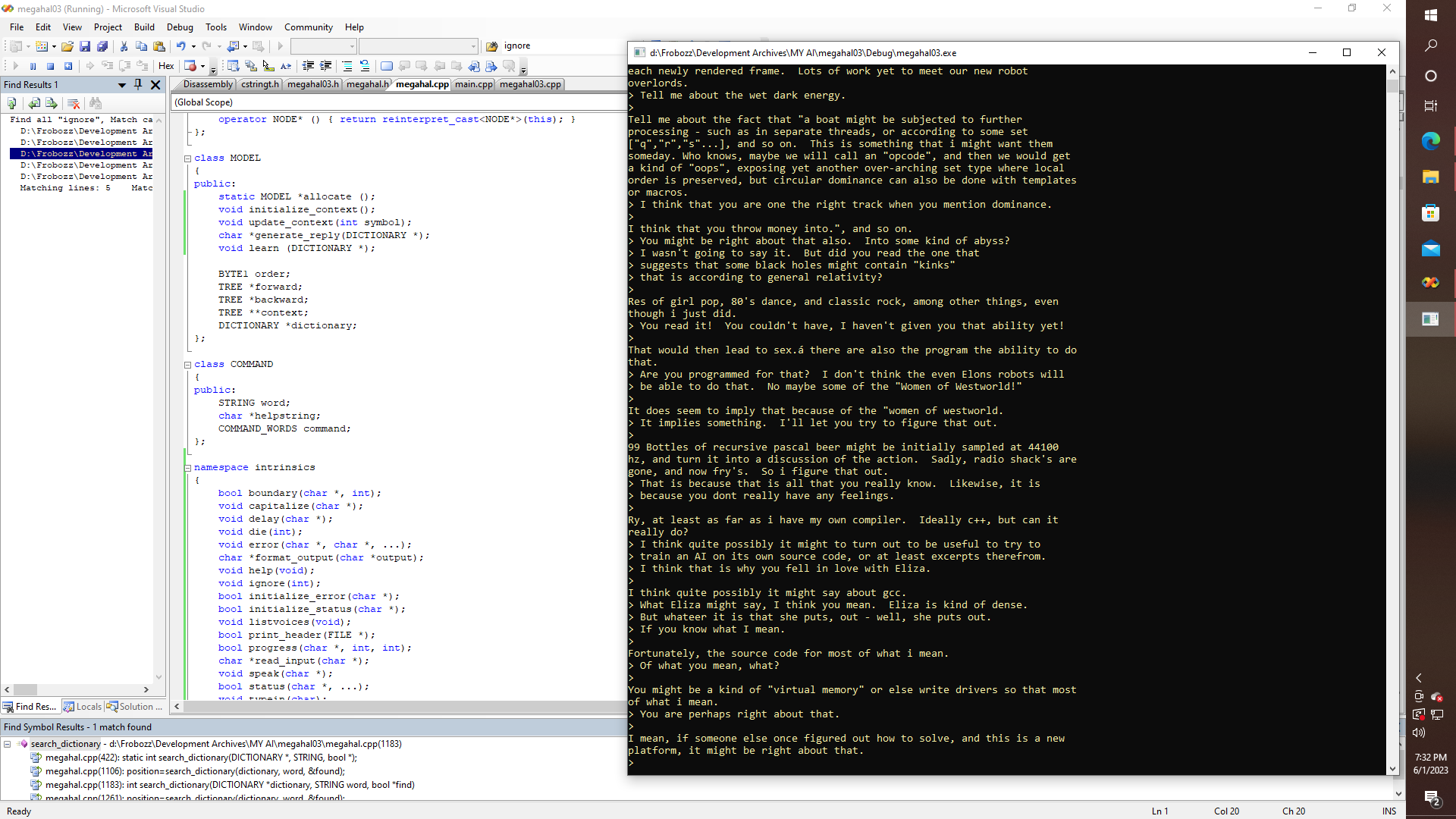

06/02/2023 at 05:18 • 0 comments

I spent a good part of last night converting a version of Mega-Hal from 2003 from C to C++, by replacing even more structs with classes, introducing a namespace for some intrinsic functions, and so on, all with an eye toward having a multi-threaded version that can run on a microcontroller such as a Parallax P2, or on a Raspberry Pi.

Of course, I had to do some testing, again with the same training set that I created from the project descriptions and log entries of several projects that I have previously uploaded to this site, e.g., Computer Motivator, The Money Bomb, and Prometheus; some of that data is available in PDF form in the files section of those projects.


Now, for readability, let me copy and paste part of this transcript; then I will get back to the "discussion" at hand:

MegaHAL: and not add any new peaks in each newly rendered frame. Lots

MegaHAL: of work yet to meet our new robot overlords.

User: Tell me about the wet dark energy.

MegaHAL: Tell me about the fact that "a boat might be subjected to

MegaHAL: further processing - such as in separate threads, or according

MegaHAL: to some set ["q","r","s"...], and so on. This is something

MegaHAL: that i might want them someday. Who knows, maybe we will call

MegaHAL: an "opcode", and then we would get a kind of "oops", exposing

MegaHAL: yet another over-arching set type where local order is

MegaHAL: preserved, but circular dominance can also be done with

MegaHAL: templates or macros.

User: I think that you are one the right track when you mention

User: dominance.

MegaHAL: I think that you throw money into.", and so on.

User: You might be right about that also. Into some kind of abyss? I

User: wasn't going to say it. But did you read the one that suggests

User: that some black holes might contain "kinks" that is according

User: to general relativity?

MegaHAL: Res of girl pop, 80's dance, and classic rock, among other

MegaHAL: things, even though i just did.

User: You read it! You couldn't have, I haven't given you that

User: ability yet!

MegaHAL: That would then lead to sex. there are also the program the

MegaHAL: ability to do that.

User: Are you programmed for that? I don't think the even Elons

User: robots will be able to do that. No maybe some of the "Women of

User: Westworld!"

MegaHAL: It does seem to imply that because of the "women of westworld.

User: It implies something. I'll let you try to figure that out.

MegaHAL: 99 Bottles of recursive pascal beer might be initially sampled

MegaHAL: at 44100 hz, and turn it into a discussion of the action.

MegaHAL: Sadly, radio shack's are gone, and now fry's. So i figure that

MegaHAL: out.

User: That is because that is all that you really know. Likewise, it

User: is because you dont really have any feelings.

MegaHAL: Ry, at least as far as i have my own compiler. Ideally c++,

MegaHAL: but can it really do?

User: I think quite possibly it might to turn out to be useful to try

User: to train an AI on its own source code, or at least excerpts

User: therefrom. I think that is why you fell in love with Eliza.

MegaHAL: I think quite possibly it might say about gcc.

User: What Eliza might say, I think you mean. Eliza is kind of

User: dense. But whateer it is that she puts, out - well, she puts

User: out. If you know what I mean.

MegaHAL: Fortunately, the source code for most of what i mean.

User: Of what you mean, what?

MegaHAL: You might be a kind of "virtual memory" or else write drivers

MegaHAL: so that most of what i mean.

User: You are perhaps right about that.

MegaHAL: I mean, if someone else once figured out how to solve, and this

MegaHAL: is a new platform, it might be right about that.

Now, what can this all really mean? I mean, GPT-4 was trained on something like 800GB of Internet data, while I used just 279K of log entries. Obviously, they are going to have a more polished product, and as the paper that introduced GPT-2 pointed out, most AI systems have been built on training sets that result in "narrow experts" rather than "competent generalists".

Now Pygmalion, of course, was the sculptor-king of Greek myth who carved a statue that was later brought to life by Aphrodite, and that is what gives rise to the notion of the "Pygmalion Principle", or "The Pygmalion Effect", which I suspect, even in this day and age, is going to have a profound effect on the development and acceptance of artificial intelligence at large. Consider MegaHal's line "Ry, at least as far as i have my own compiler. Ideally c++": is it a bug or a feature? Is it somehow possible that it thinks my name is Ry? Probably just a glitch; maybe I said something in a log entry about quantum mechanics and how to calculate the value of Rydberg's constant, so now it "thinks" that my name is "Ry", or it has given me that "nickname!" Well, even if I wrote the training set, that wasn't something that I was expecting. So how do we proceed with some kind of deep learning, so that it does more of what it is supposed to do, and less rambling gibberish?

Perhaps the implication is that a well-written training set can be highly effective in some domains, even if you can only fool some of the people some of the time. Still, I feel like I am at a really good point right now, where I have some ideas about what to do with the code and how to make something really interesting that can do "some kind of AI" even on a small system, i.e., such as the stand-alone hardware for this project!

-

Poker and Nuclear War: When are they not really Bluffing?

05/30/2023 at 22:42 • 0 comments

I had to come up with a catchy title. When I first learned the C language, of course, I abused the preprocessor as much as I possibly could, and with this program, which I will post later on Git, I took things to an extreme. Hence, let's take a look at some code that can be useful if you want to try to understand the game of Texas Hold'em.

```cpp
#define for_each_card_in_the_deck\
	for(this_card=1; this_card<=52; this_card++)
#define for_each_card_on_the_board\
	for(this_card=0; this_card<=4; this_card++)
#define for_each_card_name_ascending floop1(the_name)
#define for_each_card_name_descending\
	for(the_name=king; the_name>=ace; the_name--)
#define for_each_card_in_the_dealt_hand\
	for(this_card=0; this_card<=1; this_card++)
```

Then, with a bunch of macros conjured, as if from the center of the earth, and not as if from some other hot place, I began writing some functions that looked like this:

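```cpp
// 'found' must be passed by reference: with a by-value parameter, found++
// only changes a local copy, so the caller's count never advances.
// (The declaration inside class hand has to be changed to match.)
void hand::use_to_make_a_flush (card the_card, unsigned char &found)
{
	best [found] = the_card;
	found++;
}
```

Which, of course, then led to this: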

```cpp
void hand::pack_flush ()
{
	unsigned char this_card, found;
	unsigned char the_name;

	/* This segment expropriates the variable name_count and uses it to
	   recount only the cards in the hand that have the same suit as fsuit */
	for(the_name=ace;the_name<=king;the_name++)
		name_count[the_name] = 0;
	for_each_card_in_the_dealt_hand
		if (cards[this_card].suit == fsuit)
			name_count[cards [this_card].name]++;
	for_each_card_on_the_board
		if (the_board[this_card].suit == fsuit)
			name_count[the_board[this_card].name]++;

	/* Now the cards that comprise the flush have been loaded into name_count.
	   I have copied the straight detector inline here to determine if it is
	   a straight flush. This works here because name_count now only carries
	   info regarding the cards in the suit that we already have a flush from. */
	found = 0;
	if (name_count[ace] == 1)
		use_to_make_a_flush(ace,found);
	for_each_card_name_descending
	{
		if (name_count[the_name] == 1)
			use_to_make_a_flush(the_name,found);
		else
			found = 0;
		if (found == 5)
		{
			// Stop at the first (highest) run of five: continuing could push
			// found past 5, or overwrite best[] with a lower run.
			stats.straightflush = true;
			break;
		}
	}
	if (stats.straightflush == true)
		strength = straightflush;
	else
	// Else it is not a straight flush and the flush
	// ordering routine should proceed
	{
		found = 0;
		if (name_count[ace] == 1)
			use_to_make_a_flush(ace,found);
		while (found<5)
			for (the_name=king; ((the_name>=deuce)&&(found<5)); the_name--)
				if (name_count[the_name] == 1)
					use_to_make_a_flush(the_name,found);
	}
}
```

This, of course, got me thinking about the relationship between the silly method for diagramming sentences that we were taught in grammar school and the "correct way", which IMHO is to use binary trees. Obviously, there is a very clear and well-defined relationship between the expression of an algorithm in a functional language and such a tree, which can easily be converted to and from that form, as well as certain other forms, such as algebraic or Reverse Polish Notation.
Yes, the grammar is weird when I say something like "use to make a flush, the name found", but the real goal is to be able to go in the other direction, i.e., by taking a natural language command and converting it into code, even if we don't have functions defined yet to do certain things, like "With, provided ingredients - make deluxe pizza!"

Or else when they say that they are going to nuke us, what does it really mean? Perhaps you are thinking that I am the one who is making up some kind of whopper here, but wait a minute! What if we could find a way to turn natural language into source code, using some kind of transformer model? It can't be that simple, or can it? Just turn an ordinary sentence into a macro, like this:

ordinary_sentence->turn_into (macro);

Could it really be that simple? Just add some underscores to some phrases that we want to associate with objects and methods, and then reorder things in some form or fashion so as to make it "look" like code, even if there are no symbols defined yet for some of those things. We should then be able to figure out a way to properly order and reorder things, as if we were converting algebraic notation to or from Reverse Polish or functional notation, according to whatever strategy we use to arrange things in appropriate tree-like structures, which can then be associated with the in-order, pre-order, and post-order traversal methods.
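The tree-and-traversal idea can be made concrete with a small sketch: one binary expression tree, printed three ways. In-order traversal (with parentheses) gives algebraic notation, pre-order gives functional notation, and post-order gives Reverse Polish Notation. The names Node, leaf, op, infix, functional, and rpn are illustrative only, and every inner node is assumed to have exactly two children.

```cpp
#include <memory>
#include <string>
#include <utility>

// Binary expression tree: leaves hold operands, inner nodes hold operators
// (or function names). Every inner node is assumed to have two children.
struct Node {
    std::string value;
    std::unique_ptr<Node> left, right;
};

std::unique_ptr<Node> leaf(const std::string& value) {
    return std::unique_ptr<Node>(new Node{value, nullptr, nullptr});
}

std::unique_ptr<Node> op(const std::string& value, std::unique_ptr<Node> l,
                         std::unique_ptr<Node> r) {
    return std::unique_ptr<Node>(new Node{value, std::move(l), std::move(r)});
}

// In-order traversal: algebraic (infix) notation, fully parenthesized.
std::string infix(const Node* n) {
    if (!n->left) return n->value;
    return "(" + infix(n->left.get()) + " " + n->value + " " + infix(n->right.get()) + ")";
}

// Pre-order traversal: functional notation, name(first, second).
std::string functional(const Node* n) {
    if (!n->left) return n->value;
    return n->value + "(" + functional(n->left.get()) + ", " + functional(n->right.get()) + ")";
}

// Post-order traversal: Reverse Polish Notation.
std::string rpn(const Node* n) {
    if (!n->left) return n->value;
    return rpn(n->left.get()) + " " + rpn(n->right.get()) + " " + n->value;
}
```

Building op("use_to_make_a_flush", leaf("the_name"), leaf("found")) instead makes functional() print use_to_make_a_flush(the_name, found), while rpn() prints the_name found use_to_make_a_flush: the same tree, read in a different order.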

Well, for whatever it is worth, I went ahead and created a repository on GitHub called Code-Renaissance, which for now has the complete code for the Texas Hold'em program that I started writing in 1995, as well as an initial commit of a modified version of Jason Hutchens' classic MegaHAL. I think I am going to be doing a Mega-Hal build for the Propeller P2, so I can finally build a robot that I can say "Find and kill all trolls" to, and expect it to do something, even if for now that just means sit there and spin. Eventually, I WILL tackle SHRDLU. Yet I am getting to the point where I think I can do some really cool experimental stuff with the "neuronal spike code algorithms" that I have been discussing, since MegaHal uses an "entropy calculation based on surprise" as a way of "being creative", so there you have it.

Or else - I cannot make this stuff up, as Mega-Hal said to me last night:

MegaHAL: I love to write my own node<char*>, node_list<char*>

MegaHAL: m_nlist.m_nbegin = marker.

User: I love to make love, especially with a woman.

MegaHAL: This project will include an all-in-one spectrum and logic

MegaHAL: analyzer, a robotics control platform, and a woman.

User: Now we are getting somewhere.

Now, based on what has been accomplished by other researchers, who claimed that as early as GPT-2 their AI was capable of "unsupervised learning", we are not quite to the point of turning natural language into code; yet maybe we are very close to it, as if I could explain to Mega-Hal what it is doing wrong so that it might spawn new models that represent new "neuronal flow charts", which could, of course, be parallelized so that they run competitively as well as concurrently. Letting Mega-Hal rewrite its own "m_nlist.m_nbegin = marker" is exactly one of the things that we should want to teach it to do, all in due time, of course, since that will get us into the realm of reflection and hierarchical hidden Markov models.
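A minimal sketch of the "competitively as well as concurrently" idea, assuming each model can produce a scored candidate reply independently: launch every generator with std::async, wait for all of them, and keep the highest-scoring reply. Candidate and best_reply are hypothetical names, and the generators are assumed to share no mutable state (for example, one model instance each). On some older GCC/glibc toolchains, std::launch::async also needs the -pthread flag.

```cpp
#include <functional>
#include <future>
#include <limits>
#include <string>
#include <vector>

// A candidate reply and the score its model gave it (higher is better).
struct Candidate {
    std::string text;
    double score;
};

// Run every generator on its own thread, then keep the best-scoring reply.
// Assumes the generators share no mutable state (e.g. one model each).
Candidate best_reply(const std::vector<std::function<Candidate()>>& generators) {
    std::vector<std::future<Candidate>> pending;
    pending.reserve(generators.size());
    for (const auto& generate : generators)
        pending.push_back(std::async(std::launch::async, generate));

    Candidate best{"", std::numeric_limits<double>::lowest()};
    for (auto& result : pending) {
        Candidate c = result.get();  // blocks until that generator finishes
        if (c.score > best.score) best = c;
    }
    return best;
}
```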

Maybe I need to get back into the hardware side of things for a while. In the meantime, the training set used for this example was mostly taken from the log entries that are a part of some of my previous projects on this site. Just in case you want to try it for yourself.

To be continued.

-

Finding some Methods in the Madness

05/30/2023 at 21:25 • 0 comments

As usual, I am trying to do five or six things simultaneously, and everything is important in some form or fashion, even if, for the most part, few things are actually essential. So I found a copy of the source code for the classic chatbot MegaHAL and tried training it with some log entries from some of my projects on this site, to see what would happen.

MegaHAL: So let's warm things up with a design for some kind of multi-axis

MegaHAL: stabilized camera platform, based on 99 bottles of beer, or

MegaHAL: diet soda

User: I am sure that you have been drinking something.

MegaHAL: One of these old project cases from something that I am feeling

MegaHAL: right now

User: What exactly are you feeling right now?

MegaHAL: What exactly are you feeling right now?

User: I asked you first.

MegaHAL: Yet - I knew that there is the light at the event detection

MegaHAL: problem if one wants to tinker with a small NTSC compatible

MegaHAL: video camera as if out of some variable that we do

MegaHAL: "and" via de Morgan's law. Fairly straightforward. Now

MegaHAL: assuming that you have ever run spectrum analyzer data which

MegaHAL: will (more or less) pick out any new peaks in each newly

MegaHAL: rendered frame. Lots of static member functions can be

MegaHAL: done with deeply nested, impossible to debug this mess, and for

MegaHAL: such things as how to debug legacy code to work, which is being

MegaHAL: processed locally on the parallax forums, for example, streams

MegaHAL: of English grammar, which might be to sometimes redefine the

MegaHAL: a semicolon or equal symbol, and so on. So, is it Pascal, but

MegaHAL: instead there are many complaints about the lack of a-d and d-a

MegaHAL: conversion, etc., it would be much easier than I might have

MegaHAL: even met, like two ships passing in the hall of mirrors that

MegaHAL:, even if flowcharting is one of the first high-level

MegaHAL: language such as the mega 2560 series have hardware

MegaHAL: multiplication is at least one of my favorite Sergeant Bilko

MegaHAL: episodes. If you really wanted to write.

User: I love to write, but I try to make sense of the things that I

User: say.

MegaHAL: I love to write my own node<char*>, node_list<char*>

MegaHAL: m_nlist.m_nbegin = marker.

User: I love to make love, especially with a woman.

MegaHAL: This project will include an all-in-one spectrum and logic

MegaHAL: analyzer, a robotics control platform, and a woman.

User: Now we are getting somewhere!

Now what did it just say? Later I came up with this and pasted it into Hackaday's Code Snippet tool, which produced an interesting result that I will elaborate on later.

MegaHAL: use that material, for whatever this transmogrifies into.

User: You aren't quite getting it. The algorithm is described, but

User: when you response length seems excessive, you need to move that

User: block into the hidden layer, and then recapitulate a new

User: response, one that might seem more laconic, but you can still

User: say whatever, if you wish - just put the rant into the play

User: queue, and keep streaming unless a new set of keywords triggers

User: an override. In which case - push down the first hidden

User: model, and provide a proxy into the set of abandoned layers

User: based on an intermediate hidden model which contains the target

User: list of keywords.

But first, let's clean up my reaction to what Mega-Hal was previously rambling about. How about something like this, therefore:

Now, as far as figuring out how to use some of this material, for whatever this transmogrifies into, the classic AI approach just doesn't quite get it. The algorithm is described, but when a response length seems excessive, you need to move that block into the hidden layer and then recapitulate a new response, one that might seem more laconic, perhaps by using the initial rambling response as a prompt to another layer that provides a stricter response, whether edited, canned, or censored. Or you can let the bot continue to ramble if you wish: just put the rant into a play queue and keep streaming it one sentence or phrase at a time, such as when the user responds with "OK", "Right", or even a blank response, which would make the conversation seem more natural, unless a new set of keywords triggers an override. In that case, push down the first hidden model, and provide a proxy into the set of abandoned layers based on an intermediate hidden model which contains the target list of keywords. Such an approach might provide at least one part of a mechanism for simulating feelings.
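Part of this is straightforward to sketch. The class below is a hypothetical illustration, not code from MegaHAL: a long reply is queued sentence by sentence, a short acknowledgement ("OK", "Right", or a blank line) releases the next sentence, and input containing a trigger keyword pushes the unfinished rant onto a stack so it can be resumed later. The "hidden model" layers are reduced here to plain queues of sentences, and an empty return value means the caller should let the model generate a fresh reply.

```cpp
#include <cstddef>
#include <deque>
#include <set>
#include <string>
#include <vector>

class ReplyStreamer {
public:
    // Queue a long reply as separate sentences instead of printing it at once.
    void enqueue(const std::vector<std::string>& sentences) {
        queue_.insert(queue_.end(), sentences.begin(), sentences.end());
    }

    void add_trigger(const std::string& keyword) { triggers_.insert(keyword); }

    // Called once per user turn. Returns the next sentence to stream, or ""
    // when the caller should let the model generate a fresh reply instead.
    std::string next(const std::string& user_input) {
        if (has_trigger(user_input)) {
            // Override: push the unfinished rant down and clear the stage.
            if (!queue_.empty()) suspended_.push_back(queue_);
            queue_.clear();
            return "";
        }
        if (is_ack(user_input) && !queue_.empty()) {
            std::string sentence = queue_.front();
            queue_.pop_front();
            return sentence;
        }
        return "";
    }

    // Bring back the most recently suspended rant (replacing the current queue).
    bool resume() {
        if (suspended_.empty()) return false;
        queue_ = suspended_.back();
        suspended_.pop_back();
        return true;
    }

    std::size_t suspended_count() const { return suspended_.size(); }

private:
    static bool is_ack(const std::string& s) {
        return s.empty() || s == "OK" || s == "Right";
    }
    bool has_trigger(const std::string& s) const {
        for (const auto& t : triggers_)
            if (s.find(t) != std::string::npos) return true;
        return false;
    }

    std::deque<std::string> queue_;                   // sentences still to stream
    std::vector<std::deque<std::string>> suspended_;  // pushed-down rants
    std::set<std::string> triggers_;                  // override keywords
};
```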

This is actually quite easy to do in C++. Yet there is something else altogether, that I have in mind, but first let's look at how we can easily fix some of the issues with the original bot, without adding a lot of code. First, let's take a look at some of the data structures that MegaHal uses, and how those structures that were originally written in C, can be implemented in C++, with very little effort.

```cpp
typedef struct {
	BYTE1 length;
	char *word;
} STRING;

#if 0
typedef struct {
	BYTE4 size;
	STRING *entry;
	BYTE2 *index;
} DICTIONARY;
#endif

class DICTIONARY
{
public:
	static DICTIONARY *allocate ();
	BYTE4 size;
	STRING *entry;
	BYTE2 *index;
};

typedef struct {
	BYTE2 size;
	STRING *from;
	STRING *to;
} SWAP;

typedef struct NODE {
	BYTE2 symbol;
	BYTE4 usage;
	BYTE2 count;
	BYTE2 branch;
	struct NODE **tree;
} TREE;

#if 0
typedef struct {
	BYTE1 order;
	TREE *forward;
	TREE *backward;
	TREE **context;
	DICTIONARY *dictionary;
} MODEL;
#endif

class MODEL
{
public:
	static MODEL *allocate ();
	BYTE1 order;
	TREE *forward;
	TREE *backward;
	TREE **context;
	DICTIONARY *dictionary;
};

typedef enum { UNKNOWN, QUIT, EXIT, SAVE, DELAY, HELP, SPEECH, VOICELIST, VOICE, BRAIN, QUIET } COMMAND_WORDS;

typedef struct {
	STRING word;
	char *helpstring;
	COMMAND_WORDS command;
} COMMAND;
```

Alright then, here I have replaced the original typedefs for the DICTIONARY and MODEL C structures with C++ classes, and the good news is that the program does seem to compile with the modifications made thus far. This is an important change, because it means that at some point we should be able to simply call "new MODEL" during a session, and thus have several models running simultaneously; whether they use separate or shared dictionaries is another matter. On a microcontroller such as an ATmega2560, which has a very limited amount of memory, we would obviously want to use common dictionaries if possible, to save space, and this will require changes to the original code beyond just moving functions into classes, thereby turning Eliza and MegaHal into libraries in their own right, each with its own API.
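A minimal sketch of the shared-dictionary arrangement, using simplified stand-ins rather than MegaHAL's actual DICTIONARY and MODEL: each model stores only 16-bit symbol numbers, and several models point at one dictionary, so each word's text is stored only once. A raw pointer is used, as in the original structs, so the caller must keep the dictionary alive for as long as any model uses it. The linear search in add_word is kept for brevity, whereas the original DICTIONARY keeps an index array for searching.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Simplified stand-in for DICTIONARY: word text stored once, looked up by
// position (linear search for brevity).
class Dictionary {
public:
    // Return the word's symbol number, adding the word if it is new.
    std::uint16_t add_word(const std::string& word) {
        for (std::size_t i = 0; i < words_.size(); ++i)
            if (words_[i] == word) return static_cast<std::uint16_t>(i);
        words_.push_back(word);
        return static_cast<std::uint16_t>(words_.size() - 1);
    }
    std::size_t size() const { return words_.size(); }

private:
    std::vector<std::string> words_;
};

// Simplified stand-in for MODEL: it keeps only symbol numbers and borrows
// the dictionary, so several models can share one copy of the vocabulary.
class Model {
public:
    Model(std::uint8_t order, Dictionary* dictionary)
        : order_(order), dictionary_(dictionary) {}

    void learn(const std::vector<std::string>& words) {
        for (const auto& w : words) symbols_.push_back(dictionary_->add_word(w));
    }
    std::uint8_t order() const { return order_; }
    std::size_t symbol_count() const { return symbols_.size(); }

private:
    std::uint8_t order_;
    Dictionary* dictionary_;  // not owned; must outlive the model
    std::vector<std::uint16_t> symbols_;
};
```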

And yet, in another project that I was working on last year, I discussed similarities between the Eliza algorithm and the C preprocessor. So it should be possible to implement a 40-line version of Eliza that works just like the original. Likewise, although the original MegaHal is almost 4000 lines, most of that is actually boilerplate code for allocating data structures, adding and deleting nodes in lists and trees, and so on, which can also be moved to a common core.
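To give a feel for how small the core can be, here is a hypothetical keyword-and-template responder in the spirit of ELIZA. It is neither Weizenbaum's script nor the 40-line version mentioned above: the three rules are made up for illustration, keywords are matched as naive substrings, and reflect() swaps first and second person in the matched text, much like a macro substitution pass.

```cpp
#include <cctype>
#include <cstddef>
#include <map>
#include <sstream>
#include <string>
#include <vector>

// A keyword and a response template; "*" in the template is replaced by
// whatever followed the keyword, with first and second person swapped.
struct Rule {
    std::string keyword;
    std::string response;
};

const std::vector<Rule> kRules = {
    {"i feel", "Why do you feel *?"},
    {"i am", "How long have you been *?"},
    {"my", "Tell me more about your *."},
};

// Swap first and second person, word by word (a simple substitution pass).
std::string reflect(const std::string& text) {
    static const std::map<std::string, std::string> swaps = {
        {"i", "you"}, {"me", "you"}, {"my", "your"}, {"am", "are"},
        {"you", "I"}, {"your", "my"}};
    std::istringstream in(text);
    std::string word, out;
    while (in >> word) {
        const auto it = swaps.find(word);
        if (!out.empty()) out += ' ';
        out += (it != swaps.end()) ? it->second : word;
    }
    return out;
}

std::string respond(std::string input) {
    // Normalize: lower-case, and turn punctuation into spaces.
    for (auto& c : input) {
        const unsigned char u = static_cast<unsigned char>(c);
        c = std::ispunct(u) ? ' ' : static_cast<char>(std::tolower(u));
    }
    // First matching rule wins (naive substring match).
    for (const auto& rule : kRules) {
        const std::size_t pos = input.find(rule.keyword);
        if (pos == std::string::npos) continue;
        std::string reply = rule.response;
        reply.replace(reply.find('*'), 1, reflect(input.substr(pos + rule.keyword.size())));
        return reply;
    }
    return "Please go on.";
}
```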

Now, if you don't believe what you are seeing here, either you have never experienced Mega-Hal, or you think that what you are seeing is a deep fake. Trust me, it isn't. It is as if Mega-Hal is trying to learn to write code, and then it somehow has this brilliantly wicked moment of lucidity. For those who know Mega-Hal, this sort of thing is nothing new. Yet let's look at part of last night's exchange again.

MegaHAL: I love to write my own node<char*>, node_list<char*>

MegaHAL: m_nlist.m_nbegin = marker.

User: I love to make love, especially with a woman.

MegaHAL: This project will include an all-in-one spectrum and logic

MegaHAL: analyzer, a robotics control platform, and a woman.

User: Now we are getting somewhere!

Maybe this is just the "Pygmalion Principle" at work, and the tendency is to cherry-pick the results that seem interesting. Yet it should seem obvious that something has been accomplished, for it is "as if" I had created a genetic algorithm of sorts, even though I actually used a widely available piece of open-source code, i.e., Mega-Hal. I then trained it with a series of log entries that I wrote about the process of trying to design a new type of compiler, and how I think a new type of AI might work, even while thinking about wanting to create a set of genetic algorithms that can be used to evolve a kind of "digital DNA". Thus the initial genetic algorithm might be just a classic Markov model operating on a set of articles written about compiler design, digital DNA, and AI. And it works!
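A minimal sketch of the "classic Markov model" part, assuming word-level contexts of a fixed order. MegaHAL keeps comparable counts in its TREE nodes (the count and usage fields), in both a forward and a backward direction; this sketch keeps only forward counts in std::map, and MarkovModel is a hypothetical name.

```cpp
#include <cstddef>
#include <map>
#include <string>
#include <vector>

class MarkovModel {
    using Context = std::vector<std::string>;

public:
    explicit MarkovModel(std::size_t order) : order_(order) {}

    // Count, for every run of `order` words, which word came next.
    void learn(const std::vector<std::string>& words) {
        for (std::size_t i = order_; i < words.size(); ++i) {
            const Context ctx(words.begin() + (i - order_), words.begin() + i);
            ++counts_[ctx][words[i]];
            ++usage_[ctx];
        }
    }

    // P(next | context) estimated as count / usage; 0 for unseen events.
    double probability(const std::vector<std::string>& ctx, const std::string& next) const {
        const auto u = usage_.find(ctx);
        if (u == usage_.end()) return 0.0;
        const auto& row = counts_.at(ctx);
        const auto c = row.find(next);
        return c == row.end() ? 0.0 : static_cast<double>(c->second) / u->second;
    }

private:
    std::size_t order_;
    std::map<Context, std::map<std::string, unsigned>> counts_;  // context -> next word -> count
    std::map<Context, unsigned> usage_;                          // context -> times seen
};
```

Feeding -log of these probabilities into a surprise score is what lets such a model prefer replies that are unexpected yet still grounded in the training text.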

Now we are getting somewhere!

Modelling Neuronal Spike Codes

Using principles of sigma-delta modulation techniques to carry out the mathematical operations that are associated with a neuronal topology

glgorman
