-

Beyond The (uncanny) Valley of the Shadows

05/29/2023 at 07:55

I was thinking about writing a log entry titled "Avoiding Gestalt Conflicts". It would have begun with a repeat of a previous rant: if "nothing is better than steak and eggs for breakfast", and if "stale cereal is better than nothing", then does it not follow that if A is better than B, and B is better than C, then A must also be better than C? Besides that, where are Alice, Tweedledum, and Tweedledee when we need them?

Well, you get the idea, or do you? If you know what Galois fields are, then, in addition to the notional concepts of "frame" and "context", you should also be able to contemplate some concept of "circular dominance". Based on what I think I remember about those little neon flasher kits that Radio Shack sold when I was a kid, I suspect that one of the things that "random connections of neuronal circuits" might end up doing, so to speak, is "adventitiously" creating ad hoc ring oscillators, which in turn might give rise to spontaneous Hopfield networks.
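To make the ring-oscillator idea a little more concrete, here is a minimal discrete-time sketch, assuming idealized McCulloch-Pitts threshold neurons rather than anything biologically realistic. Each neuron inhibits its successor, so it behaves like a NOT gate with one step of delay; an odd-length ring started with a single "edge" in it keeps that edge circulating with period 2N, while an even-length ring simply latches. The names `ring_step` and `ring_period` are hypothetical.

```cpp
#include <vector>

// A ring of McCulloch-Pitts neurons. Each neuron has one inhibitory synapse
// (weight -1) from its predecessor and a bias of +0.5, so it fires exactly
// when its predecessor is silent: a NOT gate with one time step of delay.
std::vector<int> ring_step(const std::vector<int>& s) {
    const int n = static_cast<int>(s.size());
    std::vector<int> next(n);
    for (int i = 0; i < n; ++i) {
        const int pred = s[(i + n - 1) % n];
        const double activation = -1.0 * pred + 0.5;
        next[i] = activation > 0.0 ? 1 : 0;
    }
    return next;
}

// Steps until the ring first returns to its starting state, or -1 if it
// does not do so within max_steps.
int ring_period(std::vector<int> s, int max_steps = 1000) {
    const std::vector<int> start = s;
    for (int t = 1; t <= max_steps; ++t) {
        s = ring_step(s);
        if (s == start) return t;
    }
    return -1;
}
```

With three neurons started as {1, 0, 1}, the ring returns to its starting pattern every 6 steps; four neurons started as {1, 0, 1, 0} latch immediately, which is why odd counts matter here just as they do for inverter-based ring oscillators.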

Now, let's suppose that when such networks fire off in random patterns for a long enough period of time, they do something akin to the accumulation of lactic acid in muscle. In effect, they might experience fatigue: a network oscillates for a while and then shuts down, possibly because it becomes inhibited by another network that comes online. If that second circuit then fatigues, what happens? Does the first circuit come back online, now that it has had some time to "rest and refresh"?
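The fatigue idea can be sketched as a two-network relaxation oscillator, in the spirit of a half-center oscillator. This is a toy model under stated assumptions: fatigue grows linearly while a network fires, decays geometrically while it rests, and mutual inhibition is reduced to "only one network is on at a time". The `FatiguePair` struct and its parameter values are made up for illustration.

```cpp
// Two networks that inhibit each other so that only one is "online" at a
// time. The active one accumulates fatigue (think lactic acid); the resting
// one recovers. When the active network's fatigue reaches the limit it is
// shut down and the rested network takes over.
struct FatiguePair {
    double fatigue[2] = {0.0, 0.0};
    int active = 0;           // index of the network currently firing
    double rise = 0.0625;     // fatigue gained per step while firing
    double recovery = 0.9;    // fatigue multiplier per step while resting
    double limit = 1.0;       // fatigue level at which the active network gives out

    // Advance one time step and return the index of the network now active.
    int step() {
        const int resting = 1 - active;
        fatigue[active] += rise;
        fatigue[resting] *= recovery;
        if (fatigue[active] >= limit) active = resting;  // hand over to the rested network
        return active;
    }
};
```

Because a network always starts its turn with some non-negative residual fatigue, no turn can last longer than limit / rise = 16 steps, so the pair is guaranteed to keep alternating; the residual left over from the previous turn is what shortens later turns.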

I am imagining a classic double-scroll oscillator here, of the type that can be modeled with Chua's circuit. Yet I want to try implementing it using a neuronal topology, as described above.
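For reference, the double scroll itself can be reproduced numerically from the standard dimensionless Chua equations, dx/dt = alpha * (y - x - f(x)), dy/dt = x - y + z, dz/dt = -beta * y, where f(x) is the piecewise-linear Chua diode characteristic. The sketch below uses the commonly quoted double-scroll parameters (alpha = 15.6, beta = 28, m0 = -1.143, m1 = -0.714) and fourth-order Runge-Kutta integration. It is the textbook circuit model, not the neuronal version this log is aiming for, and `Chua` and `chua_x_range` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

using State = std::array<double, 3>;

// Dimensionless Chua equations with the classic double-scroll parameters.
struct Chua {
    double alpha = 15.6, beta = 28.0, m0 = -1.143, m1 = -0.714;

    // Piecewise-linear characteristic of the Chua diode.
    double f(double x) const {
        return m1 * x + 0.5 * (m0 - m1) * (std::fabs(x + 1.0) - std::fabs(x - 1.0));
    }

    State deriv(const State& s) const {
        return {alpha * (s[1] - s[0] - f(s[0])), s[0] - s[1] + s[2], -beta * s[1]};
    }

    // One classic fourth-order Runge-Kutta step of size h.
    State rk4(const State& s, double h) const {
        auto shifted = [&s](const State& k, double scale) {
            return State{s[0] + scale * k[0], s[1] + scale * k[1], s[2] + scale * k[2]};
        };
        const State k1 = deriv(s);
        const State k2 = deriv(shifted(k1, h / 2));
        const State k3 = deriv(shifted(k2, h / 2));
        const State k4 = deriv(shifted(k3, h));
        State out;
        for (int i = 0; i < 3; ++i)
            out[i] = s[i] + h / 6 * (k1[i] + 2 * k2[i] + 2 * k3[i] + k4[i]);
        return out;
    }
};

// Integrate from s for `steps` RK4 steps and report the range of x visited.
void chua_x_range(State s, int steps, double h, double& min_x, double& max_x) {
    const Chua chua{};
    min_x = max_x = s[0];
    for (int i = 0; i < steps; ++i) {
        s = chua.rk4(s, h);
        min_x = std::min(min_x, s[0]);
        max_x = std::max(max_x, s[0]);
    }
}
```

Starting from (0.7, 0, 0) with a step of 0.01, the trajectory should stay bounded while wandering back and forth between the two scrolls, which are centred on the outer equilibria at x = +1.5 and x = -1.5.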

Alright, I found a public-domain graphic of the chaotic region of the standard logistic map on Wikipedia, and for those familiar and unfamiliar alike, it looks like this:

[Figure: the chaotic region of the standard logistic map (public-domain image from Wikipedia)]
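The same structure can also be probed numerically: iterate x(n+1) = r * x(n) * (1 - x(n)), throw away the transient, and count how many distinct values the orbit keeps visiting. This is a minimal sketch with a hypothetical `logistic_attractor` helper; the transient length, sample count, and tolerance are arbitrary choices.

```cpp
#include <cmath>
#include <vector>

// Iterate the logistic map x -> r x (1 - x), discard a transient, then
// collect the distinct values the orbit keeps visiting (to within tol).
std::vector<double> logistic_attractor(double r, double x0 = 0.5,
                                       int transient = 10000, int samples = 64,
                                       double tol = 1e-6) {
    double x = x0;
    for (int i = 0; i < transient; ++i) x = r * x * (1.0 - x);
    std::vector<double> seen;
    for (int i = 0; i < samples; ++i) {
        x = r * x * (1.0 - x);
        bool found = false;
        for (double v : seen)
            if (std::fabs(v - x) < tol) { found = true; break; }
        if (!found) seen.push_back(x);
    }
    return seen;
}
```

At r = 2.8 the orbit settles on the fixed point 1 - 1/r; at r = 3.2 it alternates between two values, at r = 3.5 between four, and at r = 3.9, inside the chaotic region, practically every sample is new.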

And in other news, one of my favorite pieces to practice on the piano is an "easy" version of The Animals' "House of the Rising Sun". So for a good time, if you have access to an electronic keyboard, try the pattern 1-2-3-5-4-3 in the right hand at 120 BPM, in 3/4 time, in the key of A minor. Then try adding the sequence A-C-D-F-A-E-A-E in the left hand, with the A as the top note and then going down to the C, and so on, so that the left thumb is on an A and the little finger is on a C. Try it. Five minutes to learn, a lifetime to master. The left hand plays one note per measure, so the 8-bar accompaniment should repeat, I think, 23 times. I know. I counted. And I think the "arrangement" just might work pretty well at, let's say, a church social, maybe adding a tambourine shake or two wherever they seem to belong throughout the song.

So is it possible that "adventitious" Hopfield networks that emerge spontaneously in real biological systems are the real sequence drivers? I think we are all taught, while growing up, that the cerebellum acts like some kind of sequencer, so the traditional model we think of might involve mechanical music boxes, digital counters, or ROM table lookups of the desired patterns.
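As a contrast to a ROM lookup, a Hopfield-style network with asymmetric Hebbian weights, W = sum over k of p(k+1) p(k)^T, will step through a stored sequence of patterns on its own. This is a sketch under simplifying assumptions (synchronous updates, +1/-1 units, mutually orthogonal patterns) and is not a claim about how the cerebellum actually works; `SequenceNet` is a hypothetical name.

```cpp
#include <cstddef>
#include <vector>

using Pattern = std::vector<int>;   // units take the values +1 / -1

// Hopfield-style network with asymmetric Hebbian weights that map each stored
// pattern onto the next one, W = sum_k p(k+1) p(k)^T (cyclically).
struct SequenceNet {
    int n;
    std::vector<std::vector<int>> w;

    explicit SequenceNet(const std::vector<Pattern>& seq)
        : n(static_cast<int>(seq[0].size())), w(n, std::vector<int>(n, 0)) {
        for (std::size_t k = 0; k < seq.size(); ++k) {
            const Pattern& from = seq[k];
            const Pattern& to = seq[(k + 1) % seq.size()];
            for (int i = 0; i < n; ++i)
                for (int j = 0; j < n; ++j)
                    w[i][j] += to[i] * from[j];
        }
    }

    // Synchronous update: every unit takes the sign of its weighted input.
    Pattern step(const Pattern& s) const {
        Pattern next(n);
        for (int i = 0; i < n; ++i) {
            int h = 0;
            for (int j = 0; j < n; ++j) h += w[i][j] * s[j];
            next[i] = h >= 0 ? 1 : -1;
        }
        return next;
    }
};
```

With three orthogonal 8-unit patterns a, b, c, the network cycles a, b, c, a, and so on, and it still lands on b when started from a with one unit flipped, so this kind of "sequencer" is somewhat error-tolerant.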

Yet I think there is something else going on here, and as good as deep learning has become, I think something important is missing. Now, I am not going to jump on the bandwagon about whether some AI or another might have become sentient, because I think that sentience requires "feeling", and that just isn't going to happen with deterministic finite automata. No, that's not what I am getting at. I am thinking of something else, since I was reading up on Wikipedia about how perceptrons work, and how any linearly separable problem can be solved with a single-layer perceptron network (of sufficient size, that is). Yet if a logical problem involves the exclusive-or operation, that operation requires a cascaded, multi-layer system, whether it is based on a perceptron model, traditional digital logic, or TensorFlow, for that matter.
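The XOR point is easy to check. The sketch below trains a single threshold unit with the classic perceptron learning rule, which converges for AND but can never fit XOR because no single line separates its classes, and then hand-wires a two-layer version (XOR = OR and not AND) that works. The helper names are hypothetical.

```cpp
#include <array>

// Threshold (step) activation.
int step_fn(double v) { return v > 0.0 ? 1 : 0; }

// Train one threshold unit with the perceptron rule on the four two-bit
// inputs and return how many of them it still gets wrong afterwards.
int train_perceptron(const std::array<int, 4>& targets, int epochs = 100) {
    static const int inputs[4][2] = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
    double w0 = 0.0, w1 = 0.0, b = 0.0;
    for (int e = 0; e < epochs; ++e)
        for (int k = 0; k < 4; ++k) {
            const int y = step_fn(w0 * inputs[k][0] + w1 * inputs[k][1] + b);
            const int err = targets[k] - y;   // -1, 0 or +1
            w0 += err * inputs[k][0];
            w1 += err * inputs[k][1];
            b += err;
        }
    int wrong = 0;
    for (int k = 0; k < 4; ++k)
        if (step_fn(w0 * inputs[k][0] + w1 * inputs[k][1] + b) != targets[k]) ++wrong;
    return wrong;
}

// Two cascaded layers of threshold units: a hidden OR unit and a hidden AND
// unit, combined by an output unit that fires for "OR and not AND".
int xor_net(int x1, int x2) {
    const int h_or = step_fn(x1 + x2 - 0.5);
    const int h_and = step_fn(x1 + x2 - 1.5);
    return step_fn(h_or - h_and - 0.5);
}
```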

So can we do something useful by creating some kind of neuronal macro-blocks that exchange information using sigma-delta signals? It seems to have just the right flavor of chaos on the one hand, and yet it seems like it might be very easy and efficient on the other.
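Here is one way such a macro-block might look, as a sketch under assumptions: each value in [0, 1] travels between blocks as a first-order sigma-delta bitstream, and a block computes a weighted sum by adding each weight whenever its input bit is 1, so no multiplier is needed. `sigma_delta` and `bitstream_weighted_sum` are hypothetical names, and the signed weights are plain doubles for clarity.

```cpp
#include <cstddef>
#include <vector>

// First-order sigma-delta modulator for a value x in [0, 1]: the density of
// 1s in the output stream approximates x.
std::vector<int> sigma_delta(double x, std::size_t n) {
    std::vector<int> bits(n);
    double acc = 0.0;
    for (std::size_t k = 0; k < n; ++k) {
        acc += x;
        if (acc >= 1.0) { bits[k] = 1; acc -= 1.0; } else { bits[k] = 0; }
    }
    return bits;
}

// A "neuron" macro-block: weighted sum of several 1-bit input streams of equal
// length. Each weight is only ever added or skipped, never multiplied.
double bitstream_weighted_sum(const std::vector<std::vector<int>>& streams,
                              const std::vector<double>& weights) {
    const std::size_t n = streams[0].size();
    double total = 0.0;
    for (std::size_t k = 0; k < n; ++k)
        for (std::size_t i = 0; i < streams.size(); ++i)
            if (streams[i][k]) total += weights[i];
    return total / static_cast<double>(n);
}
```

With inputs 0.5 and 0.25 encoded over 1024 bits and weights 0.8 and -0.4, the block returns 0.8 * 0.5 - 0.4 * 0.25 = 0.3; shorter streams trade precision for speed.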

Now, as discussed earlier in one of my other projects (Rubidium, I think it was), I implemented a "mostly" correct version of IEEE-754 floating-point arithmetic in C++ as part of a calculator test project. There I assumed a system that had C++-style classes but no logical operations other than bitwise NOR and logical shifts. The 32-bit IEEE-754 multiplication looks something like this, and it appears to be mostly correct, even though I haven't yet implemented de-normalized inputs:

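```cpp
// 32-bit IEEE-754 multiply for the `real` class (de-normalized inputs,
// overflow, underflow, NaN and infinity are not handled).
real real::operator * (real arg)
{
    real result;
    unsigned int _sign = this->s ^ arg.s;             // sign = XOR of signs
    short exp1 = this->exp() + arg.exp() - 127;       // remove one copy of the bias
    // Operands are (1 + a) and (1 + b), so the product is 1 + a + b + a*b.
    unsigned int frac1 = this->f, frac2 = arg.f;
    // Split into 16-bit high and 7-bit low parts; unsigned int (not unsigned
    // short) keeps s1 * s2 from overflowing a signed int after promotion.
    unsigned int s1 = frac1 >> 7, s2 = frac2 >> 7;
    unsigned int c1 = frac1 & 0x7f, c2 = frac2 & 0x7f;
    unsigned int frac3 = (c1 * s2 + c2 * s1) >> 16;   // cross terms of a*b
    unsigned int frac4 = (s1 * s2) >> 9;              // high*high term of a*b
    unsigned int fracr = frac1 + frac2 + frac3 + frac4;
    if (fracr > 0x007FFFFF) {                         // significand >= 2.0: renormalize
        fracr = (fracr + (1u << 23)) >> 1;
        exp1++;
    }
    result.dw = (fracr & 0x007FFFFF) | ((unsigned int)exp1 << 23) | (_sign << 31);
    return result;
}
```

Now, is it possible to take an actual compiler that normally generates code for a traditional CPU and instead compile for a system built from nothing but NOR gates, or something like that? And beyond that, can we replace the NOR gates, counters, etc., with "adventitious neuronal circuits", partly just to prove that it can be done, and partly to perhaps give the next generation of AI some kind of "head start"?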
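To check the arithmetic outside of the calculator project, here is a hypothetical stand-alone sketch of the same split-multiply algorithm, operating on raw `float` bit patterns instead of the `real` class, whose definition isn't shown here. The names `split_mul`, `bits_of`, `float_of` and `rel_error` are made up for this example; like the original, it assumes normal, finite inputs and a normal result, and it truncates rather than rounds.

```cpp
#include <cmath>
#include <cstdint>
#include <cstring>

// Reinterpret float <-> raw IEEE-754 bits (memcpy avoids aliasing problems).
static std::uint32_t bits_of(float x) { std::uint32_t u; std::memcpy(&u, &x, sizeof u); return u; }
static float float_of(std::uint32_t u) { float x; std::memcpy(&x, &u, sizeof x); return x; }

// Same algorithm as real::operator*, for normal, finite inputs and results.
float split_mul(float x, float y) {
    const std::uint32_t a = bits_of(x), b = bits_of(y);
    const std::uint32_t sign = (a ^ b) >> 31;
    int exp1 = static_cast<int>((a >> 23) & 0xFF) + static_cast<int>((b >> 23) & 0xFF) - 127;
    const std::uint32_t frac1 = a & 0x7FFFFF, frac2 = b & 0x7FFFFF;
    const std::uint32_t s1 = frac1 >> 7, s2 = frac2 >> 7;      // high 16 bits
    const std::uint32_t c1 = frac1 & 0x7F, c2 = frac2 & 0x7F;  // low 7 bits
    std::uint32_t fracr = frac1 + frac2 + ((c1 * s2 + c2 * s1) >> 16) + ((s1 * s2) >> 9);
    if (fracr > 0x7FFFFF) {               // significand >= 2.0: renormalize
        fracr = (fracr + (1u << 23)) >> 1;
        ++exp1;
    }
    return float_of((fracr & 0x7FFFFF) | (static_cast<std::uint32_t>(exp1) << 23) | (sign << 31));
}

// Relative error of split_mul against the exact (double-precision) product.
double rel_error(float x, float y) {
    const double exact = static_cast<double>(x) * static_cast<double>(y);
    return std::fabs(static_cast<double>(split_mul(x, y)) - exact) / std::fabs(exact);
}
```

Products whose fractions have no low-order bits, such as 1.5 * 1.5 = 2.25, come out exact; in general the dropped c1 * c2 term and the two truncating shifts keep the error to a few units in the last place, well under one part in a million.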

Then I could compile MEGAHAL, or SHRDLU, or whatever, and whenever the Markov model needs to do a calculation (which in the case of MEGAHAL would be based on the "entropy of surprise"), the whole thing would be running on virtual neural circuits.
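For the "entropy of surprise" part, the underlying quantity is the self-information of a sequence of events under the model: the sum of -log2(p) over the events. The sketch below computes only that generic quantity; MEGAHAL's own reply-scoring code may weight and normalize it differently, and `surprise_bits` is a hypothetical name.

```cpp
#include <cmath>
#include <vector>

// Self-information ("surprise") of a sequence of events, in bits, given the
// probability the model assigned to each event: the sum of -log2(p).
double surprise_bits(const std::vector<double>& probs) {
    double bits = 0.0;
    for (double p : probs) bits -= std::log2(p);
    return bits;
}
```

For example, two events that the model predicted with probabilities 0.5 and 0.25 carry 1 + 2 = 3 bits of surprise, while an event predicted with certainty carries none.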

It seems like it could work on a Parallax P2, with the added option of having some actual analog fun going on, just in case. Hopefully, that puts a warm feeling in your motivator.

Stay Tuned.

-

Some Copyright and Other Information

05/12/2023 at 16:28

I uploaded a background image for this project that I found on Wikipedia. The original source can be found at https://en.wikipedia.org/wiki/File:Delta-Sigma_schematic.svg, which also gives the copyright information: that particular image is made available under a Creative Commons license. For use with this project, I converted the file to JPEG and cropped the image to better fit the way that Hackaday seems to want to further scale and crop whatever you upload for display purposes.

Later on, I will be creating a new project on GitHub entitled Code Renaissance, which I expect will become a repository for some classic AI material, as well as other things that might be useful. One approach that I might want to try is to obtain the source code for the classic chatbot MEGAHAL and then modify it to use the modified TensorFlow-style evaluator that I have described so far, i.e., by attempting to use sigma-delta modulation as an "intentionally noisy" ALU that can run with reasonable efficiency on any microcontroller.

Now, as it turns out, the Parallax Propeller P2 chip might be ideal here, since it can perform sigma-delta analog-to-digital conversion on all 64 pins. This suggests that implementing some kind of simple neural network might just be a matter of choosing some pins to act as outputs and some to act as inputs, tying a group of outputs together via resistors, and letting them feed into the pins being used as inputs.
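Tying outputs together through resistors computes a weighted average rather than an arbitrary weighted sum. Assuming the input pin sensing the node draws negligible current, Millman's theorem gives the node voltage as sum(V_i / R_i) / sum(1 / R_i), so each output's weight is its conductance normalized by the total conductance. The helper name and the 3.3 V logic levels used in the example below are assumptions.

```cpp
#include <cstddef>
#include <vector>

// Voltage at a node where several output pins are tied together through
// resistors (Millman's theorem), assuming the input pin sensing the node
// draws negligible current.
double summing_node_voltage(const std::vector<double>& volts,
                            const std::vector<double>& ohms) {
    double num = 0.0, den = 0.0;
    for (std::size_t i = 0; i < volts.size(); ++i) {
        num += volts[i] / ohms[i];   // current each source would push into 0 V
        den += 1.0 / ohms[i];        // total conductance to the node
    }
    return num / den;
}
```

Two outputs at 3.3 V and 0 V through equal 10 kΩ resistors give 1.65 V; raising the second resistor to 30 kΩ shifts the node to about 2.48 V. Since every weight is positive, inhibitory connections would need inverted outputs or some other trick.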

Then we can attempt to implement some of the classic neural network models, like Hopfield networks, feed-forward networks trained with back-propagation, and adaptive resonance, in addition to the contemporary transformer model. How that might fit into creating a chatbot that can act as a personal assistant or provide some "simulated companionship" remains to be seen, but nonetheless, such a project seems very doable.

-

Then along came a Spider

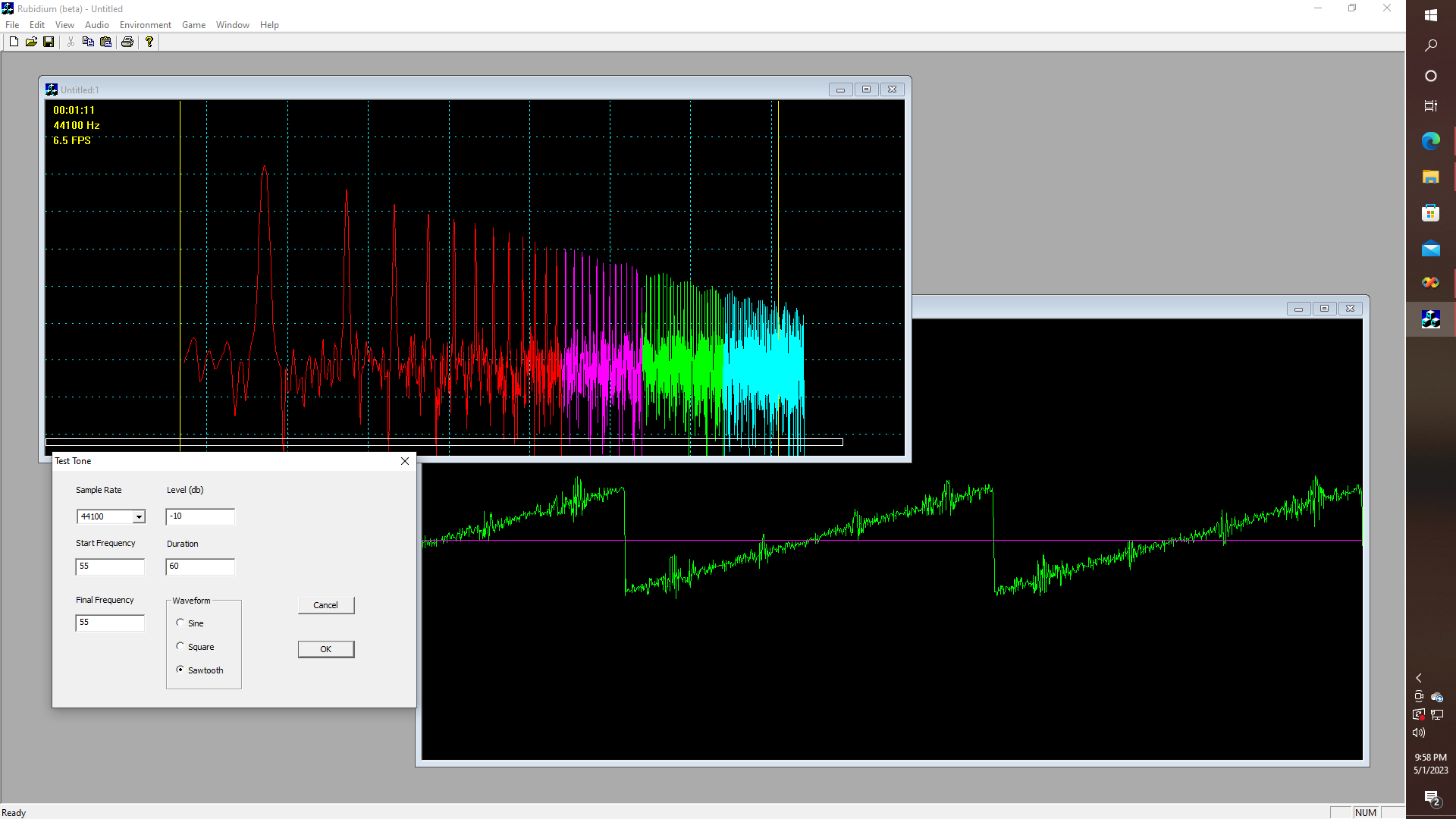

05/02/2023 at 05:18

Or a sawtooth. OK, a while back I wrote an application that simulates how a sigma-delta analog-to-digital converter works, and this is what I came up with when I used a VERY low bit rate: a bitstream model with only 32 bits per sample, which was in turn fed through a Gaussian window function to recreate 16-bit stereo WAV audio from the 1-bit data stream. The obvious question, therefore, is just how low can we go and still get something acceptable in some context, like speech recognition or music transcription, if all we need to do is recognize pitch or detect when a particular vowel is being spoken.
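A minimal reconstruction of that kind of experiment, under assumptions: a first-order bipolar sigma-delta modulator produces 32 bits per output sample, and each output sample is recovered as a Gaussian-weighted average of the surrounding bits (sigma of 16 bits, window truncated at four sigma). The original application's code isn't shown, so `sd_modulate`, `gaussian_decimate` and these parameters are hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// First-order bipolar sigma-delta modulator: input samples in [-1, 1],
// output bits coded as +1 / -1.
std::vector<int> sd_modulate(const std::vector<double>& x) {
    std::vector<int> bits(x.size());
    double integrator = 0.0;
    for (std::size_t k = 0; k < x.size(); ++k) {
        const int y = integrator >= 0.0 ? 1 : -1;   // 1-bit quantizer
        bits[k] = y;
        integrator += x[k] - y;                    // integrate the error
    }
    return bits;
}

// One output sample per `osr` bits: a Gaussian-weighted average of the bits
// around the centre of each output slot (window truncated at +/- 4 sigma).
std::vector<double> gaussian_decimate(const std::vector<int>& bits, int osr, double sigma) {
    const long n = static_cast<long>(bits.size());
    const long half = static_cast<long>(std::ceil(4.0 * sigma));
    std::vector<double> out;
    for (long c = osr / 2; c < n; c += osr) {
        double num = 0.0, den = 0.0;
        const long lo = std::max(0L, c - half), hi = std::min(n - 1, c + half);
        for (long j = lo; j <= hi; ++j) {
            const double u = static_cast<double>(j - c) / sigma;
            const double w = std::exp(-0.5 * u * u);
            num += w * bits[j];
            den += w;
        }
        out.push_back(num / den);
    }
    return out;
}
```

For a constant input of 0.3, the interior output samples stay within a few hundredths of the true value; whether that is good enough for pitch or vowel detection is exactly the open question.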

[Figure: waveform reconstructed by the low-bit-rate sigma-delta simulation]

And of course, it looks noisy, like something out of a science-fiction TV show like Lost in Space, where John Robinson speculates that some derelict alien spacecraft has a "crystalline power source", or maybe the film Fantastic Voyage, where a team of scientists must perform brain surgery on another scientist by boarding a submarine that is then shrunk down to the size of a red blood cell.

But wait a minute! Nowadays we have fractal coding, chaos theory, and some notional constructs that establish some kind of covariance between the so-called "fractal dimension", Feigenbaum's constant, and a bunch of other stuff, which more rigorously generalizes the idea that there is some kind of relationship between the "logistic map" and the otherwise very broad field of "convolutional codes". And of course, if sigma-delta is a type of convolutional code, then why not build something based on Chua's circuit that actually tries to model a neuron or two, and see if we can trick an otherwise preposterously simple circuit into doing something like pitch or even vowel recognition, by using a hybrid approach that incorporates op-amps, flip-flops, and possibly a 555 or two?
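Feigenbaum's constant itself falls out of the logistic map directly. The sketch below uses Newton's method to find the superstable parameters R_n, where x = 1/2 lies on a cycle of period 2^n, and takes the ratio of successive gaps, (R_(n-1) - R_(n-2)) / (R_n - R_(n-1)), which approaches delta ≈ 4.6692. This is a standard numerical approach rather than anything specific to this project, and the function names are hypothetical.

```cpp
#include <cmath>

// Newton's method for the superstable parameter R_n of the logistic map:
// solve g(r) = f_r^(2^n)(1/2) - 1/2 = 0, carrying dx/dr along the orbit.
double superstable_r(int n, double guess) {
    double r = guess;
    for (int it = 0; it < 100; ++it) {
        double x = 0.5, dxdr = 0.0;
        for (long k = 0; k < (1L << n); ++k) {
            dxdr = x * (1.0 - x) + r * (1.0 - 2.0 * x) * dxdr;  // chain rule, uses old x
            x = r * x * (1.0 - x);
        }
        const double step = (x - 0.5) / dxdr;
        r -= step;
        if (std::fabs(step) < 1e-15) break;
    }
    return r;
}

// Estimate Feigenbaum's delta from successive superstable parameters.
double feigenbaum_delta(int max_n) {
    double r_prev2 = 2.0;                   // R_0: superstable fixed point
    double r_prev1 = 1.0 + std::sqrt(5.0);  // R_1: superstable 2-cycle
    double delta = 4.7;                     // rough starting guess for extrapolation
    for (int n = 2; n <= max_n; ++n) {
        const double guess = r_prev1 + (r_prev1 - r_prev2) / delta;
        const double r = superstable_r(n, guess);
        delta = (r_prev1 - r_prev2) / (r - r_prev1);
        r_prev2 = r_prev1;
        r_prev1 = r;
    }
    return delta;
}
```

Each new R_n is found from an extrapolated guess using the current estimate of delta, so Newton's method starts very close to the intended root; by n = 10 the estimate should be close to 4.6692.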

Modelling Neuronal Spike Codes

Using principles of sigma-delta modulation techniques to carry out the mathematical operations that are associated with a neuronal topology

glgorman
