Face detector written mostly in assembly, with a model size of roughly 25 kB (with 8-bit quantized weights). Sorry, can't share any details just yet…

SPEED!

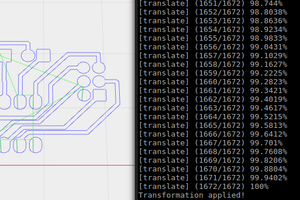

My priority is to get a model to run fast enough on an ESP32-S3. This means we have to make some tough decisions... Even though you should always keep the quality of your code to a high standard, when it comes to pure optimization nothing is sacred. If you're lucky, the compiler will do most of the work of optimizing the code, but when it comes to custom instruction set extensions like the ones found in the ESP32-S3, we're out of luck. We have a few choices, and I would always advise starting with a high-level option, like an API that abstracts some of the internals, and working your way down if you run into obstacles. This is what I did here: I went from ESP-DL to ESP-DSP to raw assembly in order to get things done. Maintainability, reusability, readability? Out the window! And in return we get full control over the SIMD instructions that make this model go fast!

ESP-DL

The AI framework built by Espressif, which contains samples showing how to do face detection and face recognition, can be found here: esp-dl (github). This all looks great at first sight, so I started building an interface between PyTorch and the model format this framework expects. I built a custom QuantizationObserver to make sure the quantization to int8 takes the limitations of the ESP-DL framework into account, and converted my model. I quickly ran into some issues / bugs, probably on my side, that needed to be resolved.

So I started to look into the source code of the framework to see if I was using the proper permutations of data, and hit a brick wall in the form of library files. No source other than the headers is shared with this project, making it a black box. It also requires you to use a custom model conversion tool, for which no source is shared either. I can't work like that, so I ditched the idea of using this framework.

ESP-DSP

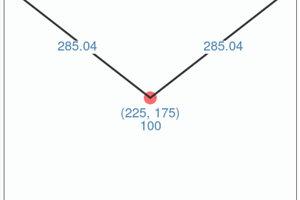

The DSP framework built by Espressif exposes an API that uses the extended instructions (SIMD) present on the ESP32 and ESP32-S3 SoCs: esp-dsp (github). It has functions like convolution and matrix multiplication, so I figured I could use those to create my own convolution / linear layers. In the end, a convolution or linear layer is just a reordering of data (unfold), a matrix multiplication, and a fold to the output shape. A matrix multiplication is just a set of dot products. There is a header, dspi_dotprod.h, whose functions take 2D "images" (one input, one kernel) and perform a dot product on quantized data ([u]int8, [u]int16). It suggests that you can set up the strides for x and y, which should make it possible to define patches of a source image, keeping the stride of the source image, without copying data around.
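To make the "convolution is just a set of dot products" idea concrete, here is a minimal sketch in plain, unoptimized C. It is not the ESP-DSP API: the names `patch_dot_s8` and `conv2d_s8` are hypothetical, and it assumes a single-channel image, no padding, and an output stride of 1. Each output value is the dot product of the kernel with a patch that is read in place through the row stride of the source image, so nothing is copied.

```c
#include <stddef.h>
#include <stdint.h>

/* Dot product of a kw x kh kernel with a patch of a larger image.
 * The patch is addressed in place: consecutive patch rows are
 * image_stride elements apart, so no data is copied. */
static int32_t patch_dot_s8(const int8_t *patch, size_t image_stride,
                            const int8_t *kernel, size_t kw, size_t kh)
{
    int32_t acc = 0;
    for (size_t y = 0; y < kh; ++y) {
        const int8_t *row = patch + y * image_stride;
        for (size_t x = 0; x < kw; ++x) {
            acc += (int32_t)row[x] * (int32_t)kernel[y * kw + x];
        }
    }
    return acc;
}

/* Single-channel 2D convolution (no padding, stride 1) expressed as one
 * dot product per output pixel. out must hold (w-kw+1)*(h-kh+1) values. */
static void conv2d_s8(const int8_t *image, size_t w, size_t h,
                      const int8_t *kernel, size_t kw, size_t kh,
                      int32_t *out)
{
    const size_t ow = w - kw + 1;
    const size_t oh = h - kh + 1;
    for (size_t oy = 0; oy < oh; ++oy) {
        for (size_t ox = 0; ox < ow; ++ox) {
            out[oy * ow + ox] =
                patch_dot_s8(image + oy * w + ox, w, kernel, kw, kh);
        }
    }
}
```

For a 3x3 image holding 1 to 9 and the 2x2 kernel {1, 0, 0, 1}, this produces the four sums 6, 8, 12 and 14. The results are kept as 32-bit accumulators here; narrowing them back to 8 bits is a separate step.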

I wrote a function to split an image up into patches the size of the kernel and put them through this function, but I got horrible results. Luckily this project does have source code available, assembly code to be exact, so I dove into the code and realized that it falls back to slow code if the patch isn't aligned properly or has weird sizes, and that there is a slew of other constraints on the data before it will run an optimized path. Furthermore, the function does not saturate the result, meaning that if your dot product is larger than a certain maximum, it simply takes the lower 8 bits instead of clamping it to the output range.
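The difference between taking the lower 8 bits and saturating is easy to show in a few lines of C. This is only an illustrative sketch with hypothetical helper names (`narrow_wrap_s8`, `narrow_saturate_s8`), not code from ESP-DSP, and it ignores any shift that may be applied to the accumulator before narrowing.

```c
#include <stdint.h>

/* Keep only the low 8 bits of the accumulator and reinterpret them as a
 * signed value. Out-of-range results wrap around. */
static int8_t narrow_wrap_s8(int32_t acc)
{
    uint8_t low = (uint8_t)acc;                 /* well-defined: acc mod 256 */
    return (int8_t)(low < 128 ? low : low - 256);
}

/* Clamp the accumulator to the int8 range before narrowing. */
static int8_t narrow_saturate_s8(int32_t acc)
{
    if (acc > INT8_MAX) return INT8_MAX;
    if (acc < INT8_MIN) return INT8_MIN;
    return (int8_t)acc;
}
```

With an accumulator of 300, wrapping gives 44 while saturating gives 127; with -200, wrapping gives 56 (the sign flips) while saturating gives -128. For a neural network layer, the wrapped values are effectively noise, whereas the saturated ones are at least the closest representable output.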

EE.VMULAS.S8.ACCX.LD.IP

Because the ESP-DSP project has its source code available, I decided to look into it and use assembly language to do exactly what I need. And this is where the world of possibilities opened up. The star of the show: EE.VMULAS.S8.ACCX.LD.IP, an instruction that performs a fused multiply + add + load, and lets me increment the pointer to the source image at my own discretion (with some acceptable restrictions). Now we are in Xtensa territory, which has a properly documented ISA. I...
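As a rough mental model of what a fused multiply + add + load buys you, here is a scalar C sketch. It is not the instruction's exact semantics and not the assembly used in this project: the 16 lanes per vector, the `int64_t` accumulator standing in for ACCX, and the names `vec_s8`, `mac16`, `mac_load_step` and `dot_s8` are all assumptions made for illustration. The point is that one step both accumulates the current vectors and fetches the next one, advancing the source pointer by a step the caller chooses.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { LANES = 16 }; /* assumption: one vector register holds 16 int8 lanes */

typedef struct { int8_t lane[LANES]; } vec_s8;

/* Sum of lane-wise products of two vectors. */
static int64_t mac16(const vec_s8 *x, const vec_s8 *y)
{
    int64_t sum = 0;
    for (int i = 0; i < LANES; ++i) {
        sum += (int32_t)x->lane[i] * (int32_t)y->lane[i];
    }
    return sum;
}

/* One fused step: accumulate x.y, then load the next LANES bytes from *src
 * into *loaded and post-increment *src by step bytes. The multiply happens
 * before the load, so loaded may be the same register as x. */
static void mac_load_step(int64_t *acc, const vec_s8 *x, const vec_s8 *y,
                          vec_s8 *loaded, const int8_t **src, ptrdiff_t step)
{
    *acc += mac16(x, y);
    memcpy(loaded->lane, *src, LANES);
    *src += step;
}

/* Dot product over blocks * LANES elements, software-pipelined so that the
 * load of the next block of a rides along with the current multiply. */
static int64_t dot_s8(const int8_t *a, const int8_t *b, size_t blocks)
{
    if (blocks == 0) return 0;
    int64_t acc = 0;
    vec_s8 qa, qb;
    memcpy(qa.lane, a, LANES);          /* prime the pipeline */
    a += LANES;
    for (size_t i = 0; i + 1 < blocks; ++i) {
        memcpy(qb.lane, b + i * LANES, LANES);
        mac_load_step(&acc, &qa, &qb, &qa, &a, LANES);
    }
    memcpy(qb.lane, b + (blocks - 1) * LANES, LANES);
    acc += mac16(&qa, &qb);             /* last block: nothing left to load */
    return acc;
}
```

Passing a step other than `LANES` to `mac_load_step` is how this model would jump to the next row of a patch inside a larger image instead of reading the next contiguous 16 bytes.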

E/S Pronk

Luke Thompson

Andy

arun.mukundan

ziggurat29

This project is simply awesome!

I have recently been using the ESP32-S3 to run target detection AI projects, but I am using the SSCMA open source project (https://sensecraftma.seeed.cc/) and training the FOMO model through the Edge Impulse platform (https://edgeimpulse.com/).