Our project started in April 2015, when Jules Urbach, CEO and cofounder of OTOY, together with Oculus CTO John Carmack, announced the "Render the Metaverse" contest, which aimed to get the community involved in generating immersive 360-degree panoramas using their software suite.

http://www.roadtovr.com/render-metaverse-contest-otoy-john-carmack-signs-judge/

But we realized that certain technical, economic, practical and even philosophical details were lacking in how the virtual reality industry was presenting and driving the adoption of virtual reality technology. This was especially true of how to make the content, the virtual reality equipment (HMDs) and the networks that carry it available to the largest possible public. To a certain extent we are promoting the democratization of this technology in the way that is least intrusive and most economically sustainable, natural, safe and comfortable for the community of developers and end users of this new medium of communication and digital exchange, especially for Interactive Telepresence and Collaboration Tools.

From there I was involved in the development of an Open Source Cloud for Real-Time Multimedia Communications platform, using concepts such as Software Defined Networking, Super Scalability, WebRTC, Computer Vision, Augmented Reality and Multisensory Multimedia. The goal was to build an Interactive Telepresence Service, which we called Ceento, based on the Nubomedia project and its Kurento Media Server engine.

http://www.nubomedia.eu/ http://www.kurento.org/

But then we realized that we needed to reinterpret the graphic representation of the content we were transmitting and adapt our technology to virtual reality. That is when we met JanusVR and its effort to build comprehensive ways to construct content, especially JanusWEB, the WebGL/WebVR version of JanusVR.

http://www.janusvr.com/

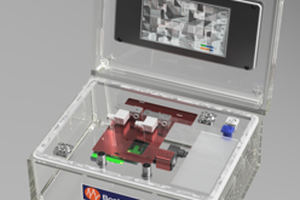

From there we started with a more open concept: designing a Hardware Reference Design platform, based on Open Hardware and Software, that anyone could build and use with our tools to make a device suitable for public or private use by as many people as possible. Then, in the middle of last year, development of the glTF format gained momentum. The format can convey content much more efficiently and independently of the tools used to create it, using open standards:

https://uploadvr.com/john-carmack-metaverse-gltf/

http://www.roadtovr.com/rendering-metaverse-otoys-volumetric-lightfield-streaming/

Although I could spend more time explaining the rest, I would rather fill this Hackaday profile with explanatory articles on each of the key points in the history of our development, as well as the components and technologies we are using. We are looking for a new concept, far from the huge device strapped to your head like the Hololens or other HMDs, or even some overestimated mixed reality lenses that are still attached to an obscure black box for processing and display.

We do not intend to build the Metaverse. I believe that nobody can claim attribution or authorship of it, just as with the Internet, which since its conception has been a global, collective effort to invest in, build and standardize the structure it has today.

As with any new disruptive technology, large corporations want their piece of it, and they have always tried to monopolize and capitalize on it at the expense of the free distribution of knowledge and technology transfer. Nowadays, with all the work being done by the open source software and hardware movements, those corporations have understood that they need that power to accelerate the adoption and early marketing of their products to early adopters and prosumers.

What the JanusVR project proposes is a transformation for the next generation of the web, where flat web pages become 3D spaces whose content is multimedia, corporeal and interactive, the way we handle things in the real world, and each hyperlink becomes a portal between...

BetaMax

Aleksandar Bradic

Looks cool. If you need any help on the Janus side, let me know.