## December 30, 2024 Year-End Update ##

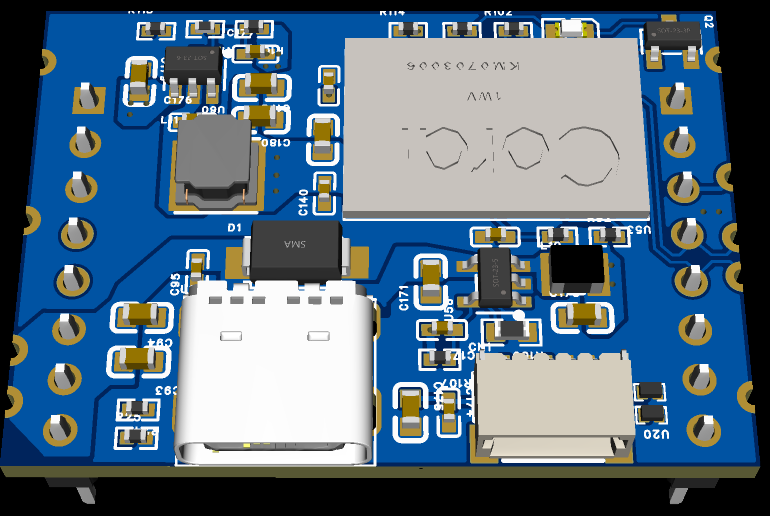

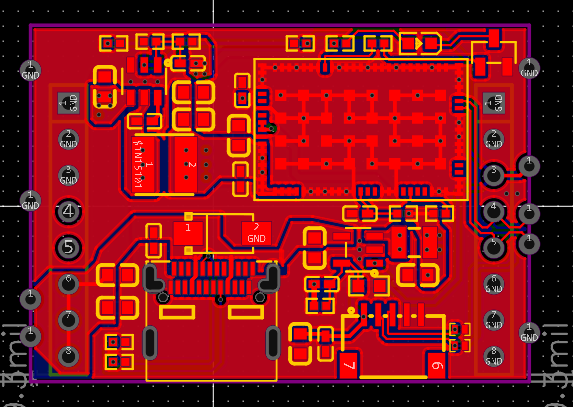

The design of the TPU module for AI acceleration is complete, and the module is now in PCB testing. It is built around a Google Coral Edge TPU and uses a stamp-hole (castellated) interface that is compatible with the peripheral board. It can therefore be mounted directly on the peripheral board and work alongside the core board, turning the system into a capable CPU+TPU edge AI inference machine. Neural network inference reaches up to 4 TOPS (4 trillion operations per second), with a total board power consumption of only 6 W. If more performance is needed, a second TPU module can be added for a combined peak of 8 TOPS. The two images above are the design diagrams.
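
The throughput figures above are peak numbers. The short sketch below only works through the arithmetic. It assumes ideal linear scaling when a second module is added, which real models will not reach exactly because results depend on model partitioning and host bandwidth. It also assumes that the quoted 6 W applies to the single-module configuration. The helper names are hypothetical and are not part of any Coral or project API.

```python
# Peak figures quoted in the update; real models rarely sustain peak TOPS.
TOPS_PER_MODULE = 4.0  # Google Coral Edge TPU peak, trillions of ops per second
BOARD_POWER_W = 6.0    # assumption: quoted total board power with ONE TPU module


def aggregate_peak_tops(modules: int, tops_per_module: float = TOPS_PER_MODULE) -> float:
    """Hypothetical helper: ideal peak throughput, assuming perfectly linear scaling."""
    if modules < 1:
        raise ValueError("need at least one TPU module")
    return modules * tops_per_module


def ops_per_joule(tops: float, watts: float) -> float:
    """Hypothetical helper: convert TOPS and watts to operations per joule (1 TOPS = 1e12 ops/s)."""
    return tops * 1e12 / watts


if __name__ == "__main__":
    print(aggregate_peak_tops(1))  # 4.0
    print(aggregate_peak_tops(2))  # 8.0
    # Board-level efficiency for the single-module setup (not the TPU chip alone).
    print(f"{ops_per_joule(aggregate_peak_tops(1), BOARD_POWER_W):.3g} ops/J")  # 6.67e+11
```

Because the 6 W figure covers the whole board, the result is a board-level efficiency figure rather than a TPU-only figure.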

TripleL Robotics
