This was developed in January 1994 using TopSpeed Modula-2 Version 1.17 for DOS (text mode and VGA graphics).

To run the program on a modern machine, you can download DOSBox.

Background

At that time, I was taking an introductory AI class at the University of Hamburg (Prof. Peter Schefe, R.I.P.), and implemented this as a demonstration of "MNIST"-like pattern recognition using a three-layer perceptron with backpropagation learning for the AI lab class.

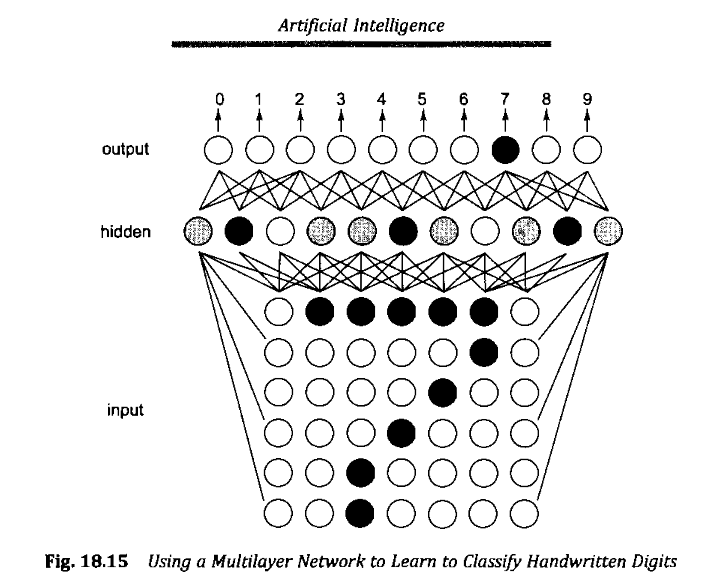

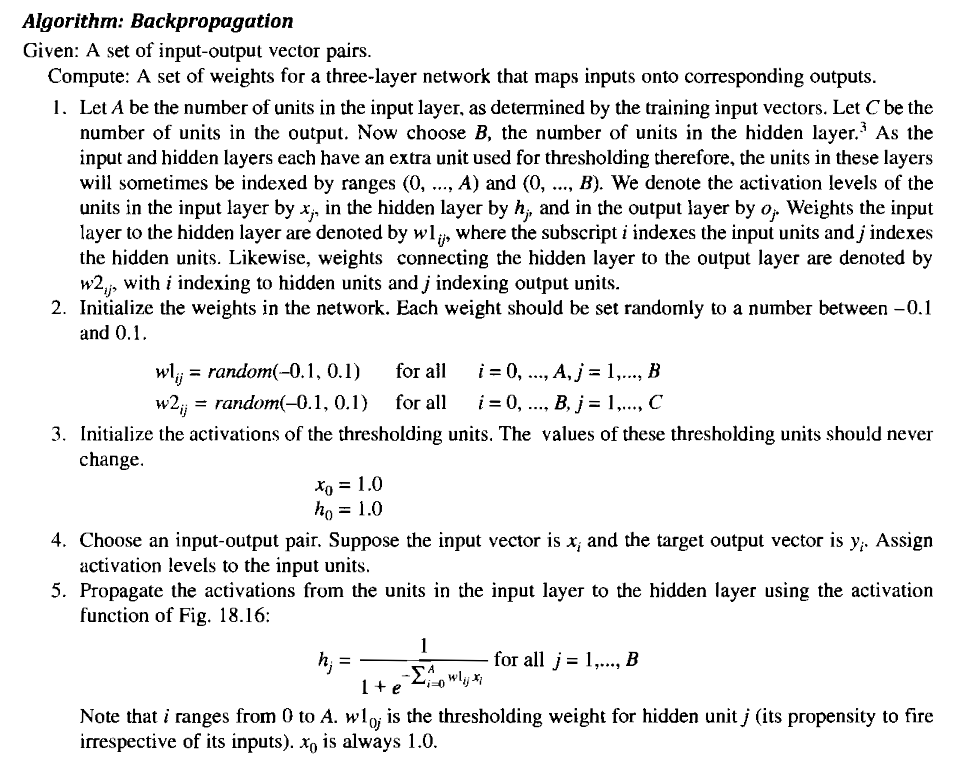

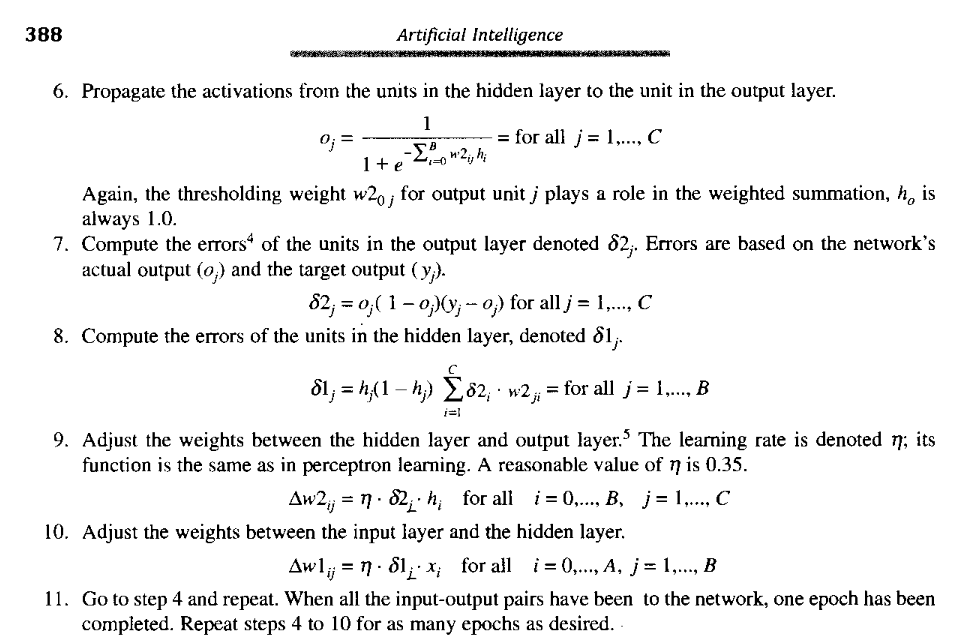

The three-layer perceptron and the backpropagation learning algorithm were described in English pseudo-code in the well-known 1991 AI textbook "Artificial Intelligence" by E. Rich and K. Knight (2nd edition, McGraw-Hill).
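For readers who want to see the idea in a few lines, here is a minimal Python sketch of a three-layer perceptron trained with online backpropagation. It is not the original Modula-2 program and not the pseudo-code from the book. The class name `ThreeLayerPerceptron`, the two made-up training patterns, the seed, the initial weight range, and the choice of 500 epochs are all assumptions for illustration. The bias unit at index 0 is also an assumption, loosely motivated by the 0-based array bounds in the source excerpt.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class ThreeLayerPerceptron:
    """Input -> hidden -> output network of sigmoid units (illustrative names)."""

    def __init__(self, n_in, n_hidden, n_out, seed=1):
        rng = random.Random(seed)
        # Row 0 of each matrix holds the weights of a constant bias unit (assumption).
        self.w1 = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)]
                   for _ in range(n_in + 1)]
        self.w2 = [[rng.uniform(-0.1, 0.1) for _ in range(n_out)]
                   for _ in range(n_hidden + 1)]

    def forward(self, pattern):
        """Return the activation levels of all three layers."""
        x = [1.0] + list(pattern)
        h = [1.0] + [sigmoid(sum(x[i] * self.w1[i][j] for i in range(len(x))))
                     for j in range(len(self.w1[0]))]
        o = [sigmoid(sum(h[j] * self.w2[j][k] for j in range(len(h))))
             for k in range(len(self.w2[0]))]
        return x, h, o

    def train_step(self, pattern, goal, rate):
        """One online backpropagation update; returns the squared error before it."""
        x, h, o = self.forward(pattern)
        # Output deltas: error times the derivative of the sigmoid.
        d_out = [(goal[k] - o[k]) * o[k] * (1.0 - o[k]) for k in range(len(o))]
        # Hidden deltas: output deltas propagated back through w2 (bias unit skipped).
        d_hid = [h[j] * (1.0 - h[j])
                 * sum(self.w2[j][k] * d_out[k] for k in range(len(o)))
                 for j in range(1, len(h))]
        for j in range(len(h)):
            for k in range(len(o)):
                self.w2[j][k] += rate * d_out[k] * h[j]
        for i in range(len(x)):
            for j in range(len(d_hid)):
                self.w1[i][j] += rate * d_hid[j] * x[i]
        return sum((goal[k] - o[k]) ** 2 for k in range(len(o)))


# Two tiny one-dimensional patterns with one-hot labels (made-up training data).
patterns = [([1, 1, 0, 0], [1, 0]), ([0, 0, 1, 1], [0, 1])]
net = ThreeLayerPerceptron(n_in=4, n_hidden=3, n_out=2)
losses = []
for epoch in range(500):
    losses.append(sum(net.train_step(p, g, rate=1.0) for p, g in patterns))

# Show each pattern, its label, and the thresholded network output.
for p, g in patterns:
    outputs = net.forward(p)[2]
    print(p, g, [1 if level > 0.5 else 0 for level in outputs])
```

The `losses` list holds the summed squared error per epoch, which is roughly the quantity a per-epoch loss plot would show.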

Program

The workflow with this interactive program is as follows:

-

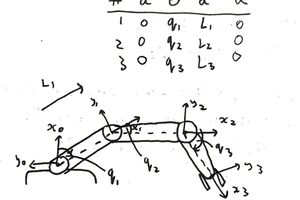

Determine the topology of the three-layer perceptron, and other hyper-parameters such as the learning rate and the number of training epochs:

-

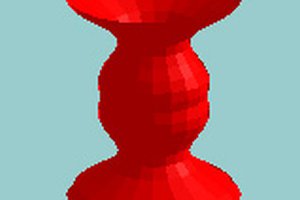

Use the pattern editor to create the "training data", i.e., the "MNIST"-like one- or two-dimensional patterns that the perceptron shall learn to recognize. Use the keypad number keys (

4,6,8,2) for cursor movement, and the5to toggle a bit in the pattern. Use+and-to switch between patterns, or completely clear the current pattern with thelkey: -

With the patterns (= training data) specified, start the backpropagation learning process by leaving the editor with the

ekey.The learning process starts, and the progress and convergence is visualized - the loss function for each pattern is shown graphically epoch by epoch. Usually, it converges quickly for each pattern; i.e., 100 epochs are typically more than enough with a learning rate of ~1. The graphs require a VGA graphics card (DOSbox emulates it):

- With the three-layer perceptron fully trained, we can now use it for inference. After training for the requested number of epochs, the program returns to the pattern editor.

  We can now recall the individual training patterns and send them to the perceptron with the `s` key; the editor then shows the ground-truth training label as well as the output computed by the network under `Netz:` (German for "net"). This simply shows the levels of the output perceptrons, binarized via a `> 0.5` threshold for `on` vs. `off`. Compare the `Netz:` classification result with the `--->` training label. If the net was trained successfully, the training and computed labels should match for each pattern. Toggle through the different patterns with the `+` and `-` keys, and repeatedly send them to the network via `s`.

  Check the predefined patterns for correct classification, and also modify them a bit (or drastically); i.e., change them with the editor and feed the modified patterns into the perceptron using the `s` key.

  Sometimes, the perceptron learns to focus on a few characteristic "bits" in the training patterns; it is interesting to remove as many bits as possible from the patterns without changing the classification results. This "robustness" to noise and to large changes in the input pattern was (and still is) a selling point for perceptrons and neural networks in general.
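The `> 0.5` binarization and the "remove as many bits as possible" experiment can be sketched in a few lines of Python. This is only an illustration, not code from the program. The helpers `binarize` and `prune_bits` and the stand-in classifier `toy_classify` are hypothetical names, and the greedy left-to-right removal order is just one possible strategy.

```python
def binarize(levels, threshold=0.5):
    """Map output levels to on/off bits: on (1) when level > threshold."""
    return [1 if level > threshold else 0 for level in levels]


def prune_bits(classify, pattern, threshold=0.5):
    """Greedily clear set bits while the binarized classification stays the same.

    `classify` is any callable that maps a list of 0/1 inputs to output levels,
    for example the forward pass of a trained network.
    """
    reference = binarize(classify(pattern), threshold)
    reduced = list(pattern)
    for i in range(len(reduced)):
        if reduced[i] == 0:
            continue
        reduced[i] = 0  # tentatively remove this bit
        if binarize(classify(reduced), threshold) != reference:
            reduced[i] = 1  # the classification changed, so restore the bit
    return reduced


def toy_classify(bits):
    # Hypothetical stand-in for a trained net: output 1 keys on bit 0,
    # output 2 keys on bit 3.
    return [0.9 if bits[0] else 0.1, 0.9 if bits[3] else 0.1]


print(binarize(toy_classify([1, 1, 0, 0])))
print(prune_bits(toy_classify, [1, 1, 0, 0]))
```

With a real trained network in place of `toy_classify`, the bits that survive pruning are the ones the classification actually depends on.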

Source & Executable

You can find a DOS executable as well as the TopSpeed Modula-2 source code here.

Enjoy!

Source Code

    MODULE Neuronal;

    (* Algorithm from "E. Rich/K. Knight: Artificial Intelligence" *)
    (* Implementation and environment by Michael Wessel, January 1994 *)

    FROM InOut IMPORT Read, ReadInt, WriteInt, WriteString, Write, WriteLn;
    FROM RealInOut IMPORT ReadReal, WriteReal;
    FROM MathLib0 IMPORT exp;
    FROM Lib IMPORT RAND;
    FROM Graph IMPORT InitVGA, TextMode, GraphMode, Plot, Line;

    (* at most 10 output units, 20 hidden units and 8x8=64 input units *)

    TYPE net = RECORD
      Input  : ARRAY[0..64] OF REAL;          (* levels of the input units *)
      Hidden : ARRAY[0..20] OF REAL;          (* levels of the hidden units *)
      Output : ARRAY[1..10] OF REAL;          (* levels of the output units *)
      Goal   : ARRAY[1..10] OF REAL;          (* desired output *)
      w1     : ARRAY[0..64],[1..20] OF REAL;  (* weights input->hidden *)
      w2     : ARRAY[0..20],[1..10] OF REAL;...


Michael Wessel
