In our last blog post, we discussed the concept of spectral resolution and its direct relationship to the pixel size of the detector. In this post, we will discuss how to select the detector length and the focal length of the imaging lens, both of which are crucial to the instrument's performance.

1. The Role of the Focusing Lens and Detector

The focusing lens (also known as the imaging lens) is a key component in a spectrometer. Its primary function is to focus the dispersed light from the grating onto the detector. The detector, typically a linear array sensor, then measures the intensity of each wavelength.

A key design consideration is ensuring that the full range of wavelengths you want to measure ($\lambda_{\min}$ to $\lambda_{\max}$) fits precisely onto the physical length of your detector.

2. The Relationship Between Wavelength Span and Detector Length

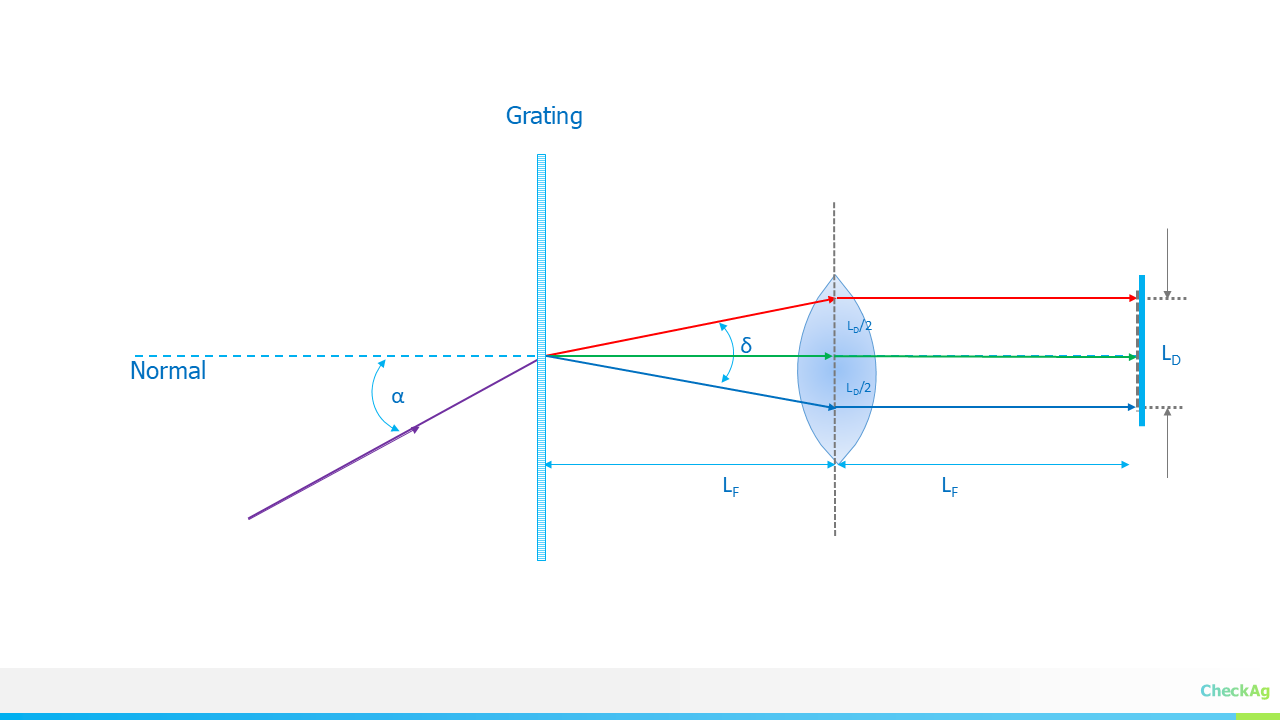

The angular span of the dispersed light, $\delta = \beta_{\lambda_{\max}} - \beta_{\lambda_{\min}}$, is the difference between the diffraction angles for your maximum and minimum wavelengths. The focusing lens converts this angular span into a linear distance on the detector.

The relationship between the detector length ($L_D$), the focal length of the focusing lens ($L_F$), and the angular span ($\delta$) is given by the following equation:

$$L_D = 2L_F\tan\left(\frac{\delta}{2}\right)$$

This equation highlights the trade-off: for a fixed angular span, a longer focal length ($L_F$) spreads the spectrum over a longer distance on the detector and therefore requires a longer detector.
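As a minimal sketch, the Python below computes $\delta$ from the grating equation and then the detector length for two focal lengths. It assumes the sign convention $m\lambda = d(\sin\alpha + \sin\beta)$ with a fixed incidence angle $\alpha$, which the post does not specify; the helper names and the grating and wavelength values are hypothetical examples.

```python
import math

def diffraction_angle_rad(wavelength_nm, groove_spacing_nm, order, incidence_rad):
    """Solve the grating equation m*lambda = d*(sin(alpha) + sin(beta)) for beta.

    The sign convention is an assumption; some texts write sin(alpha) - sin(beta).
    """
    s = order * wavelength_nm / groove_spacing_nm - math.sin(incidence_rad)
    if abs(s) > 1.0:
        raise ValueError("No propagating diffraction order for this wavelength")
    return math.asin(s)

def angular_span_rad(lambda_min_nm, lambda_max_nm, groove_spacing_nm, order, incidence_rad):
    """delta = beta(lambda_max) - beta(lambda_min), in radians."""
    return (diffraction_angle_rad(lambda_max_nm, groove_spacing_nm, order, incidence_rad)
            - diffraction_angle_rad(lambda_min_nm, groove_spacing_nm, order, incidence_rad))

def detector_length_mm(focal_length_mm, delta_rad):
    """L_D = 2 * L_F * tan(delta / 2); the result has the units of L_F."""
    return 2.0 * focal_length_mm * math.tan(delta_rad / 2.0)

# Hypothetical example: 600 lines/mm grating, first order, 10 deg incidence, 400-700 nm.
d_nm = 1e6 / 600            # groove spacing in nm (1 mm = 1e6 nm)
alpha = math.radians(10.0)
delta = angular_span_rad(400.0, 700.0, d_nm, 1, alpha)
for f_mm in (50.0, 100.0):
    print(f"L_F = {f_mm:.0f} mm -> delta = {math.degrees(delta):.2f} deg, "
          f"L_D = {detector_length_mm(f_mm, delta):.2f} mm")
```

With these hypothetical inputs, $\delta$ comes out at roughly 10.5°, and doubling $L_F$ doubles the required $L_D$.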

3. Calculating the Focusing Lens Focal Length (L_F)

Often, the detector length ($L_D$) is a known value, because you have a specific sensor you are designing around. To find the required focal length of the focusing lens, we can rearrange the equation above and express the angular span in terms of the grating's properties.
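Solving the geometric relation $L_D = 2L_F\tan(\delta/2)$ for the focal length, then applying the small-angle approximation $\tan(\delta/2) \approx \delta/2$ ($\delta$ in radians) together with the first-order estimate $\delta \approx (d\beta/d\lambda)(\lambda_{\max} - \lambda_{\min})$, gives:

$$L_F = \frac{L_D}{2\tan(\delta/2)} \approx \frac{L_D}{\delta} \approx \frac{L_D}{\dfrac{d\beta}{d\lambda}\left(\lambda_{\max} - \lambda_{\min}\right)}$$

This is why the grating's angular dispersion is the natural next quantity to look at.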

The angular dispersion describes how much the diffraction angle changes with a change in wavelength. It is defined as:

$$\frac{d\beta}{d\lambda} = \frac{m}{d\cos\beta}$$

where:

- m: The diffraction order

- d: The groove spacing of the grating

- β: The diffraction angle
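The angular dispersion follows from differentiating the grating equation with respect to $\lambda$ at a fixed incidence angle. As a quick numerical sanity check, the sketch below compares $m/(d\cos\beta)$ with a central finite difference of $\beta(\lambda)$. The grating-equation convention $m\lambda = d(\sin\alpha + \sin\beta)$, the helper names, and all numeric values are assumptions for illustration.

```python
import math

def beta_rad(wavelength_nm, d_nm, m, alpha_rad):
    # Grating equation, assumed convention: m*lambda = d*(sin(alpha) + sin(beta))
    return math.asin(m * wavelength_nm / d_nm - math.sin(alpha_rad))

def angular_dispersion_analytic(wavelength_nm, d_nm, m, alpha_rad):
    # d(beta)/d(lambda) = m / (d * cos(beta)), in radians per nm
    return m / (d_nm * math.cos(beta_rad(wavelength_nm, d_nm, m, alpha_rad)))

def angular_dispersion_numeric(wavelength_nm, d_nm, m, alpha_rad, h_nm=1e-3):
    # Central finite difference of beta(lambda), in radians per nm
    return (beta_rad(wavelength_nm + h_nm, d_nm, m, alpha_rad)
            - beta_rad(wavelength_nm - h_nm, d_nm, m, alpha_rad)) / (2.0 * h_nm)

d_nm = 1e6 / 600            # hypothetical 600 lines/mm grating
alpha = math.radians(10.0)  # hypothetical incidence angle
for lam in (400.0, 550.0, 700.0):
    a = angular_dispersion_analytic(lam, d_nm, 1, alpha)
    n = angular_dispersion_numeric(lam, d_nm, 1, alpha)
    print(f"{lam:.0f} nm: analytic {math.degrees(a):.5f} deg/nm, numeric {math.degrees(n):.5f} deg/nm")
```

Note that in this example the dispersion increases toward longer wavelengths, because $\beta$ grows and $\cos\beta$ shrinks across the span.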

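The linear dispersion, which relates the change in position on the detector ($dx$) to the change in wavelength ($d\lambda$), is the angular dispersion scaled by the focal length of the focusing lens:

$$\frac{dx}{d\lambda} = L_F\frac{d\beta}{d\lambda} = \frac{mL_F}{d\cos\beta}$$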

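We can approximate the linear dispersion over the full wavelength span. The change in position, $dx$, corresponds to the detector length, $L_D$, and the change in wavelength, $d\lambda$, corresponds to the wavelength span, $\lambda_{\max} - \lambda_{\min}$. Taking $\beta$ at the center wavelength:

$$\frac{L_D}{\lambda_{\max} - \lambda_{\min}} \approx \frac{mL_F}{d\cos\beta}$$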

Rearranging this equation to solve for the focal length of the focusing lens ($L_F$), we arrive at:

$$L_F \approx \frac{L_D\, d\cos\beta}{m\left(\lambda_{\max} - \lambda_{\min}\right)}$$

This equation provides a direct and practical way to calculate the required focal length of the focusing lens based on your detector length, grating parameters, and the desired wavelength range.
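A minimal Python sketch of this calculation, assuming $\beta$ is taken at the center wavelength and using the convention $m\lambda = d(\sin\alpha + \sin\beta)$ for the grating equation (the post fixes neither a convention nor an incidence angle $\alpha$). It also cross-checks the approximation against the exact geometric form $L_F = L_D/(2\tan(\delta/2))$. The helper names, detector length, and grating values are hypothetical.

```python
import math

def beta_rad(wavelength_nm, d_nm, m, alpha_rad):
    # Assumed grating-equation convention: m*lambda = d*(sin(alpha) + sin(beta))
    return math.asin(m * wavelength_nm / d_nm - math.sin(alpha_rad))

def focal_length_linear_mm(detector_mm, lam_min_nm, lam_max_nm, d_nm, m, alpha_rad):
    # L_F ~= L_D * d * cos(beta) / (m * (lambda_max - lambda_min)), beta at the center wavelength
    beta_c = beta_rad(0.5 * (lam_min_nm + lam_max_nm), d_nm, m, alpha_rad)
    return detector_mm * d_nm * math.cos(beta_c) / (m * (lam_max_nm - lam_min_nm))

def focal_length_exact_mm(detector_mm, lam_min_nm, lam_max_nm, d_nm, m, alpha_rad):
    # Exact geometric form: L_F = L_D / (2 * tan(delta / 2))
    delta = beta_rad(lam_max_nm, d_nm, m, alpha_rad) - beta_rad(lam_min_nm, d_nm, m, alpha_rad)
    return detector_mm / (2.0 * math.tan(delta / 2.0))

# Hypothetical design: 14 mm detector, 600 lines/mm grating, first order, 10 deg incidence.
L_D = 14.0
d_nm = 1e6 / 600
alpha = math.radians(10.0)
approx = focal_length_linear_mm(L_D, 400.0, 700.0, d_nm, 1, alpha)
exact = focal_length_exact_mm(L_D, 400.0, 700.0, d_nm, 1, alpha)
print(f"L_F (linear-dispersion approx.) = {approx:.1f} mm")
print(f"L_F (exact, from delta)         = {exact:.1f} mm")
```

With these inputs the two estimates agree to within about one percent; the gap tends to grow for wider spans, where $\cos\beta$ varies more across the spectrum.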

4. Practical Approach

Since both the detector length and the focusing lens focal length are unknowns in the spectrometer design, a common and practical approach is to fix one of them to find the other.

In practice, it can be challenging to find a detector with exactly the length calculated above. Customizing a lens is also costly, while stock lenses come in standard focal lengths such as 30 mm, 50 mm, 75 mm, and 100 mm. A more practical procedure is therefore to calculate the detector length for each available focal length and then select the best detector and focal length combination.

- Select a Trial Focal Length: Choose a commercially available focal length for your imaging lens. Common options are 30 mm, 50 mm, 100 mm, 150 mm, 200 mm, etc. A longer focal length requires a longer detector and, for a given pixel size, yields higher spectral resolution.

- Calculate the Required Detector Length: Using the focal length you've selected, plug the other known parameters ($m$, $d$, $\beta$, and the wavelength span) into the linear-dispersion relation, rearranged as $L_D \approx mL_F(\lambda_{\max} - \lambda_{\min})/(d\cos\beta)$, to calculate the required detector length, $L_D$.

- Choose a Close Detector: Compare your calculated LD to the available sizes of commercial detectors. Select a detector that is the closest match to your calculated value.

By following this method, you can start with a standard component (the lens) and then find a detector that is a good fit for your system's required wavelength span and spectral resolution.
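The three steps above can be sketched as a small search over the stock focal lengths mentioned in this post. This is a minimal illustration, not a definitive design tool: it uses the linear-dispersion estimate with a single hypothetical center diffraction angle $\beta$, and the detector catalogue (`sensor_A`, `sensor_B`, `sensor_C` and their lengths) is entirely hypothetical, as are the function names.

```python
import math

# Stock focal lengths named in the post; everything else below is hypothetical.
STOCK_FOCAL_LENGTHS_MM = [30.0, 50.0, 75.0, 100.0, 150.0, 200.0]
DETECTOR_LENGTHS_MM = {  # hypothetical catalogue of linear-array lengths
    "sensor_A": 7.0,
    "sensor_B": 14.0,
    "sensor_C": 28.0,
}

def required_detector_length_mm(f_mm, d_nm, m, beta_rad, lam_min_nm, lam_max_nm):
    # Linear-dispersion estimate: L_D ~= m * L_F * (lambda_max - lambda_min) / (d * cos(beta))
    return m * f_mm * (lam_max_nm - lam_min_nm) / (d_nm * math.cos(beta_rad))

def best_combination(d_nm, m, beta_rad, lam_min_nm, lam_max_nm):
    """Return (focal length, detector name, required L_D, mismatch) with the smallest mismatch."""
    best = None
    for f_mm in STOCK_FOCAL_LENGTHS_MM:
        # Step 2: required detector length for this trial focal length
        needed = required_detector_length_mm(f_mm, d_nm, m, beta_rad, lam_min_nm, lam_max_nm)
        # Step 3: catalogue detector whose length is closest to the required one
        name, length = min(DETECTOR_LENGTHS_MM.items(), key=lambda kv: abs(kv[1] - needed))
        mismatch = abs(length - needed)
        if best is None or mismatch < best[3]:
            best = (f_mm, name, needed, mismatch)
    return best

# Hypothetical grating: 600 lines/mm, first order, beta = 9 deg at the center wavelength.
f_mm, name, needed_mm, mismatch_mm = best_combination(
    d_nm=1e6 / 600, m=1, beta_rad=math.radians(9.0), lam_min_nm=400.0, lam_max_nm=700.0)
print(f"Best: L_F = {f_mm:.0f} mm with {name} (needs {needed_mm:.2f} mm, off by {mismatch_mm:.2f} mm)")
```

Scoring by absolute mismatch is just one simple choice. Because a detector shorter than the required length would clip the ends of the wavelength range, you might prefer to restrict the search to detectors at least as long as the calculated $L_D$.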

Tony Francis

