Motivation

Capturing parallel display signals isn’t very complicated on popular microcontrollers that offer fast, flexible parallel peripherals, such as the PIO on the Raspberry Pi Pico or the PARLIO on the ESP32-P4. Traditional interfaces like external memory controllers or camera interfaces can also be used to capture this kind of data.

But what if you want to do the capturing on a very basic microcontroller? I wanted to find out whether this would work with a general-purpose part. I had a WCH CH32V203 lying around, so I started with that. Because of my recent projects, I used a signal I already knew well: the display signal of my Game Boy Advance.

Project Execution & Problem Solving

I had the following plan: trigger on the VSYNC (start-of-frame) signal with an external interrupt. After that, each pixel clock edge would, through another external interrupt line, trigger a DMA transfer that copies the contents of a GPIO port’s input register, holding the states of 16 pins, into memory. Not a bad plan, but I was surprised to discover that external signals can’t trigger DMA transfers directly.

A deeper look into the DMA peripheral led me to the input capture mode of the timer peripherals. In this mode, an edge on an external pin can trigger a DMA request. That request is normally meant to transfer the captured counter value from the capture/compare register, but nothing stops you from using a GPIO port’s input register as the source address instead. And it actually worked.

With this setup, capturing the data became possible, but it was essentially useless because the frame data couldn’t be stored anywhere. A whole frame of 240 × 160 pixels at 2 bytes per pixel takes 76,800 bytes, or 75 KiB of RAM, while the controller had only 20 KiB.

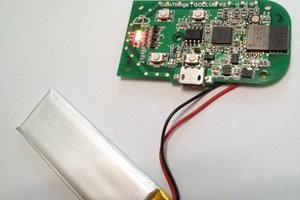

Great! A Frankenstein-like peripheral driver made from a timer, DMA, and basic GPIO, glued together with a few lines of code. Luckily, I also wanted to explore the TinyUSB library’s video capabilities and came across the CH32V305RBT6, which is essentially a CH32V203 with 50 percent more RAM and, most importantly, a high-speed USB port.

TinyUSB includes an implementation of UVC (USB Video Class), the class normally used by webcams. It is also used in the Game Boy Interceptor, a capture device for the classic Game Boy (DMG-01) by Sebastian Staaks (https://github.com/Staacks/gbinterceptor), which runs on the full-speed USB port of a Pi Pico. For the higher resolution and color depth of the Game Boy Advance, however, a full-speed interface isn’t fast enough. That made the CH32V305 an excellent candidate, especially at 1.50 USD per unit when buying 10 pieces. Still, it doesn’t have enough RAM to store a full frame, and TinyUSB’s video class driver only supports transferring complete frames.
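
To put rough numbers on the full-speed limitation, here is a back-of-the-envelope calculation in C. It assumes a refresh rate of about 59.73 Hz and 16 bits per pixel on the wire (both RGB565 and YUY2 average 2 bytes per pixel), and compares the result with the ceiling of a single full-speed isochronous endpoint (one packet of at most 1023 bytes per 1 ms frame). The macro and function names are made up for this sketch.

```c
#include <stdint.h>

#define GBA_WIDTH        240u
#define GBA_HEIGHT       160u
#define BYTES_PER_PIXEL  2u     /* RGB565 in, YUY2 out: 16 bits per pixel on average */
#define GBA_FPS          59.73  /* approximate GBA refresh rate in Hz (assumption) */

/* USB 2.0 full speed: at most one 1023-byte isochronous packet per 1 ms frame. */
#define FS_ISO_BYTES_PER_SEC (1023.0 * 1000.0)

/* Bytes needed to hold one complete frame. */
uint32_t frame_bytes(void) {
    return (uint32_t)GBA_WIDTH * GBA_HEIGHT * BYTES_PER_PIXEL;
}

/* Sustained payload rate needed to stream every frame, in bytes per second. */
double stream_bytes_per_sec(void) {
    return (double)frame_bytes() * GBA_FPS;
}

/* Non-zero if the whole stream fits into one full-speed isochronous endpoint. */
int fits_full_speed(void) {
    return stream_bytes_per_sec() <= FS_ISO_BYTES_PER_SEC;
}
```

Under these assumptions the stream needs about 4.6 MB/s, more than four times what one full-speed isochronous endpoint can carry, whereas a high-bandwidth high-speed isochronous endpoint can move up to 3072 bytes per 125 µs microframe (about 24.6 MB/s).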

After spending some time with the TinyUSB code, I managed to modify the video class driver to support “on-the-fly” streaming without a frame buffer. I first experimented with generated data and then integrated my capture driver from the CH32V203, modifying it to fill a ring buffer that stores the data line by line, together with each line’s index. This lets the USB video class code synchronize to the beginning of the first frame. After that, it fetches lines as fast as it can; if it gets ahead of the capture, it waits until the next line has been received. With a ring buffer of about 50 lines, this worked quite well.
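
The line-based ring buffer described above can be sketched in portable C as a single-producer/single-consumer queue: the capture side pushes complete lines tagged with their line number, and the USB side peeks, waits when nothing is available, and first skips ahead to line 0 to synchronize. This is not the code from the linked repository. All names (`line_ring_t`, `ring_push`, `ring_sync_to_frame_start`, …) are hypothetical, and C11 atomics stand in for whatever interrupt-safe handshake the real firmware uses. With 50 slots of 482 bytes, the buffer takes roughly 24 KB.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define LINE_PIXELS 240u  /* GBA visible width */
#define RING_SLOTS  50u   /* one slot always stays empty, so 49 lines are usable */

typedef struct {
    uint16_t index;               /* display line number, 0 = first line of a frame */
    uint16_t pixels[LINE_PIXELS]; /* raw 16-bit port samples for this line */
} line_slot_t;

typedef struct {
    line_slot_t slots[RING_SLOTS];
    atomic_uint head; /* next slot the capture side writes (producer-owned) */
    atomic_uint tail; /* next slot the USB side reads (consumer-owned) */
} line_ring_t;

void ring_init(line_ring_t *r) {
    atomic_init(&r->head, 0u);
    atomic_init(&r->tail, 0u);
}

/* Producer: store one captured line. Returns false (line dropped) if the ring is full. */
bool ring_push(line_ring_t *r, uint16_t index, const uint16_t *pixels) {
    unsigned head = atomic_load_explicit(&r->head, memory_order_relaxed);
    unsigned next = (head + 1u) % RING_SLOTS;
    if (next == atomic_load_explicit(&r->tail, memory_order_acquire))
        return false;
    r->slots[head].index = index;
    memcpy(r->slots[head].pixels, pixels, sizeof r->slots[head].pixels);
    /* Release ordering: the slot contents become visible before the new head. */
    atomic_store_explicit(&r->head, next, memory_order_release);
    return true;
}

/* Consumer: oldest unread line, or NULL if the USB side has caught up and must wait. */
const line_slot_t *ring_peek(line_ring_t *r) {
    unsigned tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    if (tail == atomic_load_explicit(&r->head, memory_order_acquire))
        return NULL;
    return &r->slots[tail];
}

/* Consumer: release the slot returned by ring_peek(). */
void ring_pop(line_ring_t *r) {
    unsigned tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    atomic_store_explicit(&r->tail, (tail + 1u) % RING_SLOTS, memory_order_release);
}

/* Consumer: drop lines until a frame start (line 0) is at the front.
 * Returns true once synchronized, false if the ring ran empty first. */
bool ring_sync_to_frame_start(line_ring_t *r) {
    const line_slot_t *s;
    while ((s = ring_peek(r)) != NULL) {
        if (s->index == 0u)
            return true;
        ring_pop(r);
    }
    return false;
}
```

In a real driver the DMA would more likely write straight into the slot at `head` instead of going through `memcpy`; the copy only keeps the sketch self-contained. Leaving one slot empty is the classic way to tell a full ring from an empty one without a shared counter that both sides modify.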

You can find my changes to the TinyUSB library here: https://github.com/embedded-ideas/tinyusb. I’ve opened a pull request, so they might end up in the mainline repository.

Unfortunately, this was not the end of the challenge. The captured data was RGB565, but the only supported video formats were MJPEG, which we cannot compute fast enough, and YUY2. Even YUY2 requires a per-pixel color conversion beyond the CPU’s capabilities. At this point, it helped that the AGB (the Game Boy Advance) doesn’t fully use RGB565: the lowest green bit is tied to ground. This means we are effectively dealing with RGB555, or 15-bit data.
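
For reference, the per-pixel work the CPU would otherwise have to do looks roughly like this. The sketch assumes the 16 captured bits are laid out as RRRRR GGGGGG BBBBB and uses the widely published 8-bit integer approximation of the BT.601 (limited-range) RGB-to-YUV matrix. The color matrix the project actually uses isn’t stated, so treat the coefficients and the function name `rgb565_to_yuv` as assumptions.

```c
#include <stdint.h>

typedef struct { uint8_t y, u, v; } yuv_t;

/* Per-pixel BT.601 (limited range) conversion of one RGB565 sample, using the
 * common 8-bit integer coefficients. The (128 << 8) offsets keep the U and V
 * sums non-negative, so the right shifts never operate on negative values. */
yuv_t rgb565_to_yuv(uint16_t px) {
    unsigned r5 = (px >> 11) & 0x1Fu;
    unsigned g6 = (px >> 5) & 0x3Fu;
    unsigned b5 = px & 0x1Fu;

    /* Widen each channel to 8 bits by replicating its top bits. */
    int r = (int)((r5 << 3) | (r5 >> 2));
    int g = (int)((g6 << 2) | (g6 >> 4));
    int b = (int)((b5 << 3) | (b5 >> 2));

    yuv_t out;
    out.y = (uint8_t)(((66 * r + 129 * g + 25 * b + 128) >> 8) + 16);
    out.u = (uint8_t)((-38 * r - 74 * g + 112 * b + 128 + (128 << 8)) >> 8);
    out.v = (uint8_t)((112 * r - 94 * g - 18 * b + 128 + (128 << 8)) >> 8);
    return out;
}
```

As written, that is nine multiplications plus shifts and additions per pixel, at roughly 2.3 million pixels per second for a 240 × 160 image at about 60 Hz, on top of the capture and USB work. Because the lowest green bit is always zero, only 32,768 distinct inputs can ever reach such a function.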

This enables a simple solution: a lookup table. Storing 8-bit Y, U, and V values for each of the 32,768 possible colors requires 96 KiB, which can...
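
The lookup-table idea can be sketched like this. The table layout (Y, U, V bytes per RGB555 color), the names, and the choice to average the chroma of each pixel pair when packing YUY2 (Y0 U Y1 V) are assumptions for illustration, not necessarily what the firmware does. The table is assumed to have been filled beforehand, for example by running a BT.601 RGB-to-YUV conversion once for all 32,768 colors; here it is simply a zero-initialized global.

```c
#include <stddef.h>
#include <stdint.h>

#define LUT_ENTRIES 32768u  /* 2^15 RGB555 colors */

/* Hypothetical table: [color][0] = Y, [1] = U, [2] = V; 3 bytes each, 96 KiB total. */
uint8_t yuv_lut[LUT_ENTRIES][3];

/* Captured RGB565 sample -> 15-bit table index (drops the grounded lowest green bit). */
static inline uint16_t rgb555_index(uint16_t px) {
    return (uint16_t)(((px >> 1) & 0x7FE0u) | (px & 0x001Fu));
}

/* Convert one captured line to YUY2: 2 bytes per pixel, Y0 U Y1 V per pixel pair.
 * `pixels` is expected to be even; `out` must hold 2 * pixels bytes.
 * Assumption: each pair's chroma is the rounded average of both pixels' U/V. */
void line_to_yuy2(const uint16_t *rgb565, size_t pixels, uint8_t *out) {
    for (size_t i = 0; i + 1 < pixels; i += 2) {
        const uint8_t *a = yuv_lut[rgb555_index(rgb565[i])];
        const uint8_t *b = yuv_lut[rgb555_index(rgb565[i + 1])];
        *out++ = a[0];
        *out++ = (uint8_t)((a[1] + b[1] + 1) / 2);
        *out++ = b[0];
        *out++ = (uint8_t)((a[2] + b[2] + 1) / 2);
    }
}
```

Per pixel, this reduces the work to one shift-and-mask, one table read, and an addition for the shared chroma, which is far cheaper than the multiplications of a direct conversion.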
