🗣️ DIY ESP32-S3 AI Voice Assistant with MCP Integration

Voice assistants have gone from costly commercial devices to projects that makers can build themselves. This project shows how to build a personal AI voice assistant around the ESP32-S3 microcontroller, using the Model Context Protocol (MCP) to bridge embedded hardware and cloud AI models.

This assistant listens for your voice, streams audio to an AI backend, and speaks back natural responses. By combining Espressif’s Audio Front-End (AFE), a MEMS microphone array, and MCP chatbot integration, this project brings conversational AI to your own hardware, with no phone required.

🔊 What It Does

🧠 AI Conversations — Speak to the device and get natural-language responses powered by cloud AI through MCP.

👂 Audio Capture & Playback — Uses digital MEMS microphones and a speaker to handle voice input and output.

🔗 MCP Integration — The Model Context Protocol lets large language models interact with device capabilities.

🤖 Wake-Word Detection — Always-on listening for trigger phrases with real-time AI replies.

📡 Smart Home & Device Control — Extend beyond chat to control IoT devices via AI commands.
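One way to picture how these features fit together is a small interaction state machine: wait for the wake word, capture speech, wait for the cloud reply, play it back. The sketch below is a host-side Python model for illustration, not the project's firmware; the state and event names are assumptions.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()       # only the wake-word detector is active
    LISTENING = auto()  # microphone audio is streamed to the backend
    THINKING = auto()   # waiting for the cloud reply
    SPEAKING = auto()   # reply audio is played through the speaker


# (current state, event) -> next state. Event names are hypothetical.
TRANSITIONS = {
    (State.IDLE, "wake_word"): State.LISTENING,
    (State.LISTENING, "end_of_speech"): State.THINKING,
    (State.THINKING, "reply_audio"): State.SPEAKING,
    (State.SPEAKING, "playback_done"): State.IDLE,
}


def step(state, event):
    """Return the next state; events that do not apply are ignored."""
    return TRANSITIONS.get((state, event), state)


def run(events, state=State.IDLE):
    """Feed a sequence of events through the machine and return the final state."""
    for event in events:
        state = step(state, event)
    return state
```

A full conversation turn, `run(["wake_word", "end_of_speech", "reply_audio", "playback_done"])`, brings the model back to `State.IDLE`, ready for the next wake word.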

🛠️ How It Works

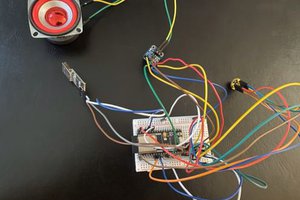

- Hardware — Based on the ESP32-S3-WROOM module for dual-core processing and Wi-Fi/Bluetooth connectivity.

- Audio Front End — Espressif’s AFE handles wake-word detection, noise suppression, and beamforming with digital MEMS mics.

- Cloud AI Pipeline:

  - Audio is streamed to a backend that performs automatic speech recognition (ASR).

  - Transcribed text is fed into large language models via MCP.

  - Responses are turned into natural speech via text-to-speech (TTS) and sent back.

- MCP — Acts as a standardised interface for AI models to “call” device functions, opening up possibilities beyond conversation, such as controlling other hardware.
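To make the idea of a model “calling” a device function more concrete, here is a minimal sketch of a handler for an MCP `tools/call` request. MCP messages are JSON-RPC 2.0, and a tool result carries a `content` list plus an `isError` flag. Everything else is an assumption for illustration: the `set_led_color` tool, the `led_state` dictionary, and the use of Python rather than the device's firmware language.

```python
import json

# Hypothetical device state; real firmware would drive the RGB LED instead.
led_state = {"color": "off"}


def set_led_color(color: str) -> str:
    """Hypothetical tool: remember the requested LED colour."""
    led_state["color"] = color
    return f"LED set to {color}"


# Registry of callable tools, keyed by the name the model uses.
TOOLS = {"set_led_color": set_led_color}


def _error(req_id, code, message):
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    req_id = req.get("id")
    if req.get("method") != "tools/call":
        return _error(req_id, -32601, "Method not found")
    params = req.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return _error(req_id, -32602, "Unknown tool")
    try:
        text, is_error = tool(**params.get("arguments", {})), False
    except Exception as exc:  # tool failures are reported inside the result
        text, is_error = str(exc), True
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "result": {"content": [{"type": "text", "text": text}],
                                  "isError": is_error}})
```

A request with `"name": "set_led_color"` and `"arguments": {"color": "blue"}` updates `led_state` and returns a text result that the model can read back to the user.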

🔩 Hardware Components

- ESP32-S3-WROOM-1 MCU — dual-core microcontroller with Wi-Fi/Bluetooth.

- Digital MEMS Microphones — high-quality audio capture.

- I²S Audio Amplifier & Speaker — for clear voice feedback.

- Power Management — battery charger and buck-boost regulator for portable use.

- Visual & Control Interfaces — RGB LEDs and buttons for status and manual control.

🧠 Software & Tools

- ESP-IDF & VS Code — firmware development environment.

- Espressif AFE Library — optimised wake-word and voice-capture stack.

- MCP Chatbot Framework — cloud-side AI integration.

- WebSocket / MQTT — bidirectional streaming for low-latency communication.
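Streaming audio over a WebSocket usually means cutting the microphone signal into small fixed-size frames and sending each one as a binary message. The sketch below shows the arithmetic and the framing only, with no network code. The audio format (16 kHz, 16-bit mono PCM) and the 20 ms frame length are assumptions; the project's actual values may differ.

```python
SAMPLE_RATE_HZ = 16_000  # assumed sample rate, common for speech
BYTES_PER_SAMPLE = 2     # assumed 16-bit signed PCM, mono
FRAME_MS = 20            # assumed frame duration

# Bytes in one frame: 16000 samples/s * 2 bytes * 0.020 s = 640 bytes
FRAME_BYTES = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * FRAME_MS // 1000


def split_frames(pcm: bytes, frame_bytes: int = FRAME_BYTES):
    """Yield fixed-size frames, zero-padding the final partial frame."""
    for start in range(0, len(pcm), frame_bytes):
        frame = pcm[start:start + frame_bytes]
        yield frame.ljust(frame_bytes, b"\x00")


def raw_bitrate_kbps() -> float:
    """Uncompressed uplink bitrate for the assumed format, in kbit/s."""
    return SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * 8 / 1000
```

Under these assumptions each frame is 640 bytes and the raw uplink is 256 kbit/s. Shorter frames cut latency but add per-message overhead.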


📈 What You’ll Learn

This project isn’t just about building an ESP32 AI voice assistant; it’s a practical introduction to embedded AI, cloud integration via MCP, and real-time voice interaction on constrained hardware. Because the ESP32 handles audio I/O while the cloud backend does the heavy AI lifting, you get a responsive system with room to swap or upgrade the AI models behind it.

Whether you want a personal AI companion, smart home controller, or an edge voice interface for robotics, this project is a powerful starting point.
