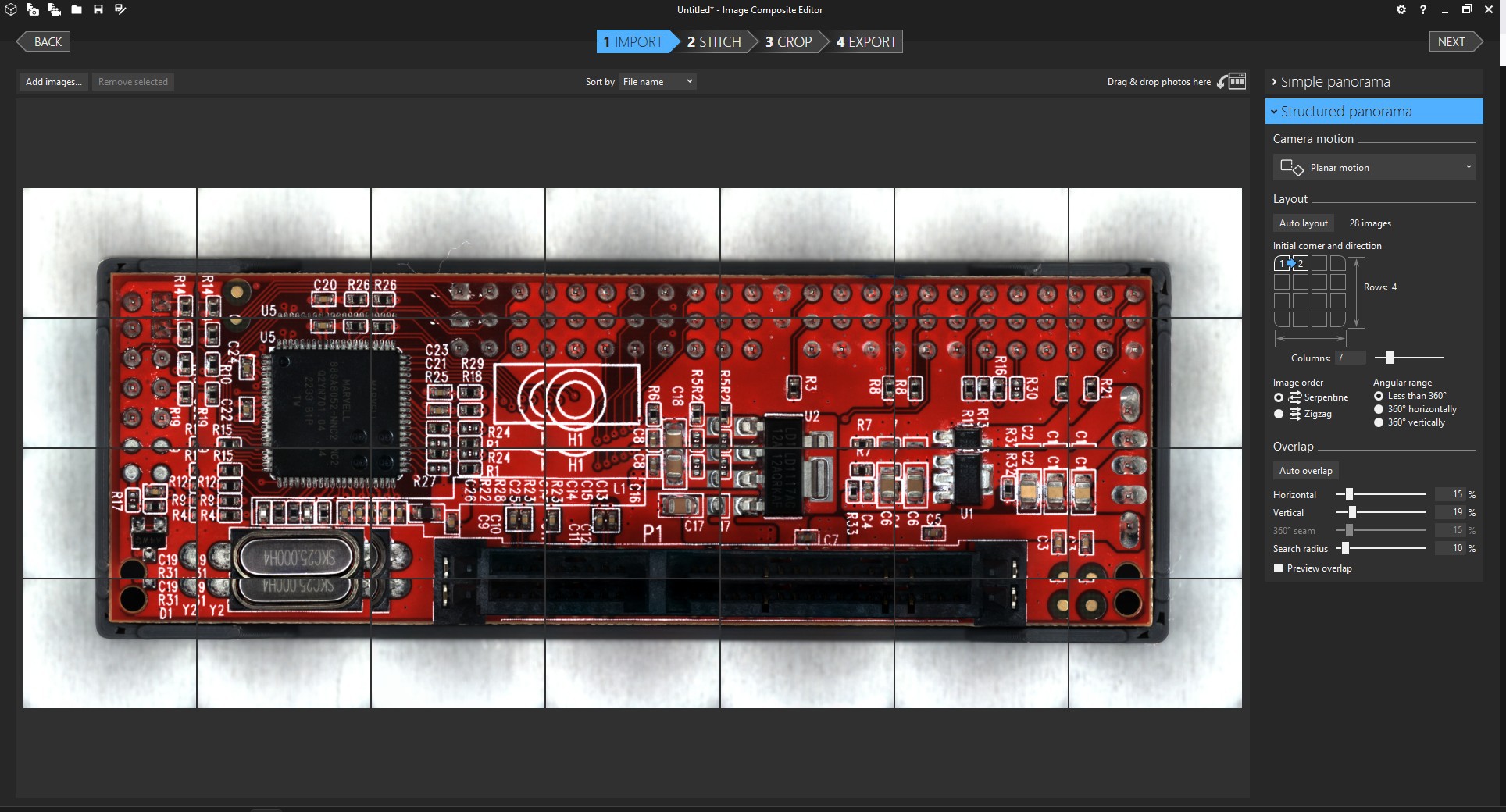

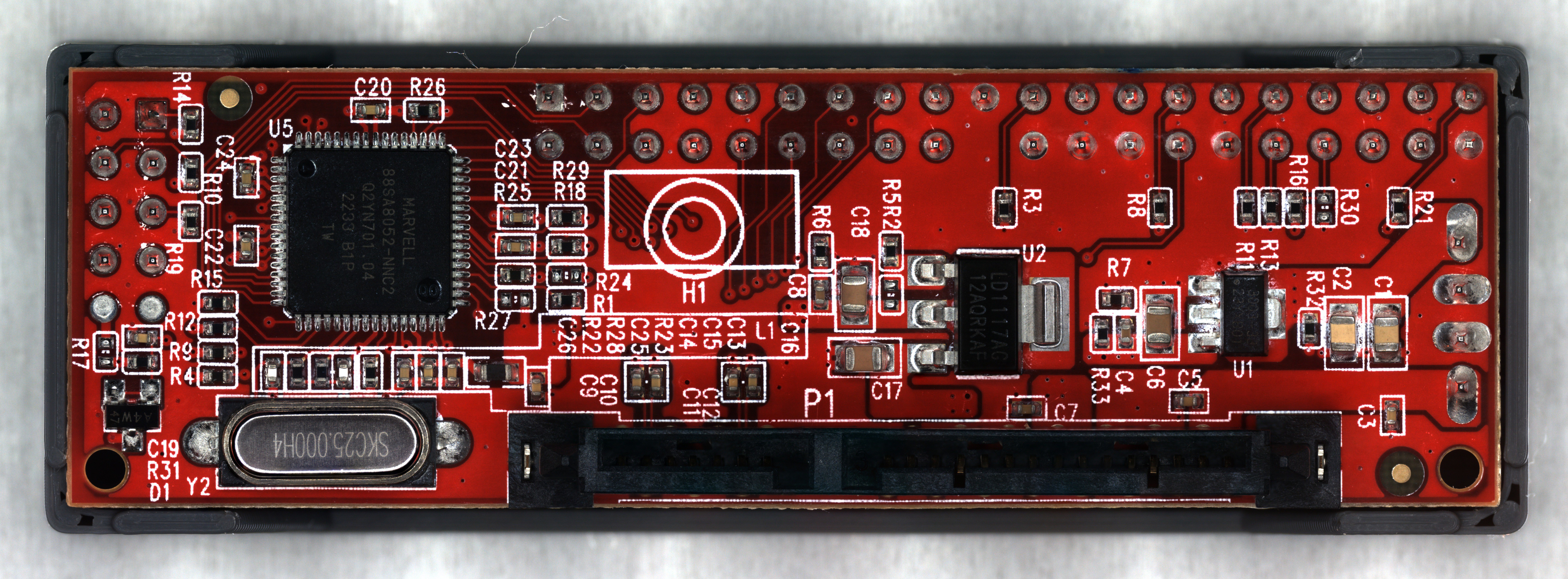

This project was mostly inspired by my experience with Keyence VHX series microscopes. At a previous job involving high-reliability electronics qualification, a VHX-6000 scope was the most widely used tool in my department. It was an older model that had developed some quirky behaviors over the years, but it was extremely intuitive to operate and could image everything from entire PCB assemblies to individual wire bonds inside ICs. I was also told that it cost more than most new cars.

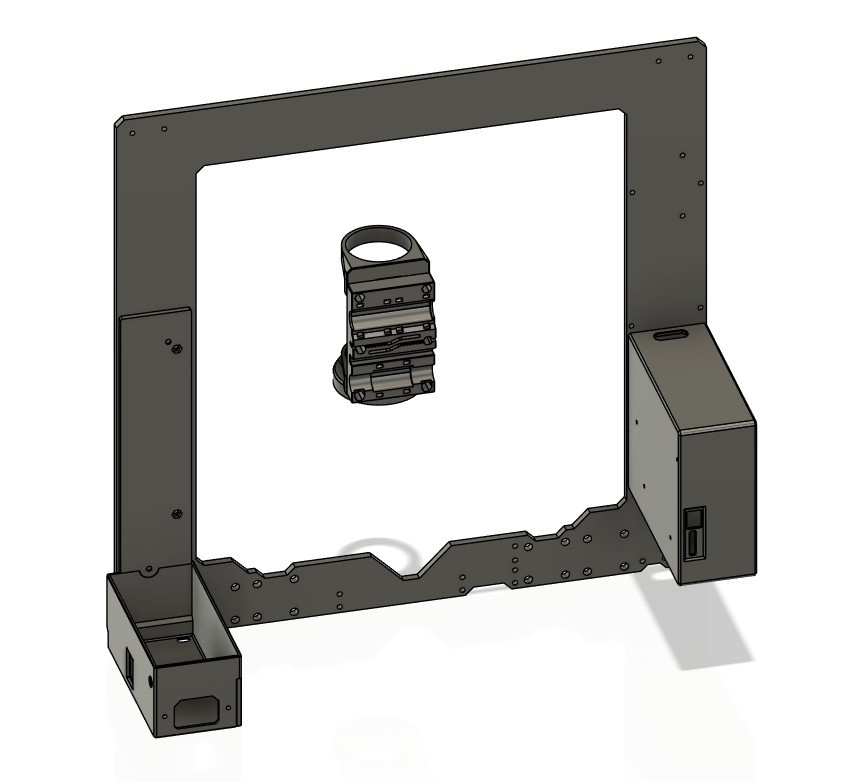

My attempt is worse than a VHX scope in almost every respect (besides cost), but I think most of the limitations I encountered could be overcome with some effort.

loudaslife

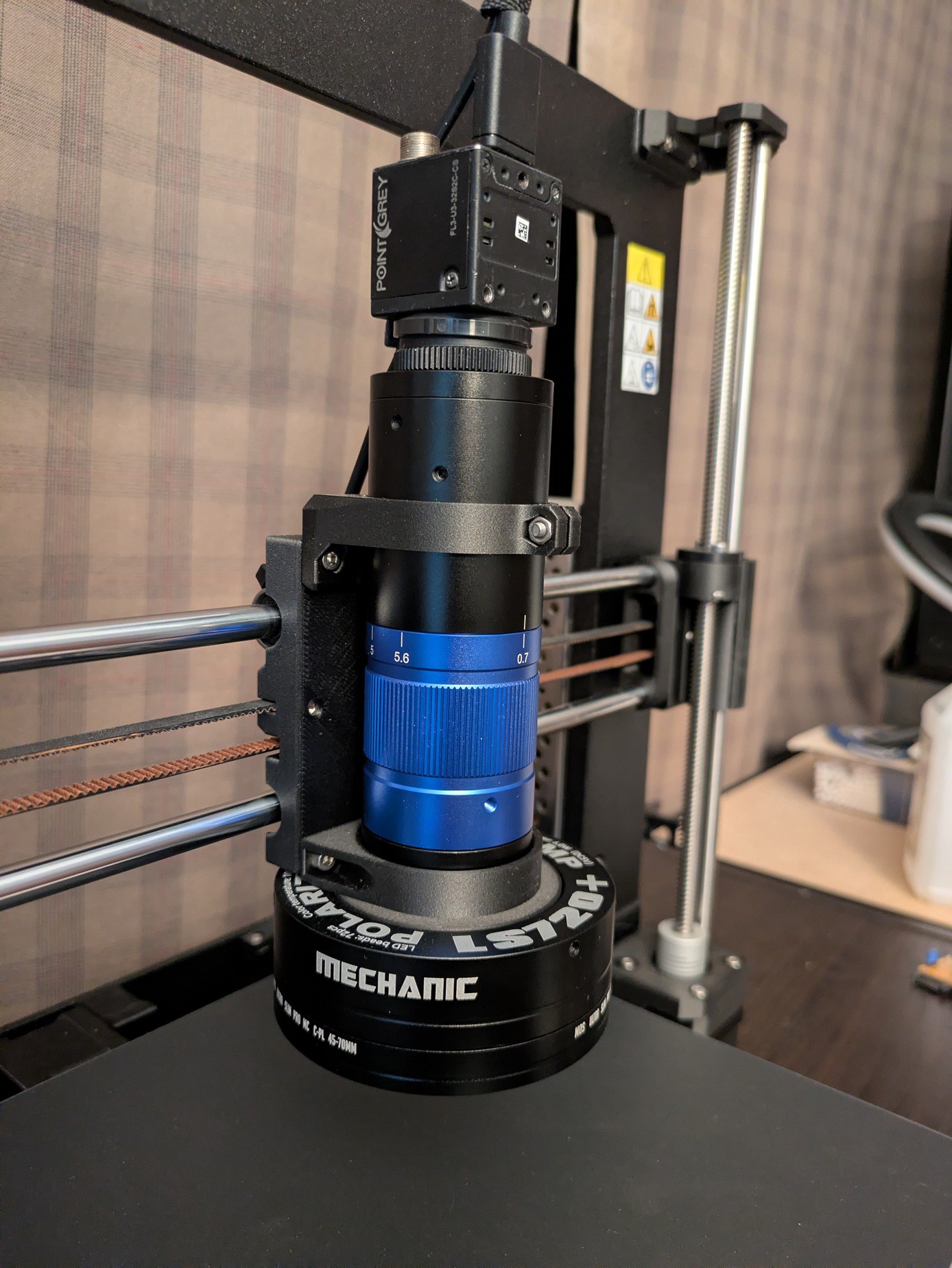

I picked the lens first, and after a lot of searching I settled on a cheap unbranded version of a