-

Progress on V2!

01/18/2021 at 02:06 • 0 comments

Just wanted to post here that I've been working on V2 of this camera and have made significant strides in the on-camera optical flow pipeline, so check it out if you haven't yet! https://hackaday.io/project/165222-vr-camera-v2-fpga-vr-video-camera

For those who haven't seen it yet, V2 is an FPGA 360 video camera that I'm developing to perform image warping, optical flow estimation, and image stitching all on-camera, in the FPGAs, in real time at 30fps. It's a much more advanced version of V1 (the project you're currently looking at), which simply captures raw bitmaps directly from the image sensors and saves them to a microSD card for later warping and stitching on a desktop PC.

-

Moving Towards Version 2

07/14/2018 at 20:55 • 0 comments

It's been a while since I've updated this project, and I have to admit my progress has slowed down a bit, but I'm far from done working on the 360 camera. I've spent most of my time recently pondering how to create a new camera that will be able to not only capture 360 video, but also stitch it together in real time. This is obviously a massive increase in difficulty from the current state of the camera, where the FPGA really doesn't do much work: it just writes the images to the DDR3, the ARM processor reads them and stores them on a MicroSD card, and my PC does the stitching.

There are 3 main components that I need to figure out for real-time stitched 360 3D video.

- Cameras

- The current cameras I'm using are the 5MP OV5642. These are well-documented around the web and easy to use, but unfortunately they cannot output full resolution faster than ~5fps, which is far too slow for video.

- The sensor that looks most promising for video right now is the AR0330. This is a 3MP 1/3" sensor that can output at 30fps in both MIPI formats and through a parallel interface that is the same as the one used by the OV5642. Open source drivers are available online and assembled camera modules can be purchased for $15 or so on AliExpress; I bought a couple to evaluate. These conveniently can be used with these ZIF HDD connectors and their corresponding breakout board.

- As shown in the graphics in my previous project log, to get 3D 360 video you need a minimum of 6 cameras with 180 degree lenses.

- Video Encoding

- Put simply, as far as I've found, there is currently no way for a hobbyist to compress 4K video on an FPGA. I would be more than happy to have anyone prove me wrong. Video encoder cores are available, but they are closed source and likely cost quite a bit to license. So, the video encoding will have to be offloaded until I become a SystemVerilog guru and get 2 years of free time to write an H.265 encoder core.

- The best/only reasonably priced portable video encoding solution I've found is the Nvidia Jetson TX2. It can encode two 4K video streams at 30fps, which should be enough to get high-quality 360 3D video. I was able to purchase the development kit for $300, which provides the TX2 module and a carrier PCB with connectors for a variety of inputs and outputs. Unfortunately it's physically pretty large, but for the price you can't beat the processing power. The 12-lane CSI-2 input looks like the most promising way to get video data to it if I can figure out how to create a MIPI transmitter core. I've successfully made a CSI-2 RX core so hopefully making a TX core isn't that much harder...

- Stitching

- The hardest component to figure out is stitching. My planned pipeline for this process will require one FPGA per camera, which will definitely break my target budget of $800, even excluding the Jetson.

- The steps to stitch are: debayer images, remap to spherical projection, convert to grayscale and downsample, perform block matching, generate displacement map, bilateral filter displacement map, upsample and convert displacement map to pixel remap coordinate matrix, remap w/displacement, and output.

- This needs to be done twice for each camera, once for each eye. With pipelining, each function needs to run twice within one frame period (~33ms at 30fps).

- Currently the DE10-Nano looks like the only reasonably priced FPGA with (hopefully) enough logic elements, DDR3 bandwidth, and on-chip RAM. I'll almost certainly need to add a MiSTer SDRAM board to each DE10 to give enough random-access memory bandwidth for bilateral filtering. The biggest issue with the DE10-Nano is that it only has 3.3V GPIO, which is not compatible with LVDS or other high-speed signals, so it will be a challenge to figure out how to send video data quickly to the TX2 for encoding.

- The MAX 10 FPGA 10M50 Evaluation Kit has also caught my attention because of its built-in MIPI receiver and transmitter PHYs, but 200MHz 16-bit DDR2 is just not fast enough and it would probably not be possible to add external memory due to the limited number of GPIOs.
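To make the block matching step in the stitching list above more concrete, here is a minimal CPU sketch of sum-of-absolute-differences (SAD) matching between two grayscale images. It is not the FPGA core described in this log. The `GrayImage` type, the function names, and the leftward-only horizontal search are assumptions made for illustration.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <limits>
#include <vector>

// Row-major 8-bit grayscale image (a hypothetical helper type for this sketch).
struct GrayImage {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;

    int at(int x, int y) const {
        return pixels[static_cast<std::size_t>(y) * width + x];
    }
};

// Sum of absolute differences (SAD) between the blockSize x blockSize block at
// (ax, ay) in image a and the block at (bx, by) in image b.
long blockSad(const GrayImage& a, int ax, int ay,
              const GrayImage& b, int bx, int by, int blockSize) {
    long sad = 0;
    for (int dy = 0; dy < blockSize; ++dy) {
        for (int dx = 0; dx < blockSize; ++dx) {
            sad += std::abs(a.at(ax + dx, ay + dy) - b.at(bx + dx, by + dy));
        }
    }
    return sad;
}

// Tries leftward shifts 0..maxShift in `right` and returns the shift whose
// block has the lowest SAD against the block at (x, y) in `left`.
// The caller must keep both blocks inside the images.
int bestDisplacement(const GrayImage& left, const GrayImage& right,
                     int x, int y, int blockSize, int maxShift) {
    long bestSad = std::numeric_limits<long>::max();
    int bestShift = 0;
    for (int shift = 0; shift <= maxShift && x - shift >= 0; ++shift) {
        long sad = blockSad(left, x, y, right, x - shift, y, blockSize);
        if (sad < bestSad) {
            bestSad = sad;
            bestShift = shift;
        }
    }
    return bestShift;
}
```

Calling `bestDisplacement(left, right, x, y, 16, maxShift)` for each 16x16 block would give one displacement per block, which is the raw material for the displacement map in the later steps. A hardware version would evaluate many candidate shifts in parallel instead of looping.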

At this point, the video camera is 99% vaporware, but I've been making some progress lately on the stitching side so I figured it was time to make a project log. I've created a 16x16 block matching core that worked pretty well in simulation, as well as a fast debayering core and I'm working on memory-efficient remapping. I'll keep posting here for the near future but if/when the ball really gets rolling I will create a new project page and link to it from here. I plan to dip my toe into the water by trying first to build with 2 or 3 cameras, and if that works well, I'll move to full 360 like I did with the original camera. If anyone has any suggestions for components, relevant projects, or research to look at, I would love to hear from you.


-

Refining 360 Photos

01/15/2018 at 05:02 • 0 comments

I've spent the last couple months on this project trying to get the photo output to be as detailed and high-quality as possible, and I'm pretty happy with the results. The results are still far from perfect, but they've improved considerably since the last photos I posted. In my next couple build logs I'll break down some of the different changes that I've made.

Camera Alignment

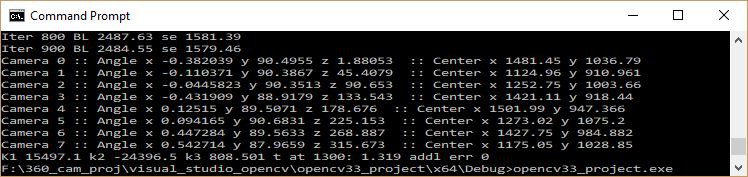

One of the largest issues with my photos that caused viewer discomfort was vertical parallax. Because each camera is tilted slightly up or down from the horizontal, objects in one eye's view appeared slightly higher or lower than in the other eye's view. I had created a manual alignment program that allowed me to use the arrow keys to move the images up and down to position them, but it took forever to get good results with this, especially when considering the three different possible axes of rotation.

My solution, emulating the approach of Google's Jump VR, was to use custom software to figure out the rotation of each camera in 3D space, and translate this to how the images should be positioned. I chose to use the brute force method - estimate the positions and then use an iterative algorithm (Levenberg-Marquardt) to correct them. The alignment algorithm is described below:

- Use guess positions and lens characteristics to de-warp fisheye images to rectilinear and convert to spherical projection

- Use OpenCV SURF to find features in the spherical images

- Match these features to features in images from adjacent cameras

- Keep features that are seen from three adjacent cameras

- Use the spherical and rectilinear (de-warping) mappings in reverse to figure out the original pixel coordinates of the features

- Set up camera matrices for each camera with 3D coordinates, 3D rotation matrix, and 2D lens center of projection (pixel coordinates)

- Begin parameter optimization loop:

- Begin loop through the features with matches in 3 images:

- Calculate the 3D line corresponding to the pixel at the center of the feature in each of the 3 images

- When viewed in the X-Y plane (horizontal plane, in this case), the three lines form a triangle. Calculate the area of the triangle and add this to the total error. This area should ideally be zero, since the lines should ideally intersect at one point.

- Calculate the angles of each line from the X-Y plane, and calculate the differences of these angles. Add this difference to the total error. Ideally, the difference between the angles should be almost zero, since the cameras are all on the X-Y plane and the lines should all be pointed at the same point.

- Figure out the derivatives of all of the parameters (angles, centers, etc.) with respect to the total error and adjust the parameters accordingly, trying to get the total error to zero

- Repeat until the total error stops going down

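The per-feature error described in the list above can be sketched as follows. This is a simplified illustration and not the project's alignment code: the `Ray3` and `Point2` types, the equal weighting of the area and angle terms, and the penalty returned for parallel lines are all assumptions.

```cpp
#include <cmath>

// A 3D line from a camera: origin (camera position) plus direction.
struct Ray3 {
    double ox, oy, oz;
    double dx, dy, dz;
};

struct Point2 {
    double x, y;
};

// Intersects two lines after projecting them onto the X-Y plane.
// Returns false if the projected lines are (nearly) parallel.
bool intersectXY(const Ray3& a, const Ray3& b, Point2& out) {
    const double det = a.dx * b.dy - a.dy * b.dx;
    if (std::fabs(det) < 1e-12) return false;
    const double t = ((b.ox - a.ox) * b.dy - (b.oy - a.oy) * b.dx) / det;
    out.x = a.ox + t * a.dx;
    out.y = a.oy + t * a.dy;
    return true;
}

double triangleArea(const Point2& p, const Point2& q, const Point2& r) {
    return 0.5 * std::fabs((q.x - p.x) * (r.y - p.y) - (q.y - p.y) * (r.x - p.x));
}

// Angle of the line above or below the X-Y plane, in radians.
double elevation(const Ray3& r) {
    return std::atan2(r.dz, std::hypot(r.dx, r.dy));
}

// Error for one feature seen by three cameras: area of the triangle formed by
// the three projected lines, plus the pairwise elevation differences.
double featureError(const Ray3& r0, const Ray3& r1, const Ray3& r2) {
    Point2 p01, p12, p20;
    if (!intersectXY(r0, r1, p01) || !intersectXY(r1, r2, p12) ||
        !intersectXY(r2, r0, p20)) {
        return 1e9;  // arbitrary penalty for parallel lines (an assumption)
    }
    const double e0 = elevation(r0), e1 = elevation(r1), e2 = elevation(r2);
    return triangleArea(p01, p12, p20) +
           std::fabs(e0 - e1) + std::fabs(e1 - e2) + std::fabs(e2 - e0);
}
```

Summing `featureError` over all three-camera features gives a total error of the kind an optimizer such as Levenberg-Marquardt would try to drive toward zero by adjusting the camera parameters that produce the rays.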

This is a greatly simplified version of the algorithm and I left a number of steps out. I'm still tweaking it and trying to get the fastest optimization and least error possible. I also switched to the lens model in this paper by Juho Kannala and Sami S. Brandt. In addition to the physical matrix for each camera, the optimization algorithm also adjusts the distortion parameters for the lenses. Currently, I'm using the same parameters for all lenses but I may switch to individual adjustments in the future.

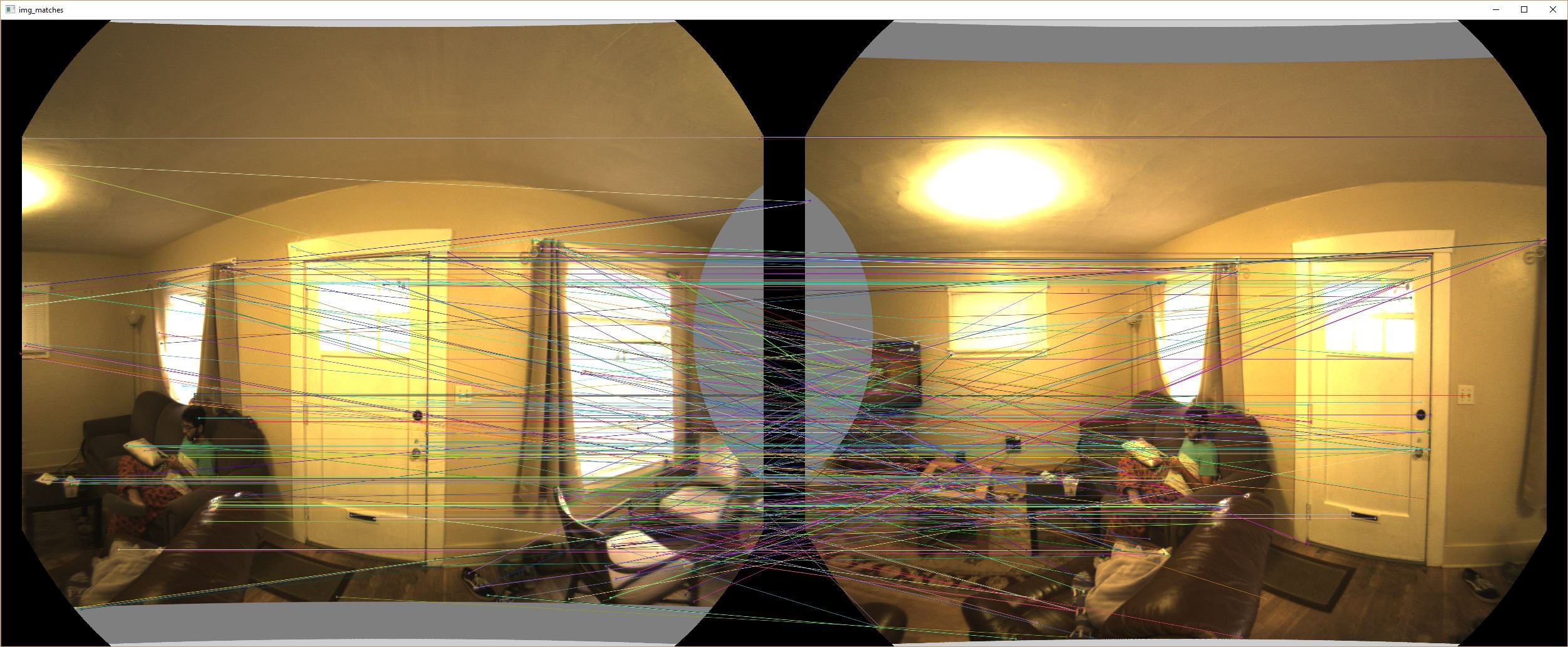

Also, here's a visual representation of the feature-finding process.

Here are three of the eight original images that we get from each camera:

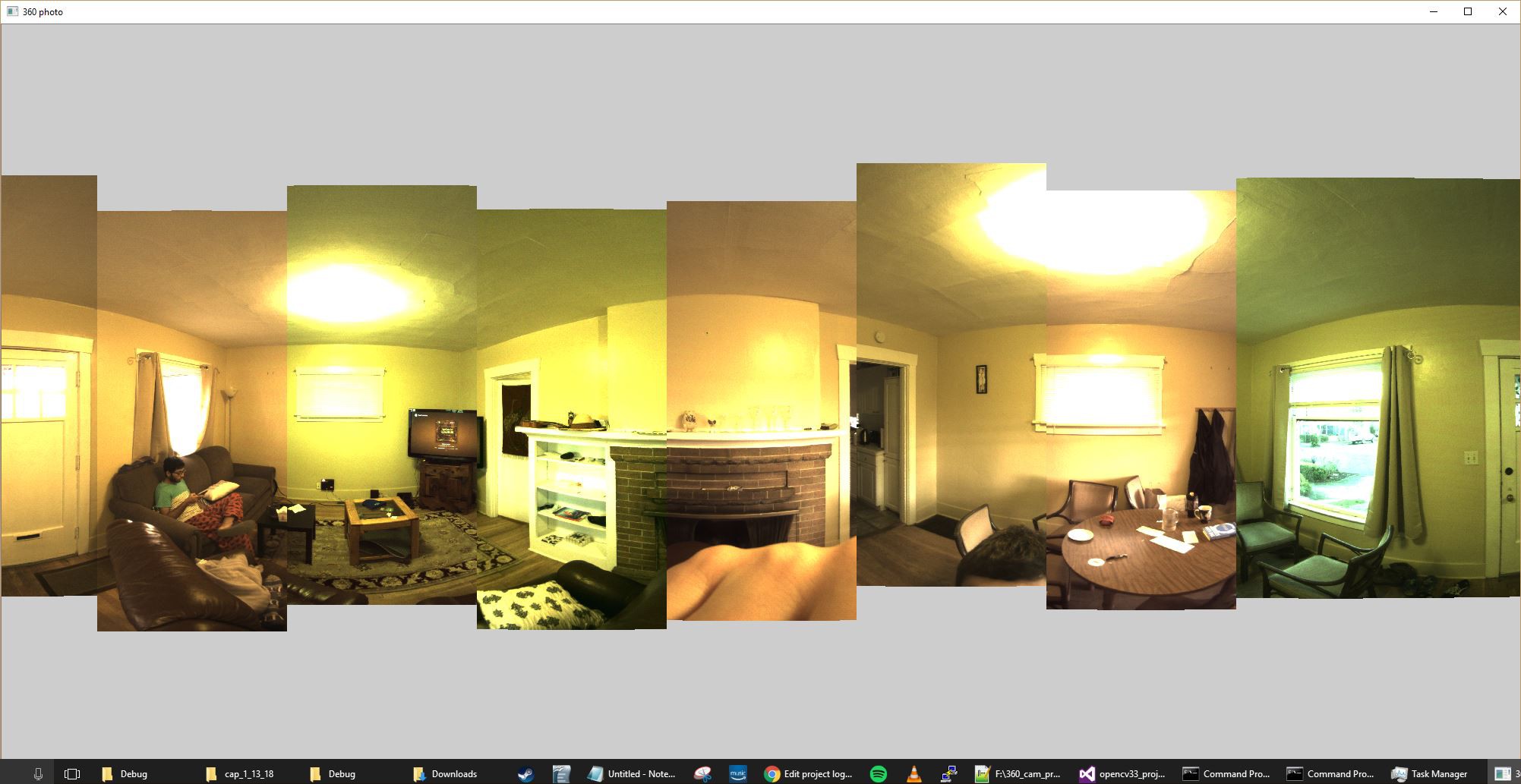

The 8 images are then de-warped and converted to spherical projection. Here is the initial 360 photo produced from the guess parameters.

It looks pretty decent, but there are clear vertical misalignments between some of the images.

Using SURF, features are extracted from each image and matched to the features in the image from the next camera to the left, as shown below.

Features that are found in images from three adjacent cameras are kept and added to a list. An example of one of these features is shown below.

You can clearly see my roommate's shirt in each of the three original images. The coordinates of the shirt are reversed from the spherical projection to coordinates in the original projection, and used to guess a 3D vector from each camera. If the parameters for the cameras are perfect, these vectors should intersect in 3D space at the point where the shirt is, relative to the cameras. The iterative algorithm works to refine the 3D parameters for each camera to get the vectors for each of the features to align as closely as possible. Once the algorithm is done, the new parameters for each camera are displayed and saved to a file that can be read by the stitching software.

Here's the left-eye view of the final stitched 360 photo:

![]()

As you can see, there's still some distortion, but the vertical misalignment has been greatly reduced.

-

Optical Flow

11/15/2017 at 18:12 • 0 comments

It's been a while since my last update, not because I've stopped working on this project, but because I've been sinking almost every minute of my free time into rewriting the stitching software. The output is finally decent enough that I figured it's time to take a break from coding, install the other four cameras, and write a new post about what I've been doing.

A few days after my last log entry, I happened across Facebook's Surround 360 project, an amazing open-source 360 camera. The hardware was pretty cool but it cost $30,000, which was a little outside my budget. What really caught my eye was their description of the stitching software, which uses an image processing technique called "optical flow" to seamlessly stitch their 360 images.

Optical flow is the movement of a point or object between two images. It can be time-based and used to detect how fast and where things are moving. In this case, the two images are taken at the same time from different cameras that are located next to each other, so it tells us how close the point is. If the object is very far away from the cameras, there will be no flow between the images, and it will be at the same location in both. If the object is close, it will appear shifted to the left in the image taken from the right camera, and shifted to the right in the image taken from the left camera. This is also known as parallax, and it's part of how your eyes perceive depth.
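To put rough numbers on the parallax described above, the sketch below estimates how far a point shifts between two side-by-side cameras. It assumes an idealized setup that is not taken from this project: the point sits straight ahead of the midpoint between the cameras, and the panorama spans 360 degrees across `panoramaWidthPixels` columns. The function names are hypothetical.

```cpp
#include <cmath>

// Angle (radians) between the two cameras' lines of sight to a point that is
// distanceMeters straight ahead of the midpoint between them.
double parallaxRadians(double baselineMeters, double distanceMeters) {
    return 2.0 * std::atan(baselineMeters / (2.0 * distanceMeters));
}

// The same parallax expressed as a horizontal shift in pixels, for a panorama
// whose width covers a full 360 degrees.
double parallaxPixels(double baselineMeters, double distanceMeters,
                      int panoramaWidthPixels) {
    const double kTwoPi = 6.283185307179586;
    return parallaxRadians(baselineMeters, distanceMeters) / kTwoPi *
           panoramaWidthPixels;
}
```

With a 64mm baseline, `parallaxPixels(0.064, distance, width)` shrinks toward zero as `distance` grows, which is the "no flow for far objects" behavior described above. Nearby objects produce shifts of many pixels.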

With optical flow, we can create a "true" VR image. That means that every column of pixels ideally becomes the column of pixels that would be seen if you physically placed a camera at that location. This allows a seamless 3D image to be viewed at any point in the 360 sphere. With my previous stitching algorithm, only the columns at the center of the left and right eye images for each camera were accurate representations of the perspective from that angle. Between these points, the perspective became more and more distorted as it approached the midpoint between the two cameras. If we use optical flow, we can guess where each point in the image would be located as seen by a camera at each column in the image.

This is easier said than done, however. I decided to roll my own feature-matching and optical-flow algorithm from the ground up, which, in hindsight, may have been a little over-ambitious. I started off developing my algorithm on a set of clean example stereo image pairs, and it took two weeks to get an algorithm working with decent results. Using it with the images from the 360 camera opened a whole other can of worms. It took redoing the lens calibration, adding color correction, and several rewrites of the feature-matching algorithm to get half-decent results.

Here's a re-stitched image using the new algorithm:

![]()

As you can see, there's still plenty of work to be done, but it's a step in the right direction! I'll upload my code so you can check out my last few weeks of work.

-

Success!

10/16/2017 at 08:08 • 1 comment

TL;DR: I've successfully captured, warped and stitched a 200-degree stereoscopic photo! Check it out in the photo gallery. Full 360 will come soon, once 4 more cameras show up.

After the last log, the project went dormant for a couple weeks because I was waiting on new lenses. However, the new ones arrived about a week ago and I was very happy to see that they have a much wider field of view! They are so wide that it seems unlikely that their focal length is actually 1.44mm. I'm not complaining, though. Below is a photo from one of the new lenses.

![]()

The field of view on these lenses is pretty dang close to 180 degrees, and as you can see, the image circle is actually smaller than the image sensor. There is also a lot more distortion created than there was with the older lenses. This caused some serious issues with calibration, which I'll cover below.

Camera Calibration

In order to create a spherical 360 photo, you need to warp your images with a spherical projection. I found the equations for spherical projection on this page, and they're pretty straightforward. However, the projection requires a rectilinear (undistorted) input image, and the images from the new lenses are pretty dang far from rectilinear. So far, in fact, that the standard OpenCV camera calibration example completely failed to calibrate when I took a few images with the same checkerboard that I used to calibrate the old lenses.

This required some hacking on my part. OpenCV provides a fisheye model calibration library but there is no example of its usage that I can find. Luckily, most of the functions are a 1 to 1 mapping with the regular camera calibration functions, and I only had to change a few lines in the camera calibration example! I will upload my modified version in the files section.

Cropping and Warping

Once I got calibration data from the new lenses, I decided to just crop the images from each camera so the image circle was in the same place, and then use the calibration data from one camera for all four. This ensured that the images would look consistent between cameras and saved me a lot of time holding a checkerboard, since you need 10-20 images per camera for a good calibration.

Stitching

OpenCV provides a very impressive built-in stitching library, but I decided to make things hard for myself and try to write my own stitching code. My main motivation for this was the fact that the images would need to wrap circularly for a full 360-degree photo, and they would need to be carefully lined up to ensure correct stereoscopic 3D. I wasn't able to figure out how to get the same positioning for different images or do a fully wrapped image with the built-in stitcher.

The code I wrote ended up working surprisingly decently, but there's definitely room for improvement. I'll upload the code to the files section in case anyone wants to check it out.

The algorithm works as follows:

The image from each camera is split vertically down the middle, to get the left and right eye views. After undistortion and spherical warping, the image is placed on the 360 photo canvas. It can currently be moved around with WASD, or an X and Y offset can be manually entered. Once it has been placed correctly and lined up with the adjacent image, the overlapping area of each image is cut out into its own Mat. The absolute pixel intensity difference between these two Mats is calculated and then blurred (the snippet uses OpenCV's blur(), a normalized box filter, rather than a true Gaussian). A for() loop iterates through each row in the difference as shown in the code snippet below:

    // Per-pixel absolute difference between the two overlapping regions
    Mat overlap_diff;
    absdiff(thisoverlap_mat, lastoverlap_mat, overlap_diff);

    // Smooth the difference with a 60x60 box filter
    Mat overlap_blurred;
    blur(overlap_diff, overlap_blurred, Size(60, 60));

    std::vector<int> cut(overlap_blurred.rows); // chosen cut column for each row
    int oldMin_index = 0;
    for (int y = 0; y < overlap_blurred.rows; y++) {
        int min_index = 0;
        int min_intensity = 10000;
        for (int x = 0; x < overlap_blurred.cols; x++) {
            Vec3b color = overlap_blurred.at<Vec3b>(Point(x, y));
            // Score = image difference + distance from the previous row's cut
            //         + distance from the center of the overlap (all weights are 1)
            int intensity = (color[0] + color[1] + color[2]) * 1;
            if (y > 0) intensity += (abs(x - oldMin_index) * 1);
            intensity += (abs(x - (overlap_blurred.cols / 2)) * 1);
            if (intensity < min_intensity) {
                min_index = x;
                min_intensity = intensity;
            }
        }
        // Highlight the pixel where the cut was made, for visualization purposes
        overlap_blurred.at<Vec3b>(Point(min_index, y))[0] = 255;
        oldMin_index = min_index;
        cut[y] = min_index;
    }

Each pixel in each row is given a score, and the pixel with the lowest score is chosen to be where the cut is placed between the two images. The blur keeps the cut line from being jagged and rough, which would cause strange artifacts in the output.

I also implemented very simple feathering along the cut line, but the code is too ugly to post here. This full stitching process is repeated for the left and right halves of each image, and the two stitched images are vertically stacked to create a 360 3D photo! It's not a full 360 degrees yet because I only have 4 cameras, but I just need to order 4 more, hook them up and I should have some glorious 360-degree 3D photos. If you have any sort of VR headset, check out the attached photo to see my current progress.
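For readers wondering what simple feathering along a cut line can look like, here is one possible version. It is not the code from this project, which was not posted. It blends a single row of grayscale values with a linear ramp centered on the cut column. The function name, the `featherHalfWidth` parameter, and the choice of a linear ramp are all assumptions.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Blends one row of two overlapping images around the cut column.
// Left of (cutX - featherHalfWidth) the output is purely leftRow, right of
// (cutX + featherHalfWidth) it is purely rightRow, and in between the weight
// ramps linearly. Both rows must have the same length.
std::vector<std::uint8_t> featherRow(const std::vector<std::uint8_t>& leftRow,
                                     const std::vector<std::uint8_t>& rightRow,
                                     int cutX, int featherHalfWidth) {
    std::vector<std::uint8_t> out(leftRow.size());
    for (std::size_t i = 0; i < out.size(); ++i) {
        const int x = static_cast<int>(i);
        double leftWeight;
        if (featherHalfWidth <= 0) {
            leftWeight = (x < cutX) ? 1.0 : 0.0;  // hard cut, no feathering
        } else {
            leftWeight = (cutX + featherHalfWidth - x) / (2.0 * featherHalfWidth);
            leftWeight = std::max(0.0, std::min(1.0, leftWeight));
        }
        const double blended =
            leftWeight * leftRow[i] + (1.0 - leftWeight) * rightRow[i];
        out[i] = static_cast<std::uint8_t>(blended + 0.5);  // round to nearest
    }
    return out;
}
```

Applied row by row with `cutX` taken from the per-row cut positions, this would soften the seam without changing where it falls. A color image would apply the same weights to each channel.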

Next Steps

Obviously, the most important step is to get 4 more cameras to bring it up to 360 degrees. However, there are many other things that I'll be working on in addition, including:

- JPEG/Video Capture

- Evening out white balance/exposure between cameras

- Better stitching

- Onboard warping and stitching

- Running my image-capture OpenCV software in Linux on the board when the board is started, so a monitor isn't needed to capture images

- Making a full housing with battery pack and controls

- Adding a 9th vertically facing camera to capture the sky

-

Lenses...

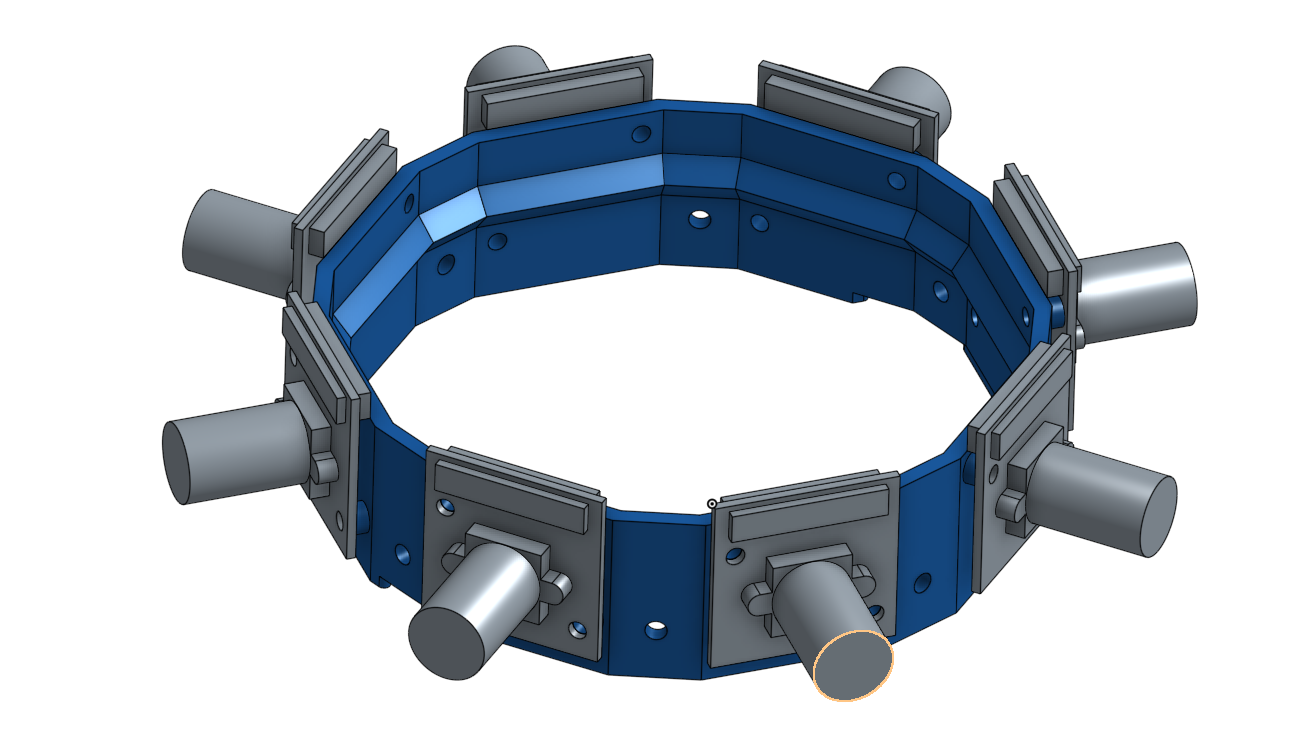

09/24/2017 at 01:23 • 0 comments

The layout for my camera is an octagon with each camera lens positioned approximately 64mm (the average human interpupillary distance) from those next to it. A 3D image is constructed by using the left half of the image from each camera for the right eye view and the right half of each image for the left eye view. That way, if you are looking forward, the images you should be seeing in front of you should be from two cameras spaced 64mm apart. I created some shapes in OnShape to help myself visualize this.

The picture above is a top-down view of the camera ring. The blue triangle is the left half of the field of view of the camera on the right. The red triangle is the right half of the field of view of the camera on the left. By displaying the view from the blue triangle in your right eye and the red triangle to your left eye, it will appear to be a stereoscopic 3D image with depth. By stitching together the views from the blue triangles from all 8 cameras and feeding it to your right eye, and doing the opposite with the red triangles, it will theoretically create a full 360 degree stereoscopic image (as long as you don't tilt your head too much).

For this to work, each camera should have at least 90 degrees of horizontal field of view (FOV). To keep the left and right eye images isolated, each camera must provide 360/8 = 45 degrees of horizontal FOV to each of the two eye images, for 2 * 45 = 90 degrees in total. However, you want at least a few degrees extra to seamlessly stitch the images.

Focal Lengths And Sensor Sizes

I figured I'd play it safe when I started and get some lenses with plenty of FOV to play around with, and then go smaller if I could. Since the OV5642 uses the standard M12 size lenses, I decided to just order a couple 170 degree M12 lenses from Amazon. However, when I started using them, I realized quickly that the horizontal field of view was far less than 170 degrees, and even less than my required minimum of 90 degrees. It turned out that the actual sensor size of the OV5642 is 1/4", far smaller than the lens's designed image size of 1/2.5". Below is an image of the first lens I bought, which looks pretty much the same as the rest.

![]()

At that point, I thought that I had learned my lesson. I did some quick research and found out that focal length is inversely proportional to a lens's field of view, and I figured that if the FOV of my current lenses was just under 90 degrees, picking lenses with a slightly shorter focal length would do the trick. My next choice was a 1.8mm lens from eBay. The field of view was even wider than before... but still not larger than 90 degrees. The OV5642's image sensor is just too small.

![]()

The image above is from two cameras that are facing 90 degrees apart. If the lenses had a field of view of over 90 degrees, there would be at least a little bit of overlap between the images.
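The relationship between focal length, sensor size, and field of view discussed above can be estimated with two idealized lens models. This is only a rough sketch: real M12 lenses follow neither model exactly, and the sensor width you pass in is your own assumption (for example, about 3.63mm if the OV5642's 2592 columns have a 1.4 µm pitch, which should be checked against the datasheet). The function names are hypothetical.

```cpp
#include <cmath>

const double kPi = 3.14159265358979323846;

// Horizontal FOV of an ideal rectilinear (pinhole-like) lens:
// fov = 2 * atan(sensorWidth / (2 * focalLength)).
double rectilinearFovDegrees(double sensorWidthMm, double focalLengthMm) {
    return 2.0 * std::atan(sensorWidthMm / (2.0 * focalLengthMm)) * 180.0 / kPi;
}

// Horizontal FOV of an ideal equidistant fisheye lens, where the image radius
// is r = focalLength * angle, so fov = sensorWidth / focalLength (radians).
double equidistantFovDegrees(double sensorWidthMm, double focalLengthMm) {
    return (sensorWidthMm / focalLengthMm) * 180.0 / kPi;
}
```

Both functions show the same trend: for a fixed focal length, a narrower sensor sees a narrower field of view, and for a fixed sensor, a shorter focal length widens it. That is why a lens sold as "170 degrees" for a larger image format covers far less on a small sensor.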

Now that I'm $60 deep in lenses I can't use, I'm really hoping that the next ones work out. I couldn't find any reasonably priced lenses with a focal length of less than 1.8mm in North America, so I have some 1.44mm lenses that will show up from China in a couple weeks and I'm crossing my fingers.

-

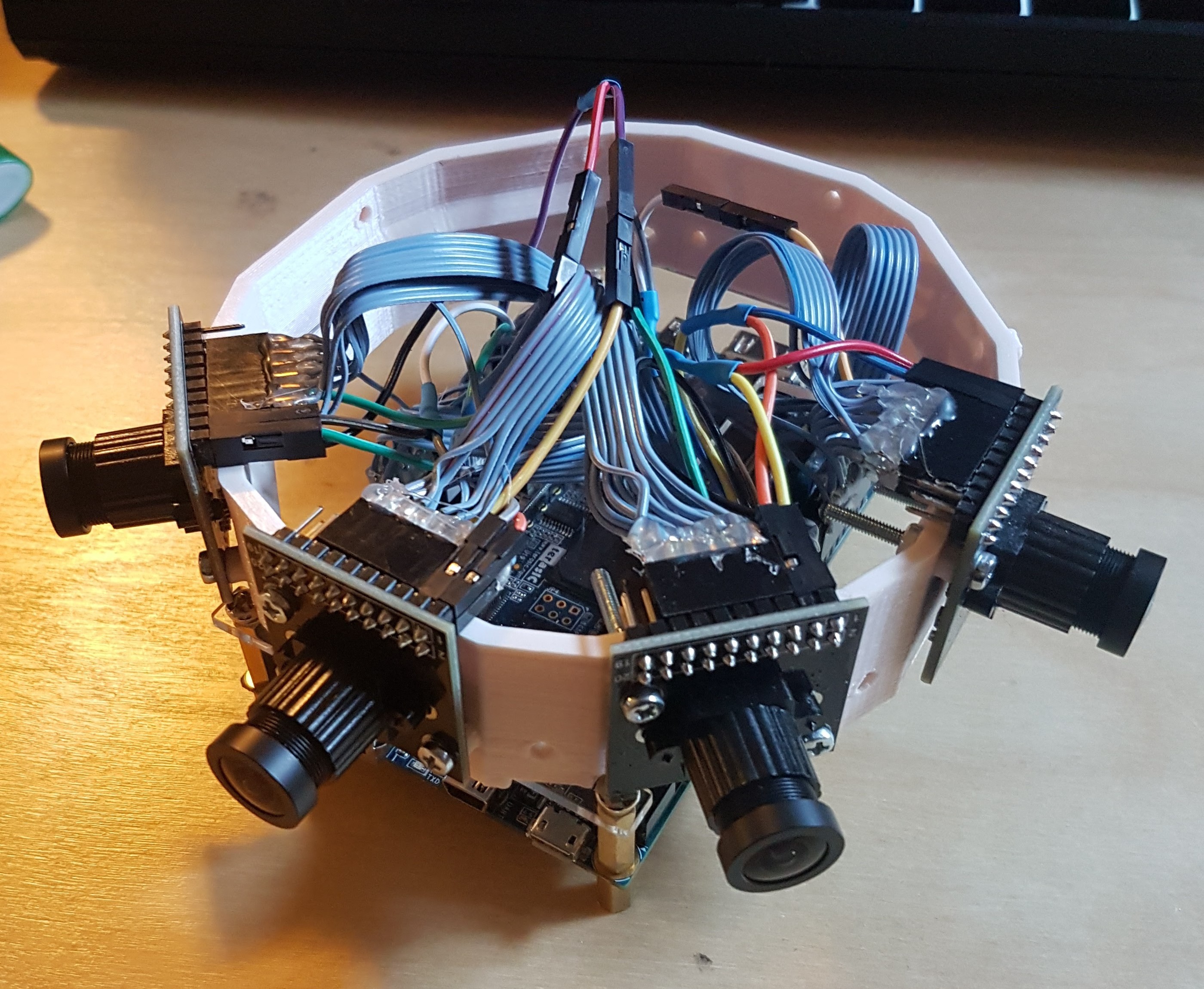

Building a Frame

09/23/2017 at 23:38 • 0 comments

After successfully capturing images from my OV5642 module, I decided it was time to build a camera mount to (eventually) hold all 8 cameras. I used OnShape, a free and easy-to-use online CAD program, to design it. The result is pretty bare-bones for now, but it sits on top of the development board, holds all the cameras in place and gives convenient access to the camera wires and board pins.

I got it printed for only $9 in a couple of days by a nearby printer using 3D Hubs. Here's a link to the public document in OnShape, in case you wish to print or modify it yourself.

I then used some old ribbon cable and quite a few female headers to create the monstrosity that is shown below.

![]()

-

Progress!

09/18/2017 at 03:00 • 0 comments

I've made quite a bit of progress since the last log, and run into a few road blocks on the way. Here's where I'm at right now.

Image Capturing

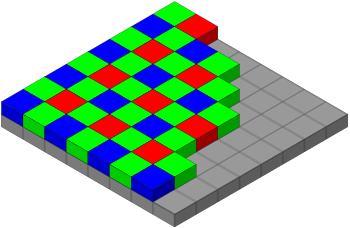

I copied the "Processor Raw" register settings from the OV5642 software application guide into my custom I2C master and used this to successfully get Bayer RGB data from the sensor! This outputs a 2592*1944 array of 10-bit pixels. In case you aren't familiar with Bayer image formatting, here's how it works:

![]()

On the actual image sensor, each pixel captures one color. Half of them are green, and the rest alternate between blue and red. To get the full RGB data for a pixel, the colors of the nearest pixels are averaged. Looking at a Bayer image like the ones I captured at first, it just looks like a grayscale photo. However, if you look closely, you can see the Bayer grid pattern. OpenCV includes a function called cvtColor that makes it incredibly easy to convert from Bayer to regular RGB.
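The neighbor averaging described above can be sketched as a simple bilinear demosaic of one interior pixel. This is an illustration only, not what `cvtColor` does internally and not code from this project. It assumes an RGGB layout (red at even row and even column), which may not match the OV5642's actual output order, and the `Rgb` type and function name are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

struct Rgb {
    int r, g, b;
};

// Bilinear demosaic of one interior pixel of a row-major Bayer mosaic.
// Assumed layout (RGGB): even rows are R G R G..., odd rows are G B G B...
// Requires 1 <= x < width - 1 and 1 <= y < height - 1.
Rgb demosaicAt(const std::vector<std::uint16_t>& raw, int width, int x, int y) {
    auto p = [&](int px, int py) {
        return static_cast<int>(raw[static_cast<std::size_t>(py) * width + px]);
    };
    const int cross = (p(x - 1, y) + p(x + 1, y) + p(x, y - 1) + p(x, y + 1)) / 4;
    const int diag = (p(x - 1, y - 1) + p(x + 1, y - 1) +
                      p(x - 1, y + 1) + p(x + 1, y + 1)) / 4;
    const int horiz = (p(x - 1, y) + p(x + 1, y)) / 2;
    const int vert = (p(x, y - 1) + p(x, y + 1)) / 2;
    const bool evenRow = (y % 2 == 0);
    const bool evenCol = (x % 2 == 0);

    if (evenRow && evenCol) return {p(x, y), cross, diag};    // red site
    if (!evenRow && !evenCol) return {diag, cross, p(x, y)};  // blue site
    if (evenRow) return {horiz, p(x, y), vert};  // green site on a red row
    return {vert, p(x, y), horiz};               // green site on a blue row
}
```

Each output channel is either the pixel's own sample or the average of the nearest samples of that color, which is why a flat-colored area comes out with the same RGB value at every site.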

Memory Writer

In order to take the streaming image data from the OV5642's Digital Video Port interface and write it to the Cyclone's DDR3 Avalon Memory-Mapped Slave, I had to create a custom memory writer in VHDL. I might have made it a little more complicated than I needed to, but it works fine and that's all that matters. I will upload it in the Files section as mem_writer.vhd.

Saving the Images in Linux

To read the images from DDR3 and save them to the MicroSD card, I re-purposed one of the OpenCV examples included on the LXDE Desktop image. I didn't feel like messing around with creating a Makefile, so I just copied the directory of the Houghlines example, changed the code to do what I wanted, and rebuilt it. Here are the most important parts:

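    #include <fcntl.h>     // open, O_RDWR, O_SYNC
    #include <sys/mman.h>  // mmap, PROT_*, MAP_*
    #include <iostream>

    // Open physical memory so the reserved DDR3 region can be mapped
    int m_file_mem = open("/dev/mem", (O_RDWR | O_SYNC));

    // Map one frame (0x4CE300 bytes) starting at physical address 0x21000000
    void *virtual_base = mmap(NULL, 0x4CE300, (PROT_READ | PROT_WRITE), MAP_SHARED, m_file_mem, 0x21000000);
    if (virtual_base == MAP_FAILED) {
        cout << "\nMap failed :(\n";
    }

I set up my OV5642 stream-to-DDR3 writer module to write to the memory address 0x21000000, and simply used the Linux mmap() call on /dev/mem to get access to the DDR3 at this location. The size of this mapping, 0x4CE300 bytes, is the size of one frame from the imager (2592 * 1944 pixels at one byte per pixel).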

Then, the OpenCV commands to save the image from DDR3 onto the MicroSD card are very simple.

    Mat image = Mat(1944, 2592, CV_8UC1, virtual_base);
    imwrite("output.bmp", image);

This doesn't include the Bayer-to-RGB conversion, which was a simple call to cvtColor.

In my next log, I will discuss the parts of the project that have gone less smoothly...

-

Linux on the dev board

09/01/2017 at 04:49 • 0 comments

The DE10-Nano board ships with a MicroSD card that is loaded with Angstrom Linux. However, you can also download a couple of other MicroSD images with different Linux flavors from the Terasic website. Since I planned to use OpenCV to capture and stitch images, I downloaded the MicroSD image with LXDE desktop, which handily already has OpenCV and a few OpenCV example programs on it.

U-Boot

The DE10-Nano uses a bootloader called U-Boot to load Linux for the ARM cores to run and to load an image onto the FPGA. I don't know exactly how it works, but I've been able to get it to do my bidding with a bit of trial and error. The first thing I attempted to do was set aside some space in the DDR3 memory for the FPGA to write to, so the ARM wouldn't attempt to use that space for Linux memory. This turned out to be fairly simple, as I was able to follow the example "Nios II Access HPS DDR3" in the DE10-Nano User Manual. This example shows how to communicate with U-Boot and Linux on the DE10 using PuTTY on your PC, and how to set the boot command in U-Boot so that Linux only uses the lower 512MB of the DDR3. Here's the pertinent snippet that you enter into U-Boot before it runs Linux:

setenv mmcboot "setenv bootargs console=ttyS0,115200 root=/dev/mmcblk0p2 rw \ rootwait mem=512M;bootz 0x8000 - 0x00000100"

My next task was a bit more difficult. I also needed my FPGA to be able to access the DDR3 to write images for my OpenCV program in Linux to access. This required the use of a boot script that tells U-Boot to load the FPGA image from the SD card and then enable the FPGA-to-DDR3 bridge so it can be used.

I used the helpful instructions on this page to create my boot script:

fatload mmc 0:1 $fpgadata soc_system.rbf; fpga load 0 $fpgadata $filesize; setenv fpga2sdram_handoff 0x1ff; run bridge_enable_handoff; run mmcload; run mmcboot;

And that did it! I had my FPGA writing directly to the DDR3. The next step was to actually get pictures from the cameras...

VR Camera: FPGA Stereoscopic 3D 360 Camera

Building a camera for 360-degree, stereoscopic 3D photos.