-

SparkFun - "Enginursday" Blog Feature

10/26/2017 at 22:49

We were featured on the Enginursday blog on SparkFun today!

This is an exciting bit of exposure. It's always great to get your name out there, but I'm growing to appreciate the contacts I've been making through comments. Now to keep gaining steam.

https://www.sparkfun.com/news/2507


Marshall Taylor is a SparkFun engineer who has been following SNAP and offering advice pretty much since the beginning. I guess he finally decided the project had grown up enough to spark the interest of their community. Hopefully we can keep up the pace and move into hardware come spring. I wouldn't want to keep our new audience waiting.

-

Proto I Hardware Captures

10/21/2017 at 09:39

Here are several captures taken from our Proto I hardware while attempting to clean up the sound. The low resolution and depth noise inherent to the R200 can make the audio output pretty noisy. Sorry for the low volume!

Special thanks to Morgan for being such a good sport and waving all night.

-

Proto I BOM

10/21/2017 at 09:27

-

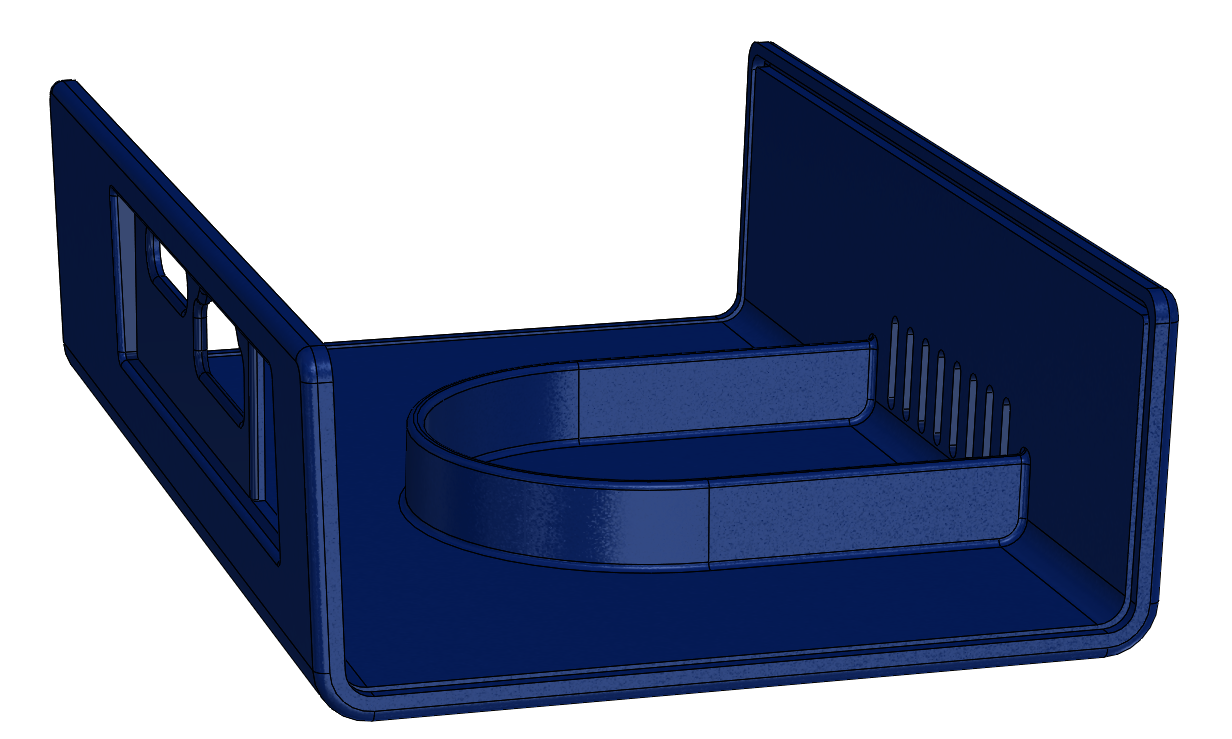

Updated R200 Prototype Hardware

10/21/2017 at 08:59

The next generation of SNAP will still be using the R200. We plan on moving to a new sensor array in the spring of next year, but until then we have work to do!

To keep everyone on the team comfortable, we've designed a chassis for the UP board and are moving from the classic hot-glued headsets to a lighter-weight, fully printed version, as seen below.

The chassis for the UP board consists of an aluminum tray which is required to provide adequate grounding for the USB and Ethernet ports. The plastic lid SNAPs into place by rocking in over the side panel connectors. This small housing will likely be worn on a belt, with the battery pack tucked neatly into a back pocket.


Inside the lid is an integrated baffle which directs airflow from the vent slots in the side into the fan. Vent holes in the rear of the chassis then exhaust the warm air. This part is close to being ready for injection molding; however, certain features would require side pulls. The snap feature in particular may need to be reworked unless the part can be flexed from the core reliably.

The new headset is very simple, and not much to look at, but looks aren't everything. This assembly will weigh nearly two ounces less than the old version, which is a load off the user's nose!


-

Future Hardware Concepts

10/21/2017 at 08:37

Headset Development

The current headset is defined by the R200, which is an ungainly rectangular block that doesn't fit anywhere on your face. Comparing it to Geordi's visor is complimentary, but probably not a good sign for users who want to avoid the fashion statement.

The future SVO headset will take into account feedback we've gotten about our current design and keep the eyes free of obstacles, so that partially sighted individuals can take advantage of all of their senses. Some of our concept art explores integrating cameras into more popular eyewear to take advantage of the aesthetic benefits.

Some considerations for a headset like this:

- Flexure of the frame will cause misalignment of the cameras, which may make SVO impossible to accomplish.

- Headsets should come in both clear and shaded lens options.

- Three cameras may be better than two for achieving the desired 180° FoV.

- Each camera will require a controller which will take up much more space. This could be located behind the head, or merged with the earpiece.

- Communications lines may be routed and merged behind the head.

- It is probably not desirable to merge the earpieces directly with the headset. It would be inconvenient to need to remove/replace them each time the glasses were removed for cleaning or adjustment.

- Communications could be routed through the earpieces. USB connection to the earpiece could be made via magnetic jack for ease of use.
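The two-versus-three camera question in the list above comes down to simple angle arithmetic. Here is a rough sketch, assuming the cameras fan out about a common point and that each neighboring pair shares a fixed overlap. The function names and the example lens angles are hypothetical and not taken from any SNAP hardware. Keep in mind that stereo depth is only available where two views overlap, so the overlap is not wasted coverage.

```python
import math


def combined_fov_deg(n_cameras, camera_fov_deg, overlap_deg):
    """Total horizontal coverage of n cameras fanned out about a common point.

    Each neighbouring pair shares overlap_deg of view, so every camera
    after the first adds (camera_fov_deg - overlap_deg) of new coverage.
    """
    if n_cameras < 1:
        raise ValueError("need at least one camera")
    return n_cameras * camera_fov_deg - (n_cameras - 1) * overlap_deg


def cameras_needed(target_fov_deg, camera_fov_deg, overlap_deg):
    """Smallest camera count whose combined coverage reaches the target."""
    if overlap_deg >= camera_fov_deg:
        raise ValueError("overlap must be smaller than the camera FoV")
    # n*f - (n-1)*o >= T  rearranges to  n >= (T - o) / (f - o)
    n = math.ceil((target_fov_deg - overlap_deg) / (camera_fov_deg - overlap_deg))
    return max(1, n)


if __name__ == "__main__":
    # Hypothetical lenses: 90 degree FoV with 30 degrees shared between neighbours.
    print(cameras_needed(180, 90, 30))
    print(combined_fov_deg(3, 90, 30))
```

With those made-up numbers, two cameras fall short of 180° and a third is needed; wider lenses or less overlap would change the answer.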

Controller Development

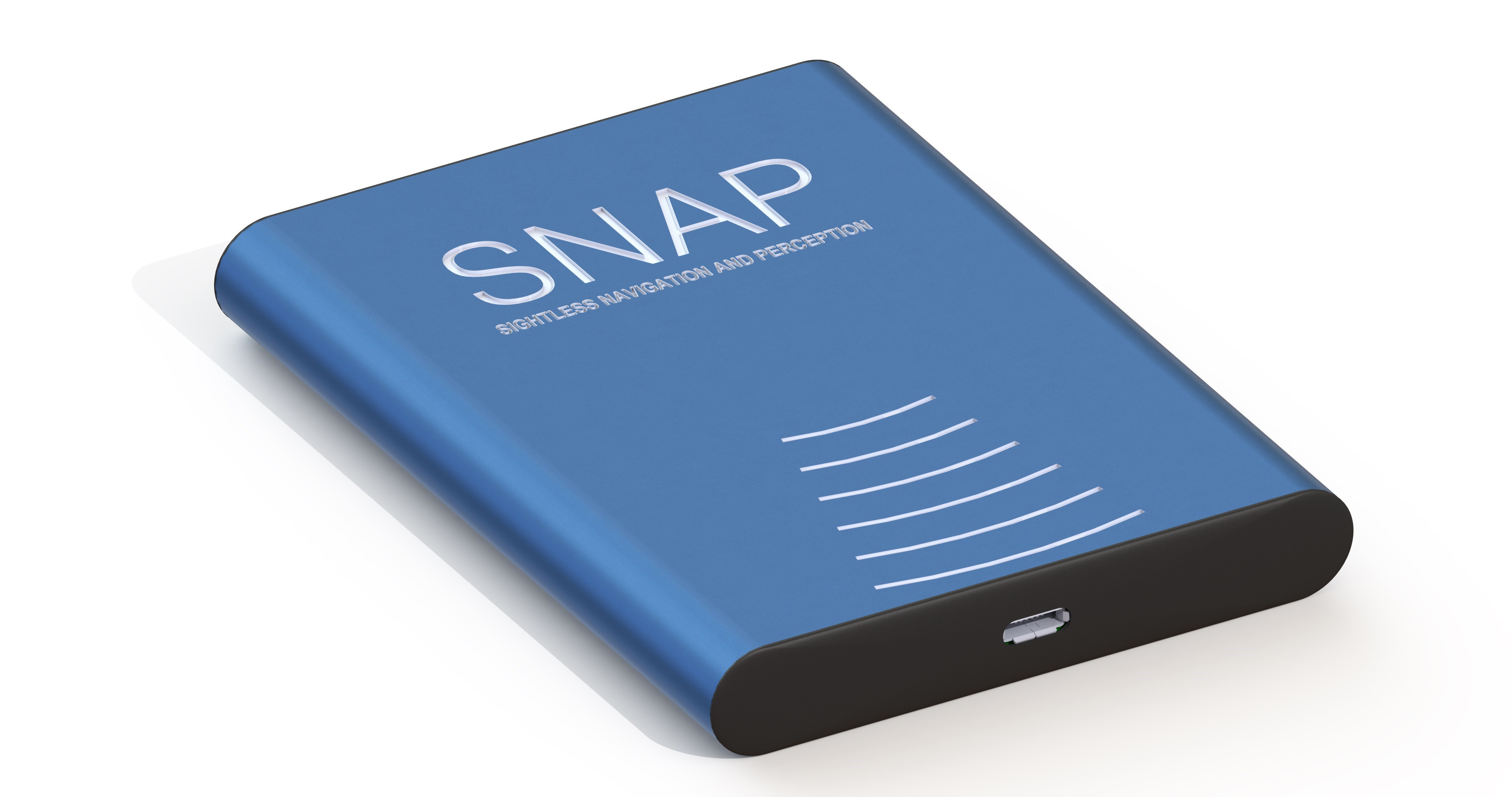

To reduce costs, the controller housing will be as simple as possible. This concept uses a preexisting aluminum extrusion profile which has been cut to length and anodized, forming the entirety of the chassis in one solid body. Milled features are shown in this image, although the intricacy of the text would likely prove to be cost prohibitive. Silk screen or labeling are more realistic options.


Some considerations for the chassis:

- Dimensions of chassis shown are 3x4x0.5 in

- Battery life can be extended by omitting a fan, but the board is likely to overheat unless extremely low power components can be utilized. Cell phone components may be sufficient.

- Plastic end caps will support the PCB at each end, pressing high powered components against one face of the chassis, while the battery pack takes up the majority of the underside of the board.

- Clear anodize would make for a more effective thermal solution.

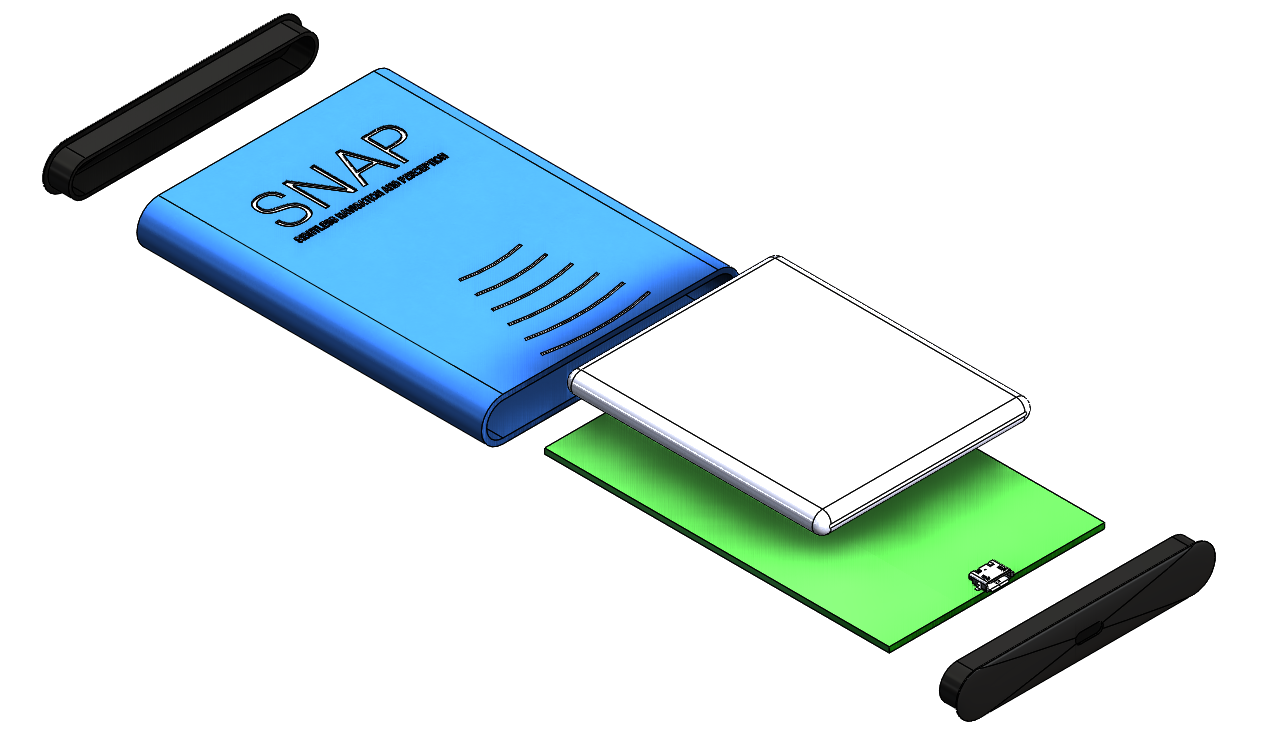

Exploded Assembly

With the end caps removed, we are able to slide the PCB out either end. These end caps will either glue in place, or snap in with an interstitial gasket. The former option would make for a better seal, possibly up to IP67 depending on the USB C connectors available. But can we resist making it SNAP together?

The FPGA/SoC would be placed on the far side of the board. Considering the size of the battery, this would nearly need to be a one sided board. It is likely that the battery will need to be larger still, and that the chassis will need to grow. The overall footprint is on par with an average cell phone, but because we will be powering two cameras and headphones and be running image manipulation continuously, it will consume quite a bit more power on average.

Better hardware estimates will be available after we have created an SVO prototype and have a firm grasp of the processing power required.


-

Stereo Visual Odometry

10/21/2017 at 07:55

SVO is looking to be our next sensor of choice. I want to discuss some of the pros and cons of this method, as well as compile some project learning resources.

To define the desired feature set of the vision sensor, we should first recognize that the sensor unit consists of not just the physical sensor parts, but the entire sensing system from the outside world right up to the depth map input where SNAP's modular feedback software takes over. We would like this system to feature the following, in no particular order of importance:

- Distance to objects (ok, this one is pretty important)

- Relative velocity of surroundings

- Edge and plane detection (or support for this)

- Low cost (monetary)

- Diverse surface compatibility

- Low noise

- High reliability

- Small Form-factor

There are a few other requirements such as ergonomics which we will take as a given. This list is in essence why SVO looks so good. The biggest shortcoming is in surface compatibility, as SVO has a hard time with unfeatured, clear, and reflective surfaces. Since that is true of all high resolution SLAM systems, it's hard to count as a negative. One thing to consider is that most of the SVO tutorials and tools are focused around SLAM techniques, and are dead set on absolute positioning, which we don't care about. That might mean that we can save processing (and coding) time by skipping those steps.

Chris Beall at Georgia Tech put out this extraordinarily awesome overview of what SVO entails which has made the process actually easy to understand, and look deceptively easy to accomplish. It makes sense to discuss methodology by following Mr. Beall's step by step process, so here goes:

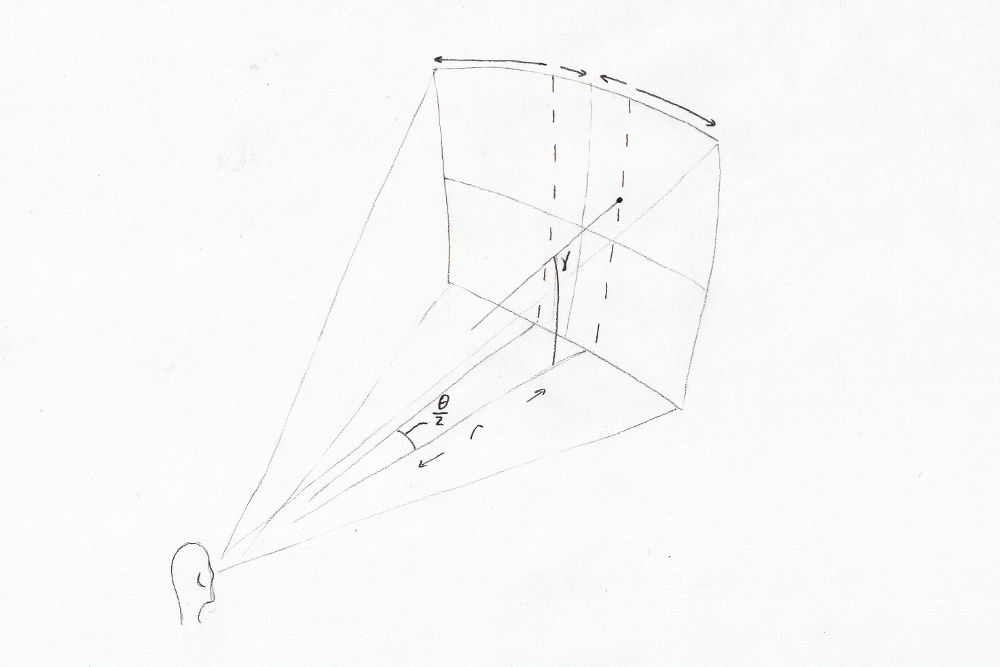

1) Epipolar (Stereo) Rectification

There is a nifty overview of image rectification in OpenCV using the "Pinhole Camera Model" which assumes the image is not distorted by lenses before being captured. This is clearly never the case, but if a camera is small enough, and the distance between the lens and image sensor is negligible, we can use the pinhole model with relatively little error. Adjustments can then be made for lens effects as discussed in this paper on rectifying images between >180° fish eye lens cameras.

My biggest question here is whether this is done realtime for each frame, or if you simply establish transformation arrays characteristic of the pair of cameras.
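For a rigidly mounted, calibrated camera pair, the common approach is the second option: the per-pixel lookup maps are computed once, and each frame is only pushed through them. In OpenCV that typically means `cv2.stereoRectify` and `cv2.initUndistortRectifyMap` at startup, then `cv2.remap` on every frame. The sketch below illustrates the same split in plain Python on a toy image. It is an assumption-laden stand-in: it uses a single radial coefficient `k1` for one camera rather than a real stereo calibration, and the function names are made up for illustration.

```python
def build_undistort_map(width, height, k1):
    """Precompute, once, the source pixel for every output pixel.

    Uses a one-coefficient radial model about the image centre as an
    illustrative stand-in for a real calibration.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    norm = max(cx, cy) or 1.0  # avoid dividing by zero on a 1x1 image
    lookup = []
    for v in range(height):
        row = []
        for u in range(width):
            x, y = (u - cx) / norm, (v - cy) / norm
            scale = 1.0 + k1 * (x * x + y * y)
            src_u = int(round(cx + x * scale * norm))
            src_v = int(round(cy + y * scale * norm))
            row.append((src_u, src_v))
        lookup.append(row)
    return lookup


def remap(frame, lookup, fill=0):
    """Apply the precomputed map to one frame (nearest-neighbour sampling)."""
    height, width = len(frame), len(frame[0])
    return [
        [
            frame[sv][su] if 0 <= su < width and 0 <= sv < height else fill
            for su, sv in row
        ]
        for row in lookup
    ]


if __name__ == "__main__":
    lookup = build_undistort_map(3, 3, k1=0.0)  # done once at startup
    frame = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(remap(frame, lookup))                 # done for every frame
```

The per-frame cost is then just a table lookup per pixel, which is why rectification itself is rarely the expensive step; the maps only need rebuilding if the cameras move relative to each other.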

Something to note on rectification: keeping your cameras closer together makes rectification easier, but also makes distance calculations more prone to error. Human vision accomplishes depth perception astoundingly well, but it does so by combining stereo depth perception with lens distortion and higher level spatial awareness. In our application we can likely accept more error in distance measurements than most SLAM enthusiasts, so long as we don't cause excessive noise in the far field.
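The baseline tradeoff can be put into numbers with the standard rectified-stereo relation Z = f·B/d (depth from focal length in pixels, baseline, and disparity). Differentiating gives a first-order depth error of roughly Z²/(f·B) per pixel of disparity error. The sketch below is only that arithmetic; the focal length and baselines in the example are hypothetical, not measured from any SNAP camera.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth of a point from its disparity in a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px, baseline_m, depth_m, disparity_error_px=1.0):
    """First-order depth uncertainty caused by a given disparity error.

    Grows with the square of distance and shrinks as the baseline widens.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    focal_px = 700.0  # hypothetical focal length in pixels
    for baseline_m in (0.05, 0.10):  # hypothetical camera spacings
        err = depth_error(focal_px, baseline_m, depth_m=3.0)
        print(f"baseline {baseline_m:.2f} m: about {err:.2f} m per pixel at 3 m")
```

Halving the baseline doubles the error at any given distance, and the squared distance term is why the far field gets noisy first.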

2) Feature Extraction

We recognized SIFT from OpenCV, but while reading up on their site, I was happy to find that there is also a SURF library. The Feature Detection and Description page has a great overview of both.

The real challenges here, so far as I can predict, will be in maintaining frame rate while casting enough points to prevent voids in the depth map, and writing our own feature extraction routines to home in on objects of interest. There is a good chance we will end up wanting to mesh or best fit planar surfaces so as not to drown our user's hearing in the sound of blank walls.

3) Stereo Correspondence

Once again OpenCV comes to the rescue with a page on Stereo Correspondence. This step seems to be more straightforward.

Unless I simply misunderstand, stereo matching is necessary to triangulate distances, but not much else. While there's a chicken and egg problem at hand, we might be able to skip matching some points to save time if we are running plane detection.
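To make the matching step concrete, here is a toy version of block matching on a single rectified scanline. It slides a small window from the left image along the same row of the right image and keeps the shift with the lowest sum of absolute differences (SAD). This is a generic textbook technique and not necessarily what the OpenCV page or the SNAP code uses; the function name, window size, and search range are all assumptions.

```python
def match_disparity(left_row, right_row, x, half_window=1, max_disparity=8):
    """Disparity of pixel x in the left row, found by minimising SAD.

    Assumes a rectified pair, so the match for left pixel x lies on the
    same row of the right image at x - d for some d >= 0.
    """
    lo, hi = x - half_window, x + half_window
    if lo < 0 or hi >= len(left_row):
        raise ValueError("window falls outside the row")
    patch = left_row[lo:hi + 1]
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if lo - d < 0:
            break  # candidate window would leave the image
        candidate = right_row[lo - d:hi - d + 1]
        cost = sum(abs(a - b) for a, b in zip(patch, candidate))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


if __name__ == "__main__":
    left = [0, 0, 0, 0, 0, 9, 5, 7, 0, 0]
    right = [0, 0, 9, 5, 7, 0, 0, 0, 0, 0]  # same feature, shifted 3 px left
    print(match_disparity(left, right, x=6))
```

Only the pixels that actually need a distance have to go through this search, which is where skipping points on an already detected plane could save time.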

4) Temporal Matching

Here's the OpenCV resource you've come to expect. As I understand it, this is expensive from a processing standpoint, but I am still fuzzy on details. It seems to me that if you are matching between cameras quickly, you could match between frames just as easily using the same method. Depending on whether rectification is done realtime or established in advance as I asked earlier, this may not be too terribly expensive.

We should remember that because we aren't looking for absolute positioning, the absolute magnitude of velocity is of secondary importance. A much less precise temporal matching (or temporal rectification) method could be employed to save time with little to no impact on the usability of the device.
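As a sketch of how coarse this could be, the snippet below estimates closing speed for one matched point from its depth in two consecutive frames, then reduces it to three bins. The deadband value and the bin names are hypothetical choices for illustration, not part of SNAP.

```python
def closing_speed(depth_prev_m, depth_curr_m, frame_dt_s):
    """Approximate speed toward the wearer; positive means getting closer."""
    if frame_dt_s <= 0:
        raise ValueError("frame interval must be positive")
    return (depth_prev_m - depth_curr_m) / frame_dt_s


def classify_motion(speed_mps, deadband_mps=0.2):
    """Reduce a noisy speed estimate to a coarse label.

    The deadband absorbs small frame-to-frame depth jitter.
    """
    if speed_mps > deadband_mps:
        return "approaching"
    if speed_mps < -deadband_mps:
        return "receding"
    return "steady"


if __name__ == "__main__":
    speed = closing_speed(3.0, 2.9, 1 / 30)  # 10 cm closer over one 30 fps frame
    print(classify_motion(speed))
```

A label like this tolerates large errors in the magnitude, which fits the point above that the exact velocity matters less than whether something is coming at you.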

5) Other SLAM Topics

Due to our lack of interest in absolute positioning, relative pose estimation and absolute orientation are not necessary.

-

Promo Video

10/21/2017 at 06:03

We've made a new Promo Video!

-

2017 Capstone Senior Design

10/21/2017 at 05:59

Much of the coding behind SNAP was accomplished with the help of CS students at the University of Idaho through a Capstone Senior Design project. This year, students will be focusing on packaging the simulator into an easily distributed installer. They will also be adding features so that we can more easily manipulate the sound outputs.

Overview of Senior Design Goals

10/20/2017 Status and Planning

In January, the team will begin looking into Stereo Visual Odometry systems as an alternative to the R200 camera. If successful, movement in this direction will represent a huge jump from pieced together 3rd party boards and devices, to developing our own purpose built hardware.

-

Hardware Troubleshooting

10/20/2017 at 19:46

The RealSense is extremely easy to work with, and has provided a great development platform to jump off from, but for a number of reasons this device is simply not suited to the application. Here we discuss the shortcomings of the R200, what we have learned from it, and what we think we should do in the future.

1) Narrow field of view:

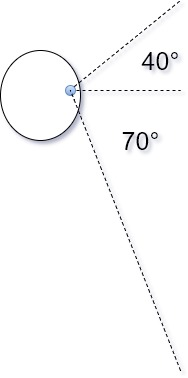

The R200 sports a 59° azimuthal and 46° vertical Field of View (FOV). This narrow perspective makes it difficult to keep track of what objects are directly to the sides, and undermines our utilization of binaural localization. Ideally we would like to have a full hemispherical FOV, providing 180° feedback in both axes, although the sensors required to achieve this might be ungainly. To limit bulk, it is likely acceptable to restrict the vertical axis to 110°, with an offset as shown below. This corresponds roughly to the FOV of human vision, for which we have a lot of data.
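To get a feel for how narrow 59° is, the width of the scene covered at a distance d is 2·d·tan(FOV/2), and whatever is left of the desired 180° is split between the two sides as blind angle. The sketch below is just that geometry; the function names are made up for illustration, and only the 59° and 180° figures come from the text above.

```python
import math


def coverage_width_m(fov_deg, distance_m):
    """Width of the scene spanned by a given FOV at a given distance."""
    if not 0 < fov_deg < 180:
        raise ValueError("FOV must be between 0 and 180 degrees")
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def blind_angle_per_side_deg(fov_deg, target_fov_deg=180.0):
    """Angle on each side that falls outside the sensor's view."""
    return max(0.0, (target_fov_deg - fov_deg) / 2.0)


if __name__ == "__main__":
    print(f"{coverage_width_m(59, 2.0):.2f} m wide at 2 m")
    print(f"{blind_angle_per_side_deg(59):.1f} degrees unseen per side")
```

At 2 m the 59° azimuth spans only about 2.3 m, and roughly 60° on each side goes unreported, which is where binaural cues would otherwise be most useful.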

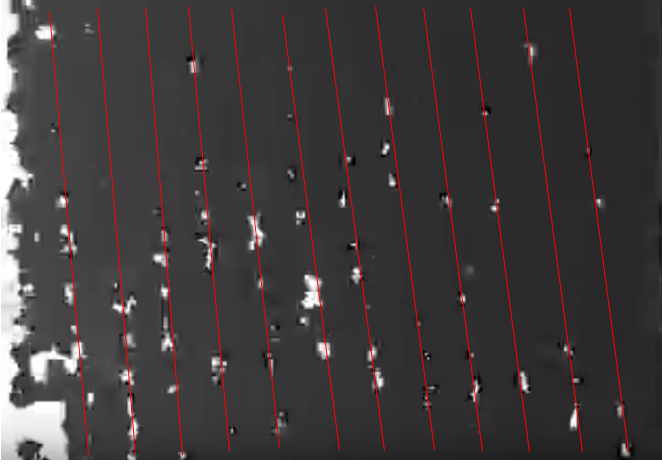

2) Poor Resolution

The depth camera has limited resolution which prevents us from increasing the audio resolution. The image below is of a wall when viewed at an angle. The visible striping (red lines added) is the space between measured depth layers. This becomes much more apparent when you watch video of the output. Intel likely left gaps in the resolution to reduce incorrect measurements due to noise. Unfortunately for us, all of those spaces cause gaps of silence which make the output sound choppy. Those aren't the White Stripes we want to listen to!

While spending more on a nicer camera may let us crank up the resolution, and extensive filtering may help reduce the audio impacts, these sorts of artifacts are going to be present in depth cameras no matter what we do. This is one of the major reasons we've been thinking about Stereo Visual Odometry (SVO).

3) Noisy & Vacant Images

The R200 does pretty well with nearby objects, but when the surface is somewhat shiny, or it gets further than about 8 feet away, the depth camera starts getting less certain about where exactly things are. This comes through as grainy images, with static-like patches of varying depth which pop in and out. In the image below, you might think Morgan is standing in front of a shrub, based on the speckled appearance of the object. Although it isn't the nicest piece of furniture, this is in fact a couch, not a plant.

All that static comes across as a field of garbled sound. It isn't steady, like the audio cues for Morgan, but poppy and intermittent, just like the visual artifacts would lead you to expect. Once again, filtering can be employed to reduce this noise, at the cost of speed, but on some level, this is a fact of life for IR imaging.
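One simple form that filtering could take is a per-pixel median over the last few depth frames, which suppresses values that pop in and out while leaving steady surfaces alone. This is a generic sketch, not SNAP's actual filter. It assumes depth frames arrive as nested lists of numbers and that a value of 0 marks a pixel with no depth reading. The speed cost shows up as latency: the output lags by however many frames are in the stack.

```python
from statistics import median


def temporal_median(frames, invalid=0):
    """Per-pixel median over a short stack of depth frames.

    Pixels equal to `invalid` are treated as dropouts and ignored; if a
    pixel is invalid in every frame, it stays invalid in the output.
    """
    height, width = len(frames[0]), len(frames[0][0])
    filtered = []
    for r in range(height):
        row = []
        for c in range(width):
            samples = [f[r][c] for f in frames if f[r][c] != invalid]
            row.append(median(samples) if samples else invalid)
        filtered.append(row)
    return filtered


if __name__ == "__main__":
    # Three tiny 1x2 frames: the first pixel has one wild reading,
    # the second pixel never returns a depth.
    stack = [[[1000, 0]], [[1010, 0]], [[4000, 0]]]
    print(temporal_median(stack))
```

A longer stack removes more static but makes moving objects smear, so the window length would need tuning against how quickly the audio has to respond.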

In the lower right hand corner of this image you can see a rectangular hole to infinity. The image is of my workbench, which looks messy because it is, and the vacant corner is an LCD monitor. The R200, like many long range IR, structured light, and LiDAR sensors, doesn't detect clear or reflective objects. This might be the hardest obstacle for a robotic vision system to overcome, since no single sensor is very good at everything. We may find that it is necessary to integrate supplementary sensors into our final SNAP to handle clear objects like glass doors and windows.

4) Cost Prohibitive

SNAP's stated goal is to provide effective navigation and perception assistance for $500 or less. Although the R200 is dirt cheap by my usual robotic vision standards, the $100 - $150 price tag makes it an easy target when we are trying to cut costs, and the lack of alternate sources makes it a high risk option when trying to make an assistive device available to people off the beaten path. This is theoretically another benefit to SVO, which can employ $10 - $20 cameras (although supplementary hardware will impose additional costs).

-

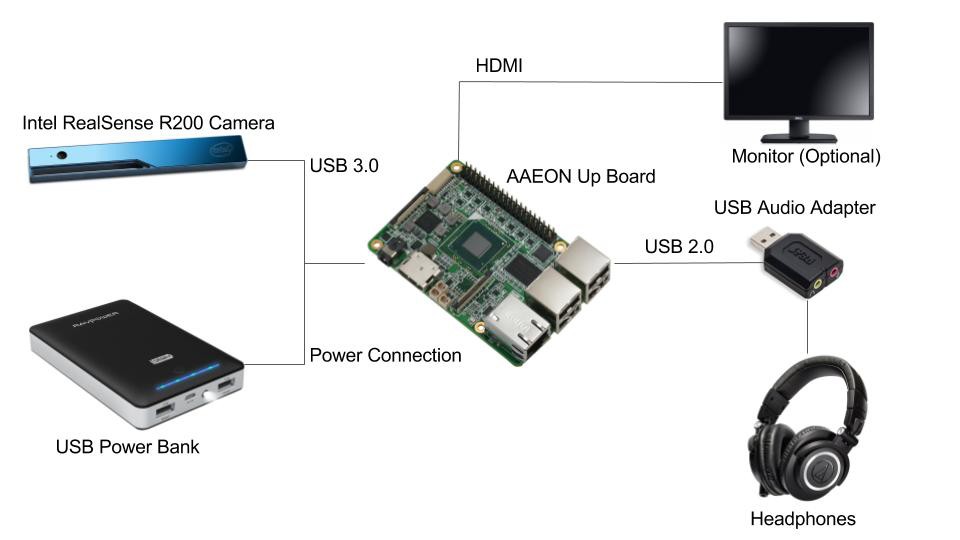

Hardware Block Diagram

10/20/2017 at 01:41

We've created a graphical block diagram to describe the current hardware setup.


Image Sources: Intel, Amazon

SNAP: Augmented Echolocation

Sightless Navigation And Perception (SNAP) translates surroundings into sound, providing continuous binaural feedback about the environment.

Dan Schneider
