Problem:

• There are over 43 million people worldwide with total hearing loss.

• The cochlear implant is expensive and requires a surgical procedure to set up.

• Existing devices do not provide sound location awareness, so users need a specially trained hearing dog to help them identify important sounds around them. Hearing dogs are expensive, and the training required for both the user and the dog is extensive.

• Without sound location awareness, hearing-impaired people often have to take extra precautions when venturing outside.

• Most products in this domain seem to target the speech and communication aspect of sound, not the general day-to-day context provided by the ear.

Background:

I, Manoj Kumar, along with my partner Vignesh, have always found assistive technology devices interesting because of the real impact they have on the people using them. I admire the artistic potential of modern technology coupled with elegant design. Sensing in particular draws my attention because it feels like an augmentation of my existing perception. The visually impaired live a normal life by embracing the additional information provided by their remaining senses. After seeing a talk by David Eagleman on his hearing assistance vest, I wanted to work on adapting positional sound to an Augmented Reality headset.

Solution:

SonoSight: AR glasses for the hearing impaired

(Solution image, Sketch)

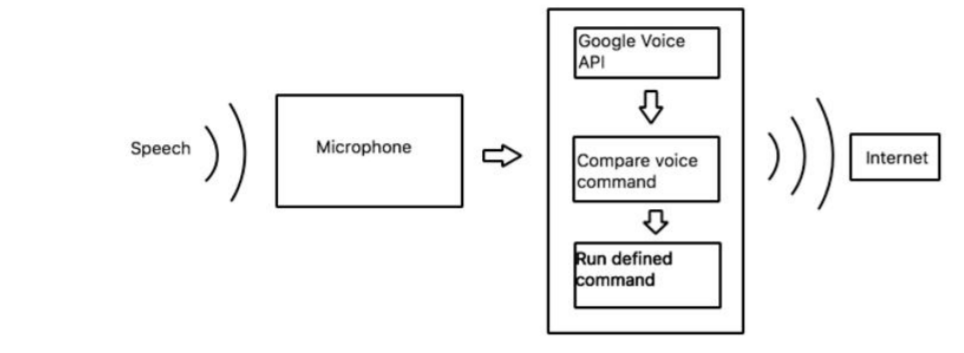

Our solution is an affordable Augmented Reality headset with a multi-microphone setup that helps hearing-impaired users identify the sources of sounds around them in an unobtrusive and intuitive manner. SonoSight uses a low-cost holographic projection setup based on the DLP2000 projection engine. Sound sources would be located using acoustic triangulation and mapped onto the display while taking the user's position and orientation into account. We propose using an FFT to split the incoming audio into frequency bands, both to identify the kind of sound source and to isolate speech. Beyond sound source awareness, we propose near-real-time speech-to-text transcription by sending the audio signal to the Google Speech API, which can help the user take in information from people who cannot use sign language. We aim to go beyond simple voice-to-text. While we use voice for communication in daily life, hearing covers much more than that, including things we take for granted: it gives us spatial awareness and often alerts us to things in the environment (e.g. a doorbell, traffic while walking along a road, or being called by someone from behind).
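As a rough illustration of the FFT idea, the sketch below sums spectral energy in a few frequency bands and reports which band dominates. It is a minimal example, not our actual firmware: the band edges in `BANDS`, the 16 kHz sample rate, and the function names are all assumptions chosen for illustration, and a real system would use an optimized FFT library rather than this pure-Python one.

```python
import cmath
import math


def fft(samples):
    """Recursive radix-2 Cooley-Tukey FFT; len(samples) must be a power of two."""
    n = len(samples)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a non-zero power of two")
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def band_energies(samples, sample_rate, bands):
    """Sum |X[k]|^2 over the FFT bins that fall inside each (low_hz, high_hz) band."""
    n = len(samples)
    spectrum = fft(samples)
    bin_hz = sample_rate / n  # frequency resolution of one bin
    energies = {}
    for name, (low, high) in bands.items():
        total = 0.0
        for k in range(1, n // 2):  # positive frequencies only, skip DC
            if low <= k * bin_hz < high:
                total += abs(spectrum[k]) ** 2
        energies[name] = total
    return energies


# Hypothetical band edges in Hz; "speech" roughly follows the telephone voice band.
BANDS = {"low": (50, 300), "speech": (300, 3400), "high": (3400, 8000)}


def dominant_band(samples, sample_rate=16000, bands=BANDS):
    """Return the name of the band holding the most energy."""
    energies = band_energies(samples, sample_rate, bands)
    return max(energies, key=energies.get)


if __name__ == "__main__":
    rate, n = 16000, 1024
    tone = [math.sin(2 * math.pi * 1000 * i / rate) for i in range(n)]
    print(dominant_band(tone, rate))
```

Band energy alone cannot tell a voice from other sounds in the same frequency range, so this would only be a first filter before the audio is passed on for speech recognition.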

Design Goals:

- Real-time voice to text: Provide automatic speech recognition for voices around the user once the speaker enters the sphere of normal hearing, and provide captions for environmental sounds. We might even be able to provide translations for multiple people if all goes well.

- Localize sound: The visualizations should provide clear and minimal information about the location of the various sounds around the person.

- Glanceable: The directional information should be easy to understand at a glance.

- Responsive: The visualization should be real time and respond to the user moving their head.

- Coexist: The visualizations should work in tandem with the hearing-impaired person's own techniques, such as lip reading, and should be harmonious and adaptable.

- Spherical sensing: The visualizations should provide a spherical spatial acoustic mapping of the information.
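The "Responsive" goal above implies that a sound's on-screen position must be corrected for head rotation. A minimal sketch of that correction follows, assuming the IMU reports a yaw (heading) angle in degrees and that each sound source has already been assigned a world-frame bearing. The function names, the clockwise-positive sign convention, and the 15° half field of view are hypothetical values for illustration only.

```python
def relative_bearing(source_bearing_deg, head_yaw_deg):
    """Bearing of the source relative to where the head points, wrapped to [-180, 180).

    Both inputs are world-frame angles in degrees, clockwise positive.
    """
    return (source_bearing_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


def indicator_position(rel_deg, half_fov_deg=15.0):
    """Map a relative bearing to a horizontal display hint.

    Returns ("in_view", x) with x in [-1, 1] when the source is inside the
    assumed field of view, otherwise an edge marker on the nearer side.
    """
    if abs(rel_deg) <= half_fov_deg:
        return ("in_view", rel_deg / half_fov_deg)
    if rel_deg > 0:
        return ("edge_right", 1.0)
    return ("edge_left", -1.0)


if __name__ == "__main__":
    # Source at 350 degrees while the user faces 10 degrees: it is 20 degrees to the left.
    rel = relative_bearing(350.0, 10.0)
    print(rel, indicator_position(rel))
```

Pinning out-of-view sources to the display edge is one way to keep the indicator glanceable while still covering sounds behind the user.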

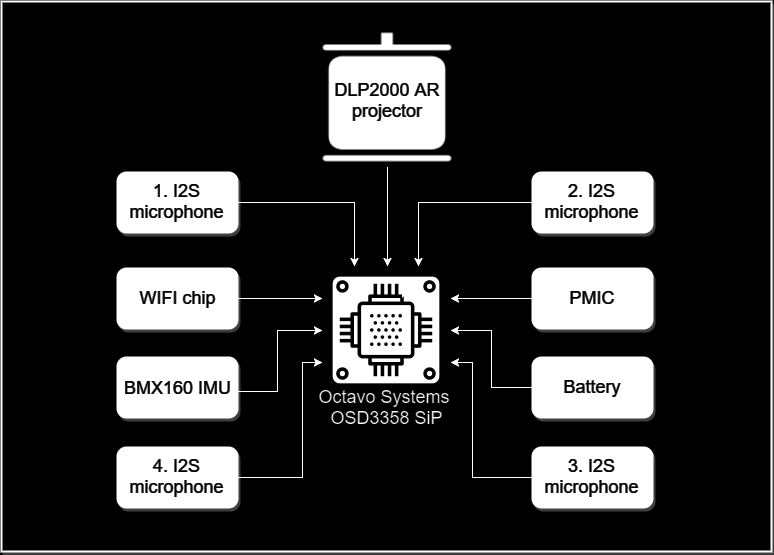

Block Diagram:

(Block Diagram of the final system)

The components which would make up the initial system are:

1) DLP2000 projector system

2) BeagleBone Black

3) BNO055 IMU

4) 4x Microphones (I2S or Analog)

5) USB Wi-Fi adapter
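With several microphones in the component list, the acoustic triangulation described in the solution could start from the time difference of arrival (TDOA) between a single pair of microphones. The sketch below is an assumption-laden illustration, not our implementation: it finds the delay by brute-force cross-correlation and converts it to an angle using the far-field relation sin(θ) = c·τ / d. The 343 m/s speed of sound, the function names, and the spacing and sample rate in the demo are all hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value in air at room temperature


def best_lag(a, b, max_lag):
    """Return the lag (in samples) that maximizes sum(a[i] * b[i + lag]).

    A positive lag means the signal reaches microphone B later than microphone A.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(len(a)):
            j = i + lag
            if 0 <= j < len(b):
                score += a[i] * b[j]
        if score > best_score:
            best, best_score = lag, score
    return best


def arrival_angle_deg(mic_a, mic_b, sample_rate, spacing_m):
    """Estimate the angle of arrival from one microphone pair (far-field assumption).

    0 degrees is broadside (source equidistant from both microphones);
    positive angles mean the source is on microphone A's side.
    """
    # Physically possible delays are bounded by the microphone spacing.
    max_lag = int(math.ceil(spacing_m / SPEED_OF_SOUND * sample_rate))
    delay_s = best_lag(mic_a, mic_b, max_lag) / sample_rate
    ratio = max(-1.0, min(1.0, delay_s * SPEED_OF_SOUND / spacing_m))
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    # Synthetic click that reaches microphone B three samples after microphone A.
    a = [0.0] * 64
    a[20], a[21] = 1.0, 0.5
    b = [0.0] * 3 + a[:-3]
    print(arrival_angle_deg(a, b, sample_rate=48000, spacing_m=0.15))
```

A single pair only resolves one angle and cannot distinguish front from back; combining the estimates from several pairs among the four microphones would be needed to approach the spherical coverage listed in the design goals.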

Holographic AR Projection:

For our holographic projection system we went with a beam splitter assembly, with the DLP microprojector behind us. The prototype had to be confined to this tight space due to the space constraints of the BeagleBone cape. We have been in talks with a supplier who can provide us with a compact SPI-protocol DLP2000 module including the optical assembly. ...


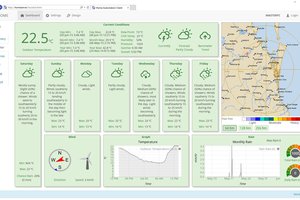

(Figure: Our implementation for the voice to text)

(Figure: AR glass projection system)

(Figure: DLP pico projector)
