-

Second Demo of BlinkToText Software

10/18/2017 at 04:19

I have finished a second major upgrade of the software.

The demo video shows the new sound effect feature.

The user can send their blinked message as an SMS via the SMS Button.

For future development, I would like to improve the blink detection algorithm so that the software works better at long distances.

-

Sound Effects and Customization

10/16/2017 at 05:15

I have made two major updates to the software.

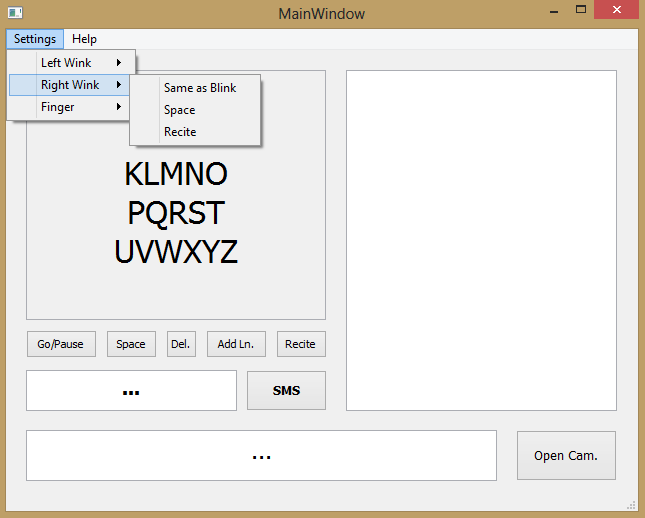

Whenever the software recognizes a blink, it emits a beep. The beep lets the user know that their blink has been detected.

The software also has a customization feature. BlinkToText is built around blink detection because almost all people with severe paralysis retain the ability to blink. If a paralyzed person can perform other body movements as well, the software should take advantage of them. With the customization feature, users can assign certain actions as hotkeys. If the program detects one of these body movements, it automatically performs the preset function. Currently, the software can detect three actions in addition to blinking.

- Left Eye Wink

- Right Eye Wink

- Finger Movement

Users can map these body movements to any of the button functions. Additionally, with the Same as Blink option, users can use any of these actions in place of blinking.
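One way to picture the customization feature is as a lookup table from detected actions to button functions. The sketch below is a minimal illustration under that assumption, not the BlinkToText code: the action names, the `make_dispatcher` helper, and the `SAME_AS_BLINK` marker are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical marker meaning "treat this action exactly like a blink".
SAME_AS_BLINK = "same_as_blink"


def make_dispatcher(
    hotkeys: Dict[str, str],
    functions: Dict[str, Callable[[], str]],
    on_blink: Callable[[], str],
) -> Callable[[str], str]:
    """Return a function that routes a detected action to its configured handler."""

    def dispatch(action: str) -> str:
        if action == "blink":
            return on_blink()
        setting = hotkeys.get(action)
        if setting is None:
            return "ignored"          # action is not mapped to anything
        if setting == SAME_AS_BLINK:
            return on_blink()         # behave exactly like a blink
        return functions[setting]()   # run the preset button function

    return dispatch


# Example configuration (hypothetical): a left wink sends an SMS, a right wink
# reads the text aloud, and finger movement is used in place of blinking.
functions = {
    "sms": lambda: "sms sent",
    "recite": lambda: "reciting",
}
hotkeys = {
    "left_wink": "sms",
    "right_wink": "recite",
    "finger_movement": SAME_AS_BLINK,
}
dispatch = make_dispatcher(hotkeys, functions, on_blink=lambda: "select")
```

Keeping the mapping as plain data means a settings screen only has to edit the `hotkeys` dictionary; the detection code never needs to know which function an action triggers.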

Everyone’s disability is different. Ideally, every disabled person would have a custom-made solution for their problems. Unfortunately, such a solution is beyond the budget of many families. BlinkToText offers a partially customizable system that works for most people.

[Figure 1: Customizing BlinkToText]

-

First Demo of BlinkToText Software

10/08/2017 at 02:48

I have developed a program that allows users to convert eye blinks to text. There are many things that I would like to change about the software, but I do have a demo version ready. You can view the demo below.

The software automatically cycles through a series of rows. If the user blinks, the software iterates through each element of the selected row. To differentiate between intentional and unintentional blinks, the software only recognizes blinks that last longer than 1.25 seconds. The user can select an element by blinking again.
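The row-column selection described above behaves like a small state machine. The sketch below assumes a timer calls `tick()` to move the highlight and that `blink()` is called only for blinks that already passed the 1.25 second filter; the class name and the choice to restart at the first row after each selection are hypothetical, not taken from BlinkToText.

```python
from typing import List, Optional


class RowColumnScanner:
    """Minimal row-column scanning sketch (hypothetical, not BlinkToText's code)."""

    def __init__(self, grid: List[List[str]]) -> None:
        self.grid = grid
        self.row = 0                    # currently highlighted row
        self.col: Optional[int] = None  # None while cycling through rows

    def tick(self) -> None:
        """Advance the highlight; called periodically by a timer."""
        if self.col is None:
            # Row mode: cycle through the rows.
            self.row = (self.row + 1) % len(self.grid)
        else:
            # Element mode: cycle through the items of the selected row.
            self.col = (self.col + 1) % len(self.grid[self.row])

    def blink(self) -> Optional[str]:
        """First blink selects the row; the second returns the highlighted element."""
        if self.col is None:
            self.col = 0                # enter the highlighted row
            return None
        choice = self.grid[self.row][self.col]
        self.col = None                 # go back to scanning rows
        self.row = 0
        return choice
```

For example, with rows `ABC` and `DEF`, one tick, a blink, another tick, and a second blink select `E`.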

The user can view themselves with the camera feature.

The user can have their text read aloud with the Recite button.

I feel that the software would make a good note-taking tool for paralysed people. A series of notes can be amassed in the right dialog box. The software speeds up note-taking by predicting the next word that the user might blink. The Go/Pause button allows users to start and stop note-taking at will. A key goal of the program is that paralysed people can operate it with complete independence, and the Go/Pause button lets them run the program at their own discretion. A computer can be set up at the end of the user’s bed, wheelchair, etc., so that they can express themselves as they please.
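The log does not say how the next word is predicted. As one possible approach, the sketch below uses a simple bigram count: it remembers which words followed which in some sample text and suggests the most frequent successors. The function names and the sample corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def build_bigrams(text: str) -> Dict[str, Counter]:
    """Count which word follows which in a sample corpus."""
    words = text.lower().split()
    following: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        following[prev][nxt] += 1
    return following


def predict_next(following: Dict[str, Counter], last_word: str, k: int = 3) -> List[str]:
    """Return up to k of the most frequent words seen after last_word."""
    return [w for w, _ in following.get(last_word.lower(), Counter()).most_common(k)]


bigrams = build_bigrams("I need water I need help I need water")
```

Here `predict_next(bigrams, "need")` suggests `water` before `help`, so a user who has blinked "need" could pick `water` with one selection instead of spelling it out.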

I had many ideas for text selection, but I was not sure which method would be the most convenient. An acquaintance suggested copying the layout of a nine-digit cell phone keypad.

[1, Figure 1: Nine Digit Cell Phone Pad]
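Reference [1] enumerates every word a sequence of phone digits could spell. A compact version of that idea uses `itertools.product` over the standard letter groups on keys 2 to 9. This is a sketch; filtering the candidates against a dictionary would be an additional, assumed step.

```python
from itertools import product
from typing import List

# Standard letter groups printed on phone keypads (keys 2-9).
KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}


def words_from_digits(digits: str) -> List[str]:
    """List every letter combination that a digit sequence could spell."""
    groups = [KEYPAD[d] for d in digits]
    return ["".join(combo) for combo in product(*groups)]
```

For example, `words_from_digits("23")` starts with `ad`, `ae`, `af`, and `"79"` yields 16 combinations.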

I looked into the Minuum keyboard system, as well.

I learned about the row-column method in an unusual way. I was watching a video clip of Breaking Bad and noticed one of the characters communicating with a text chart. It was frustrating to watch the nurse fail to understand the patient. I believe that if the man could operate the chart independently, he would not have that problem.

Currently, the GUI’s Nurse Button has no programmed function. Eventually, I want the user to be able to send their message as a text message, an email, or through some other channel. The Nurse Button is reserved for that purpose.

Improvements

There are many improvements that I would like to make to the interface. I hope to finish all of them before Oct. 16/17.

I would like to make the button font size significantly bigger. Ideally, bedridden patients would be able to use the software by having a computer permanently placed at the foot of their bed. Currently, the text is readable from five feet away with 20/30 vision. Unfortunately, stroke and other diseases that cause paralysis can also affect vision. The software should be accessible to people with poor vision, as well.

I think sound effects would improve the user experience. I tended to keep my eyes closed for longer than needed in the demo. If a sound effect signalled when a blink was detected, I could open my eyes sooner.

References

[1] S. Jain. Print All Possible Words From Phone Digits. 2017. Available: http://www.geeksforgeeks.org/find-possible-words-phone-digits/

-

Developing GUI Part 2

10/07/2017 at 05:48

I will house the application in a desktop app. If the application is easy to install, I figure it will be almost as accessible as a website. I have made the GUI using PyQt. It is important that all GUI functions are controllable by eye blinks. Paralysed people must be able to use the software with complete independence.

[Figure 1: BlinkToText Desktop App GUI]

-

Developing GUI Part 1

10/07/2017 at 05:43

I want the entire application to be housed inside of a website. That would make it quite easy for anyone to access the software.

I built a simple interface for this website. The website can be accessed at this link.

https://github.com/mso797/BlinkToText

I am having difficulty getting the front end to talk to my Python program. I need the Python program to run on the client side, and AJAX calls do not seem to be fast enough for my needs.

[Figure 1: BlinkToText Mock Website]

-

Detecting Eye Blinks in Python

10/07/2017 at 05:12

I have developed a simple Python program that can detect eye blinks. The program uses the cv2 (OpenCV) library to handle the image processing. I use a facial Haar Cascade to detect a face within the video feed, and then detect eyes within this region of interest using another Haar Cascade.

If the eyes disappear for a certain period of time, the program assumes that the person blinked. I quickly noticed that I blink unintentionally a lot.

Therefore, my program only recognizes a blink if it lasts longer than 1.25 seconds. This filters out unintentional blinks.
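The 1.25 second rule can be expressed as a small filter over per-frame detection results. This sketch assumes each frame is reduced to a `(timestamp_seconds, eyes_detected)` pair, for example from whether the eye Haar Cascade found any eyes; the function name and input format are hypothetical.

```python
from typing import Iterable, List, Optional, Tuple

MIN_BLINK_SECONDS = 1.25  # from the log: shorter eye closures are ignored


def intentional_blinks(
    frames: Iterable[Tuple[float, bool]],
    min_duration: float = MIN_BLINK_SECONDS,
) -> List[Tuple[float, float]]:
    """Return (start, end) times of eye closures lasting longer than min_duration.

    frames: (timestamp_seconds, eyes_detected) pairs in time order.
    """
    blinks: List[Tuple[float, float]] = []
    closed_since: Optional[float] = None
    for t, eyes_detected in frames:
        if not eyes_detected:
            if closed_since is None:
                closed_since = t              # eyes just disappeared
        elif closed_since is not None:
            if t - closed_since > min_duration:
                blinks.append((closed_since, t))  # long enough to be intentional
            closed_since = None               # eyes are back; reset
    return blinks
```

This version reports a blink only when the eyes reopen. A live interface might instead fire as soon as the closure passes 1.25 seconds, which would also let a beep signal the user to open their eyes.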

[Figure 1: Detecting Eye Blinks with Python]

The program can also detect winks and differentiate left eye winks from right eye winks. I think this adds an exciting option for customization. Users can use winks as hotkeys to transmit specific data. For example, winking could let the user instantly delete the last transmitted word.
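One simple way to tell left and right winks apart, assuming the eye cascade returns `(x, y, w, h)` boxes relative to the face region (as OpenCV's `detectMultiScale` does), is to check which half of the face still contains an eye. The function below is a hypothetical sketch, not the log's actual method. In an unmirrored camera image, the user's left eye appears on the image's right side; the `mirrored` flag handles previews that are flipped.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h) relative to the face region


def classify_eyes(eye_boxes: List[Box], face_width: int, mirrored: bool = False) -> str:
    """Classify one frame as 'open', 'closed', 'left_wink' or 'right_wink'."""
    centre = face_width / 2
    left_half = any(x + w / 2 < centre for x, y, w, h in eye_boxes)
    right_half = any(x + w / 2 >= centre for x, y, w, h in eye_boxes)
    # Unmirrored: the user's left eye is on the image's right side.
    users_left_open = left_half if mirrored else right_half
    users_right_open = right_half if mirrored else left_half
    if users_left_open and users_right_open:
        return "open"
    if not users_left_open and not users_right_open:
        return "closed"
    # Only one eye is visible: the missing one is the winking eye.
    return "left_wink" if users_right_open else "right_wink"
```

In practice, Haar eye cascades sometimes produce extra boxes (for example around the nostrils), so a real implementation would likely discard boxes outside the upper half of the face first.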

Instead of using a Haar Cascade to detect when an eye is open, I would rather use one that detects when an eye is closed, or both. I believe that this would make the program more precise. I am looking into training my own Haar Cascade to serve this function. This is not a vital task because the eye blink detector is already fairly accurate.

-

Eye Blink Detection Algorithms

10/07/2017 at 02:24

There are multiple ways to detect eye blinks in a video. This is a relatively new problem, so no standard technique has been established yet. I have researched several methods, each with its own pros and cons. Below is a brief synopsis of each technique.

Detecting Eye Blinks with Facial Landmarks

Eye blinks can be detected by tracking significant facial landmarks. Many software libraries can plot significant facial features within a given region of interest. The dlib library, which can be used from Python, implements this feature using Kazemi and Sullivan’s One Millisecond Face Alignment with an Ensemble of Regression Trees [3].

The program uses a facial training set to learn where certain points lie on facial structures. It then plots the same points on regions of interest in other images, if they exist. The program uses priors to estimate the probable distance between keypoints [1].

The library outputs a 68-point plot for a given input image.

[1, Figure 1: Dlib Facial Landmark Plot]

For eye blinks, we need to pay attention to points 37-48, the points that describe the eyes (points 37-42 for one eye and 43-48 for the other).

In Real-Time Eye Blink Detection using Facial Landmarks [2], Soukupová and Čech derive an equation for the Eye Aspect Ratio, an estimate of the eye's opening state.

Using the eye landmarks in Figure 2, the Eye Aspect Ratio is defined by the equation in Figure 3.

[2, Figure 2: Eye Facial Landmarks]

[2, Figure 3: Eye Aspect Ratio Equation]
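Because the equation survives here only as a figure placeholder, it is restated below as given by Soukupová and Čech [2]. Here p1 to p6 are the six eye landmarks of Figure 2: p1 and p4 are the eye corners, p2 and p3 lie on the upper lid, and p5 and p6 lie on the lower lid.

$$
\mathrm{EAR} = \frac{\lVert p_2 - p_6 \rVert + \lVert p_3 - p_5 \rVert}{2\,\lVert p_1 - p_4 \rVert}
$$

The numerator sums the two vertical lid distances, and the factor of 2 in the denominator balances them against the single horizontal distance, so the ratio stays roughly constant while the eye is open and drops towards 0 as the lids meet.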

“The Eye Aspect Ratio is a constant value when the eye is open, but rapidly falls to 0 when the eye is closed.” [1] Figure 4 shows a person’s Eye Aspect Ratio over time; the person’s eye blinks are obvious.

[2, Figure 4: Eye Aspect Ratio vs Time]

A program can determine that a person’s eyes are closed when the Eye Aspect Ratio falls below a certain threshold.
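A minimal sketch of the Eye Aspect Ratio and the threshold test is shown below. It takes six `(x, y)` points ordered p1 to p6; with dlib's 68-point layout these would be points 37-42 or 43-48 (indices 36:42 and 42:48 when counting from zero). The threshold value of 0.2 is a hypothetical placeholder that would need tuning for each user and camera.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

EAR_THRESHOLD = 0.2  # hypothetical value; tune per user and camera


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks ordered p1..p6 as in Soukupová and Čech [2]."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # two lid-to-lid distances
    horizontal = math.dist(p1, p4)                    # corner-to-corner distance
    return vertical / (2.0 * horizontal)


def eye_closed(eye: Sequence[Point], threshold: float = EAR_THRESHOLD) -> bool:
    """Treat the eye as closed when its EAR falls below the threshold."""
    return eye_aspect_ratio(eye) < threshold
```

Combined with a minimum duration, such as the 1.25 seconds used elsewhere in these logs, this test could replace the "eyes disappeared" check from the Haar Cascade approach.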

Clmtrackr is another facial landmark plotter. In previous logs, I attempted to extract accurate and consistent eye position data from its plot. Because of this experience, I am eager to explore completely different blink detection algorithms and techniques.

Detecting Eye Blinks with Frame Differencing

Frame differencing is another blink detection technique. Essentially, a program compares subsequent video frames to determine whether there was any movement in a selected eye region.

In most programs, the first step is to detect any faces in a video feed using a face detector, such as the Viola-Jones face detector. The face detector returns a bounding box around an area that contains a face, as seen in Figure 5.

[Figure 5: Face Detector Placing Bounding Box Over Face]

The program then analyses this region of interest for eyes using a similar detection tool, and places a bounding box around each detected eye.

[Figure 6: Eye Detector Placing Bounding Boxes Over Eyes]

The program then compares the eye regions of interest in subsequent frames [5]. Any pixels that differ can be plotted on a separate image. Figure 7 demonstrates a program using frame differencing to detect hand movement; a binary threshold and a Gaussian blur filter the images.
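The core of frame differencing fits in a few lines of NumPy. The sketch assumes two same-sized grayscale crops of the eye region from consecutive frames; both thresholds are hypothetical. With OpenCV, the same steps would typically use `cv2.GaussianBlur` before differencing, then `cv2.absdiff` and `cv2.threshold`; the blur is left out here to keep the example dependency-light.

```python
import numpy as np


def changed_fraction(prev: np.ndarray, curr: np.ndarray, pixel_threshold: int = 25) -> float:
    """Fraction of pixels whose grayscale value changed by more than pixel_threshold."""
    # Cast to a signed type so the subtraction cannot wrap around like uint8 would.
    diff = np.abs(curr.astype(np.int16) - prev.astype(np.int16))
    mask = diff > pixel_threshold          # binary threshold of the difference image
    return float(mask.mean())


def movement_detected(
    prev: np.ndarray,
    curr: np.ndarray,
    pixel_threshold: int = 25,
    area_threshold: float = 0.1,
) -> bool:
    """Report movement when enough of the eye region changed between frames."""
    return changed_fraction(prev, curr, pixel_threshold) > area_threshold
```

A blink shows up as a burst of changed pixels when the lid closes and another when it opens, so the timing between bursts could feed the same duration filter used for the Haar Cascade approach.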

[Figure 7: Frame Differencing Program Detecting Hand Movement]

Detecting Eye Blinks with Pupil Detection

Using pupils to detect eye blinks follows a process similar to frame differencing. Once again, the program starts by placing a bounding box around any faces detected in the video frame. The program then detects the general eye regions within the face bounding box.

The program analyses the eye region of interest by running a circle Hough transform on it. If the software detects a circle roughly in the center of the region, and that circle takes up roughly the right amount of area, the software assumes that the circle is the pupil. If the pupil is detected, the software can assume that the eye is open [4].
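The "roughly in the center, roughly the right size" check can be written as a filter over candidate circles. This sketch assumes the circles arrive as `(x, y, radius)` triples relative to the eye region, the form returned row by row by OpenCV's `cv2.HoughCircles`; it uses the radius as a stand-in for area, and every tolerance is a hypothetical value that would need tuning.

```python
from typing import Iterable, Optional, Tuple

Circle = Tuple[float, float, float]  # (x, y, radius) relative to the eye region


def find_pupil(
    circles: Iterable[Circle],
    roi_w: int,
    roi_h: int,
    max_centre_offset: float = 0.25,
    radius_range: Tuple[float, float] = (0.1, 0.3),
) -> Optional[Circle]:
    """Return the first circle that plausibly is a pupil, else None."""
    cx, cy = roi_w / 2, roi_h / 2
    for x, y, r in circles:
        # Centre must lie near the middle of the eye region.
        near_centre = (abs(x - cx) <= max_centre_offset * roi_w
                       and abs(y - cy) <= max_centre_offset * roi_h)
        # Radius must be a plausible fraction of the region's width.
        right_size = radius_range[0] * roi_w <= r <= radius_range[1] * roi_w
        if near_centre and right_size:
            return (x, y, r)
    return None


def eye_open(circles: Iterable[Circle], roi_w: int, roi_h: int) -> bool:
    """An eye counts as open when a plausible pupil is found."""
    return find_pupil(circles, roi_w, roi_h) is not None
```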

[Figure 8: Circle Hough Transform Detecting Pupil]

[Figure 9: Circle Hough Transform Detecting Pupil on User With No Glasses]

References

[1] A. Rosebrock. (2017, Apr. 3). Facial landmarks with dlib, OpenCV, and Python [Online]. Available: https://www.pyimagesearch.com/2017/04/03/facial-landmarks-dlib-opencv-python/

[2] T. Soukupová and J. Čech. (2016, Feb. 3). Real-Time Eye Blink Detection using Facial Landmarks. Center for Machine Perception, Department of Cybernetics, Faculty of Electrical Engineering, Czech Technical University in Prague. Prague, Czech Republic. [Electronic]. Available: https://vision.fe.uni-lj.si/cvww2016/proceedings/papers/05.pdf

[3] V. Kazemi and J. Sullivan. (2014). One Millisecond Face Alignment with an Ensemble of Regression Trees. Royal Institute of Technology Computer Vision and Active Perception Lab. Stockholm, Sweden. [Electronic]. Available: https://pdfs.semanticscholar.org/d78b/6a5b0dcaa81b1faea5fb0000045a62513567.pdf

[4] K. Toennies, F. Behrens, and M. Aurnhammer. (2002, Dec. 10). Feasibility of Hough-Transform-based Iris Localisation for Real-Time-Application. Dept. of Computer Science, Otto-von-Guericke Universität. Magdeburg, Germany. [Electronic]. Available: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.69.3667&rep=rep1&type=pdf

[5] B. Raghavan. (2015). Real Time Blink Detection For Burst Capture. Stanford University. Stanford, CA. [Electronic]. Available: https://web.stanford.edu/class/cs231m/projects/final-report-raghavan.pdf

-

Problems Detecting Pupil Movement Through Javascript

10/05/2017 at 03:59

I have been trying to develop an accurate eye tracker with Clmtrackr for a week now. Unfortunately, I cannot detect eye movement as precisely as I need to. At most, I can detect whether the pupil is on the left or right side of the eye, and even this small feat requires environmental conditions, such as lighting, to be constrained.

I researched other software examples that use Clmtrackr to detect eye movement, and they seem to suffer from similar problems. The example eye tracker below shares the same issues as my program.

https://webgazer.cs.brown.edu/demo.html?

I suspect that Clmtrackr is not suited to the kind of eye tracking I require.

Additionally, many of my acquaintances who tried the software felt eye strain after extended use. I definitely do not want to make any patients who use the software uncomfortable, and I suspect that using pupil movements to communicate long messages might cause uncomfortable eye strain.

From now on, I plan on using eye blinks as a means of communication, instead of pupil movement. There is already a long history of people sending long Morse Code messages through eye blinks.

I have been testing Python algorithms that detect eye blinks, and my prototype programs have provided encouraging results. It seems that I can accurately detect whether a person is blinking in a variety of environments. I am going to move away from Clmtrackr to OpenCV for my eye detection.

Eventually, I believe I can host my Python program in a Django website.

-

Detecting Pupil Movement Using Javascript

10/05/2017 at 03:22

I want to build the entire software program using web tools like Javascript and RoR. This would allow me to host the entire application on a website, so that anyone in the world could easily access it. There are many Javascript libraries for computer vision; I am prototyping with clmtrackr. Clmtrackr detects faces within a given video feed and overlays any detected faces with a green mask. Points are laid out on specific features of the face, such as the mouth, nose, and eyes. Ten points describe the geometry of both eyes.

[1, Figure 2: Representation of Points in Clmtrackr Face Matrix]

Points 27 and 32 describe the centerpoints of the pupils. Currently, I am writing a software program that uses clmtrackr to detect faces in a video feed. The software then measures the distance between Points 27 and 32 and the four corners of each eye. If a distance falls below a certain threshold, the program knows that the pupil is in that corner.
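The corner test described above reduces to "find the nearest corner and accept it only if it is close enough". The prototype itself runs in Javascript, where the points would come from the clmtrackr tracker (for example via its `getCurrentPosition()` call); to keep one language across these logs, the logic is sketched here in Python. The corner names, coordinates, and threshold are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]


def pupil_corner(pupil: Point, corners: Dict[str, Point], threshold: float) -> Optional[str]:
    """Return the name of the eye corner nearest the pupil, if it is within threshold."""
    name, dist = min(
        ((n, math.dist(pupil, c)) for n, c in corners.items()),
        key=lambda item: item[1],
    )
    return name if dist < threshold else None


# Hypothetical corner positions for one eye, in pixels.
corners = {
    "top_left": (0, 0), "top_right": (10, 0),
    "bottom_left": (0, 6), "bottom_right": (10, 6),
}
```

Because the threshold is an absolute pixel distance, it would have to scale with the size of the detected face, which may be part of why this approach is sensitive to distance and lighting.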

References

[1] A. Venâncio. Plot of Significant Facial Features. 2017. Available: http://andrevenancio.com/eye-blink-detection/

-

Sending Information Through Eye Movement

10/05/2017 at 03:02

Almost all paralyzed people are able to move their eyes and blink. I want the software to detect pupil movement. A blink is a single action, but a person can move their pupils left, right, up, and down, so people could transmit more information with less effort through pupil movement.

Jason Becker communicates using a convenient eye chart, the Vocal Eyes Becker Communication System [1]. When using the chart, Jason moves his eyes to the top left, top, top right, bottom left, bottom, or bottom right. This action signals which group of letters he wants to pick. He then moves his eyes up, down, left, or right to pick a specific letter. To communicate, Jason needs someone to read his eye movements and decode his message. A video of Jason using this system can be seen at the link below.

http://jasonbecker.com/eye_communication.html
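The two-step selection (a group gaze, then a direction gaze) is easy to decode once the gazes are recognized. The sketch below uses a hypothetical chart of six groups of four letters covering only A to X, purely for illustration; the real Vocal Eyes chart has its own arrangement, shown in the video linked above.

```python
from typing import Dict, List, Tuple

GROUP_GAZES = ["top_left", "top", "top_right", "bottom_left", "bottom", "bottom_right"]
LETTER_GAZES = ["up", "down", "left", "right"]

# Hypothetical chart: six groups of four letters (A-X only).
LETTERS = "ABCDEFGHIJKLMNOPQRSTUVWX"
CHART: Dict[Tuple[str, str], str] = {
    (g, d): LETTERS[i * 4 + j]
    for i, g in enumerate(GROUP_GAZES)
    for j, d in enumerate(LETTER_GAZES)
}


def decode(gazes: List[str]) -> str:
    """Decode a flat list of gazes, taken in (group, letter) pairs."""
    if len(gazes) % 2:
        raise ValueError("gazes must come in (group, letter) pairs")
    pairs = zip(gazes[0::2], gazes[1::2])
    return "".join(CHART[pair] for pair in pairs)
```

With this layout, `decode(["top", "right", "top_right", "up"])` spells `HI`.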

This process can be automated: using a computer vision algorithm, a webcam can track his eye movements. Ideally, this system will be non-invasive, so a camera will not need to be mounted on a person’s head. A person should be able to communicate through eye movements while looking at a laptop a reasonable distance away. A coefficient can be assigned to each letter depending on the likelihood of that letter being selected, and this coefficient can vary depending on the word being spelt.
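One way to compute such coefficients is to look at every vocabulary word that starts with the letters spelt so far and count which letter comes next, weighted by how often each word is used. This is a sketch under that assumption; the vocabulary and its counts are hypothetical.

```python
from collections import Counter
from typing import Dict


def next_letter_weights(prefix: str, vocabulary: Dict[str, int]) -> Dict[str, float]:
    """Probability of each next letter given the letters spelt so far.

    vocabulary maps words to (hypothetical) usage counts.
    """
    prefix = prefix.lower()
    counts: Counter = Counter()
    for word, freq in vocabulary.items():
        word = word.lower()
        if word.startswith(prefix) and len(word) > len(prefix):
            counts[word[len(prefix)]] += freq   # letter that would come next
    total = sum(counts.values())
    return {letter: n / total for letter, n in counts.items()} if total else {}


vocab = {"water": 3, "want": 1, "help": 2}
```

After "wa", this vocabulary gives `t` a weight of 0.75 and `n` a weight of 0.25, so the interface could offer `t` first or place it where it takes the least effort to reach.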

[1, Figure 1: Eye Chart Used in the Becker Communication System]

References

[1] G. Becker. Vocal Eyes Becker Communication System. 2012. Available: http://jasonbecker.com/eye_communication.html

BlinkToText

BlinkToText is an open-source, free, and easy-to-use software program that converts eye blinks to text.

Swaleh Owais