-

Sublime

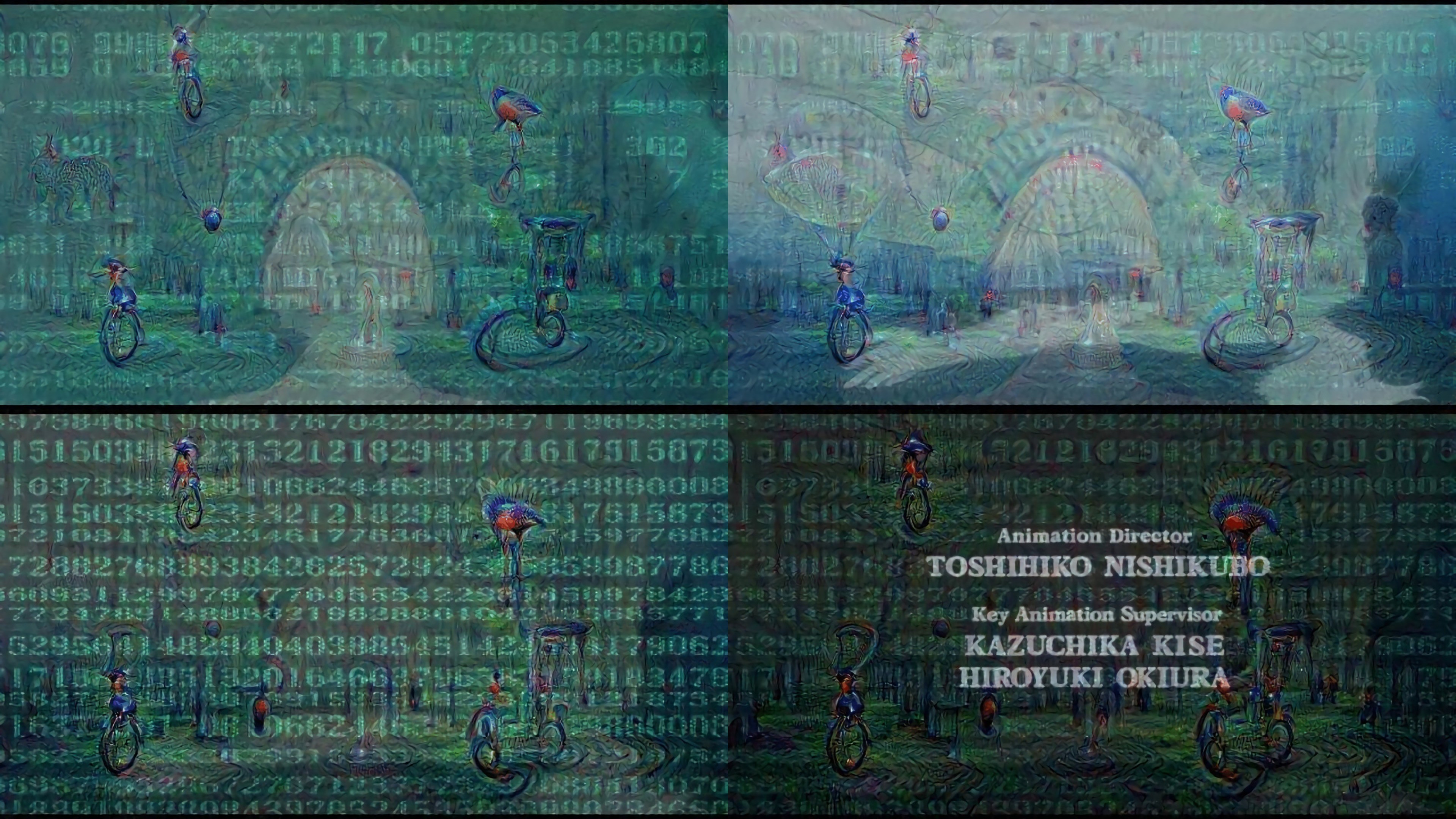

11/07/2015 at 07:53

Artificial Neural Networks and Virtual Reality

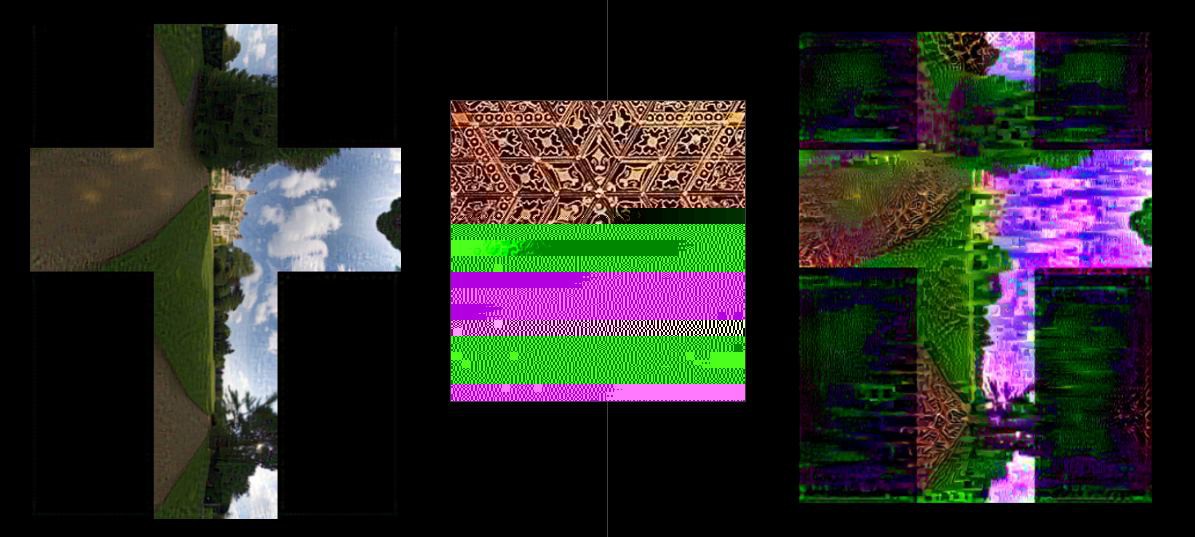

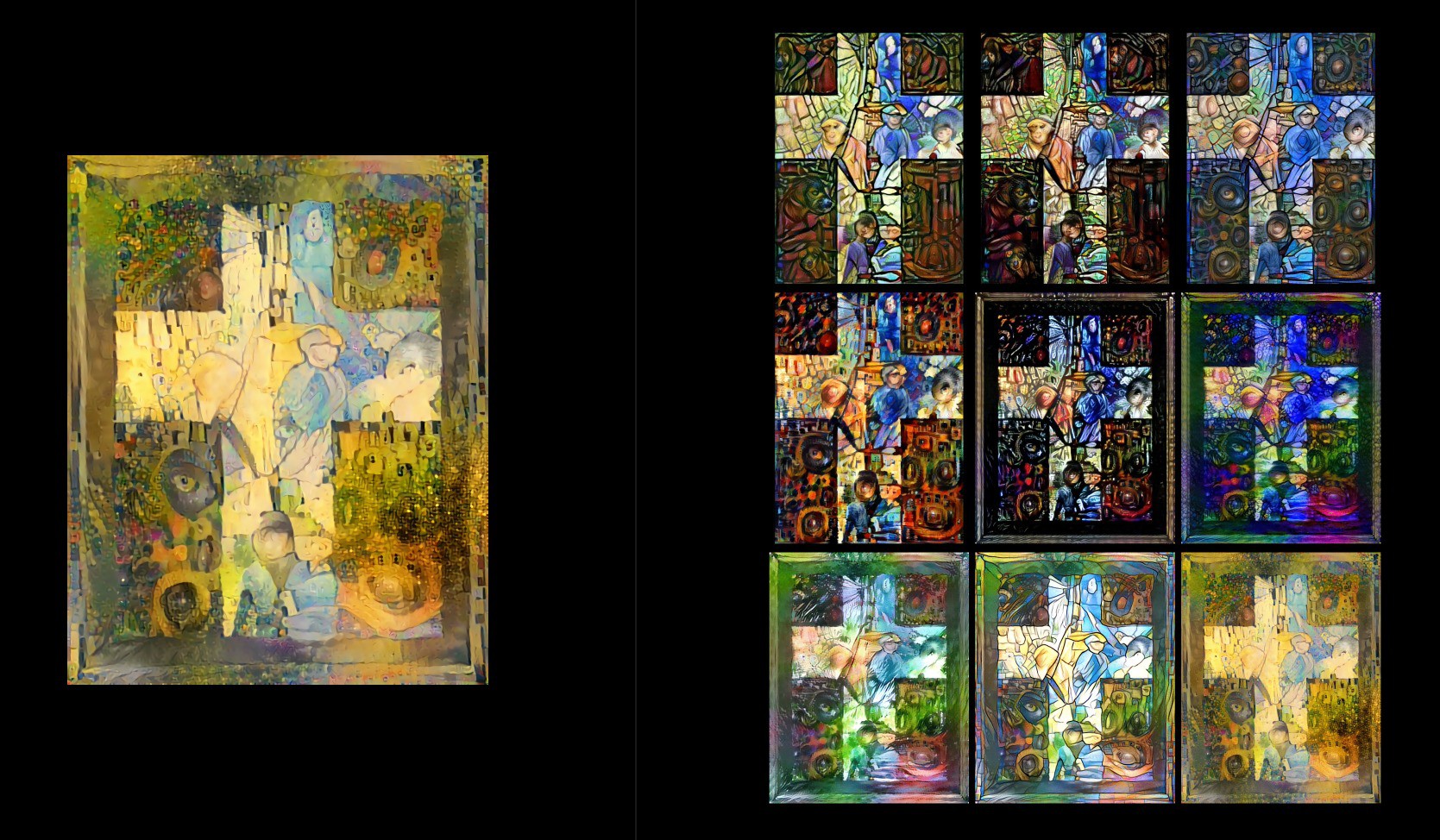


A vision came to me through the 802.11 in the form of a dream-catcher. I had a collection of panospheres that I scraped, with permission, from good John, and they inspired me to create.

I spun up some Linux machines and began to make something. I'll let the pictures do most of the talking.

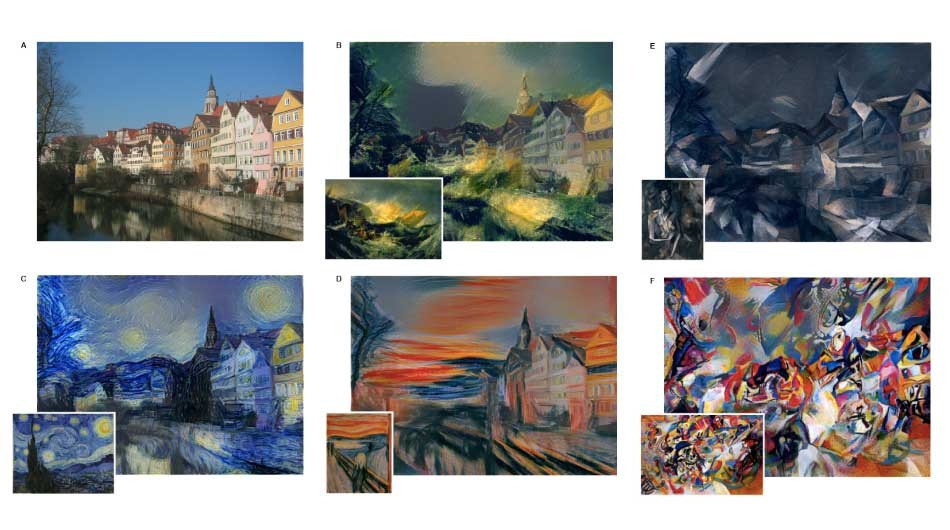

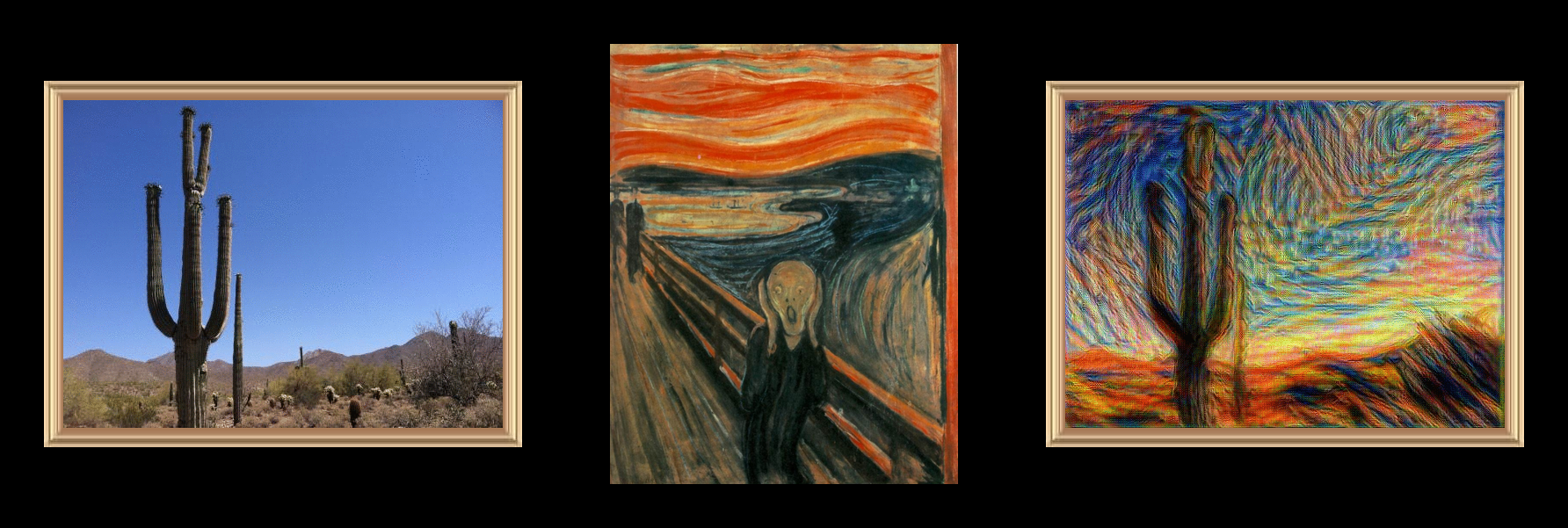

```bash
#!/bin/bash
# cut the strip and turn it into a cube-map
# adjoin http://www.fmwconcepts.com/imagemagick/adjoin/index.php
# assumes the current directory holds exactly one .jpg strip
i=$(ls | grep jpg)
# split the strip into 256x256 tiles: out_00.jpg, out_01.jpg, ...
convert "$i" -crop 256x256 out_%02d.jpg
# stack tiles 04, 01, 05 vertically to form the middle column of the cross
adjoin -m V out_04.jpg out_01.jpg out_05.jpg test.jpg
# attach tile 00 on the left, then tiles 02 and 03 on the right
adjoin -m H -g center out_00.jpg test.jpg test1.jpg
adjoin -m H -g center test1.jpg out_02.jpg out_03.jpg "$i"
mogrify -rotate 90 "$i"
# clean up intermediate files
rm {test,test1}.jpg
rm out_*
```

Convolutional neural networks are loosely modelled on the biological visual system and learn layered representations of their input, most often images. I ran neural-style with style images from a variety of artists throughout history, such as Picasso, Chirico, Monet, Dali, and Klimt, as well as stained glass scenes of the nativity, video games, and other experiments.


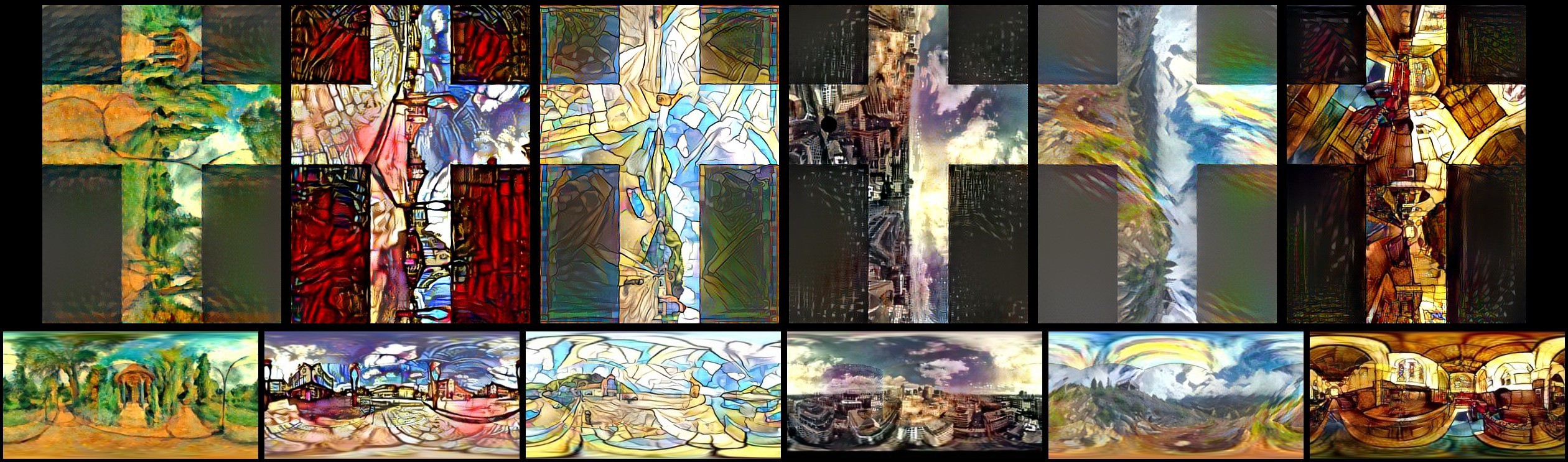

Frames are saved between iterations, offering a view into the mind of the program.


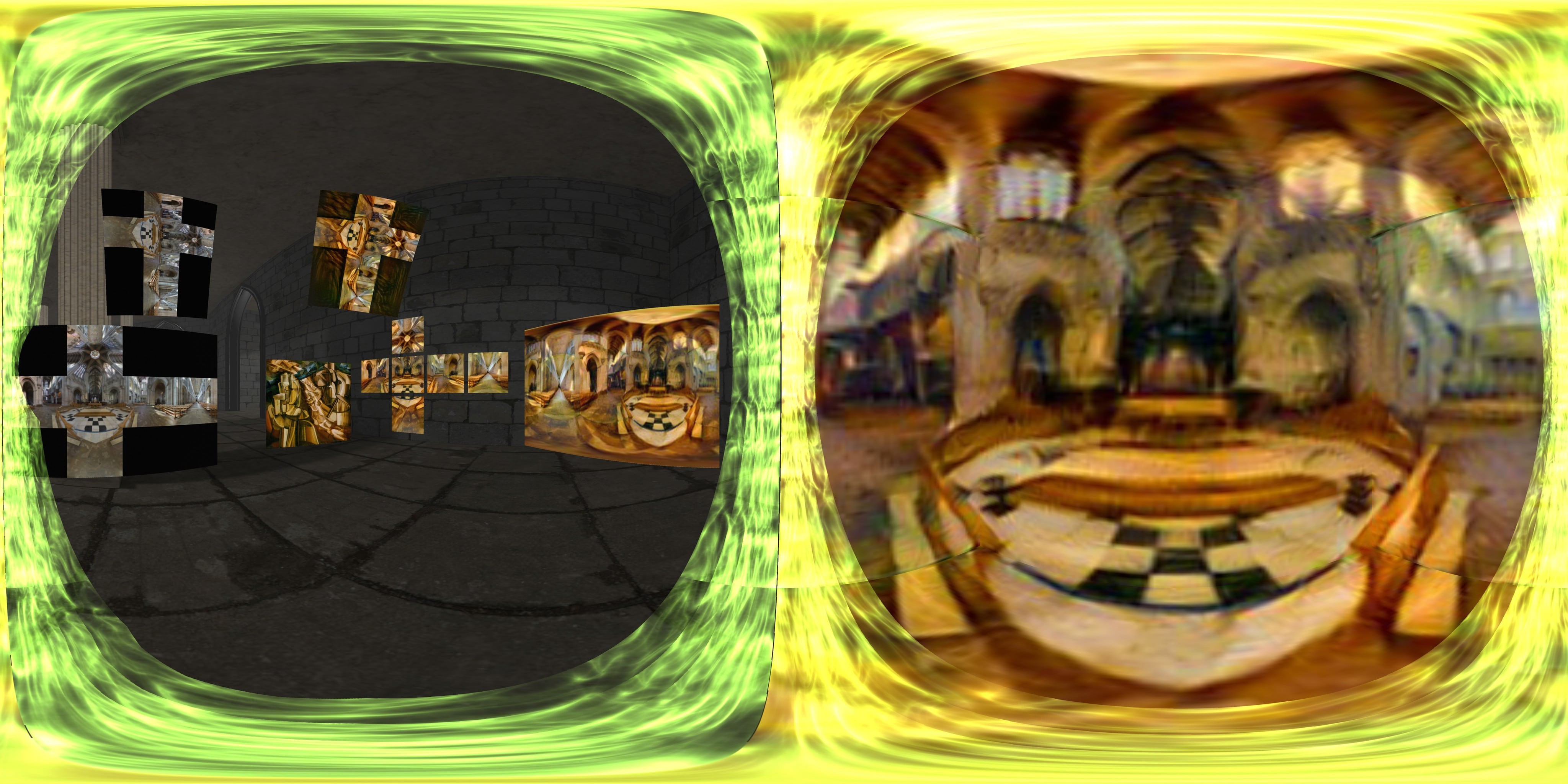

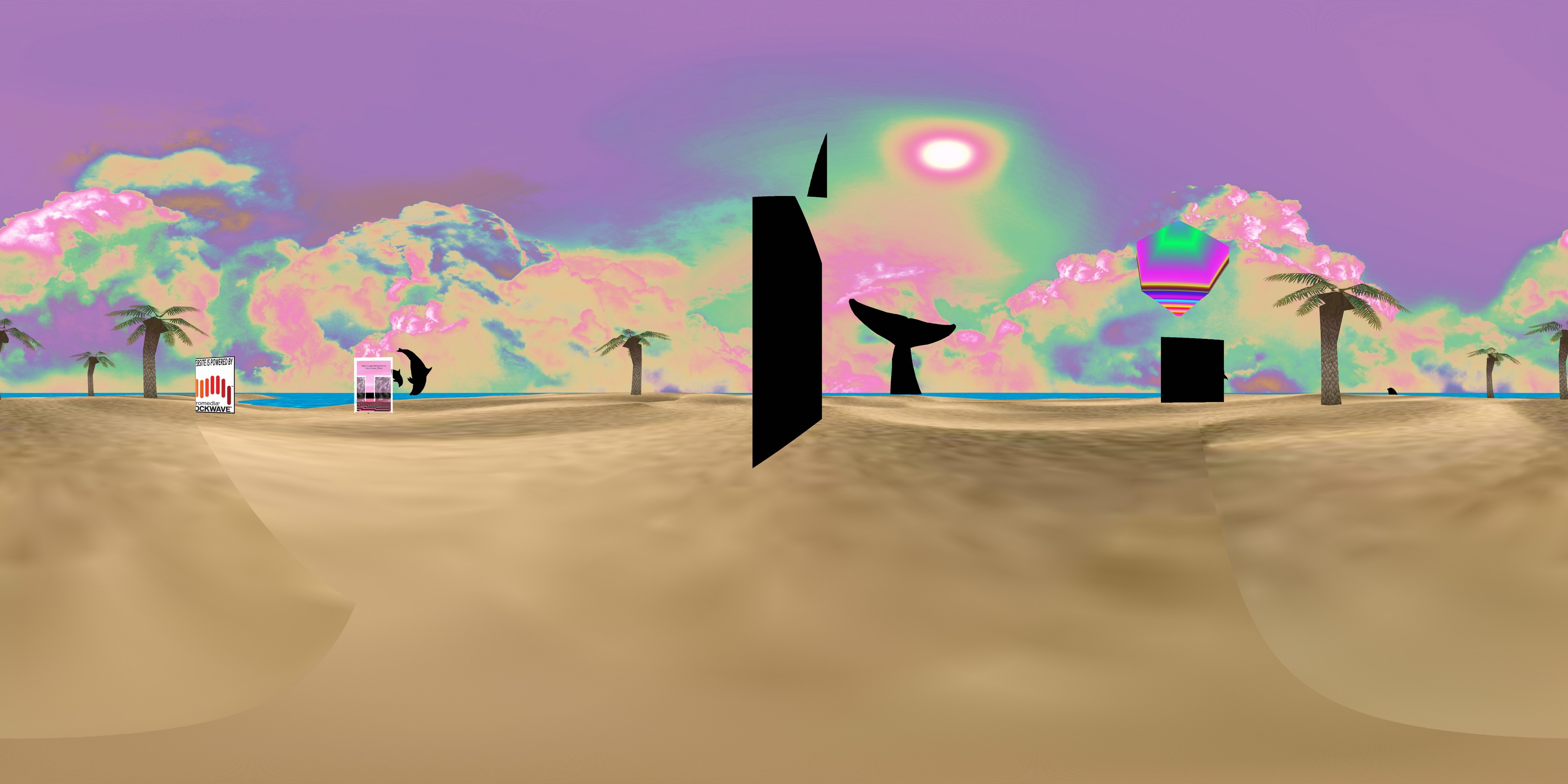

The cross is a portal into a world that is rendered by unfolding the cross into a skybox.

[ View above image in WebVR here ]

After chopping it up into cubemaps, I made a simple FireBoxRoom to render the skybox:

```html
<html>
  <head>
    <title>Sublime</title>
  </head>
  <body>
    <FireBoxRoom>
      <Assets>
        <AssetImage id="sky_down" src="09.jpg" tex_clamp="true" />
        <AssetImage id="sky_right" src="06.jpg" tex_clamp="true" />
        <AssetImage id="sky_front" src="05.jpg" tex_clamp="true" />
        <AssetImage id="sky_back" src="07.jpg" tex_clamp="true" />
        <AssetImage id="sky_up" src="01.jpg" tex_clamp="true" />
        <AssetImage id="sky_left" src="04.jpg" tex_clamp="true" />
      </Assets>
      <Room use_local_asset="plane" visible="false"
            pos="0.000000 0.000000 0.000000"
            xdir="-1.000000 0.000000 -0.000000"
            ydir="0.000000 1.000000 0.000000"
            zdir="0.000000 0.000000 -1.000000"
            col=""
            skybox_down_id="sky_down" skybox_right_id="sky_right"
            skybox_front_id="sky_front" skybox_back_id="sky_back"
            skybox_up_id="sky_up" skybox_left_id="sky_left"
            default_sounds="false" cursor_visible="true">
      </Room>
    </FireBoxRoom>
  </body>
</html>
```
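The file numbers used in the room above (01, 04, 05, 06, 07, 09) are consistent with a horizontal-cross image cut into 256x256 tiles numbered row by row, left to right, which is the order an ImageMagick `-crop 256x256` tile split produces. The sketch below assumes a 4-column by 3-row cross laid out as in `CROSS_CELLS` (the layout is my assumption, not read from the original files) and computes each face's tile number and pixel box:

```python
# Sketch: locate each skybox face inside a horizontal-cross cube map.
# Assumed layout (4 columns x 3 rows), tiles numbered row-major from 0:
#        [up]
# [left][front][right][back]
#        [down]
CROSS_CELLS = {  # face -> (column, row)
    "up": (1, 0),
    "left": (0, 1),
    "front": (1, 1),
    "right": (2, 1),
    "back": (3, 1),
    "down": (1, 2),
}


def face_tile_numbers(columns=4):
    """Row-major tile index of each face, matching a 'convert -crop WxH' split."""
    return {face: row * columns + col for face, (col, row) in CROSS_CELLS.items()}


def face_crop_boxes(tile=256):
    """(left, top, right, bottom) pixel box of each face inside the cross image."""
    return {
        face: (col * tile, row * tile, (col + 1) * tile, (row + 1) * tile)
        for face, (col, row) in CROSS_CELLS.items()
    }


if __name__ == "__main__":
    # Print AssetImage lines in the same shape as the FireBoxRoom above
    for face, n in face_tile_numbers().items():
        print(f'<AssetImage id="sky_{face}" src="{n:02d}.jpg" tex_clamp="true" />')
```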

There were some obvious defects:

One can see the outline of the skybox, caused by the edges interfering with each other when the cross was processed:

A GTX 960 can only manage the default 512-pixel output before running out of CUDA memory:

```text
/usr/local/bin/luajit: /usr/local/share/lua/5.1/cudnn/SpatialConvolution.lua:96: cuda runtime error (2) : out of memory at /home/alu/repo/cutorch/lib/THC/THCStorage.cu:44
```

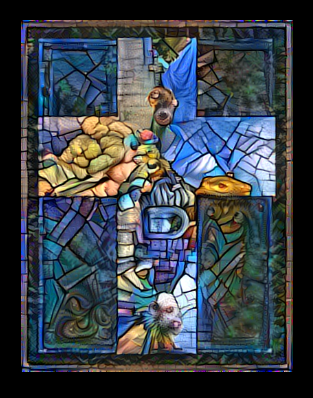

I proceeded to create one final piece before ending the experiment by combining several different neural networks: waifu2x, deepdream, and neural-style.

You can watch the video of the transformation here:

The final piece after many layers:


Part 2: Minerva Outdoor Gallery

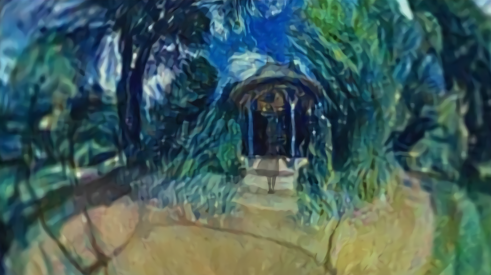

What if, instead of looking at art in a virtual gallery, you could step inside the art and be in the painting?

This time, I converted the cube maps into equirectangular images first so that the edges blend seamlessly into one image. The equirectangular format is also better suited to video. You can capture an equirectangular screenshot in Janus with [p] or Ctrl+F8.
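Going from cube maps to an equirectangular image means asking, for every output pixel, which cube face and which point on that face it looks at. A minimal sketch, assuming u runs left to right over longitude -180° to 180°, v runs top to bottom over latitude 90° to -90°, +z is the front face and +y is up; the face orientations are assumptions, since tools disagree on them:

```python
import math


def equirect_to_cube(u, v):
    """Map equirectangular coordinates (u, v in [0, 1]) to (face, s, t).

    s and t are in [0, 1] on the returned face, with t growing downward.
    Assumed convention: front = +z, right = +x, up = +y.
    """
    lon = (u - 0.5) * 2 * math.pi
    lat = (0.5 - v) * math.pi
    # Unit direction vector for this pixel
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    ax, ay, az = abs(x), abs(y), abs(z)
    # The dominant axis decides the face; (sc, tc) are the in-face coordinates
    if ax >= ay and ax >= az:
        face, sc, tc, ma = ("right", -z, -y, ax) if x > 0 else ("left", z, -y, ax)
    elif ay >= az:
        face, sc, tc, ma = ("up", x, z, ay) if y > 0 else ("down", x, -z, ay)
    else:
        face, sc, tc, ma = ("front", x, -y, az) if z > 0 else ("back", -x, -y, az)
    return face, (sc / ma + 1) / 2, (tc / ma + 1) / 2
```

To build the equirectangular image, call it at each pixel centre, ((x + 0.5) / width, (y + 0.5) / height), and sample the returned face at (s, t).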

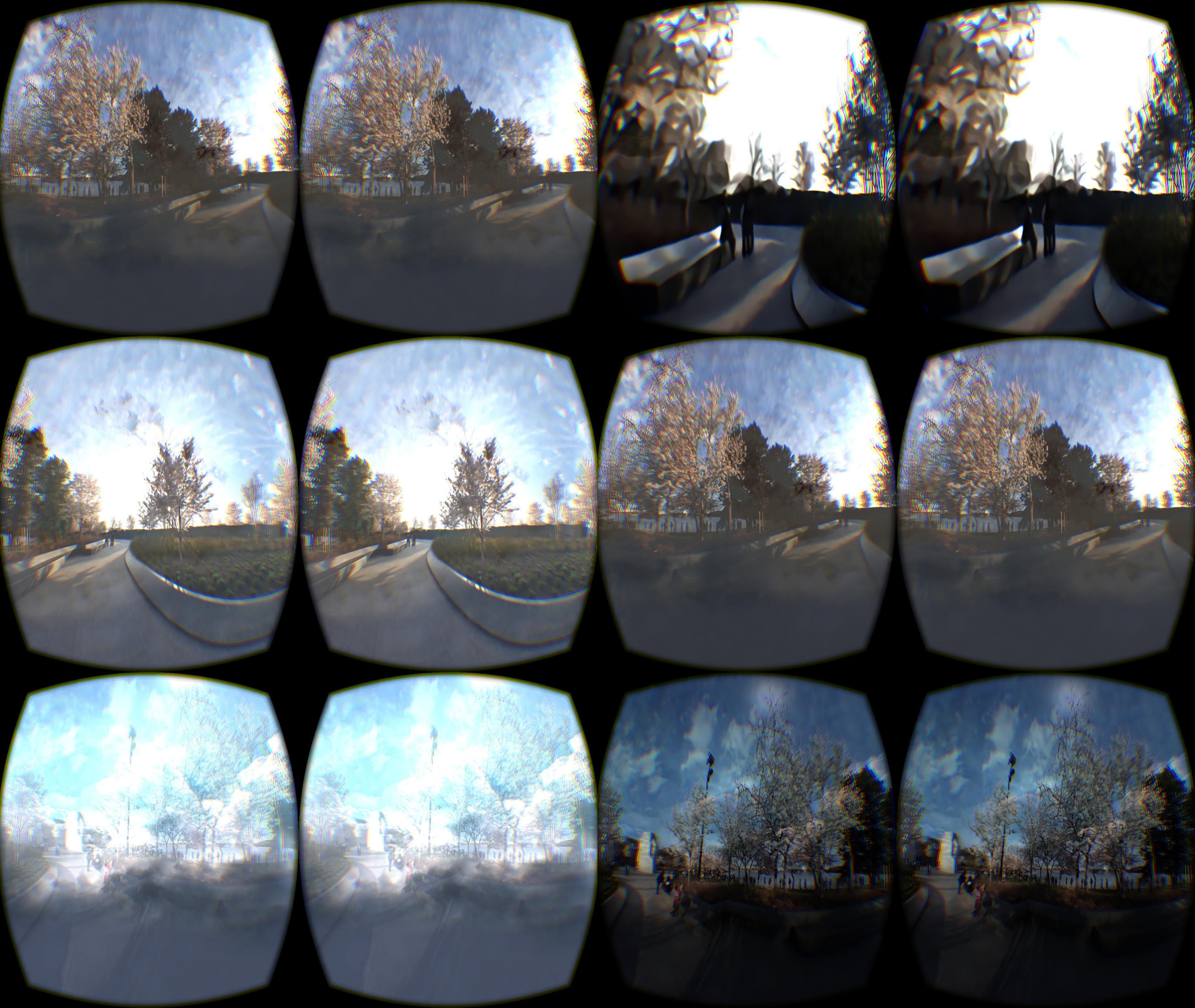

It takes about 2 minutes to process a single frame with 400 iterations at 512-pixel output resolution. HD video will have to wait until I can upgrade the setup.

View with Cardboard:

http://alpha.vrchive.com/image/PN

http://alpha.vrchive.com/image/P5

http://alpha.vrchive.com/image/PO

http://alpha.vrchive.com/image/oF

http://alpha.vrchive.com/image/ot

http://alpha.vrchive.com/image/Pa

http://alpha.vrchive.com/image/eJ

The beginning is near


-

Janus Fleet

10/26/2015 at 23:31

Link to Part 1: Dockerized Multiserver

Part 2 of Microservice Architectures for a Scalable and Robust Metaverse


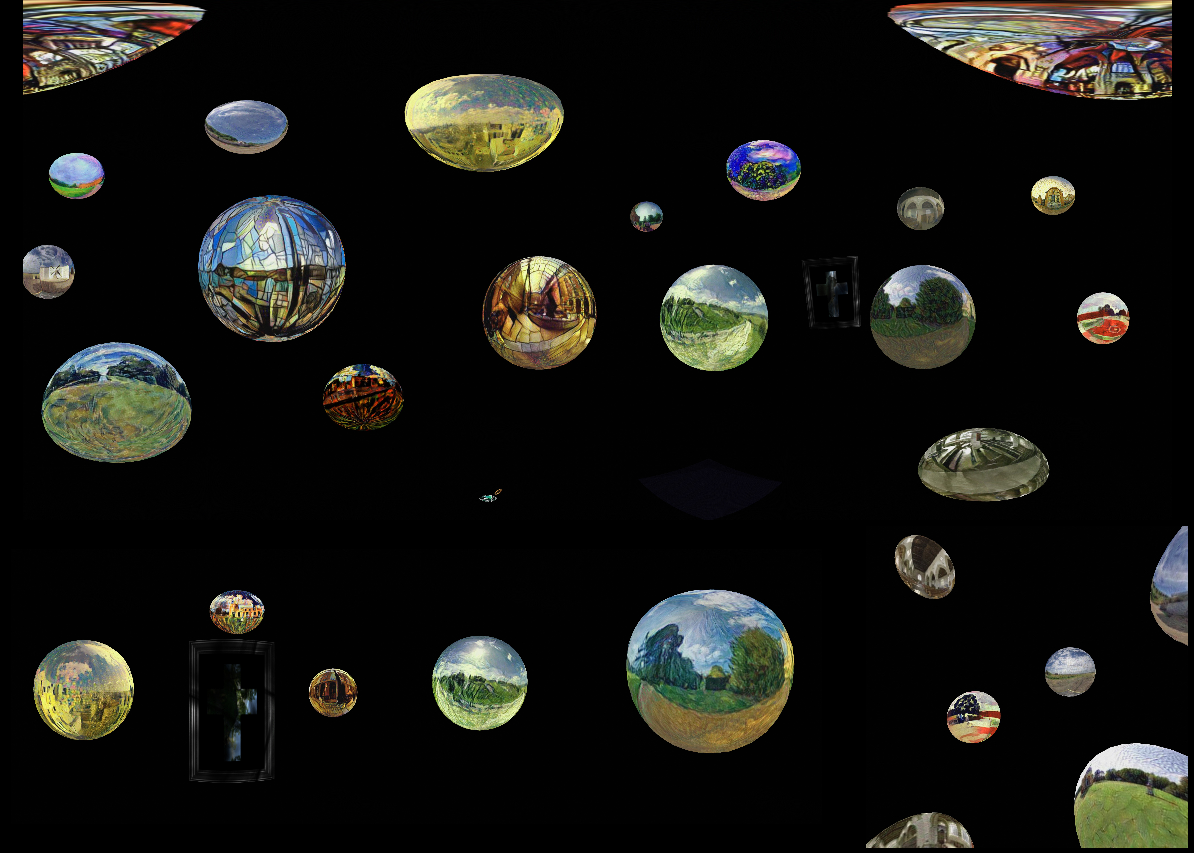

The Janus multi-server enables multiplayer by allowing one client to share information with another. The server software talks to every connected JanusVR client to know who else is in the same virtual space and where the other avatars are. It is what glues the Metaverse together.

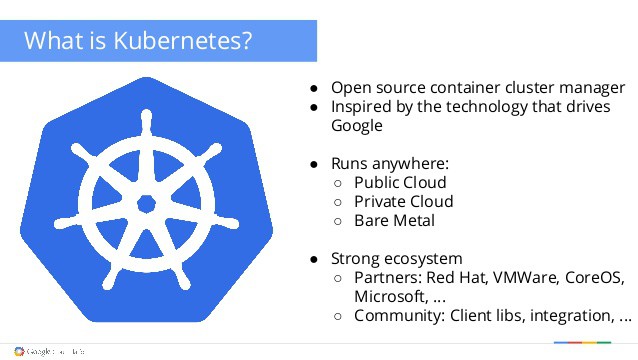

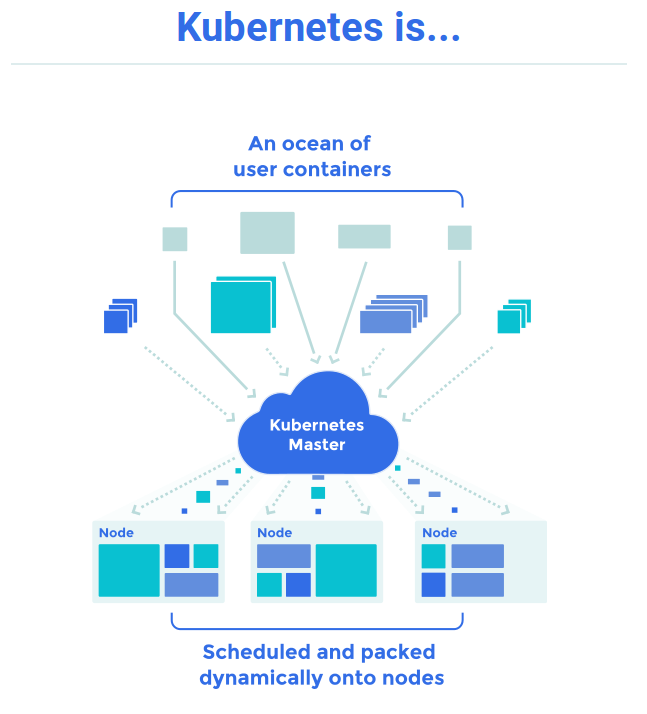

It's time to give the Janus-Server the love and attention it needs to be more robust when receiving unexpected amounts of traffic. The solution I have been investigating is Kubernetes: a system for managing containerized applications across a cluster of nodes.

The idea is that you abstract away all your hardware from developers and the 'cluster management tool' sorts it all out for you. Then all you need to do is give a container to the cluster, give it some info (keep it running permanently, scale up if X happens etc) and the cluster manager will make it happen.

Kubernetes is Greek for "pilot" or "helmsman of a ship".

Kubernetes builds upon a decade and a half of experience at Google running production workloads at scale, combined with best-of-breed ideas and practices from the community.

Reference: Google paper [Lessons Learned while developing Google's Infrastructure]

Production-ready as of v1.0.1: https://github.com/kubernetes/kubernetes/releases/tag/v1.0.1

Key Points:

- Isolation: Predictable, idealized state in which dependencies are sealed in.

- Portability: Reliable deployments. Develop here, run there.

- Efficient: Optimized packing; idealized environments for better scaling.

- Robust: Active monitoring, self healing. Easy and reliable continuous integration.

Introspection is vital. Kubernetes ships with cAdvisor [ https://github.com/google/cadvisor ] for resource monitoring and has a system for aggregating logs to troubleshoot problems quickly. You can inspect and debug your application with command execution, port forwarding, log collection, and resource monitoring via the CLI and UI.

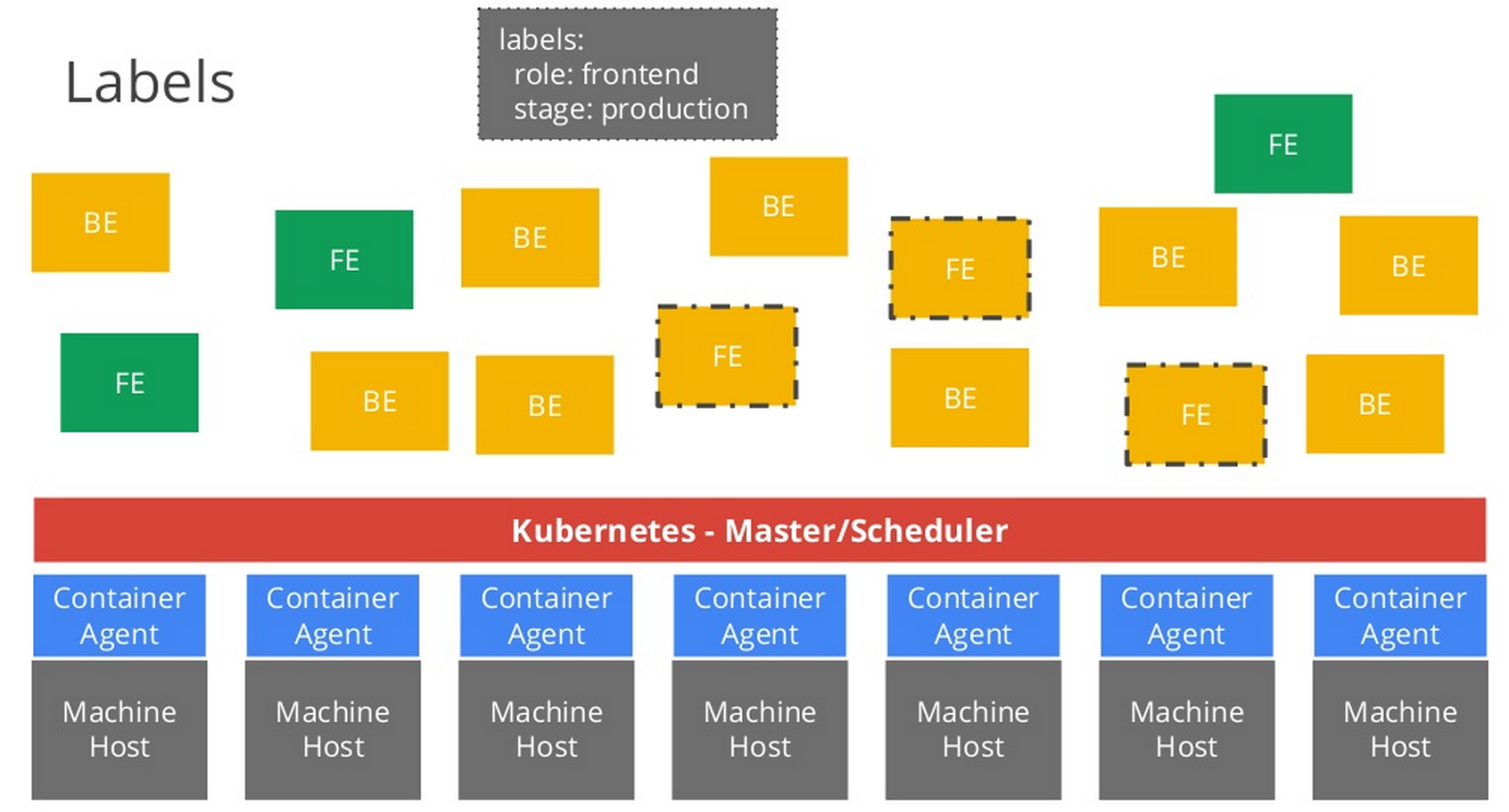

Kubernetes handles scheduling onto nodes in a compute cluster and actively manages workloads to ensure that their state matches the user's declared intentions. Using the concepts of "labels" and "pods", it groups the containers that make up an application into logical units for easy management and discovery.

https://kubernetes.io/docs/
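Two ideas from the paragraph above, sketched as toy Python rather than Kubernetes code (pod names, labels, and replica counts are made up for illustration): a label selector that picks the pods whose labels include every key/value pair asked for, and a reconciliation step that compares the declared replica counts with what is running and lists the actions needed to close the gap.

```python
def select(pods, selector):
    """Names of pods whose labels contain every key/value pair in `selector`."""
    return sorted(
        name
        for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


def reconcile(desired, running):
    """Actions that move `running` toward `desired` (both map app -> replicas)."""
    actions = []
    for app in sorted(set(desired) | set(running)):
        want, have = desired.get(app, 0), running.get(app, 0)
        if have < want:
            actions.append(("start", app, want - have))
        elif have > want:
            actions.append(("stop", app, have - want))
    return actions


def apply_actions(actions, running):
    """Pretend to carry out the actions and return the new running state."""
    state = dict(running)
    for verb, app, count in actions:
        state[app] = state.get(app, 0) + (count if verb == "start" else -count)
    return {app: n for app, n in state.items() if n > 0}


if __name__ == "__main__":
    pods = {
        "janus-1": {"app": "janus-server", "tier": "backend"},
        "janus-2": {"app": "janus-server", "tier": "backend"},
        "db-1": {"app": "mysql", "tier": "backend"},
    }
    print(select(pods, {"app": "janus-server"}))  # ['janus-1', 'janus-2']

    desired = {"janus-server": 3, "mysql": 1}
    running = {"janus-server": 1, "mysql": 1, "old-build": 2}
    actions = reconcile(desired, running)
    print(actions)  # [('start', 'janus-server', 2), ('stop', 'old-build', 2)]
    print(apply_actions(actions, running) == desired)  # True
```

A real controller runs the comparison in a loop, so a crashed container simply shows up as a difference on the next pass.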

Best practice for containerized applications is to run one process per container, so the node.js server should be linked to the MySQL database container. Decoupling applications into multiple containers makes it much easier to scale horizontally and reuse containers. Let's start with the node.js portion. Note that this current implementation is nearly working. Make sure Docker is installed, then run docker pull node in a terminal.

**This current Dockerfile is still broken**

```dockerfile
FROM node
EXPOSE 8080
# Clone the server and install its dependencies, plus forever for keeping it running
RUN git clone https://gitlab.com/alusion/avalon-server.git /avalon-server
WORKDIR /avalon-server
RUN npm install && \
    npm install forever -g && \
    npm install forever-monitor
RUN chmod +x start_node.sh
ENTRYPOINT ["/avalon-server/start_node.sh"]
```

forever is a simple CLI tool for ensuring that a given script runs continuously.

config.js currently uses hardlinks; this will be changed in the future.

I have some questions for the linux container community:

- Does it matter whether the node app runs as a regular user as opposed to root?

- Why does the server seem to exit after it starts?

- How does one generate secure certificates without the interactive prompt? (my guess is a bash script but who wants to config?)
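On the certificate question, openssl req can skip its prompts when the subject is given up front with -subj and key encryption is turned off with -nodes. A sketch that builds such a command, assuming a self-signed certificate is acceptable for testing and that the server reads PEM files; the file names key.pem and cert.pem are hypothetical, since I don't know what generate_key writes:

```python
import subprocess


def self_signed_cert_cmd(key_path, cert_path, common_name, days=365):
    """openssl command that writes an unencrypted key and a self-signed cert with no prompts."""
    return [
        "openssl", "req", "-x509",
        "-newkey", "rsa:2048",
        "-nodes",                       # leave the key unencrypted: no passphrase prompt
        "-keyout", key_path,
        "-out", cert_path,
        "-days", str(days),
        "-subj", f"/CN={common_name}",  # subject on the command line: no interactive questions
    ]


def generate(key_path="key.pem", cert_path="cert.pem", common_name="localhost"):
    """Run the command; raises CalledProcessError if openssl fails."""
    subprocess.run(self_signed_cert_cmd(key_path, cert_path, common_name), check=True)
```

The same argument list works as a one-line shell command inside a start-up script, which keeps the build non-interactive.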

The start_node.sh script looks as follows:

```sh
#!/bin/sh
# Generate the certificates
sh generate_key
# Start the server in the foreground; exec makes node the container's main
# process, so the container runs for as long as the server does
exec node server.js
# Not sure yet where to add this but the container keeps exiting.
# tail the logs
# tail -f server.log
```

If I run tail -f server.log, the container stays open, but the build fails because it hangs there.

To get the MySQL image, run docker pull mysql in a terminal.

**This Dockerfile is still broken**

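```dockerfile
FROM mysql
# The official image reads these variables on first start-up to set the root
# password and create the database and user. (RUN VAR=... only sets a shell
# variable for that single build step, so the values never reach the server.)
ENV MYSQL_ROOT_PASSWORD="changeme" \
    MYSQL_DATABASE="janusvr" \
    MYSQL_USER="janusvr" \
    MYSQL_PASSWORD="janusvr"
# .sql files in this directory are imported into MYSQL_DATABASE on first start-up
ADD janusvr.sql /docker-entrypoint-initdb.d/
RUN adduser --home /home/janus --disabled-password --gecos '' janus
EXPOSE 3306
# No CMD here: the image's default command starts mysqld
```

The latest MySQL image reads environment variables to configure the database.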

```sh
## ------ MySQL Container ------ ##

# mysql basics
# SHOW DATABASES;
# USE janus;
# SHOW TABLES;
# SHOW FIELDS FROM users;
# DESCRIBE users;

# Start a mysql server instance
docker run --name some-mysql -e MYSQL_ROOT_PASSWORD=my-secret-pw -d mysql:tag

# Connect to MySQL from an application
docker run --name some-app --link some-mysql:mysql -d application-that-uses-mysql

# View MySQL Server log through Docker's container log
docker logs some-mysql
```

Help Needed

The janusvr.sql tables and the other files can be found here: https://gitlab.com/alusion/avalon-server

In order to test whether the multiserver works, you can clone https://gitlab.com/alusion/paprika and change line 18 in https://gitlab.com/alusion/paprika/blob/master/dcdream.html to point to the multiserver address given by the Docker container:

```html
<Room server="DOCKER_IP_ADDRESS" port="5567" ...
```

Docker for the Raspberry Pi: http://blog.hypriot.com/downloads/ might be good for testing...


The lead engineer of Kubernetes describes the differences between Mesos and Kubernetes + Fleet / Swarm:

http://stackoverflow.com/questions/27640633/docker-swarm-kubernetes-mesos-core-os-fleet

The Open Container Initiative is a lightweight, open governance structure, to be formed under the auspices of the Linux Foundation, for the express purpose of creating open industry standards around container formats and runtime.

http://www.opencontainers.org/

Another solution recommended by a brilliant hacker: http://opennebula.org/

To be continued...

-

Minerva

10/08/2015 at 02:35

Minerva is the Roman goddess of wisdom and sponsor of arts, trade, and strategy.


Yesterday marked the opening of MinervaVR. You can explore the gallery from the main lobby in JanusVR.

The virtual art show went well; now my work producing a mixed reality show here in LA begins. I have begun to print designs and document progress on Instagram [alusion_vr]. I already sold my first T-shirt too! More prints and designs, including hoodies, will be on the way soon. If you'd like one, I accept PayPal / Bitcoin, and shirts are $25 each.


Goods will be sold at the art show event in Los Angeles and can be ordered online [email]. Will release more details soon. The Net-Art / Surveillance / Mixed Reality themed part of the art show will be simultaneously virtual and physical via 360 livestreaming and projection mapping.


For one of my installation pieces, I plan to have realtime glitching by embodying the artist within AVALON. Meet AVA: Autonomous Virtual Artist.


One antenna monitors and extracts images that pass through the 802.11 spectrum, while the other connects to other AVALON nodes to form a mesh net. AVA is equipped with cameras so that it can process the seen with the unseen for interesting new compositions [will update to a 360 camera someday].

Explore the world around you in ways that you didn't imagine. Information that passes through here shall stain elements in the virtual worlds in interesting ways, whether it be a skybox, the textures on objects in VR, framed surfaces, or whatever else. Think chroma-key:


AVA can replace the textures with camera passthrough or debris from the WiFi traffic.
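The chroma-key idea boils down to this: wherever a texture pixel is close enough to a key colour, show a pixel from another source instead (a camera frame or a carved WiFi image). A minimal per-pixel sketch, assuming both images are already the same size and flattened into lists of RGB tuples; the green key and the tolerance of 60 are arbitrary choices, not values from AVA:

```python
import math


def chroma_key(foreground, background, key=(0, 255, 0), tolerance=60.0):
    """Swap in the background wherever the foreground is close to the key colour.

    foreground, background: equal-length lists of (r, g, b) pixels.
    Closeness is plain Euclidean distance in RGB space (math.dist, Python 3.8+).
    """
    return [
        bg if math.dist(fg, key) <= tolerance else fg
        for fg, bg in zip(foreground, background)
    ]


texture = [(0, 255, 0), (200, 30, 30), (10, 240, 20)]
wifi_debris = [(1, 1, 1), (2, 2, 2), (3, 3, 3)]
print(chroma_key(texture, wifi_debris))  # [(1, 1, 1), (200, 30, 30), (3, 3, 3)]
```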

If you're an artist interested in hanging your work the way it's meant to be seen while reaching a global audience all the time, MinervaVR will sponsor you. Animators can showcase their animations or even immerse the user within a 360 video gallery!

My next goal is to give the Janus multi-server the love and attention it needs, then work on the coolest Bitcoin integration, one that will have people literally throwing money at their screens ;)

Hack with us. #janusvr [freenode] babylon.vrsites.com [mumble]


See you in the metaverse. alusion.net

-

The VR Art Gallery pt. 1

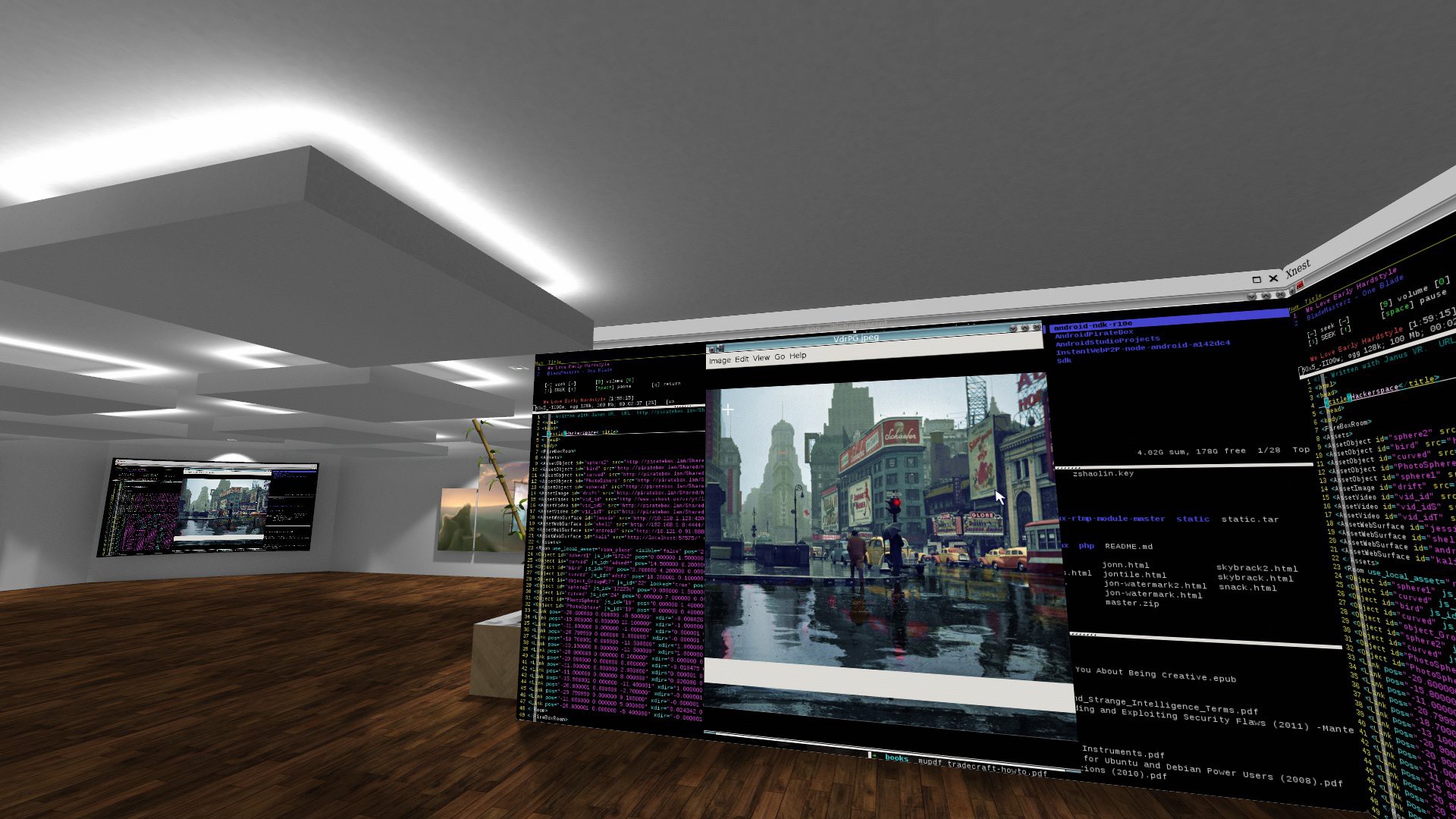

09/29/2015 at 05:03

Since my last post, I've shared my neural network inspired creations with others, and the idea of creating a VR art gallery came about a week ago, so I'd like to dedicate this post to how quickly we are able to materialize ideas into VR.


The theme of my art has been 'Made with Code', combining the power of the Linux command line with some creative hacking. For me, the art is all about the process I use to achieve the end result. Glitch Art is one of my favorite styles, and I highly suggest checking out this excellent short introduction by PBS explaining the movement:

If you read my previous posts, you'll know that my workflow typically involves a combination of FFmpeg and ImageMagick strung together with bash loops to process a large set of images and videos at a time. It's smart to keep the ImageMagick documentation open for easy reference when figuring out how to do certain manipulations from the command line.

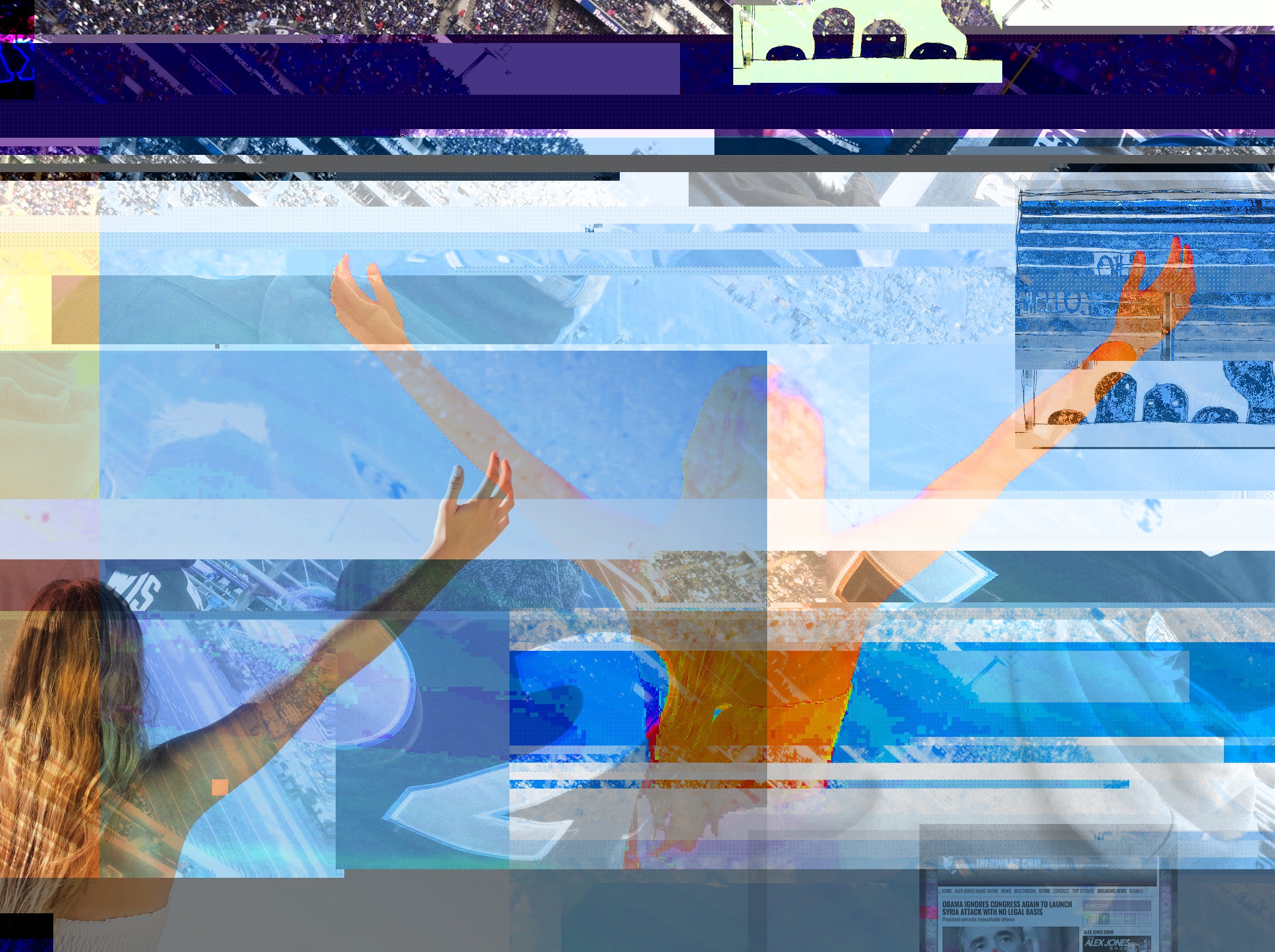

Databending is the act of corrupting files containing data with the intent to create Glitch Art. The results of which can be described as beautifully broken:

This image is constructed entirely by code.
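One simple form of databending is to overwrite a handful of random bytes in a file while leaving its header alone, so the file still opens but decodes into something beautifully broken. A sketch of that, assuming a fixed 512-byte "safe" header (an arbitrary number; real formats vary, and a JPEG can stop decoding entirely if a corrupted byte lands on a marker, so keep n_bytes small):

```python
import random
import sys


def databend(data, n_bytes=10, header_len=512, seed=None):
    """Return a copy of `data` with `n_bytes` random bytes overwritten after `header_len`."""
    rng = random.Random(seed)
    bent = bytearray(data)
    if len(bent) > header_len:
        for _ in range(n_bytes):
            pos = rng.randrange(header_len, len(bent))
            bent[pos] = rng.randrange(256)
    return bytes(bent)


if __name__ == "__main__" and len(sys.argv) == 3:
    # usage: python databend.py input.jpg output.jpg
    with open(sys.argv[1], "rb") as f:
        original = f.read()
    with open(sys.argv[2], "wb") as f:
        f.write(databend(original, n_bytes=20))
```

Passing a seed makes a pleasing glitch reproducible, which helps when picking favourites out of a batch.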

I was inspired by one of my favorite artists, Julian Oliver, who creates awesome electronic artworks that combine creative hacking and information visualization.

In order to create a less deterministic method of sourcing content for artworks, I took to the air and used images extracted from public, insecure WiFi traffic. This is why you should use HTTPS Everywhere.

I had kept a packet capture from a cool coffee shop I visited in Colorado as a memento. I used a method of extracting images from captured wireless traffic. The good parts are here:

```bash
#!/bin/bash
# Requires aircrack-ng, foremost, tcpflow and ImageMagick on a UNIX host
# Extract JPG/PNG/GIF images from captured network traffic
# Shell script adapted from Julian Oliver

PCAP=$1

# We have to remove the outer 802.11 (wireless) headers from
# each packet or we won't be able to reach those data packets
# deep inside. airdecap-ng, normally used for decrypting WEP/WPA
# packets, is great at this:
airdecap-ng "$PCAP"

# Write out filename of the decapped file
DCAP=${PCAP%.*}-dec.$(echo "$PCAP" | awk -F . '{print $NF}')

# Create a directory based on the decap file name
DIR=$(basename "$DCAP" | cut -d '.' -f 1)

# Make the directory and move into it.
mkdir "$DIR" && cd "$DIR" || exit 1

# Run tcpflow on the decapped pcap data. This 'flows' out-of-order
# packets into TCP streams for each Local IP:PORT <-> Remote IP:PORT
# session. It then writes out binary blobs for each session to the
# current directory. These blobs contain the data.
tcpflow -r "../$DCAP"

# Make a data directory
mkdir data

# Run foremost on the flowed streams, extracting any data types
# it finds and writing them to the 'data' folder.
foremost -i * -o data
```

The data gets sorted into separate folders according to file format [PNG/GIF/JPG/ZIP]. It's even possible to visualize the image traffic because of the way tcpflow organizes the data:
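tcpflow's job here is to turn packets that may arrive out of order, duplicated, or with holes into one byte stream per connection. A toy version of that idea for one direction of one connection, working on (sequence number, payload) pairs; padding holes with zero bytes is my choice for the sketch and not necessarily what tcpflow does:

```python
def reassemble(segments):
    """Join (seq, payload) TCP segments into one byte stream ordered by sequence number.

    Retransmitted bytes are skipped; a hole left by a missing segment is
    filled with zero bytes so later data keeps its offset.
    """
    stream = bytearray()
    next_seq = None
    for seq, payload in sorted(segments):
        if next_seq is None:
            next_seq = seq
        if seq > next_seq:
            # a segment never arrived: pad the gap
            stream += b"\x00" * (seq - next_seq)
            next_seq = seq
        overlap = next_seq - seq  # bytes of this segment we already have
        if overlap < len(payload):
            stream += payload[overlap:]
            next_seq = seq + len(payload)
    return bytes(stream)


print(reassemble([(105, b"world"), (100, b"hello"), (100, b"hello")]))  # b'helloworld'
```

A stream with holes like this is exactly the kind of input that yields the half-broken images carved out later.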


The glitchy pictures are an effect of the forensics utility foremost, which reassembles the image data from the incomplete TCP streams reflowed with tcpflow. With the better-known driftnet, the images tend to be captured cleanly off the network. One idea I would like to implement later is to assemble all the half-broken images into a giant "stream" by continually adjoining them into one long image file. I could then map this onto a rotating object in VR to create the effect of a flowing river of WiFi traffic :)
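Carvers such as foremost work by header/footer carving: for JPEGs, scan the raw bytes for the start-of-image marker (FF D8 FF) and cut at the following end-of-image marker (FF D9). A simplified sketch of that technique, not foremost's implementation; for example, a JPEG with an embedded thumbnail contains an earlier FF D9 that this sketch would stop at:

```python
SOI = b"\xff\xd8\xff"  # JPEG start-of-image marker followed by the next marker's 0xFF
EOI = b"\xff\xd9"      # JPEG end-of-image marker


def carve_jpegs(blob, max_size=10 * 1024 * 1024):
    """Return every slice of `blob` that starts with SOI and ends at the next EOI.

    Candidates with no EOI within `max_size` bytes are skipped.
    """
    found = []
    start = blob.find(SOI)
    while start != -1:
        end = blob.find(EOI, start + len(SOI), start + max_size)
        if end == -1:
            next_start = start + 1
        else:
            found.append(blob[start:end + len(EOI)])
            next_start = end + len(EOI)
        start = blob.find(SOI, next_start)
    return found
```

Run over a tcpflow blob, anything between the two markers comes out as an "image", including the zero-filled gaps and stray bytes that make the results glitchy.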

Next, I used a ruby script, made by a friend on IRC, that glitches images together. You can read more about the script here. Glitching groups of images together creates interesting collages from the inputs you give it. The output is saved as glitch.png. I wrote a nifty one-liner that makes 100 collages at a time by shuffling and glitching 10 random images at a time:

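```bash
#!/bin/bash
set -e
# The output directory must exist before glitch.png is copied into it
mkdir -p 1
for i in {001..100}
do
    # Pick 10 random JPEGs and glitch them together into glitch.png
    ls -1 *.jpg | shuf -n 10 | xargs ./glitch.rb 25
    cp glitch.png 1/out_$i.png
done

## Can also be made into an alias as well
# (single quotes so $i is expanded when the alias runs, not when it is defined)
alias kek='for i in {001..100}; do ls | grep jpg | sort -R | tail -n 10 | xargs ./glitch.rb 25 && cp glitch.png 1/out_$i.png; done'
```

The trick to making this script work is to make sure the directory is clear of corrupt files, which would cause the script to crash.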

Boom: this creates a batch of 100 different collages, which I can then go through, evolving the algorithm while picking the best ones. These were taken from a recent packet capture:

In order to randomize further, one could also pre-process the images to all be the same size with something like mogrify -resize 512x512! *.png, because the ruby script takes the largest image as the base -- which is super awesome, as demonstrated in this example:

360 photosphere with glitches from the surrounding WiFi traffic sprinkled in.

My other inspiration came from the anniversary of 9/11, the day that changed the world. I call this piece "The Patriot Act", in reference to the USA PATRIOT Act, which passed 45 days after the 9/11 attacks and enabled much of the surveillance state to grow to where it is today.


I've taken my hobby interest in deep learning to the next level to see if I can make a creative AI that is able to make its own original art inspired by the world around it.

About a month ago, a paper was published regarding an algorithm that is able to represent artistic style. http://arxiv.org/abs/1508.06576
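In that paper, style is represented by correlations between the feature maps of a CNN layer: the Gram matrix G[i][j] = sum over positions k of F[i][k] * F[j][k]. The per-layer style loss compares the Gram matrix G of the generated image with the Gram matrix A of the style image, scaled by 1 / (4 N^2 M^2), where N is the number of feature maps and M the number of positions. A plain-Python sketch of those two formulas (in practice the feature maps come from a network such as VGG and the arithmetic runs on the GPU):

```python
def gram_matrix(features):
    """features: N feature maps, each flattened to M activations.

    Returns the N x N matrix G[i][j] = sum_k features[i][k] * features[j][k].
    """
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features] for fi in features]


def style_layer_loss(generated, style):
    """Per-layer style loss: 1 / (4 N^2 M^2) * sum_ij (G_ij - A_ij)^2."""
    n, m = len(generated), len(generated[0])
    g, a = gram_matrix(generated), gram_matrix(style)
    squared = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return squared / (4 * n ** 2 * m ** 2)


print(gram_matrix([[1, 2], [3, 4]]))  # [[5, 11], [11, 25]]
```

Because the sum runs over all positions, the Gram matrix keeps which features occur together but throws away where they occur, which is why it captures texture and brush strokes rather than layout.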


Soon after the paper was published, the open source community quickly went to work and released neuralart. The art gallery includes entire rooms dedicated to art made with artificial neural networks.


Aussie has been documenting the progress of the art gallery from the start. This is how much we've been able to accomplish in 6 days:


The plan is to have the gallery complete by Thursday, when I'll update you on plans for the art show and give a walk-through of the gallery itself!

-

DEFCON / Deep Dream

08/26/2015 at 01:49

My objective is to synthesize textures with artificial neural networks such as deep dream for virtual / augmented reality assets, because it's cool.

At DEFCON I was showing off AVALON, the anonymous virtual / augmented reality local network in the form of a VR dead drop:


Once connected to the WiFi hotspot, users are automatically redirected to the homepage, where they can begin to explore a portfolio of my works and research in a Virtual Reality gallery setting. This is the 2D front end. I will record a video soon to show how it connects to a 3D virtual reality back end.


The website for AVALON and the VR back-end shall go online in the next couple weeks for demo purposes after I fix some bugs.

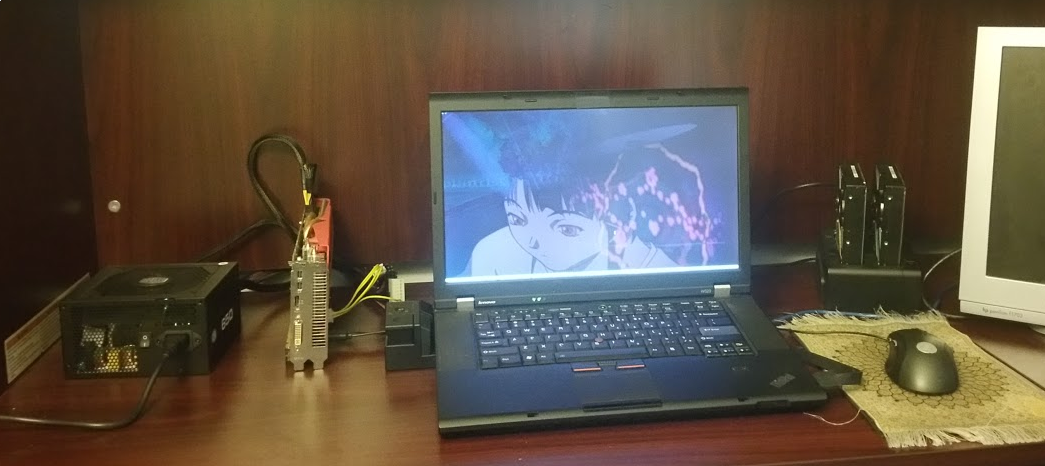

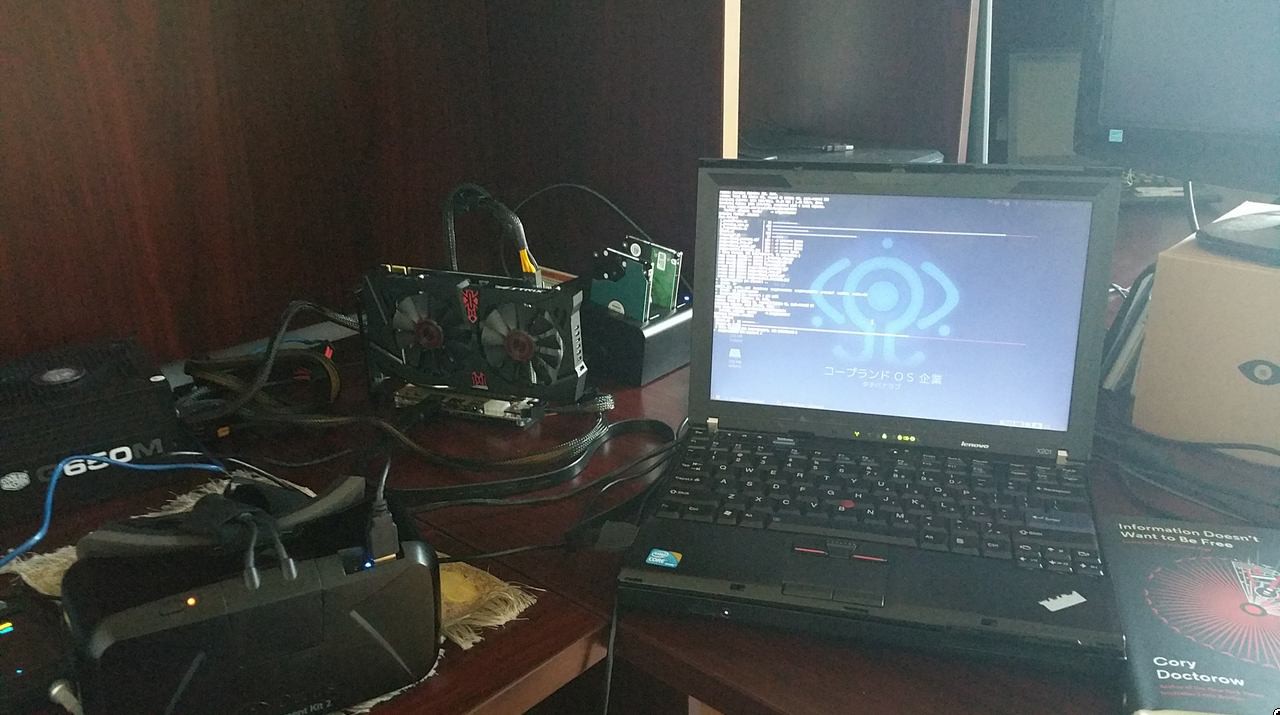

I have made a substantial amount of progress in the past month that I must begin to share. First off, I'd like to continue the last log's experiments with deep dream. I woke up one day and found $180 in my pocket after laundry; it was the perfect amount for a graphics card that gives me a 20x boost in artificial neural network performance. A few hours later, I had returned with a GTX 960, hooked it up as an external GPU to my Thinkpad X201, and configured a fresh copy of Ubuntu 14.04 with the drivers and the deep dream / Oculus Rift VR software installed. Here's a pic of the beast running the DK2 @ 75 FPS and Deep Dream. I have a video to go along with it as well: Thinkpad DeepDream/VR


I've been doing some Deep Dream experiments with DeepDreamVideo

The results have been most interesting when playing with the blend flag, which takes the previous frame as a guide and accepts a floating-point value for how much blending should occur between frames. Everything I created below was made using the Linux command line.
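The blend flag mixes consecutive frames so that hallucinated features can carry over from one frame to the next instead of flickering. A sketch of a linear blend of two frames given as flat lists of pixel values; which frame receives the weight `blend`, and exactly where DeepDreamVideo applies the mix in its pipeline, are assumptions here, so check its source before relying on the direction:

```python
def blend_frames(previous, current, blend):
    """Linear mix of two equal-length pixel lists.

    blend=0 returns `current` unchanged; blend=1 returns `previous`.
    """
    if not 0.0 <= blend <= 1.0:
        raise ValueError("blend must be between 0 and 1")
    return [blend * p + (1.0 - blend) * c for p, c in zip(previous, current)]


# With 0.3, as used for the sequence below, 30% of each pixel comes from the previous frame
mixed = blend_frames([100, 0], [0, 100], 0.3)
```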

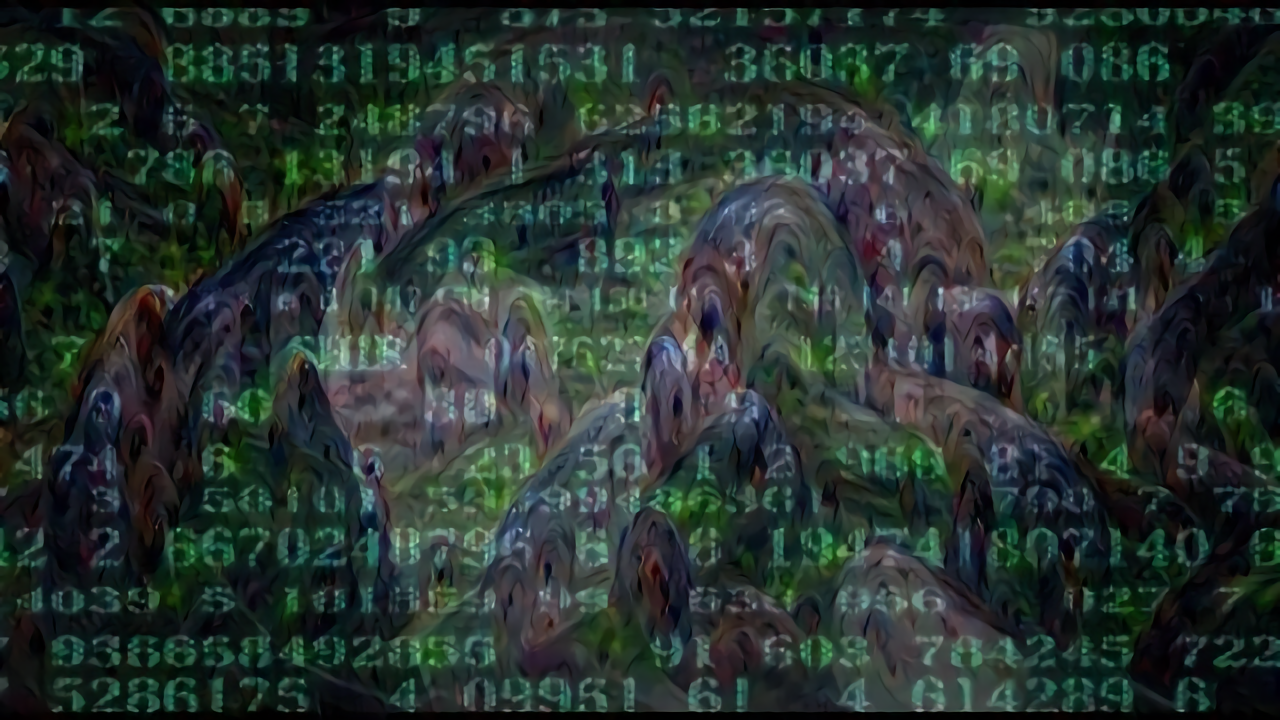

The video I used was the original 1995 Ghost in the Shell intro. I used FFmpeg to extract a frame every X seconds from the video in order to create test strips. When I went to test, I got some unexpectedly pleasing results! In this test, I extracted a frame every 10 seconds.
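Pulling one frame every N seconds can be done with FFmpeg's fps filter (fps=1/N). A small Python wrapper that builds and runs such a command; the input and output file names are placeholders, and this is not necessarily the exact command used for these strips:

```python
import subprocess


def extract_frames_cmd(video, every_seconds, pattern="frame_%04d.png"):
    """FFmpeg command that writes one frame every `every_seconds` seconds."""
    return ["ffmpeg", "-i", video, "-vf", f"fps=1/{every_seconds}", pattern]


def extract_frames(video, every_seconds, pattern="frame_%04d.png"):
    """Run FFmpeg; raises CalledProcessError if it fails."""
    subprocess.run(extract_frames_cmd(video, every_seconds, pattern), check=True)


# e.g. extract_frames("gits_intro.mp4", 10) for one frame every 10 seconds
```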


You can see the difference between them from the raw footage to the dream drip:


I used 0.3 blending and inception_3b/3x3 for this sequence. I really like how it came out.

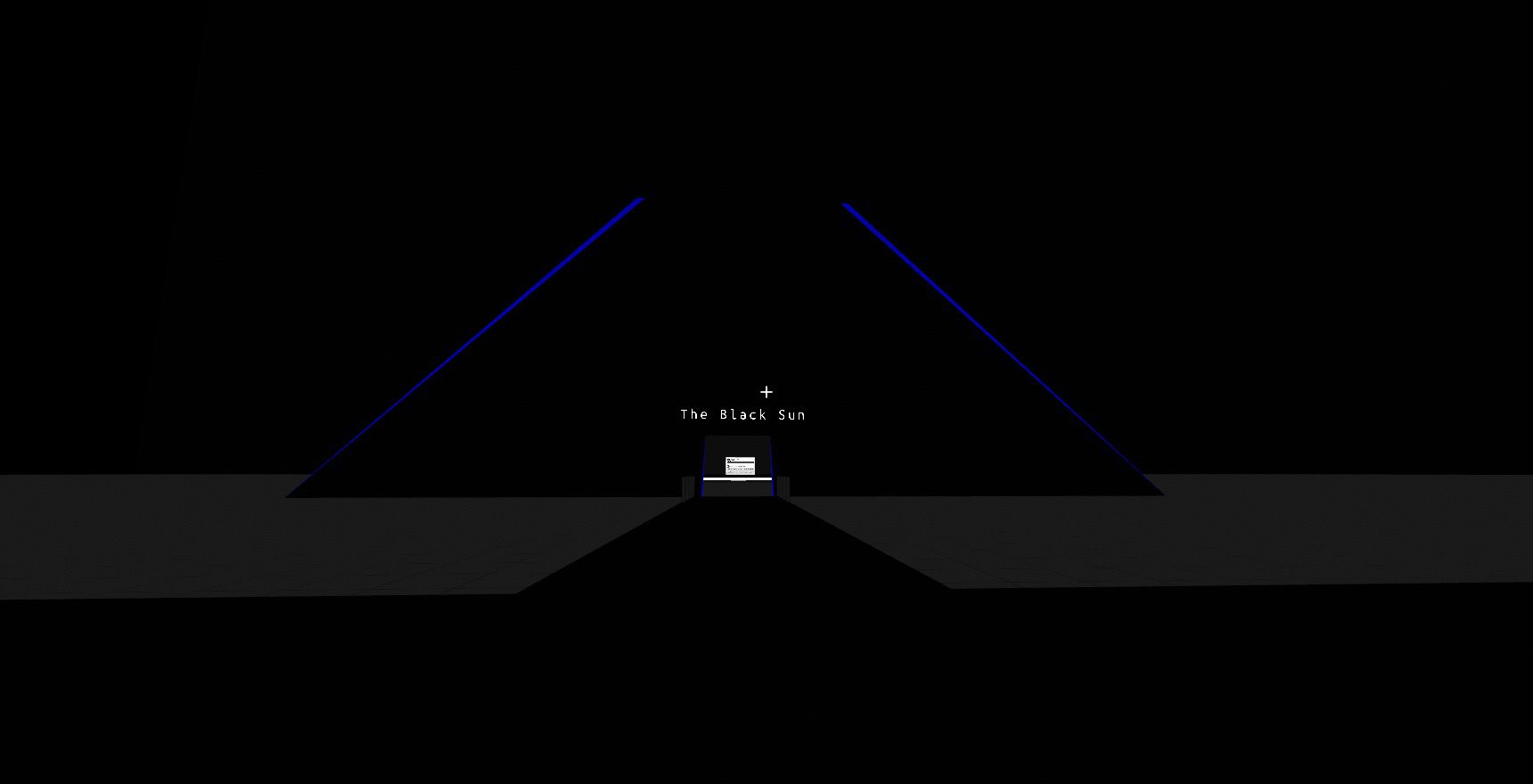

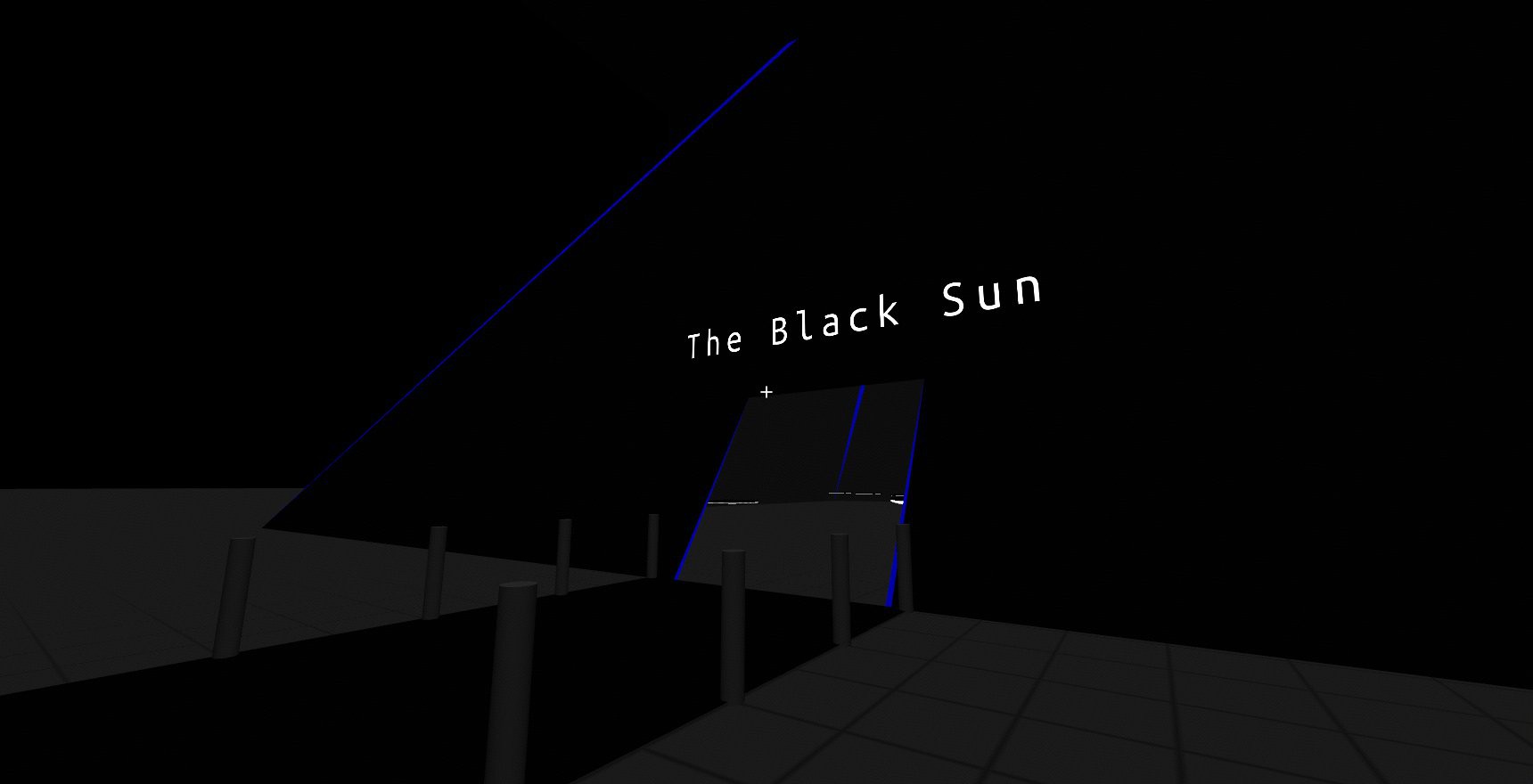

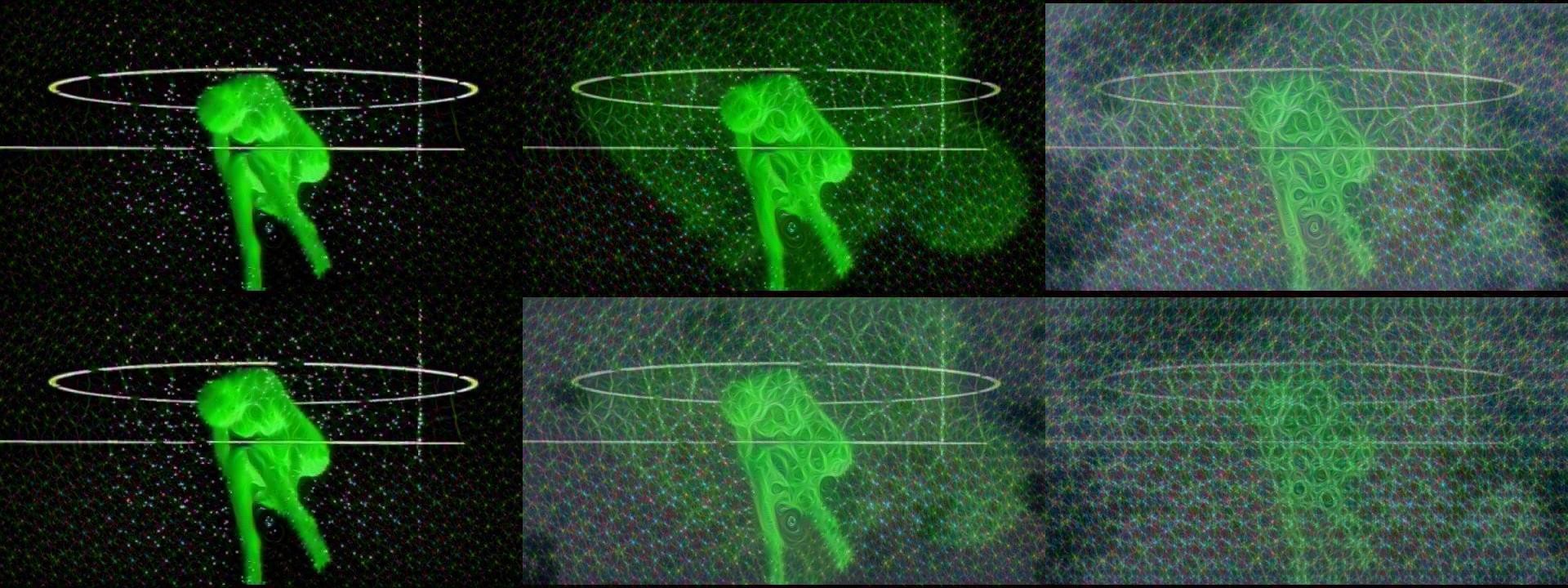


I'm beginning to experiment with textures for 3D models within a virtual reality world. I'd like to texture objects with cymatic skins that react to music. Right now, I have created a simple fading gif just to test out the possibilities. The room I plan to experiment in is the Black Sun:

```bash
# Drain all the colors and create the fading effect
# (-modulate brightness,saturation: brightness 100%..900%, saturation 0)
for i in {10,20,30,40,50,60,70,80,90}; do
    convert out-20.png -modulate "$i"0,0 fade-$i.png
done

# Assemble the faded frames into an animated GIF
convert -delay 10 fade-*.png faded.gif

# Loop the gif like a yo-yo or patrol guard
convert faded.gif -coalesce -duplicate 1,-2-1 -quiet -layers OptimizePlus -loop 0 patrol_fade.gif
```

I think it would be cool if this could update every X hours or react semantically to voice control. So the next time someone says "Pizza", the room will start dreaming about pizza and reflect the changes in the textures of various objects.
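The -duplicate 1,-2-1 step appends one copy of the frames from the second-to-last back to the second, so the animation plays forward and then backward without showing the end frames twice. The resulting frame order, as a small Python sketch:

```python
def patrol_order(n):
    """Frame indices for a yo-yo ("patrol") loop over n frames."""
    # forward 0..n-1, then back from n-2 down to 1 (the ends are not repeated)
    return list(range(n)) + list(range(n - 2, 0, -1))


print(patrol_order(4))  # [0, 1, 2, 3, 2, 1]
```

With the nine fade frames above, the looping GIF ends up with 16 frames.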

Here is the finished Ghost in the Shell Deep Dream animation:

-

Enhance

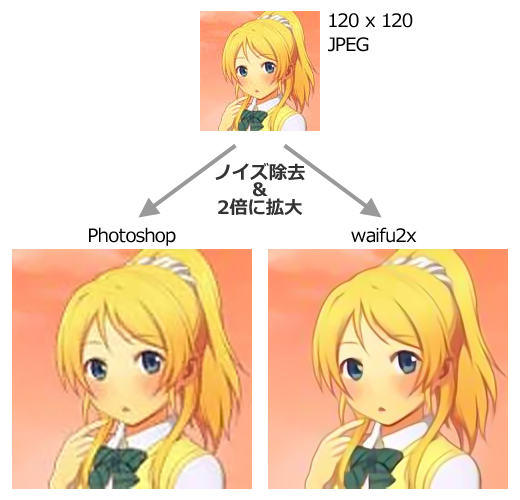

07/11/2015 at 07:32

Neural networks may be used to enhance and restore low resolution images. In this post I explore image super-resolution deep learning in the context of Virtual Reality.

Because I'm waiting to snipe a good deal on a GPU (which runs CNNs 20x faster than a CPU), a friend has blessed me with a remote Arch Linux box with an i5-4670K @ 4GHz and a GTX 770 with CUDA installed. Thanks gb.

In these experiments, I am using deep learning in order to input low resolution images and output high resolution. The code I am using is waifu2x, a neural network model that has been trained on anime style art.


Installing it was relatively straightforward from an Arch system:

```bash
# Update the system
sudo pacman -Syu
# Create directory for all project stuff
mkdir dream; cd $_
# Install neural network framework, compile with CUDA
git clone https://github.com/BVLC/caffe.git
yaourt -S caffe-git
# Follow instructions in README to set this up.
git clone https://github.com/nagadomi/waifu2x.git
```

Arch Linux is not a noob-friendly distro, but I'll provide better instructions when I test on my own box. When ready to test, the command to scale the image will look like this:

```bash
# Noise reduction and 2x upscaling
th waifu2x.lua -m noise_scale -noise_level 2 -i file.png -o out.png
# Backup command in case of error.
th waifu2x.lua -m noise -scale 2 -i file.png -o out.png
```

Original resolution of 256x172 to a final resolution of 4096x2752.

16x Resolution


The convolutional network up-scaling from the original 256x144 to 2048x1152.
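Assuming each waifu2x pass doubles width and height (the 2x mode used above), k passes multiply each side by 2^k and the pixel count by 4^k: 256x172 becomes 4096x2752 after four passes, which is the 16x above. A quick sketch to tabulate such a chain:

```python
def upscale_chain(width, height, passes, factor=2):
    """Sizes after each upscaling pass, starting with the original size."""
    sizes = [(width, height)]
    for _ in range(passes):
        width, height = width * factor, height * factor
        sizes.append((width, height))
    return sizes


for w, h in upscale_chain(256, 144, 4):
    print(f"{w}x{h}")  # 256x144, 512x288, 1024x576, 2048x1152, 4096x2304 (one per line)
```

The same chain with five passes takes the 77x52 test image below to 2464x1664, the 32x enhancement.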


Thumbnails from BigDog Beach'n video magnified 8x resolution.


Benchmark on an Intel Core i5-4670K CPU @ 4GHz with a GeForce GTX 770 (794 frames, 26 seconds long):

- 256x144 -> 512x288: 0.755 s per frame, 10 min total

- 512x288 -> 1024x576: 1.668 s per frame, 22 min total

- 1024x576 -> 2048x1152: 6.150 s per frame, 80 min total

- 2048x1152 -> 4096x2304: 8 s per frame, 94 min total

Here's a 4K (16x resolution) video; links to the videos at the other resolutions are on the Vimeo page.

For the next experiment, I took a tiny 77x52 image to test with. The results were very interesting to see.


- 154x104 492ms

- 308x208 594ms

- 616x416 1.12s

- 1232x832 3.16s

- 2464x1664 10.56s

Because the resolution is higher than most monitors, I am creating a VR world so that the difference can be better understood. It will be online soon and accessible through JanusVR, a 3D browser; more info in the next post.


The image was enhanced 32x. The result tells me that waifu2x can paint the base, while deep dream can fill in the details. ;) The neural network that's been trained on Anime gives a very smooth painted look to it. It's quite beautiful and reminds me of something out of A Scanner Darkly or Waking Life. The neural network restores quality to the photo!

I took this method into the context of VR, quickly mocking up a room that anybody can access via JanusVR by downloading the client here. Once logged in, press Tab to open the address bar, paste the URL, and click the portal to enter.

https://gitlab.com/alusion/paprika/raw/master/dcdream.html

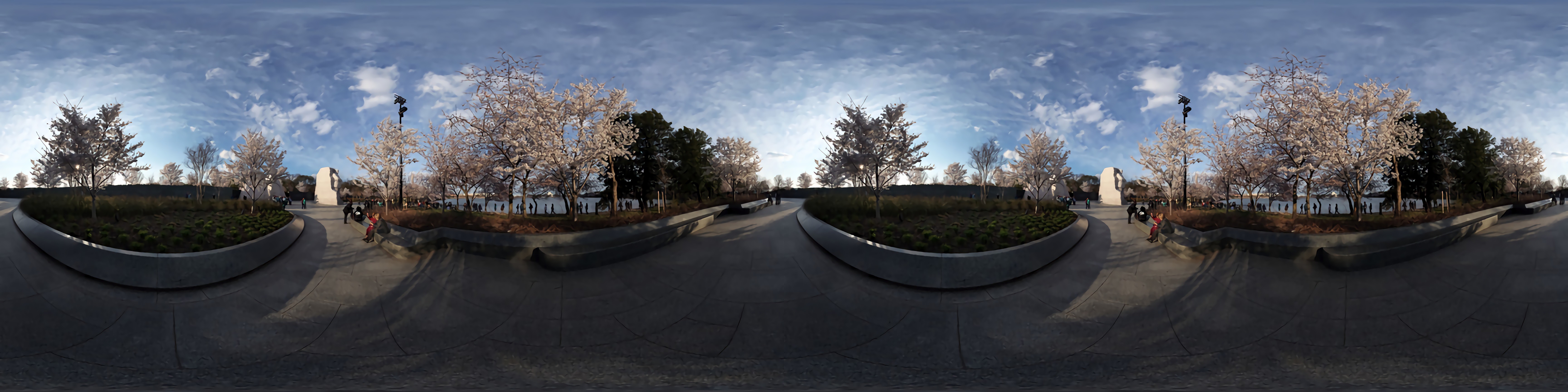

The original photograph is by Jon Brack, a photographer I met in DC who shoots awesome 360 panoramas. For this example I used a 360 image of the MLK memorial in Washington, DC. First, I resized the 360 image to 20% of its original size, 864x432, and fed it into waifu2x, up-scaling it 4x to 3456x1728. Then I converted the image into a side-by-side stereo format so that it can be viewed in 3D via an HMD.

Enhance.

The convolutional neural networks create a beautifully rendered ephemeral world, up-scaling the image exponentially.

It was like remembering a dream.

to be continued

-

Deep Dream

07/07/2015 at 06:03

About a week ago, Google released open source code for the amazing artificial neural network research they put out in June. Neural networks are trained on millions of images, progressively extracting higher-level features of the image until the final output layer comes up with an answer as to what it sees. Loosely like our brains, the network is asked "based on what you've seen or known, what do you think this is?" It is analogous to a person recognising objects in clouds or Rorschach tests.

Now, the network is fed a new input (e.g. me) and tries to recognize this image based on the context of what it already knows. The code encourages the algorithm to generate an image of what it 'thinks' it sees and feeds that back into the input, creating a positive feedback loop with every layer amplifying the biased misinterpretation. If a cloud looks a little bit like a bird, the network will make it look more like a bird, which will make the network recognize the bird even more strongly on the next pass, and so forth.
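That feedback loop is gradient ascent on the input: nudge the image in whichever direction makes a chosen layer respond more strongly, then repeat. The deepdream notebook's default objective, as I understand it, maximizes the squared L2 norm of a layer's activations; here is a toy version with a single linear "neuron" standing in for a CNN layer, just to show why a faint response keeps growing:

```python
def dream(x, w, step=0.1, iterations=5):
    """Toy gradient ascent on the objective 0.5 * (w . x)^2.

    x: the "image" as a list of floats; w: weights of one linear feature detector.
    The gradient with respect to x is (w . x) * w, so each step pushes x further
    toward whatever the detector already responds to.
    """
    responses = []
    for _ in range(iterations):
        r = sum(wi * xi for wi, xi in zip(w, x))
        x = [xi + step * r * wi for xi, wi in zip(x, w)]
        responses.append(sum(wi * xi for wi, xi in zip(w, x)))
    return x, responses


x, responses = dream([0.1, 0.0], [1.0, 0.5])
# each response is about 1.125x the previous one: the faint "bird" gets amplified
```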


You can visit the Inceptionism Gallery for more hi-res images of neural net generated images.

I almost immediately downloaded the deepdream code upon its release, and to my relief it was written in python within an ipython notebook. Holy crap, I was in luck! I was able to get it running the following morning and create my own images that evening! My first test was of Richard Stallman, woah O.o


I made several more, but my main interest is in Virtual Reality implementations. The first tests I did in that direction were to take standard-format 360 photospheres I shot with Google Camera and run them through the network. Here's the original photograph I took:


and here's the output:


I can easily view this in my Rift or GearVR, but I had a few issues with this at first:

- Because the computer running the network is an inexpensive ThinkPad, I was limited to what type of input I fed into the network before my kernel would panic.

- The output was low resolution which would be rather unpleasant to view in VR

- Viewing a static image does not provide a very exciting and interactive experience with a kickass new medium like Virtual Reality.

The first issue is something I would probably be able to handle with an upgrade, which I have been able to provide for my workstation laptop via external GPU adapter:


That is a 7970 connected via PCI express to my W520 Laptop. It needs its own power-supply as well. Had to sell that GPU but I'll be in the market for another card. VR / Neural Network research requires serious performance.

The second issue was the low resolution. I had previously experimented with waifu2x, which uses deep convolutional neural networks to up-scale images. Here's an example I made:


This super-resolution algorithm can be subtle, but it's brilliant, and it made my next test a lot more interesting! Here is the original side by side with the up-scaled dream version:


Here's some detail of the lower resolution original image:

And here's the output from the image super resolution neural net:


The reason it has taken on some cartoonish features is because the network was trained on a dataset consisting of mostly anime (lol), but I find that it makes the results more interesting because I find reality to be rather dull.

Lastly, the third problem I have with these results is that the experience of seeing these images in VR is still not yet exciting. I have brainstormed a few ideas of how to make Deep Dream and VR more interesting:

- Composite the frames into a video, fade in and out between raw image and dream world.

- Implement a magic wand feature (like Photoshop / GIMP) to select a part of the world as input into the neural network and output it back into the image in VR. I found this: Wand is a ctypes-based simple ImageMagick binding for Python.

- Leap Motion hand tracking in VR to cut out the input from the 360 picture and output it back into a trippy object in VR, or composite it back into the picture.

- Take a video and have a very subtle deep dream demo with only certain elements [such as the sky] be fed as input rather than the whole image (same concept as previous two points)

- Create my own data sets by scraping the web for images to train a new model with.

- Set up a Janus Server and track head rotation / player position / gaze. The tracking data will be used to interact with deep dream somehow...

- Screen record the VR session and use unwarpVR ffmpeg scripts to undo distortion and feed input into neural network. More useful when paired with Janus server tracking.

- Record timelapse video with a 360 camera and create deep dream VR content.

- Experiment with Augmented Reality
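The magic wand idea from the list above is a flood fill: start from a clicked pixel and grow the selection through neighbouring pixels whose colour stays within a tolerance of the seed. A sketch on a 2D list of RGB tuples (4-connected, per-channel tolerance; Photoshop and GIMP offer more options than this), whose selected positions could then be cut out and fed to the network:

```python
from collections import deque


def magic_wand(pixels, start, tolerance):
    """Select the connected region of similar colour around `start`.

    pixels: 2D list (rows) of (r, g, b) tuples; start: (row, col);
    tolerance: maximum per-channel difference from the seed colour.
    Returns a set of (row, col) positions.
    """
    rows, cols = len(pixels), len(pixels[0])
    seed = pixels[start[0]][start[1]]
    selected = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in selected:
                if all(abs(a - b) <= tolerance for a, b in zip(pixels[nr][nc], seed)):
                    selected.add((nr, nc))
                    queue.append((nr, nc))
    return selected


SKY, GROUND = (100, 150, 250), (50, 120, 40)
image = [[SKY, SKY, GROUND], [GROUND, GROUND, GROUND], [GROUND, GROUND, SKY]]
print(sorted(magic_wand(image, (0, 0), 10)))  # [(0, 0), (0, 1)]: the lone sky pixel is not connected
```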

I'll have a VR room online later today testing various experiments out. To be continued...

-

X11 Application Migration pt. 1

06/06/2015 at 03:44

I needed more than just an SSH tunnel from within Janus. The ability to migrate remote X applications into VR gives a metaverse developer much more power to access and create content without having to leave the client. I strongly believe that, when done right, this will be the next great leap in computing. The office can exist anywhere: it doesn't carry the limitations of physical hardware and can be accessed with a Virtual Reality headset, which multiplies the number of displays you can interact with compared to the single monitor on current laptops / tablets. You have better privacy as well, since people can't shoulder-surf you when the screen is directly in front of your eyes. I think that you, the reader, will understand better once you see a functional computing environment in Virtual Reality.

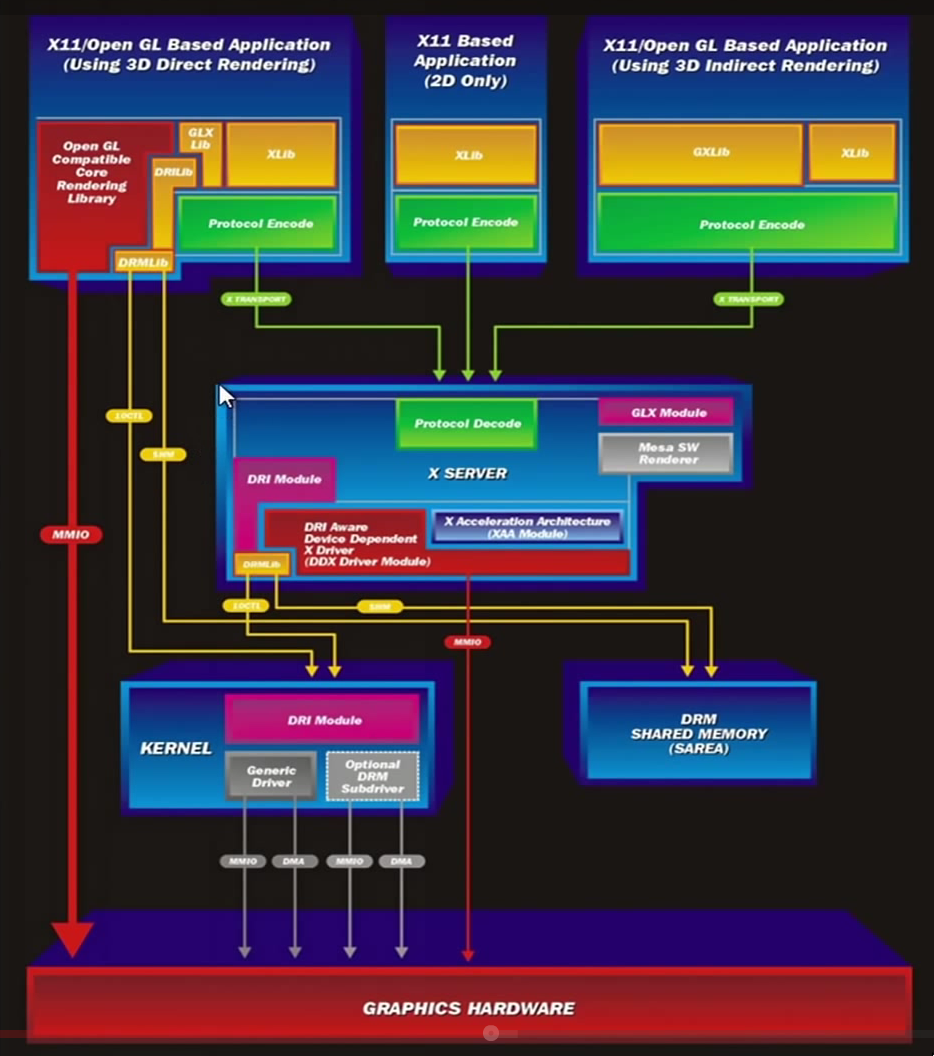


I knew that accessing VNC through a web browser is nothing new, and I wanted to do things differently. I began looking at methods of transporting individual X applications, learning a lot about how the X server and WebSockets work in the process. The X server code is more complicated than you might think, which is bad for security.


I understand that simpler and more maintainable alternatives are in the works (Wayland compositors), but my point is that the way we interact in VR will move far beyond today's 2D interfaces and keyboard/mouse. JanusVR already supports Leap Motion, and later this fall we will be able to walk around 3D environments with technology from Valve such as Lighthouse. Tomorrow we will interact with computers via voice, touch, sight, and movement. A good example is Blender, a free and open source 3D modeling program: today it is too difficult to use for simple operations. I'm looking forward to programs such as Tilt Brush that use Virtual Reality to create 3D content. Anyway, PoC || GTFO.

I began to look into WebSockify, a WebSocket-to-TCP proxy/bridge that allows a browser to connect to any application, server, or service. WebSocket traffic is translated to normal socket traffic and forwarded between the client and the target in both directions. Another useful piece is Xvfb (X Virtual Frame Buffer), an X server that can run on machines with no display hardware and no physical input devices. It emulates a dumb framebuffer using virtual memory, so the machine it runs on needs no screen or input device, only a network layer. An alternative to Xvfb is Xdummy, a script that uses LD_PRELOAD to run a stock X server with the "dummy" video driver. Next, I needed a way to display X programs in a web browser so that I could view them inside Janus...
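To make the two pieces concrete, here is a minimal sketch of combining them by hand: a headless Xvfb display, an X program drawn onto it, and websockify relaying a browser's WebSocket to a plain TCP port. The display number :99, the 1280x800x24 screen, WebSocket port 6080, and a TCP service (for example a VNC server) already listening on localhost:5900 are illustrative assumptions, not part of my setup. With DRY_RUN=1 the functions only print what they would run.

```shell
#!/usr/bin/env bash
# Sketch: headless X display + WebSocket-to-TCP bridge.
# Assumptions (illustrative): display :99, a 1280x800x24 screen,
# WebSocket port 6080, and some TCP service (e.g. a VNC server)
# already listening on localhost:5900.

# Run a command in the background, or just print it when DRY_RUN=1.
run_bg() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$* &"
  else
    "$@" &
  fi
}

# Xvfb renders into virtual memory: no GPU, monitor, or input devices needed.
start_headless_display() {
  run_bg Xvfb "${1:-:99}" -screen 0 1280x800x24
}

# Launch an X program (e.g. xterm) against the headless display.
launch_on_display() {
  local display="$1"
  shift
  run_bg env DISPLAY="$display" "$@"
}

# websockify accepts WebSocket connections on the first port and relays
# the bytes, in both directions, to plain TCP at host:port.
bridge_websocket() {
  run_bg websockify "${1:-6080}" "${2:-localhost:5900}"
}
```

A typical sequence would be `start_headless_display :99`, then `launch_on_display :99 xterm`, then `bridge_websocket 6080 localhost:5900`.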

Xpra (X Persistent Remote Applications) is free and open source software that allows you to run X11 programs on a remote host, direct their display to your local machine, and display existing desktop sessions remotely. You can disconnect from these programs and reconnect from another machine without losing any state, and you get remote access to individual programs. Unlike VNC, Xpra is rootless: applications forwarded by Xpra appear on your desktop as normal windows managed by your own window manager. Think of it as 'screen' for X11. It connects to an Xvfb display server as a compositing window manager, takes the window pixels, and transports them over a network connection to the Xpra client.

TL;DR: Xpra is 'screen for X': allows you to run X programs, usually on a remote host and direct their display to your local machine. Xpra works by connecting to an Xvfb server as a compositing window manager.
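The "screen for X" comparison is easiest to see in an Xpra session's life cycle: start it once on the server, attach from one machine, drop the connection, and attach again from another. This is a sketch under assumptions: display :100, a hypothetical server reachable over SSH as user@host, and the `ssh:HOST:DISPLAY` attach syntax of the 0.15-era releases (check `xpra --help` for your version). With DRY_RUN=1 the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch of an Xpra session's life cycle ("screen for X").
# Assumptions: display :100, a hypothetical server reachable over SSH as
# user@host, and the "ssh:HOST:DISPLAY" attach syntax of 0.15-era xpra.

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Server: create a persistent session with xterm inside it.
session_start() { run xpra start "${1:-:100}" --start-child=xterm; }

# Client: attach over SSH. Closing the client only disconnects it;
# the session and its windows keep running on the server.
session_attach() { run xpra attach "ssh:$1:${2:-100}"; }

# Server: list the live sessions, or shut one down for good.
session_list() { run xpra list; }
session_stop() { run xpra stop "${1:-:100}"; }
```

After `session_start :100` on the server, `session_attach user@host 100` can be run from a laptop, closed, and run again from a desktop: the xterm is still there with its state intact.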

During my initial tests I learned that the Ubuntu repository carried an older version of Xpra, so the first step is to upgrade to the latest version. Winswitch is a front end to Xpra, and the latest Xpra (0.15.0) is bundled with it. The download instructions are here; for Ubuntu 14.04:

```bash
# These instructions must be run as root
sudo su -
# Import the packager's key
curl http://winswitch.org/gpg.asc | apt-key add -
# Add the repo and install winswitch (comes with the latest version of xpra)
echo "deb http://winswitch.org trusty main" > /etc/apt/sources.list.d/winswitch.list
apt-get install software-properties-common >& /dev/null
add-apt-repository universe >& /dev/null
apt-get update
apt-get install winswitch
# These are also needed for a server with HTML5 support
apt-get install xvfb websockify
```

You can also download a bleeding-edge package from here or stable builds from here.

Now, to test that it works, we will try forwarding xterm into the browser window.

```bash
xpra start --bind-tcp=0.0.0.0:10000 --html=on --start-child=xterm
```

Point a browser (or log in through Janus) at http://localhost:10000; the xterm should now appear in the browser window.

Log in via 127.0.0.1 on port 10000, and the xterm appears on a WebSurface asset within Janus. Single-application forwarding works! Xpra is successfully forwarding individual X11 programs to my web browser and into Janus. I can potentially distribute programs individually to people via different ports and display servers. Another idea is to use Docker containers to distribute applications into VR. Keep in mind that the applications don't need to be mounted on a wall: they can be held in your hands like a tablet or displayed transparently like an AR HUD. The WebSurface is an Asset that can be applied to any kind of 3D object. The desktop is no longer restricted to a single display or monitor but can be dispersed throughout a virtual environment. It is sort of like the difference between a television and a projector: your applications are free to be projected onto any surface in virtual reality! This is the beginning of a 3D window manager built inside a Metaverse application.
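Distributing programs "via different ports and display servers" could look roughly like this: each application gets its own Xpra display and its own HTML5 port, so each can be mapped onto a separate WebSurface. The numbering scheme (displays from :101, ports from 10001, in the order the apps are given) is a hypothetical choice for illustration. `xpra start` daemonizes by default, so the loop moves on to the next application; with DRY_RUN=1 the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: one Xpra display + one HTML5 port per X application.
# Assumption (hypothetical scheme): displays start at :101 and ports at
# 10001, assigned in the order the applications are given.

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

start_apps() {
  local i=1 app
  for app in "$@"; do
    # Each app is isolated on its own display and reachable on its own port.
    run xpra start ":$((100 + i))" \
      --bind-tcp="0.0.0.0:$((10000 + i))" --html=on --start-child="$app"
    i=$((i + 1))
  done
}
```

With `start_apps xterm gimp`, one WebSurface would point at http://localhost:10001 (xterm) and another at http://localhost:10002 (gimp).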

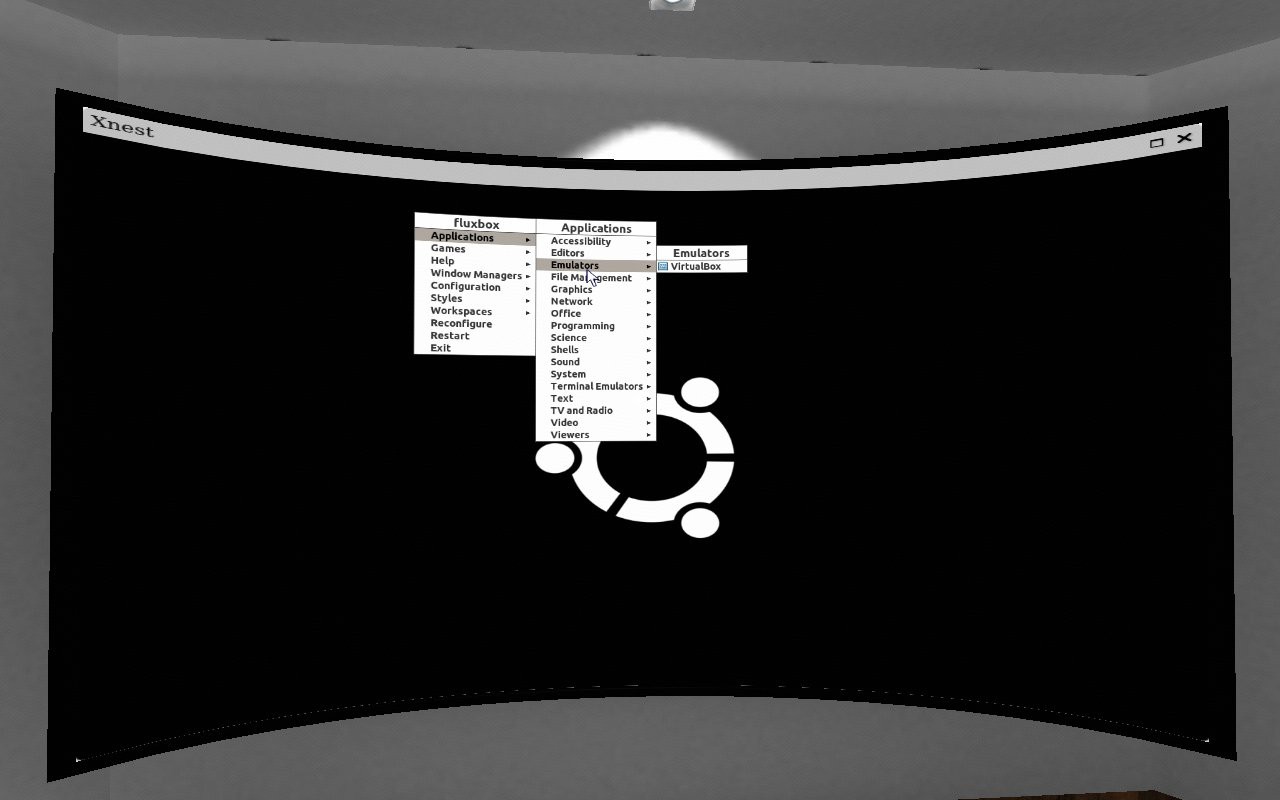

For my next test, I used Xnest, a nested X server that can run a virtual desktop, even one from another computer, inside a window. In separate terminals, my commands look like this:

```bash
# Start the Xpra server on :100 with a nested X server (Xnest) on :200 as its child
# (-ac disables access control on :200, so keep it local)
xpra start --bind-tcp=0.0.0.0:10000 --html=on --start-child="Xnest :200 -ac -geometry 1280x800+24" :100

# From a separate terminal, start the awesome window manager on :200
DISPLAY=:200 awesome &

# Tail the Xpra log file
tail -f /home/user/.xpra/:100.log
```

Now you can point the WebSurface URL at http://localhost:10000.

Not only does it work, but regular X11 applications can easily be launched from within it too!


Tabbed browsing is possible because now you are interacting with YOUR web browser. It has all of your bookmarks and plugins loaded. This is an extension of YOUR desktop environment into JanusVR. Making rooms in Janus is much easier when you have access to your desktop; there's less need to exit the client.

The next step I am working on is to free the applications from being clumped together on a single plane. Imagine having a file browser in your hands or the image viewer on the wall of your room. The desktop environment expands beyond your screen and into the world around you as part of the space. Individual X11 applications can be represented as virtual 3D objects using this method. Combined with low-latency SSH tunneling, this will initially enable developers to do more from inside Janus. My goal goes much further, however: I aim to decentralize, demonetize, and distribute functional computing environments within collaborative Metaverse spaces. When people hunger for more than gaming or consuming media on their VR devices, I'll be there to give them productive multi-user environments that let them work in ways never before possible.

To be continued...

-

Inspiration for doing this

06/02/2015 at 04:52 • 0 comments

I'd like to dedicate a project log to what exactly inspired me to do what I am doing right now. The first and most important question is: what is the metaverse?


The Metaverse is a collective virtual shared space, created by the convergence of virtually enhanced physical reality and physically persistent virtual space, including the sum of all virtual worlds, augmented reality, and the internet.

The Metaverse, a phrase coined by Stephenson as a successor to the Internet, constitutes Stephenson's vision of how a virtual reality-based Internet might evolve in the near future.

. . . the Metaverse describes a future internet of persistent, shared, 3D virtual spaces linked into a perceived virtual universe, but common standards, interfaces, and communication protocols between and among virtual environment systems are still in development.

Wikipedia

There will be 360 capture devices coming out soon from GoPro, Samsung, and Google. The hardware design for Google's camera array will be open, so filmmakers can use off-the-shelf cameras coupled with Google's stitching software to create very high quality 360 captures for VR. I've researched every 360 camera out there and would say that Google puts out the best solution for those looking to get into immersive filmmaking.

![http://www.popsci.com/sites/popsci.com/files/styles/large_1x_/public/screen_shot_2015-05-28_at_6.20.28_pm.png?itok=K-s4kioX]()

A person can 3D print the shell and use their own cameras or buy a 360 capture gimbal from GoPro for $3000:

![http://cnet2.cbsistatic.com/hub/i/r/2015/05/28/b2125eec-445a-4ab0-8c5d-5d707bdd5192/resize/770x578/268f12ff53034d4965fc3e1381c9d133/code2015201505271723048185-l.jpg]()

William Gibson's books heavily influenced me growing up. The Sprawl Trilogy's influence on modern culture is still visible today in films such as The Matrix and Ghost in the Shell, which were greatly inspired by the themes of these '80s cyberpunk classics. Some of the technology detailed in these stories is worth mentioning, such as SimStim:

SimStim is literally Simulated Stimulation, and is a logical parallel to VR. Rather than experiencing a full VR or AR experience in which your mind is placed inside a metaverse matrix, a completely simulated reality; or the joys of physical reality -meatspace- with additional VR components grafted on, SimStim opens up a third possibility.

Viewing the world through another person's eyes, hearing with their ears, feeling with their skin, smelling with their nose. Full sensory stimulation of another person, total passivity.

Then he keyed the new switch.

The abrupt jolt into other flesh. Matrix gone, a wave of sound and colour…She was moving through a crowded street, past stalls vending discount software, prices feltpenned on sheets of plastic, fragments of music from countless speakers. Smells of urine, free monomers, perfume, patties of frying krill. For a few frightened seconds he fought helplessly to control her body. Then he willed himself into passivity, became the passenger behind her eyes.

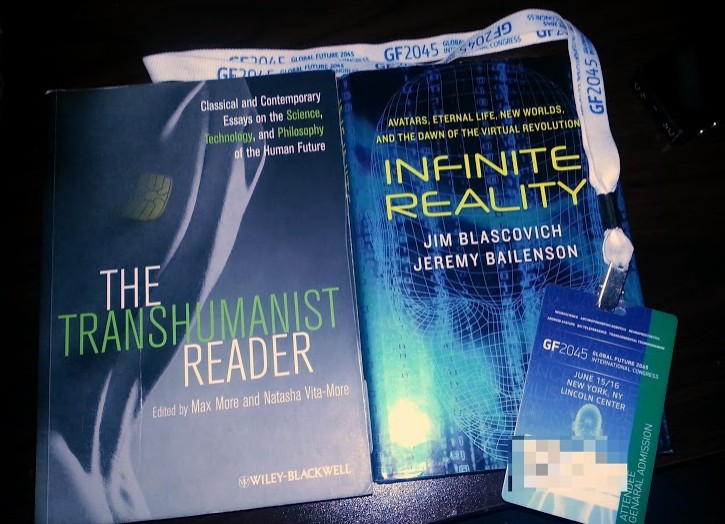

William Gibson's Neuromancer, page 56

The evolution of the web will bring this global brain with amazing interconnectedness: my humble vision is to push humanity forward so that we can tap into it and transcend as a species. It was in 2013 that I attended the GF2045 transhumanism conference in New York City.


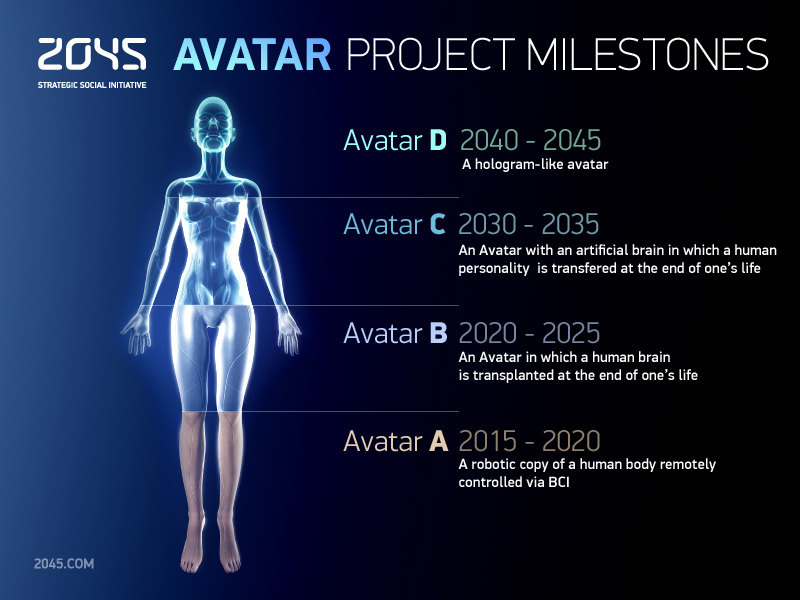

It greatly motivated me to expand my long-term thinking, in case I experience the singularity in my lifetime. I got to hear about the science and tech from some of the most brilliant minds, but one project stood out for me the most, and that is project AVATAR:


This is where I saw myself being able to contribute. I had an Oculus Rift headset at the time and knew immediately that it would play a huge role in project AVATAR's development. Whether through Deep Learning [ http://deepmind.com/ ] algorithms applied to live or recorded 360 captures from the devices, or through the fine-grained data collected from human interaction in virtual and augmented environments, I was ready to apply myself in this direction to find out. However, later that year Bitcoin started taking off like crazy, and for nearly a year my focus was split by the cryptocurrency boom that followed. As 2015 arrived, I made a New Year's resolution to focus on one thing this time and get it right: Virtual Reality. It is about halfway through the year now, and it seems that every opportunity I have turned down yielded a bigger one right around the corner. My aspirations are so huge they sometimes frighten me... but at the same time I have gone to great pains to design a system that can scale exponentially, be distributed on cheap hardware, be decentralized, and respect the user's freedom by default. I've already made prototypes that can one day lead to a turnkey revolution once consumer head-mounted displays get into millions of consumers' hands, which is only a matter of months away.


to be continued...

-

General Update

05/29/2015 at 02:55 • 0 comments

It's been a while since my last project log, and I've made a ton of breakthroughs for revision 1 whose potential impact on the world has shaken me to my core. First of all, I've created a new front end for AVALON using darkengine.


Because the page will be loaded quite often, I've kept it very minimalist. It is also nice that darkengine ships its own docker-compose configuration for easy setup (although Docker officially supports only 64-bit systems, and ARM support is very limited). And since the web server is hosted on a Raspberry Pi, I wanted to keep its footprint as small as possible. I was unhappy with the upload mechanism because it involved iframes and did not seem a very elegant solution. Droopy is a Python script that runs a small web server for easily uploading files into the directory of one's choosing. I could either add a database back end to organize everything or run multiple instances. It would also be straightforward to Dockerize the program or include it with darkengine.

I'm sure there is some nice HTML5 file-drop code out there as well. I want it to be dead simple for a person to bring content from a device into VR without touching any FireBoxRoom code, so that they can focus on designing and creating rooms from inside Janus. There were a number of steps involved in getting darkengine running on the Raspberry Pi:

- Update/Upgrade and install software-properties-common

- Install nginx

  - sudo add-apt-repository -y ppa:nginx/stable

  - apt-get update; apt-get install nginx

- rm -rf /var/lib/apt/lists/*

- install the PHP packages (php5-fpm through php5-redis)

- rsync config files into /etc/nginx/ and /etc/php5/

- map the hostname php to 127.0.0.1 in /etc/hosts
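The manual Raspberry Pi steps above can be collected into one script. This is a sketch under assumptions, not my exact setup: a Debian-based system where the nginx PPA is usable, the packages php5-fpm and php5-redis (my reading of the PHP step), and the nginx/PHP config files kept in a hypothetical ./config directory. I moved the apt list cleanup to the very end, since deleting /var/lib/apt/lists/* before installing PHP would leave apt without package lists. With DRY_RUN=1 it only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch of the manual darkengine setup on a Raspberry Pi.
# Assumptions: Debian-based OS, packages php5-fpm and php5-redis, and
# nginx/php5 config files in a hypothetical ./config directory.
set -eu

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

setup_darkengine() {
  local config_dir="${1:-./config}"
  run sudo apt-get update
  run sudo apt-get -y upgrade
  run sudo apt-get install -y software-properties-common
  run sudo add-apt-repository -y ppa:nginx/stable
  run sudo apt-get update
  run sudo apt-get install -y nginx php5-fpm php5-redis
  # Copy the site and PHP-FPM configuration into place.
  run sudo rsync -a "$config_dir/nginx/" /etc/nginx/
  run sudo rsync -a "$config_dir/php5/" /etc/php5/
  # Presumably the nginx config reaches PHP-FPM via the hostname "php"
  # (as in the docker-compose setup); map it to this machine.
  run sudo sh -c 'echo "127.0.0.1 php" >> /etc/hosts'
  # Clean up apt's package lists last; removing them earlier breaks later installs.
  run sudo sh -c 'rm -rf /var/lib/apt/lists/*'
}
```

Running `DRY_RUN=1 setup_darkengine ./config` first shows the full command list before anything touches the system.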

On a system with Docker, it's easiest to use Docker Compose:

```bash
# Install docker-compose and make it executable
curl -L https://github.com/docker/compose/releases/download/1.2.0/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose
chmod +x /usr/local/bin/docker-compose
# Run in the directory where docker-compose.yml is
docker-compose build && docker-compose up -d
# Check that it's running
docker ps
```

I found some inspiration for a FireBoxRoom I want to create that looks straight out of Burning Chrome:


Another important development was discovering IPFS while investigating large-scale distributed systems, latency, and the general infrastructure involved with the Metaverse.

The InterPlanetary File System (IPFS) is a peer-to-peer distributed file system that seeks to connect all computing devices with the same system of files. In some ways, IPFS is similar to the Web, but IPFS could be seen as a single BitTorrent swarm, exchanging objects within one Git repository. In other words, IPFS provides a high throughput content-addressed block storage model, with content-addressed hyperlinks. This forms a generalized Merkle DAG, a data structure upon which one can build versioned file systems, blockchains, and even a Permanent Web. IPFS combines a distributed hashtable, an incentivized block exchange, and a self-certifying namespace. IPFS has no single point of failure, and nodes do not need to trust each other.

You can read the IPFS white paper here. Here are some key points:

- Peer-to-Peer distributed file system

- A single BitTorrent swarm exchanging objects within one Git repo

- Data structure upon which one can build content-addressed + versioned filesystems, block-chains, even a permanent web.

- Combines distributed hash table, incentivized block exchange, self-certifying namespace.

- No single point of failure

- Nodes don't have to trust each other.
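To make "content-addressed" and "Merkle DAG" concrete, here is a toy sketch in plain shell with coreutils: a file is split into fixed-size chunks, each chunk is named by its SHA-256 hash, and the file is named by the hash of its ordered chunk hashes (a one-level Merkle tree). This is not IPFS's actual format, which wraps hashes in multihash-encoded CIDs and uses its own chunking and DAG layout; the 256-byte chunk size is an arbitrary assumption. It only shows the core property that identical content always gets the same name and any change produces a different one.

```shell
#!/usr/bin/env bash
# Toy content addressing with a one-level Merkle tree (NOT the real IPFS format).
# A file is split into fixed-size chunks, each chunk is named by its SHA-256,
# and the file is named by the SHA-256 of its ordered list of chunk hashes.
set -eu

CHUNK_SIZE="${CHUNK_SIZE:-256}"   # bytes per chunk; arbitrary for this sketch

merkle_root() {
  local file="$1" tmp root
  if [ ! -s "$file" ]; then
    echo "merkle_root: '$file' is missing or empty" >&2
    return 1
  fi
  tmp="$(mktemp -d)"
  # chunk_aa, chunk_ab, ... sort in the same order as the file's bytes.
  split -b "$CHUNK_SIZE" "$file" "$tmp/chunk_"
  # Leaf hashes, one per line, then the root hash over that list.
  root="$(for c in "$tmp"/chunk_*; do sha256sum "$c" | cut -d' ' -f1; done | sha256sum | cut -d' ' -f1)"
  rm -rf "$tmp"
  echo "$root"
}
```

Because the name is derived from the bytes themselves, a peer that fetches a chunk by its hash can verify it without trusting the sender.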

As a hypermedia distribution protocol, this seems a very promising solution for heavily mediated, distributed Virtual Reality networks.

Speaking of media distribution, we also had one of the best 360 live-streams yet from inside a movie set!


Standing outside of the 360 Video Sphere.

I have also had a recent breakthrough with mobile implementations of AVALON that carries huge implications and keeps me up at night.

I'm experimenting with a decentralized cryptocoin marketplace implementation in VR using BitcoinJS and OpenBazaar.

Going beyond virtual reality, I have also been polishing code to smooth the transition from a 2D web page into a 3D one, so that when you start the client you are automatically transported into a super cool Virtual Reality room. This technology transforms WiFi networks into a means of transporting the soul and devices into a pocket universe. These devices will light up like a star in a lone black-sky desert, forming archipelagos as people mesh together ad-hoc networks into constellations. I'm serious about creating a metaverse and have made incredible progress on its infrastructure.

I'm at the limit of what a single person can do and really need to put together a business plan. This is why my updates are less frequent: I am focused on obtaining the funding required for a lab and additional programmers. Money will help move the project along more quickly so that I can focus on creating content and avatars, just in time for the consumer release that is a matter of months away.

Oh yeah, one more thing...


Stay tuned to find out what this might be ;)

Metaverse Lab

Experiments with Decentralized VR/AR Infrastructure, Neural Networks, and 3D Internet.

alusion
