-

Minor Update

03/24/2016 at 15:40

In order to reduce friction and help with spacing, I ordered and installed oil-impregnated bronze bushings for the sprockets; however, they have not fixed the problem. My current working theory is that the sprocket teeth are not releasing from the chain, so they get jammed in the chain links and pulled backwards, royally messing up the drive train and preventing forward movement.

The current fix (just to get the friggin' robot to move) is to set the sprockets aside for now (I think they are the best solution, but sometimes excellence is the enemy of good enough) and use some oversized 3" washers, along with the spacers I previously purchased, to keep the chain captive. I've run a simulation, and with two washers on each side, the maximum deflection under a 100 lbf load on the area where the chain is contained is 0.01".

That is good enough. I also managed to bend both the original rod and the new one I ordered with the bearings.

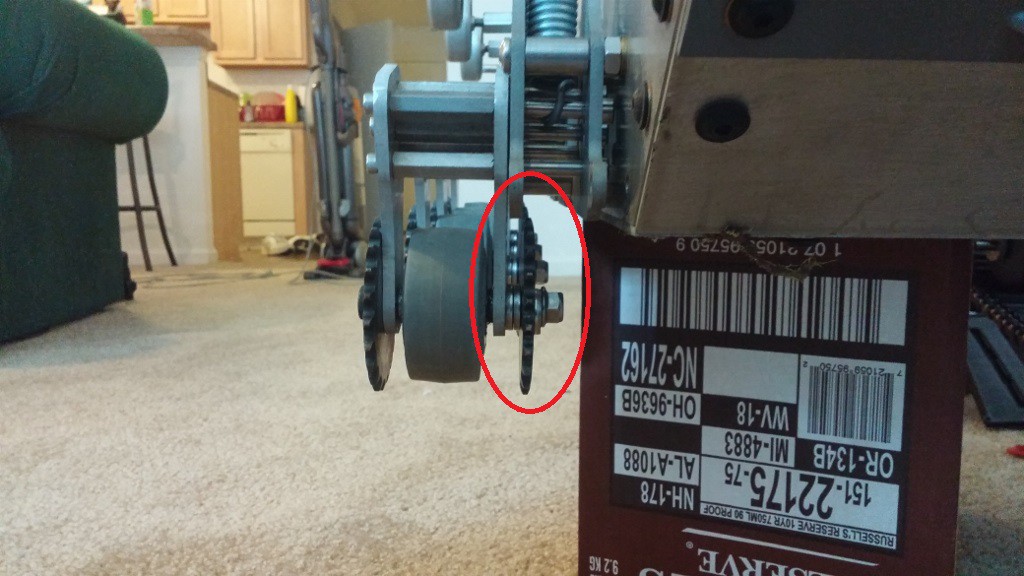

Here is the mockup of the 3" washer on the system.

The washers came in fairly quickly, so I've started on the installation. I also laser-cut some acetal copolymer pieces, mainly because they look cool and help protect the aluminium from getting scratched.

That is all for now! Hopefully I will have time this weekend to replace the bad output shaft on the left gearbox, and finish attaching the washers to the wheels, so I can get this robot rolling again!

-

Updates! Mechanical, software, and more!

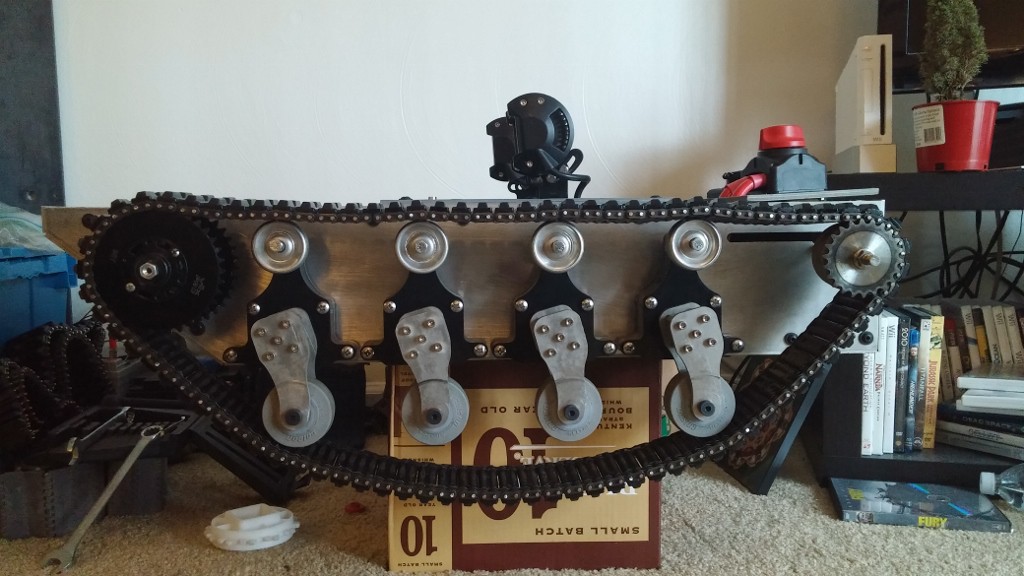

03/05/2016 at 16:51

Sorry for the long bout of silence. Between school and a mishap with the ordering department, I only received all the mechanical bits required to mount the new treads within the last week. However, there are still some mechanical details to finalize and some bugs to hammer out.

(Sorry for the potato-quality photos; I took these on my phone before I left for spring break.)

First on the list:

Sprockets on the wheel modules are creating some problems. Basically, for the suspension system to work, the sprocket on the inside needs to be offset enough to avoid hitting parts of the suspension system.


As you can see, the sprockets had to be offset to avoid hitting the steel plate. However, the sprocket on the outside of the system now has no washer, so it grinds against the aluminium and locks up.


You can see the marks on the wheel module above.

Basically, I just need to play with the spacing and spacers so that the sprockets can rotate without grinding but are still captive and rigid enough that the treads don't slide off. I think laser-cut acetal resin washers/spacers are probably the best bet. I've already used that technique to reduce friction on the suspension system, as you can see above and below.


The acetal resin dramatically reduced friction and binding, and combined with the new oil-impregnated washers, I hope it will provide a longer service life before the washers wear out. I think doing something similar on the outside of the wheel module, combined with oil-impregnated washers, would fix the sprocket spacing and prevent the sprockets from binding and locking up the drive train.

Second: I need to replace some parts of the drive train. Sadly, while taking apart the drive train, I broke a standoff.

Oops. So the gearbox output shaft needs to be replaced. That is fairly easy to do, just a bit of a pain, as the whole front of the robot needs to come off to get the motor and gearbox out.

However, overall I am very happy with the new tracks and cannot wait to get the system moving. Every part I need to get the system functioning arrived in the mail before spring break, so hopefully I can get the platform moving before the 19th.

Software:

I've continued working on the monocular camera obstacle avoidance, and overall it is going pretty well. The last big challenge is eliminating the latency caused by the video frame buffer in OpenCV. Unfortunately, the OpenCV video capture property CV_CAP_PROP_BUFFERSIZE isn't working with the Logitech C920 on the Odroid, so another solution is needed to ensure the frame being processed is as close to real time as possible.
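One common workaround, sketched here under assumptions, is to drain the capture buffer before processing: OpenCV's `grab()` advances to the next frame without decoding it, and `retrieve()` decodes only the last grabbed frame. The helper `read_latest`, the `FakeCapture` stand-in, and the default `flush=4` (an assumed buffer depth, not a measured C920 value) are hypothetical and not from the project code. A background thread that reads continuously and keeps only the newest frame is another option.

```python
def read_latest(cap, flush=4):
    """Skip up to `flush` buffered frames and decode only the newest one.

    `cap` is any object with OpenCV-style grab()/retrieve() methods, such as
    cv2.VideoCapture. `flush` is an assumed buffer depth; tune it per camera.
    Returns (ok, frame) like VideoCapture.read().
    """
    grabbed = False
    for _ in range(flush):
        if not cap.grab():      # grab() fetches a frame without decoding it
            break
        grabbed = True
    if not grabbed:
        return False, None
    return cap.retrieve()       # decode only the most recently grabbed frame


class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture with a pre-filled buffer."""

    def __init__(self, frames):
        self.buffer = list(frames)
        self.current = None

    def grab(self):
        if not self.buffer:
            return False
        self.current = self.buffer.pop(0)
        return True

    def retrieve(self):
        return self.current is not None, self.current


cap = FakeCapture(range(10))
print(read_latest(cap))   # (True, 3): frames 0-2 were skipped without decoding
```

On a real camera, `grab()` blocks once the buffer is empty, so flushing more frames than are actually buffered just waits for fresh ones, trading up to one frame period per extra grab for freshness.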

But as you can see, the algorithm works fantastically well on road surfaces.

It also does a great job of finding obstacles in an unknown environment. Sadly, there are no screenshots of this yet, but hopefully soon.

The next big step is to implement memory: have the system save the histograms from previous frames and use them to find obstacles in the current frame. This will remove the strong assumption that the reference area (green box above) is free of obstacles, and would allow the user to "train" the robot by driving it manually.
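A minimal sketch of such a memory, assuming each reference-area histogram is a mapping from a bin key (for example a quantised hue/saturation pair) to a pixel count. The class name `HistogramMemory`, the rolling window of `max_frames`, and averaging counts across frames are all illustrative choices, not the planned implementation.

```python
from collections import Counter, deque


class HistogramMemory:
    """Rolling memory of reference-area histograms from recent frames (hypothetical)."""

    def __init__(self, max_frames=10):
        # deque(maxlen=...) silently drops the oldest histogram when full
        self.frames = deque(maxlen=max_frames)

    def add(self, hist):
        self.frames.append(Counter(hist))

    def mean_count(self, bin_key):
        """Average pixel count for `bin_key` across the stored frames."""
        if not self.frames:
            return 0.0
        # Counter returns 0 for bins that never appeared in a frame
        return sum(h[bin_key] for h in self.frames) / len(self.frames)

    def is_obstacle(self, bin_key, threshold):
        # A bin rarely seen in remembered "safe" terrain is treated as an obstacle
        return self.mean_count(bin_key) < threshold


mem = HistogramMemory(max_frames=2)
mem.add({(5, 3): 90, (2, 1): 10})
mem.add({(5, 3): 80, (2, 1): 20})
mem.add({(5, 3): 70, (2, 1): 30})   # evicts the oldest histogram
print(mem.mean_count((5, 3)))       # 75.0
```

Averaging rather than summing keeps the threshold on the same scale as a single-frame histogram, so the same threshold can be reused whether the memory holds one frame or many.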

-

Some slight mechanical misfit

01/21/2016 at 16:55

So I finished assembling the new treads and test-fitted them on the chassis.

It looks good! The downside is that my CAD model was slightly off, so the spacers that position the sprockets to mesh with the chain on the bogie wheels are also off (by roughly 1/8 of an inch on each side). Oops. But my 3D printer has been fixed and the Taulman Bridge Nylon has arrived, so I will print some spacers. They will serve either as a temporary measure to check the new spacing or as a permanent solution if they work well (it really depends on how well they hold up).

That is all for now!

-

New treads have arrived!

01/19/2016 at 15:51

Just a quick update: the big news is that the new, improved treads have arrived and I have started assembling them.

I am very impressed with the quality and cannot wait to mount them on the chassis. I have also continued working on the software for the Cortex-M4 (Teensy 3.2) that controls the motors given a velocity and angle message sent over USB. Hopefully I can finish that up today. Now that I am back at college, I once again have access to the chassis.

That is all for now!

-

Mechanical and Electrical Improvements (12-15-2015)

12/15/2015 at 17:52

Sorry for the delay in updating this project, but it's the final stretch of the semester, so I have not had a lot of free time. However, I have found time to finalize several electrical and mechanical improvements, order parts, and render some new models to show the changes!

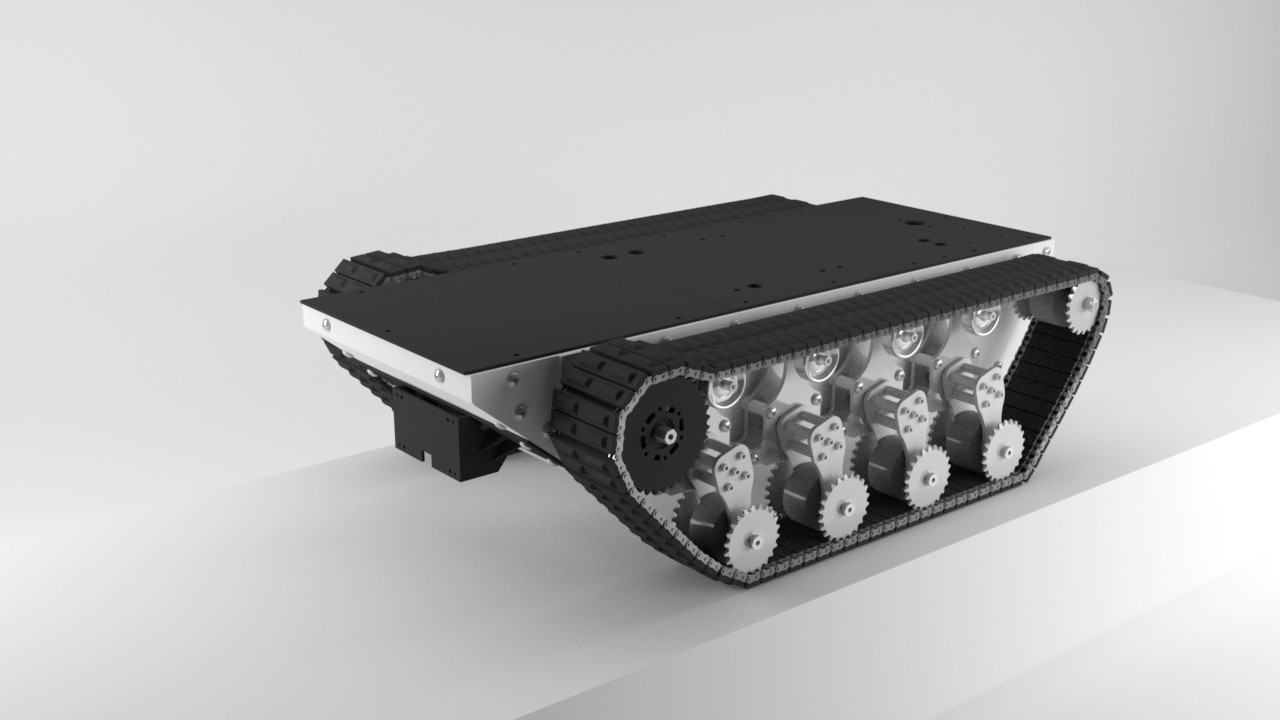

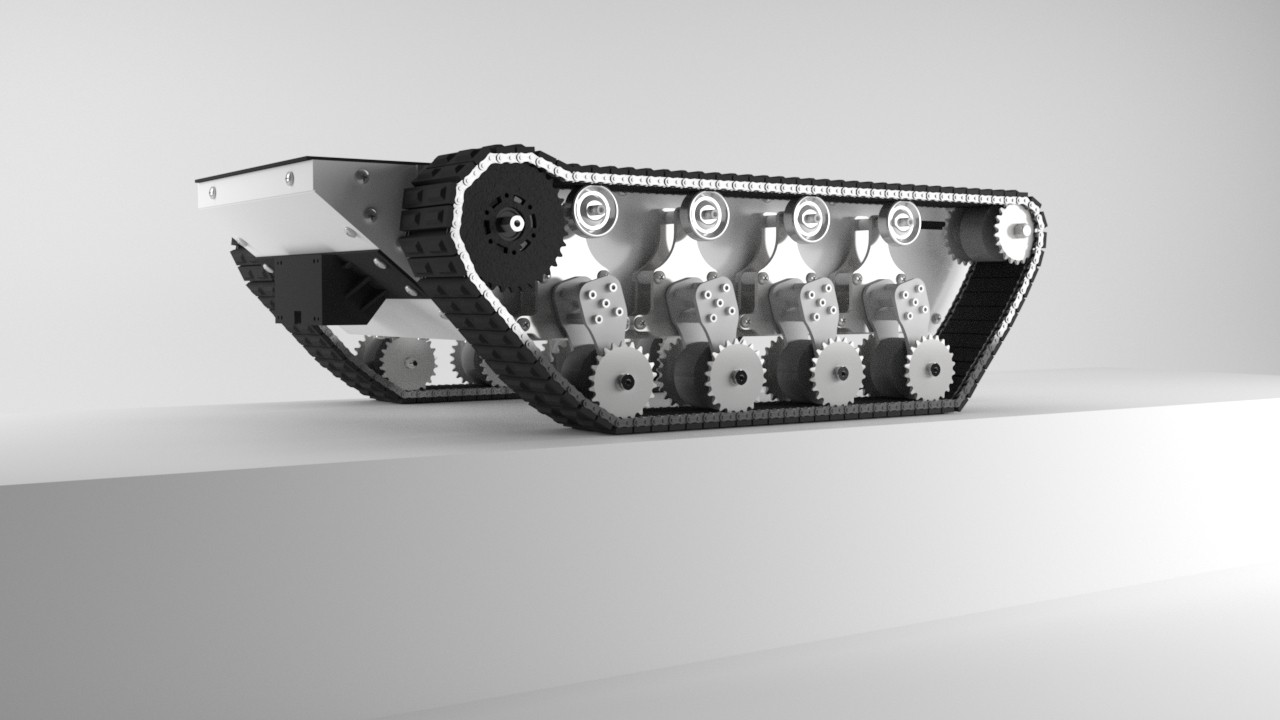

The biggest mechanical change is moving away from industrial conveyor belts (which work well on flat surfaces but are too slick to grip well) to jupdyke's Modular Continuous Track System! I'm excited to get my hands on the new tracks and see how well they perform. Since I can use steel sprockets and have more control over the length of the tracks, they should keep the system from throwing tracks as often as it does now. I should also be able to climb wet hills!

I've also finalized the design for the new camera mount and laser-cut an ABS prototype.

New renderings with improved tracks, sprockets, and the new camera mount:


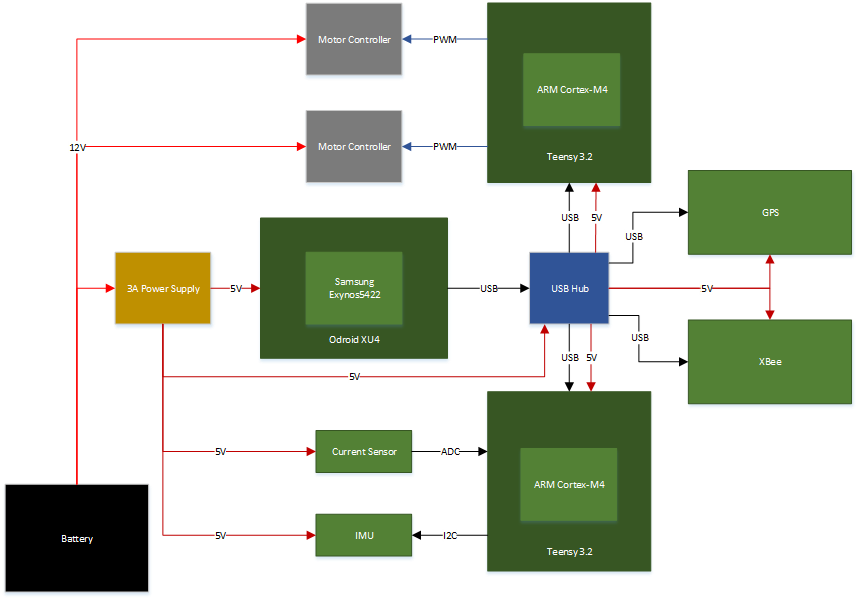

On the electronics front, I've installed OpenCV with OpenCL, OpenGL, TBB, and as many other optimizations as possible on the Odroid XU4, and have the obstacle detection algorithm running at 10 FPS. Taking a lesson from ROS and the embedded systems class here, I've tried to break the system into smaller parts that communicate with each other using standard messages, to make programming and debugging as easy as possible. The Odroid XU4 does the image processing and sends messages over USB to Teensy 3.2s, which interface with the sensors and motor controllers. This way, I can program and test the motion control and planning, obstacle detection, and sensors independently.
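The actual message format isn't described here, so the following is only a hypothetical example of a small fixed-size binary command frame the Odroid could send to a Teensy: an arbitrary start byte (0xAA), velocity and angular velocity as little-endian float32 (the Cortex-M4 on the Teensy 3.2 is little-endian), and a one-byte additive checksum. The names `encode_cmd`/`decode_cmd`, the header value, and the layout are all assumptions.

```python
import struct

HEADER = 0xAA                      # hypothetical start-of-frame marker
_PAYLOAD = struct.Struct("<ff")    # little-endian float32: velocity, angular velocity


def encode_cmd(velocity, angular_velocity):
    """Build a 10-byte frame: header, 8-byte payload, checksum."""
    payload = _PAYLOAD.pack(velocity, angular_velocity)
    checksum = sum(payload) & 0xFF  # simple additive checksum over the payload
    return bytes([HEADER]) + payload + bytes([checksum])


def decode_cmd(frame):
    """Return (velocity, angular_velocity), or None for a malformed frame."""
    if len(frame) != 1 + _PAYLOAD.size + 1 or frame[0] != HEADER:
        return None
    payload, checksum = frame[1:-1], frame[-1]
    if sum(payload) & 0xFF != checksum:
        return None
    return _PAYLOAD.unpack(payload)


frame = encode_cmd(1.5, -0.25)
print(len(frame), decode_cmd(frame))   # 10 (1.5, -0.25)
```

A fixed-size frame keeps the microcontroller-side parser trivial; a CRC would catch more errors than an additive checksum if the link turns out to be noisy.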

Updated Block Diagram:


That is all for now.

-William

-

Obstacle Detection and Avoidance

10/31/2015 at 16:46

Examples

Processed (White is impassable terrain)

Obstacle Detection:

based on and taken from:

Ulrich, I. 2000. Appearance-Based Obstacle Detection with Monocular Color Vision. In Proceedings of the AAAI National Conference on Artificial Intelligence.

Theory:

The basic algorithm uses histograms and a "safe" area to detect impassable terrain in the environment. The area directly in front of the robot is assumed to be clear of obstacles and is used as a reference, and a histogram of this safe region is generated. (The more advanced algorithm stores histograms from past travel and uses those as known "safe areas".) The image is then scanned pixel by pixel, and each pixel is looked up in its bin of the safe-region histogram. If the number of pixels in that bin is below some threshold, the pixel is classified as belonging to an obstacle. The HSV color space is used instead of RGB because it is more robust to changes in lighting.
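A minimal pure-Python sketch of the basic algorithm, for illustration only (the project's real implementation is in the repository linked below). Assumptions: the image is a list of rows of 8-bit (r, g, b) tuples, the safe region is simply the bottom `safe_rows` rows, and pixels are binned on hue and saturation with the value channel ignored; the bin counts and function names are hypothetical.

```python
import colorsys
from collections import Counter


def hsv_bin(rgb, hue_bins=16, sat_bins=4):
    """Quantise an 8-bit RGB pixel into a (hue, saturation) bin."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, _v = colorsys.rgb_to_hsv(r, g, b)   # each in [0, 1]
    # min() keeps h == 1.0 or s == 1.0 inside the last bin
    return (min(int(h * hue_bins), hue_bins - 1),
            min(int(s * sat_bins), sat_bins - 1))


def classify(image, safe_rows, threshold):
    """Return a mask with 1 for obstacle pixels and 0 for traversable ones.

    image: list of rows (top row first), each a list of (r, g, b) tuples.
    safe_rows: rows at the bottom of the image assumed obstacle-free.
    threshold: minimum count a bin needs in the reference histogram.
    """
    reference = image[-safe_rows:]
    hist = Counter(hsv_bin(px) for row in reference for px in row)
    return [[1 if hist[hsv_bin(px)] < threshold else 0 for px in row]
            for row in image]


red, green = (255, 0, 0), (0, 128, 0)
img = [[red, red], [green, green], [green, green]]
print(classify(img, safe_rows=2, threshold=2))   # [[1, 1], [0, 0], [0, 0]]
```

Dropping the value channel is what gives the lighting robustness in this sketch: a shadowed patch of the same green lands in the same bin as a sunlit one.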

Implementation:

Implementation so far can be found here: https://github.com/williamg42/AGV-IV/tree/master/Autonomous/Autonomous_Obstacle_Avoidance

The current code implements only the segmentation step, i.e., the basic algorithm described above. A more advanced algorithm is currently under development.

Obstacle Avoidance:

based on and taken from:

Horswill, I. 1994. Visual Collision Avoidance by Segmentation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, 902-909.

Let I(t) be the image at time t and let D(.) be the obstacle detection algorithm described above. We define the bottom projection ("bprojection") of the binary image J to be a vector b(J), indexed by x (horizontal) coordinate, whose x'th element is the height of the lowest marked pixel in the x'th column of J:

$$b_x(J) = \min\{\, y : J(x,y) = 1 \,\}$$
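The bprojection translates directly into code. This sketch assumes the binary mask is a list of rows with the top row first (the usual image layout), so y is counted upward from the bottom edge; a column with no marked pixel is assigned the full image height, which is an assumption meaning "free all the way to the top of the frame". The function name is hypothetical.

```python
def bprojection(mask):
    """Bottom projection b(J) of a binary mask (list of rows, top row first).

    Element x is the height, in rows above the bottom edge, of the lowest
    marked pixel in column x. Columns with no marked pixel get the image
    height (assumed: no obstacle visible in that direction).
    """
    height = len(mask)
    width = len(mask[0])
    b = []
    for x in range(width):
        free = height
        for y in range(height):                 # y = 0 is the bottom row
            if mask[height - 1 - y][x] == 1:
                free = y
                break
        b.append(free)
    return b


mask = [[1, 0, 0],
        [0, 0, 1],
        [0, 0, 0]]
print(bprojection(mask))   # [2, 3, 1]
```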

Under the right conditions (see Horswill's paper), b(D(I)) is a radial depth map: a mapping from direction to the amount of free space in that direction. Given the radial depth map b(D(I(t))), we define the left, right, and center freespaces to be the distances to the closest objects to the left, right, and center:

$$l = \min_{x < x_c} b_x(D(I(t))), \qquad r = \min_{x > x_c} b_x(D(I(t))), \qquad c = \min_{|x - x_c| < w} b_x(D(I(t)))$$

where x_c is the x coordinate of the center column of the image, and w is a width parameter.
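Given a bprojection vector b, the three freespaces are minimums over column ranges: the left freespace l over columns left of the center column x_c, the right freespace r over columns right of it, and the center freespace c over columns with |x - x_c| < w. A short sketch, assuming x_c is the middle index of b, w >= 1, and b has at least three entries; the function name is hypothetical.

```python
def freespaces(b, w):
    """Left, right and center freespace (l, r, c) from a bottom projection b."""
    xc = len(b) // 2                                 # center column index
    left = min(b[:xc])                               # columns with x < xc
    right = min(b[xc + 1:])                          # columns with x > xc
    center = min(b[max(0, xc - w + 1): xc + w])      # columns with |x - xc| < w
    return left, right, center


print(freespaces([5, 4, 9, 2, 7], w=2))   # (4, 2, 2)
```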

Now, if we assume the robot's translational and angular velocities can be commanded directly (they cannot, since it is a tracked platform, but angular and translational velocities can be converted to left and right wheel velocities fairly easily), the control law is:

$$\frac{d\theta}{dt} = c_\theta\,(l - r), \qquad v(t) = c_v\,(c - d_{min})$$

where dθ/dt is the angular velocity, v(t) is the translational velocity, d_min is the closest the robot should ever come to an obstacle, and c_θ and c_v are user-defined gains. This should keep the robot from hitting anything, but it will not maintain the robot's previous heading, which is fine for now. There will also be a velocity cap on these equations to limit the robot's maximum speed, which is omitted here for simplicity.
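A sketch of the steering step, assuming Horswill's control law dθ/dt = c_θ(l − r) and v = c_v(c − d_min), with a symmetric speed cap `v_max` standing in for the unspecified velocity cap, followed by the standard differential-drive conversion to left/right track speeds. `track_width` (distance between track centerlines) and the function names are assumptions; real tracks slip when turning, so the effective width usually has to be tuned.

```python
def control(l, r, c, d_min, c_theta, c_v, v_max):
    """Turn toward the freer side and slow down as the center gets blocked."""
    omega = c_theta * (l - r)               # dtheta/dt; positive turns left
    v = c_v * (c - d_min)                   # negative when closer than d_min (backs away)
    v = max(-v_max, min(v, v_max))          # assumed symmetric speed cap
    return v, omega


def track_speeds(v, omega, track_width):
    """Convert (v, omega) into left/right track speeds for a differential drive."""
    left = v - omega * track_width / 2.0
    right = v + omega * track_width / 2.0
    return left, right


v, omega = control(l=10, r=4, c=8, d_min=3, c_theta=0.5, c_v=0.5, v_max=2.0)
print(v, omega, track_speeds(v, omega, track_width=0.5))   # 2.0 3.0 (1.25, 2.75)
```

Because l > r in this example, omega is positive and the right track runs faster, turning the robot toward the more open left side.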

-

Waterproof USB Web-camera with Wide-Angle Lens

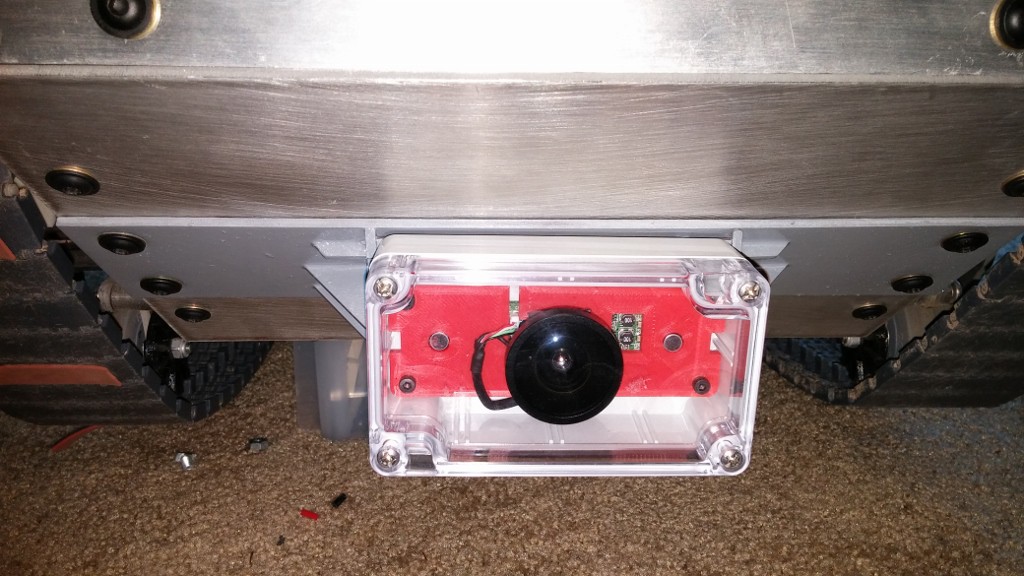

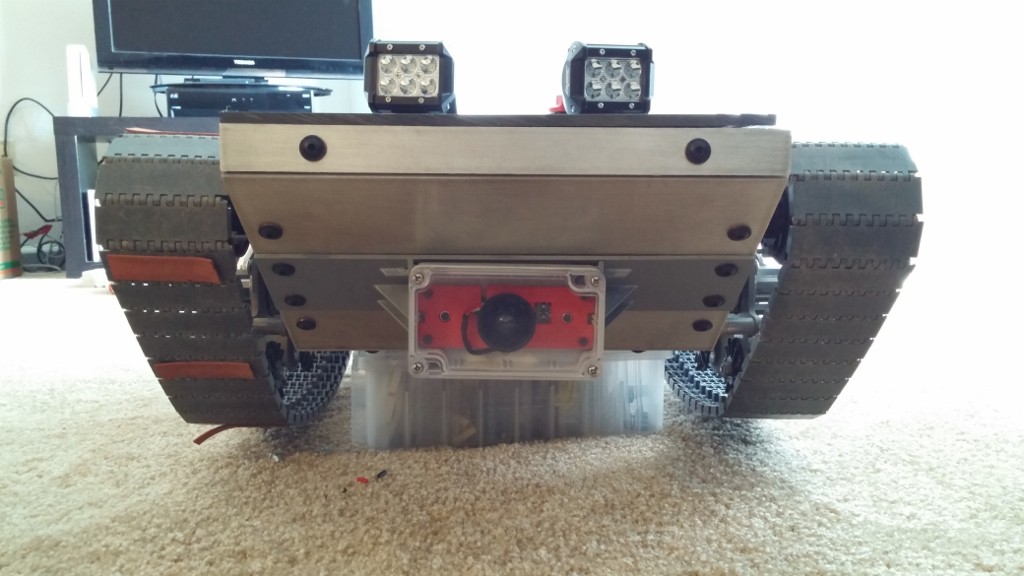

10/21/2015 at 13:38

One of the important design aspects of this project is a sensor system for detecting obstacles in the path of travel. However, to stay in line with the project theme of being ruggedized and usable in all weather conditions, this sensor system needs to be housed in an IP66 enclosure and firmly attached to the robot. Most off-the-shelf sensors in the hobbyist price range are not designed to handle rough use or exposure to water or mud. To meet this goal, I decided to use a USB web-camera and modify its mechanical housing.

In particular, the Logitech C920. I removed it from its default housing, designed a custom 3D-printed mount, and epoxied the entire assembly into a polycarbonate and ABS box. Combined with a cheap wide-angle lens, I now have a sensor that can get wet and will hopefully handle the abuse the system is designed to take.

On a side note, the grey bracket the camera is mounted to is a quick prototype made with a 40-watt CO2 laser engraver. It is more of a placeholder until I finalize the design and get it manufactured out of aluminium or Delrin.

Expandable Ruggedized Robotic Platform

Robotic platform designed to operate in the harsh conditions experienced in outdoor environments. Modular, with easy-to-replace components.

williamg42
