Firefly — A Low-Cost Flying Robot to Save Lives

Firefly is a low-cost, modular flying robot for first responders that uses intelligent, nature-inspired algorithms to navigate autonomously.

After designing the mapping and navigation algorithms for Firefly, I wanted to create a flying robot so that I could test my work in real-world environments. At this point, I wasn't ready to build a flying robot from scratch, so I added sensing and processing hardware to a Parrot AR.Drone. There were a few important considerations when designing this hardware that I'm going to cover in this log.

Selecting a single-board computer

My notes are below. Ultimately, I chose the ODROID-C1 because it was relatively fast but also inexpensive and energy-efficient. (The notes refer to UncannyCV, a computer vision library optimized for ARMv7-A processors.)

Designing a sensor module

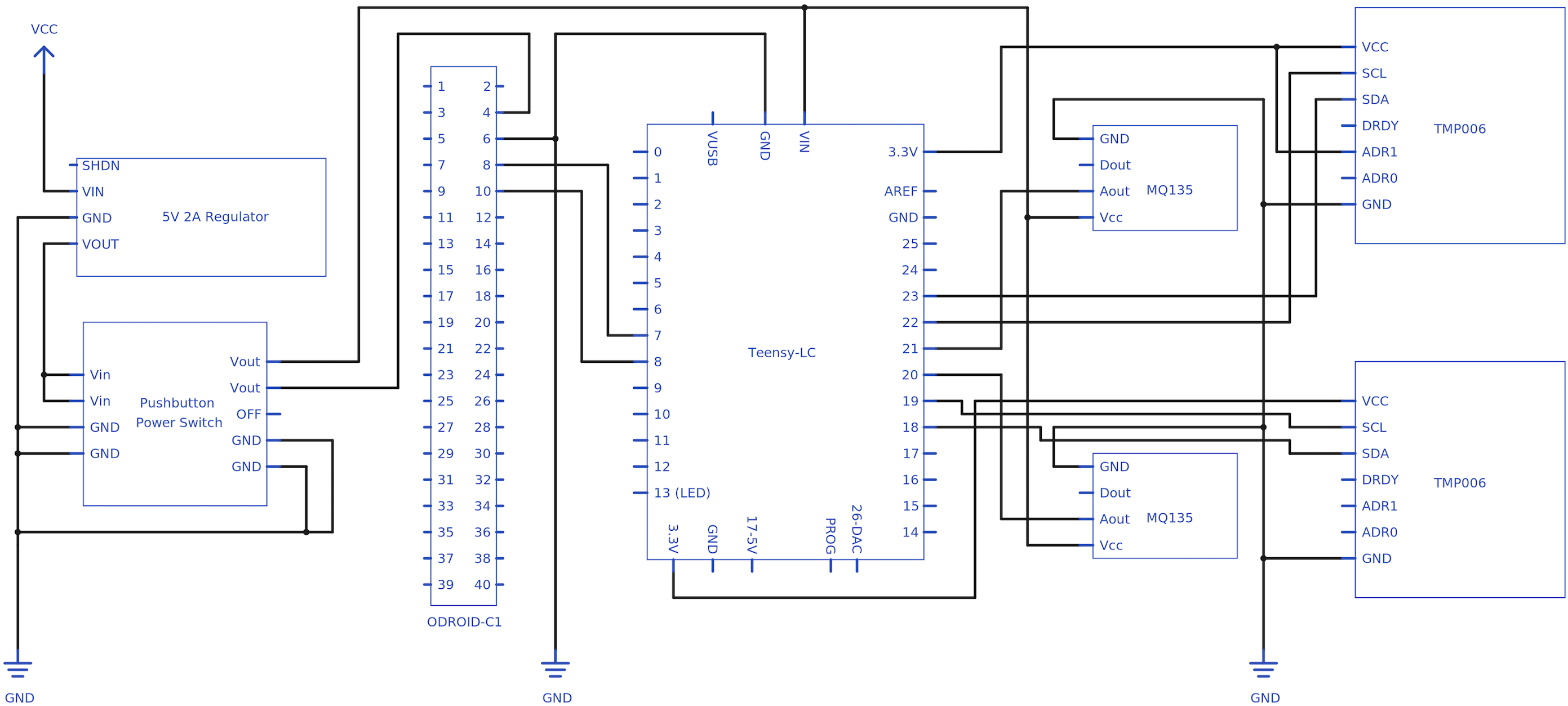

I also needed to design a sensor module that could work with my navigation algorithm to locate fires. My notes are below:

Also, here's a final schematic of my sensor module:

Selecting a specific gas sensor for fire detection

Here are my notes on this:

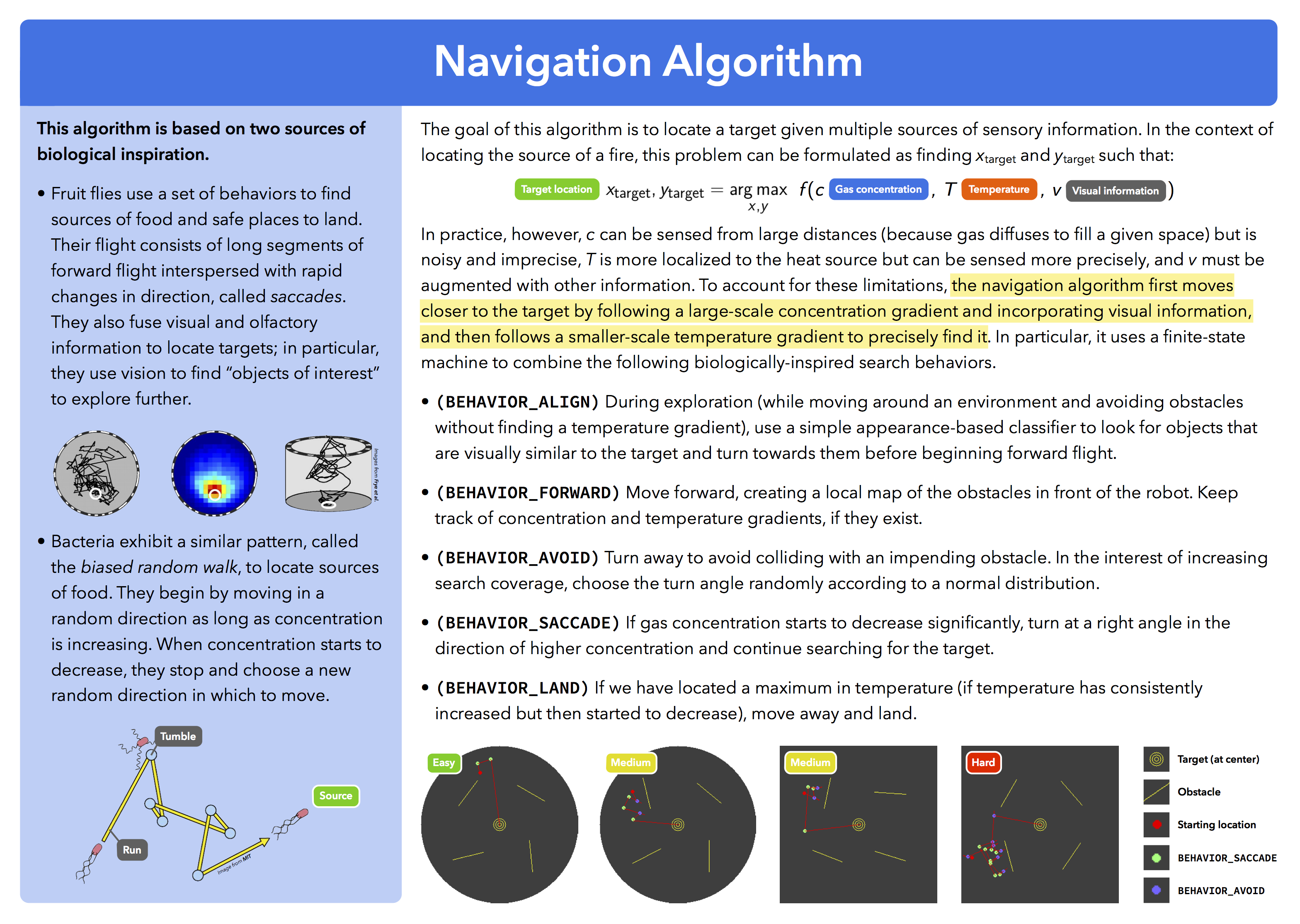

The third component of Firefly's algorithmic layer deals with the problem of autonomously locating a target (like a trapped victim after a disaster or the source of a fire in a burning building) by combining multiple different sensory inputs. The problem with existing approaches is that they largely focus on taking precise measurements at several locations and then using those measurements to compute the location of the target.

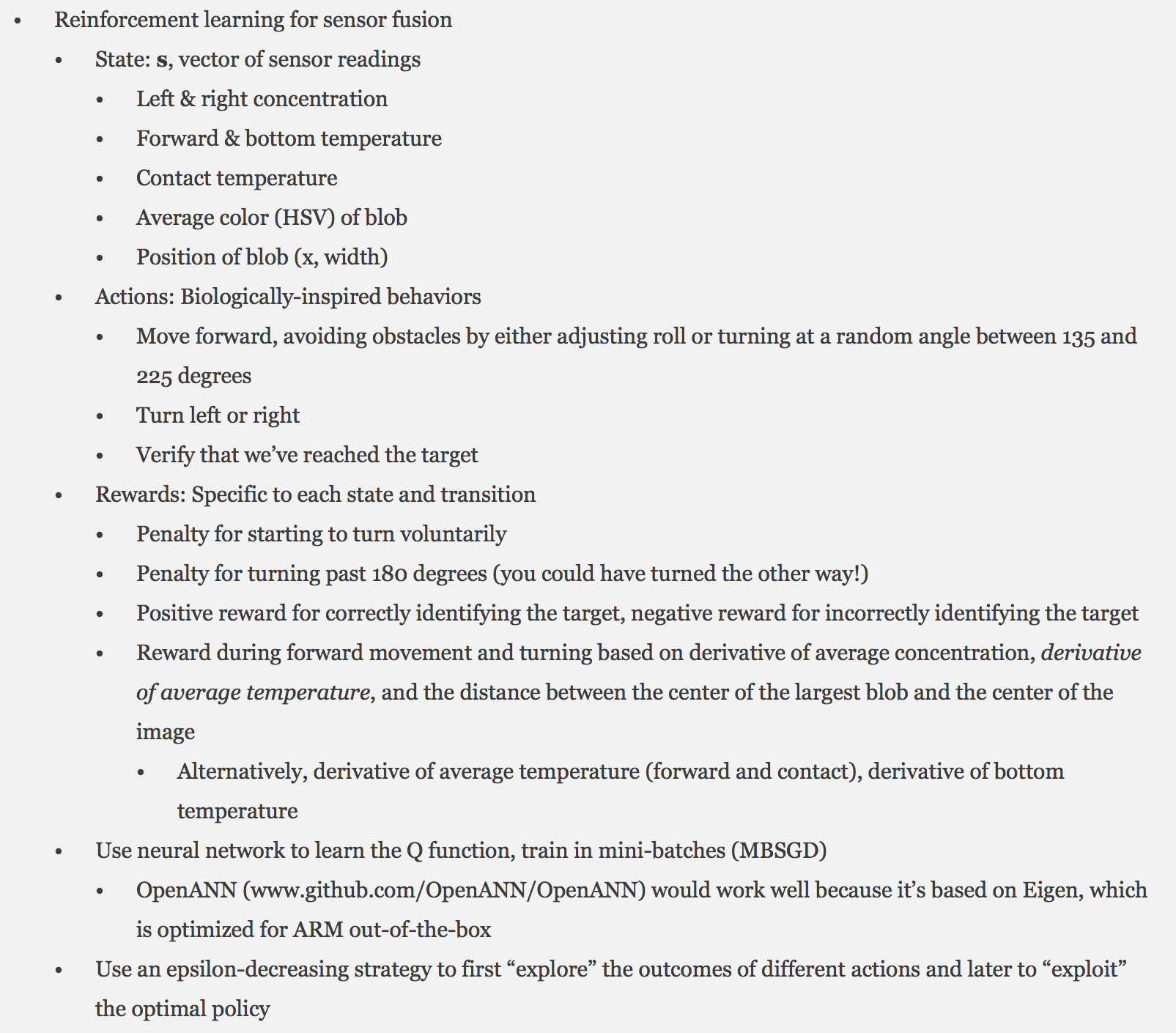

Approach 1: Reinforcement Learning

Initially, this seemed like a classic reinforcement learning problem, because it's easy to formulate the problem in terms of states (sensor readings), actions (robot behaviors), and rewards (when you get closer to the target). I implemented this on my first prototype of Firefly—here are my notes:

While this approach sounded good in theory, there were a few problems that made it infeasible. First, it required extensive training for each use case. It also didn't generalize well; I found that it worked only in the specific cases for which it was trained. Additionally, it wasn't robust to noisy sensor readings, which was especially problematic because I was using very cheap sensors that produced a useful output but also had significant noise. Because of this, I quickly decided to move on to a simpler, behavior-based approach, described below.
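To make the state/action/reward formulation concrete, here is a minimal tabular Q-learning sketch. It is not the code from the first prototype: the one-dimensional corridor, the noise-free linear sensor model, the two behaviors (step left, step right) and all parameter values are assumptions chosen for illustration. The reward is +1 when the reading rises and -1 otherwise, matching the idea of rewarding the robot for getting closer to the target.

```python
import random

# Toy world (an assumption for illustration): a 1-D corridor of N_CELLS cells
# with the target in the last cell. The sensor reading rises linearly as the
# robot gets closer, so each discretized reading maps to exactly one position.
N_CELLS = 10
TARGET = N_CELLS - 1
ACTIONS = (-1, +1)  # robot behaviors: step left, step right


def sensor_reading(pos: int) -> float:
    """Noise-free reading in [0, 1] that peaks at the target."""
    return 1.0 - abs(TARGET - pos) / TARGET


def to_state(reading: float) -> int:
    """State = sensor reading quantized into N_CELLS levels."""
    return round(reading * TARGET)


def best_action(q: dict, state: int) -> int:
    """Greedy behavior for a state (ties go to the first action)."""
    return max(ACTIONS, key=lambda a: q.get((state, a), 0.0))


def train(episodes=500, alpha=0.5, gamma=0.5, epsilon=0.2, seed=0) -> dict:
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = {}  # (state, action) -> estimated return; missing entries count as 0
    for _ in range(episodes):
        pos = rng.randrange(TARGET)  # random start that is not the target
        for _ in range(50):
            state = to_state(sensor_reading(pos))
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = best_action(q, state)  # exploit
            new_pos = min(max(pos + action, 0), TARGET)
            # Reward: +1 when the reading rises (closer to target), -1 otherwise.
            reward = 1.0 if sensor_reading(new_pos) > sensor_reading(pos) else -1.0
            done = new_pos == TARGET
            next_state = to_state(sensor_reading(new_pos))
            future = 0.0 if done else max(q.get((next_state, a), 0.0) for a in ACTIONS)
            old = q.get((state, action), 0.0)
            q[(state, action)] = old + alpha * (reward + gamma * future - old)
            pos = new_pos
            if done:
                break
    return q


q_table = train()
policy = [best_action(q_table, s) for s in range(TARGET)]
print(policy)  # → [1, 1, 1, 1, 1, 1, 1, 1, 1]
```

This toy world sidesteps the problems described above: its readings are noise-free, and the learned table is only valid for the single corridor it was trained on.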

Approach 2: Following Multiple Gradients

This approach worked much more reliably, both in simulation (see below) and in some real-world experiments. Here's a graphic I made giving an overview of this approach:

Evaluation

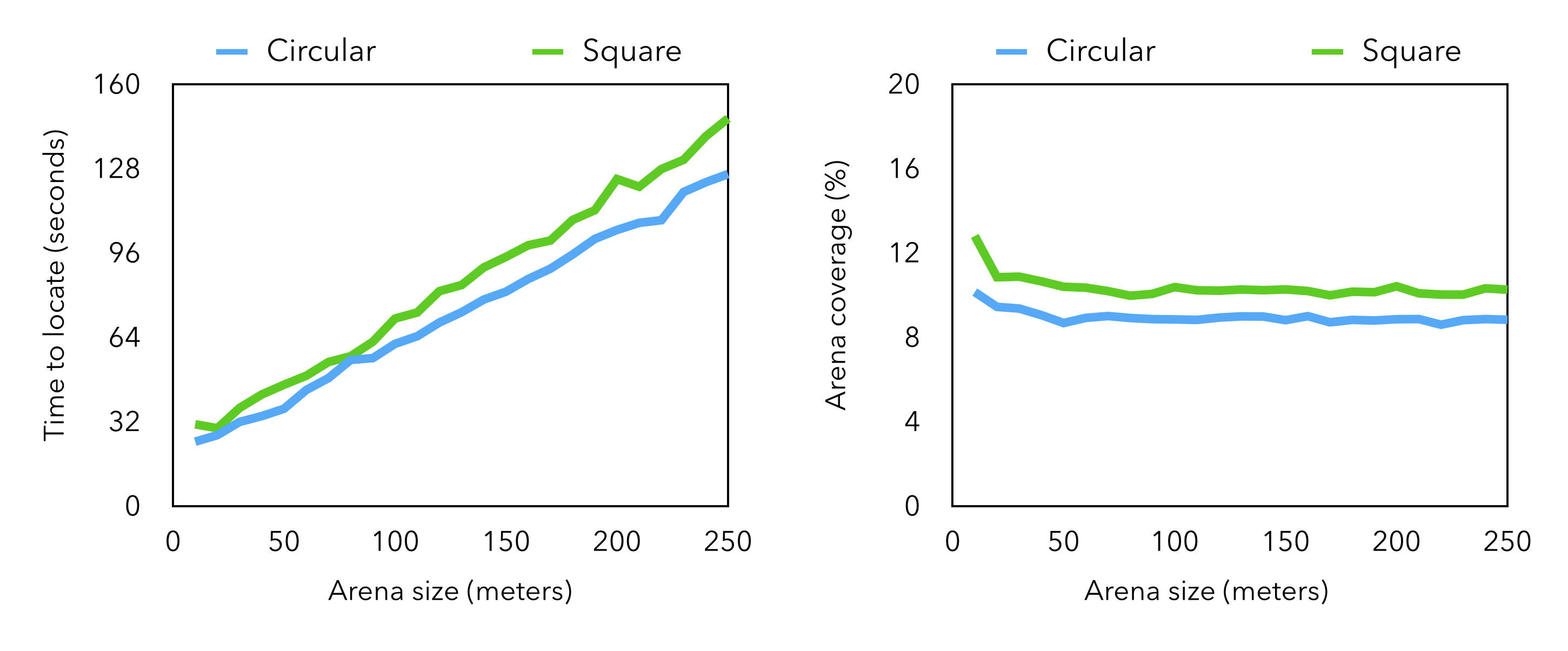

I created a simulation to see how this algorithm would perform in a variety of environments. In the simulation, the robot starts out with a random position and orientation inside a square arena (with a certain side length) or circular arena (with a certain diameter) and moves 0.1 meters per loop iteration. The arena contains four identical obstacles with random orientations; the robot has to avoid these obstacles and the arena walls. Concentration and temperature are modeled with a two-dimensional Gaussian distribution centered in the arena; the distribution for concentration fills the arena, while the distribution for temperature is limited to a small area around the target.
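The simulation described above can be approximated with a short sketch. This is an illustration under stated assumptions, not the original simulator. Only the 0.1 m step per iteration, the random start pose, and the centered 2-D Gaussian fields (a wide one for concentration, a narrow one for temperature) come from the description. The arena size, Gaussian widths, temperature threshold, turn limit and goal radius are hypothetical values. The four obstacles are omitted. The gradient-following behavior is a simple finite-difference gradient ascent that switches from the concentration gradient to the temperature gradient once heat is detectable.

```python
import math
import random

SIDE = 10.0             # square arena side length in meters (assumed)
CENTER = (SIDE / 2, SIDE / 2)
STEP = 0.1              # meters moved per loop iteration (from the text)
CONC_SIGMA = SIDE / 2   # wide Gaussian: concentration fills the arena (assumed)
TEMP_SIGMA = 0.5        # narrow Gaussian: heat only near the target (assumed)
TEMP_THRESHOLD = 0.05   # follow temperature above this reading (assumed)
MAX_TURN = math.pi / 4  # maximum heading change per iteration (assumed)
GOAL_RADIUS = 0.25      # distance that counts as "found the target" (assumed)


def gaussian(x, y, sigma):
    """Unnormalized 2-D Gaussian centered in the arena (peak value 1)."""
    dx, dy = x - CENTER[0], y - CENTER[1]
    return math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))


def concentration(x, y):
    return gaussian(x, y, CONC_SIGMA)


def temperature(x, y):
    return gaussian(x, y, TEMP_SIGMA)


def gradient_heading(field, x, y, h=0.01):
    """Direction of steepest ascent, estimated with central differences."""
    gx = (field(x + h, y) - field(x - h, y)) / (2 * h)
    gy = (field(x, y + h) - field(x, y - h)) / (2 * h)
    return math.atan2(gy, gx)


def run_trial(rng, max_steps=1000):
    """One run from a random start pose; True if the target is reached."""
    x, y = rng.uniform(0, SIDE), rng.uniform(0, SIDE)
    heading = rng.uniform(-math.pi, math.pi)
    for _ in range(max_steps):
        if math.hypot(x - CENTER[0], y - CENTER[1]) < GOAL_RADIUS:
            return True
        # Follow the temperature gradient once heat is detectable,
        # otherwise the wider concentration gradient.
        field = temperature if temperature(x, y) > TEMP_THRESHOLD else concentration
        target = gradient_heading(field, x, y)
        # Turn toward the gradient, limited to MAX_TURN per iteration.
        error = math.atan2(math.sin(target - heading), math.cos(target - heading))
        heading += max(-MAX_TURN, min(MAX_TURN, error))
        # Move one step and clamp to the arena so walls are never crossed.
        x = min(max(x + STEP * math.cos(heading), 0.0), SIDE)
        y = min(max(y + STEP * math.sin(heading), 0.0), SIDE)
    return False


def success_rate(trials=200, seed=1):
    rng = random.Random(seed)
    return sum(run_trial(rng) for _ in range(trials)) / trials


print(f"success rate: {success_rate():.0%}")
```

Because the readings here are exact and there are no obstacles, this sketch should succeed on almost every trial. Adding sensor noise and obstacles is what makes a simulation like this informative.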

You can see the results of a few simulation runs above (they're the diagrams at the bottom of the image). I also used the simulation to quantify the effectiveness of this algorithm, and I was very happy with the results—the simulation indicated that the algorithm is effective (~98% success rate given the ten-minute flight time of a typical quadrotor), efficient, and scales well to large environments. Here are some specifics on what I found:

I also conducted some real-world testing with a group of local firefighters—I'll talk more about that in a future log. Until then, if you want to see the algorithm in action, my TEDxTeen talk (linked in the sidebar) includes a video of one of these experiments.

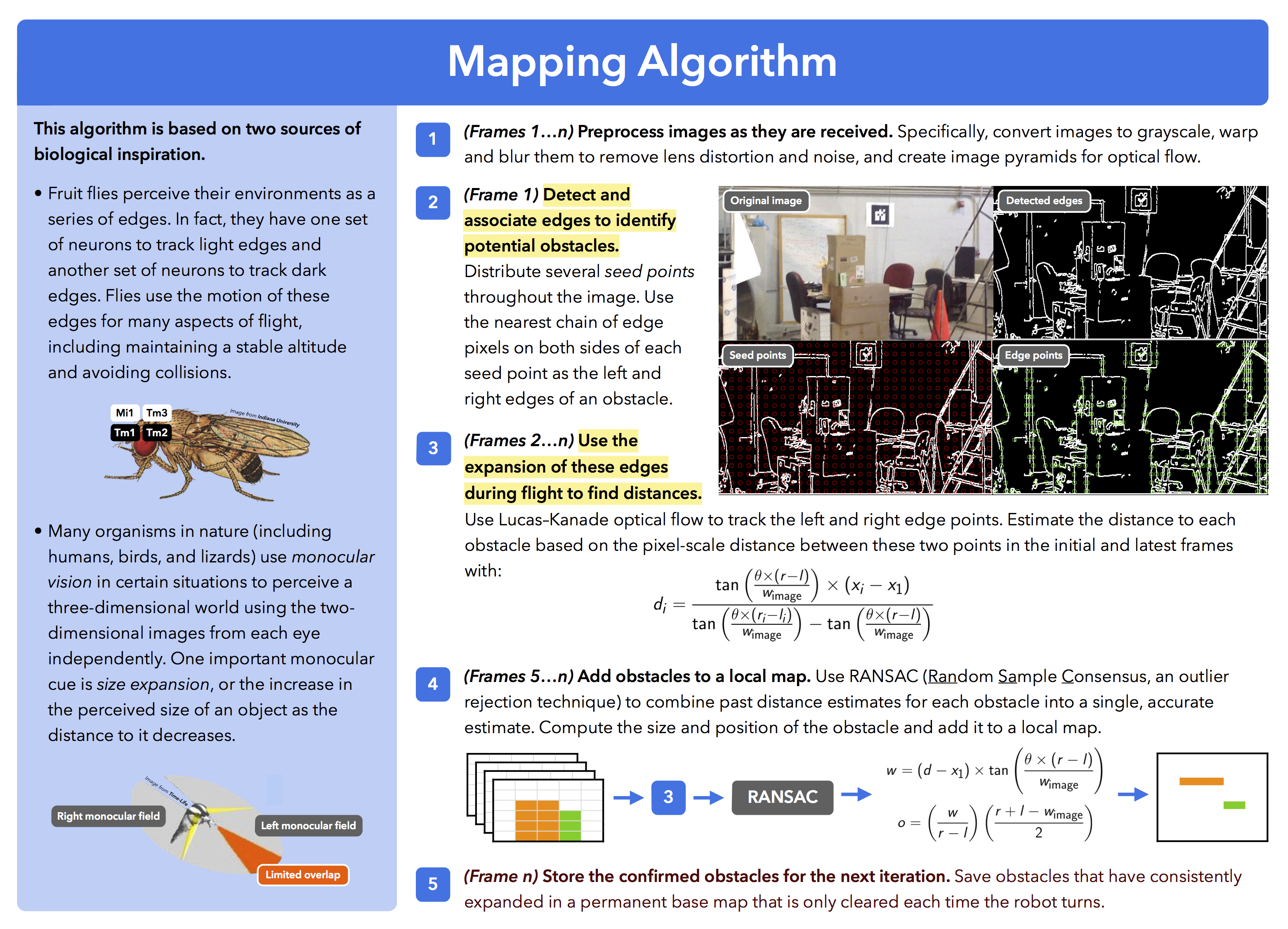

The second component of Firefly's base algorithmic layer deals with the problem of avoiding collisions with obstacles. Most existing algorithms for mapping and obstacle avoidance use complex, sophisticated sensors, like depth cameras or laser scanners. The problem with these sensors is that they're large, heavy, expensive, and draw a lot of power. Mapping with a single camera (or monocular mapping) is a good alternative, but it's a much harder problem because you're trying to use fundamentally two-dimensional information to understand a three-dimensional environment. While a few algorithms exist for this task, they're either limited to simple, structured environments or are computationally intensive and difficult to run in real time. Because of these limitations, I had to develop my own algorithm for Firefly.

Approach

Here's a graphic illustrating how my monocular mapping algorithm works:

This algorithm proved to be able to avoid obstacles of different sizes, shapes, colors, and textures—more on this in a future log! Until then, if you really want to see it in action, my TEDxTeen talk (linked in the sidebar) has a video showing some of my results.

(This is the first of a series of logs that I'll be posting over the next few days giving a brief overview of the work I've done on Firefly to date, and what I'm hoping to get done over the next few months.)

One of the components of Firefly's base algorithmic layer deals with escaping from moving threats in complex, dangerous environments by mimicking how fruit flies avoid swatters—so, for example, if one of these robots is carrying out a search and rescue mission after an earthquake and the ceiling collapses or an object falls, this algorithm helps the robot recognize it and move away in time. This is where the story of Firefly begins, so, in this log, I'll talk about my inspiration for the project, and describe the process of designing and implementing hardware and software for escaping from moving threats.

Inspiration

Firefly started out as a high school science fair project. In the summer before ninth grade, my family returned from a vacation to find our house filled with fruit flies, because we had forgotten to throw out some bananas on the kitchen counter before we left. I spent the next month constantly trying to swat them and getting increasingly frustrated when they kept escaping, but I also couldn't help but wonder about what must be going on "under the hood"—what these flies must be doing to escape so quickly and effectively.

It turns out that the key to the fruit fly's escape is that it accomplishes a lot with very little. It uses very simple sensing (its eyes, in particular, have the resolution of a 26 by 26 pixel camera), but because it has so little sensory information to begin with, it can process all of that information very quickly to detect motion. As a result, fruit flies are able to see ten times faster than we can, even with brains a millionth as complex as ours, and this is what enables them to respond to moving threats so quickly.
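A quick calculation shows how little data a 26 by 26 pixel eye produces, and a simple frame-differencing check shows how cheaply motion can be detected at that resolution. The sketch below is a generic frame-differencing detector written for illustration; it is not a model of the fly's actual motion-detection circuitry, and the brightness-change threshold and minimum changed fraction are assumed values.

```python
# Pixel budget: a 26 x 26 "fly eye" versus a 640 x 480 (VGA) camera frame.
FLY_PIXELS = 26 * 26
VGA_PIXELS = 640 * 480
print(f"{FLY_PIXELS} vs {VGA_PIXELS} pixels ({VGA_PIXELS / FLY_PIXELS:.0f}x more)")


def motion_fraction(prev, curr, threshold=30):
    """Fraction of pixels whose brightness changed by more than `threshold`."""
    changed = sum(
        1
        for row_prev, row_curr in zip(prev, curr)
        for p, c in zip(row_prev, row_curr)
        if abs(c - p) > threshold
    )
    return changed / (len(curr) * len(curr[0]))


def detect_motion(prev, curr, min_fraction=0.02):
    """Flag motion when enough of the image changed between two frames."""
    return motion_fraction(prev, curr) >= min_fraction


# Example: a dark 5 x 5 pixel object appears against a bright background.
blank = [[200] * 26 for _ in range(26)]
with_object = [row[:] for row in blank]
for r in range(10, 15):
    for c in range(10, 15):
        with_object[r][c] = 20

print(detect_motion(blank, blank))        # False
print(detect_motion(blank, with_object))  # True: 25 / 676 ≈ 3.7% changed
```

A 26 by 26 frame has 676 pixels, about 454 times fewer than a 640 by 480 frame, so comparing two whole frames touches only 676 pixel pairs.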

Around the same time, drones were in the news a lot. It quickly became clear that although they had tremendous potential to help after emergencies or natural disasters, they simply weren't robust or capable enough. One specific problem was that these robots weren't able to react to fast-moving threats in their environments. Existing collision-avoidance algorithms don't work here because they're computationally intensive and rely on complex sensors, so I made the connection to fruit flies: I wanted to see whether we could apply the same simplicity that makes the fruit fly so effective at escaping to make flying robots better at reacting to their environments in real time.

Building a sensor module to detect approaching threats

I had planned to use a small camera, combined with some simple computer vision algorithms, to detect threats. However, I found that even the lowest-resolution cameras available today create too much data to process in real time, especially on the 72 MHz ARM Cortex processor on the Crazyflie, the quadrotor on which I wanted to implement my work. I came up with a few alternatives and I eventually settled on these infrared distance sensors. (Sharp has since released a newer version that's twice as fast and has twice the range, so that's what I'm planning to use for my latest prototype.) The beauty of this approach is that each of these sensors produces a single number, compared to laser scanners or depth cameras, which produce tons of detailed data that’s nearly impossible to process and react to quickly.
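Because each infrared sensor reports a single distance, detecting an approaching threat can be as simple as watching how fast that number shrinks. The following sketch is a hypothetical illustration, not the Crazyflie firmware: the class name, sample period, window length, closing-speed threshold and maximum range are all assumed values, and the raw sensor output would first need to be converted to meters.

```python
from collections import deque


class ApproachDetector:
    """Flags a threat when range readings shrink quickly.

    All thresholds are hypothetical placeholders, not tuned values.
    """

    def __init__(self, dt=0.02, window=3, min_speed=1.0, max_range=1.0):
        if window < 2:
            raise ValueError("window must hold at least two readings")
        self.dt = dt                # seconds between samples (assumed)
        self.min_speed = min_speed  # closing speed treated as a threat, m/s
        self.max_range = max_range  # ignore objects farther than this, m
        self.readings = deque(maxlen=window)

    def update(self, distance_m):
        """Add one reading (meters); return True if something closes in fast."""
        self.readings.append(distance_m)
        if len(self.readings) < self.readings.maxlen:
            return False  # not enough history yet
        elapsed = self.dt * (len(self.readings) - 1)
        # Average closing speed over the window (positive = approaching).
        speed = (self.readings[0] - self.readings[-1]) / elapsed
        return distance_m < self.max_range and speed > self.min_speed


detector = ApproachDetector()
for reading in (0.90, 0.85, 0.80):   # object approaching at about 2.5 m/s
    print(detector.update(reading))  # False, False, True
```

Averaging over a short window rather than differencing just two samples gives a little robustness to a single noisy reading, at the cost of a slightly later detection.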

Initially, I wanted to use four of these sensors to precisely sense the direction from which a threat is approaching. I also wanted to use a separate microcontroller and battery to avoid directly modifying the Crazyflie's electronics. I built a balsa wood frame for the sensors, attached a Tinyduino, and added a small battery, and... my robot wouldn't take off, because it didn't have enough payload capacity to carry all of this added weight. I performed lift measurements and soon found that I could use a maximum...

I'd like to try flying a quad in a burning building... Sounds like an exciting flight.

Autonomously, you may find new challenges because of the fire... aside from smoke, debris, and other obvious hazards, what about thermal effects on airflow in a burning structure?

Once a firefighter punches the window out with his axe, and tosses the little robot in, does the sudden in or out rush of air get accounted for? Also, at times this very procedure of breaching the structure for entry of personnel, water/chemicals, or even robots can cause a pretty serious lasting inward draw of air, creating gusts within the structure, which can loosen debris or fan a fire into an inferno, etc etc.

As for longevity, surely there is an appropriate nylon or other plastic that holds up well in high temperatures for the rotors... Still probably want to shield as much of the electronics and wiring as you can though.

I absolutely agree with your posting FLOZ! However, if he keeps the FireFly around the horizontal periphery of the incident structure and stays away from vertical updrafts, he should be OK even without shielding. In that case the FF should be equipped with a power zoom lens camera to compensate for not going into a hot spot. Also maybe something with FLIR too. By removing the camera's IR filter, it can be made sensitive to infrared. It could have an IR tracking sensor to look for hot spots to AVOID. It also needs a firefighter's pulser lamp and toner so ground responders can see and hear it and avoid hitting it with fire hoses and ladders. It can't do much good with rescue sound detection of trapped humans, as the quad's rotor noise is too high for that (unless it lands on a safe ledge, powers down, and listens). Also, a light communications/power tether would be a nice touch. That way it could have a positive signal return of video and telemetry and, if armored enough (i.e. Kevlar), a way to rescue the FF if it gets trapped under falling debris. The tether could also supply power to make mission sorties longer.

Low cost, quad copter.. sure.. But flying one into a burning building? ... with plastic blades... exposed circuits... The mental images of what happens next are at least amusing.

If you're bound and determined to put this thing into an inferno.. You really need to be thinking about some type of fire resistant exterior and blades.

I think the temperatures are a smaller problem than Windows, Doors and Smoke...

But in general I like the idea.

An ultrasonic range detector could help avoid walls and solid structures in smoke. I think the quad's rotors would clear most smoke away. I think this FireFly is not a small quad either. The detector would also make glass in windows opaque and avoidable for the quad.

Well, the name doubling is confusing. Firefly is already used for ARM RK3288 based development board http://en.t-firefly.com/en/

You're right and they COPYRIGHTED the name Firefly too last year. He could rename it FireFly or Fire-Fly or something creative like that to avoid a lawsuit. What the heck does that product actually do?

If you need a rudimentary camera with as little video data as possible, look up RAMera. It was an old idea to take the top off of a RAM chip and use its digital output as a digital camera. The resulting resolution is really bad, probably as bad as a fruit fly's vision.

You could rename your project: VIDalia

Vidalia is a genus of fruit flies in the family Tephritidae. Also the prefix VID denotes something to do with the eye like in video. That may be apropos?