Up until now I've been documenting my work on RAIN (formerly known as *Raiden*) on my blog at https://jjg.2soc.net/category/rain/. This includes information about the previous phase of the project (Mark I) and longer discussions of the philosophy behind the work (and why I think it matters).

Here I'm going to focus on documenting ongoing work on a specific machine, the RAIN Mark II Personal Supercomputer.

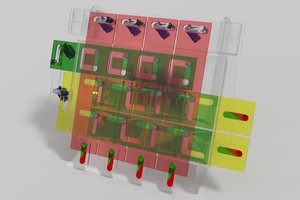

Mark II is an 8-node ARM computing cluster with a Gigabit Ethernet interconnect. It combines PINE64 SOPINE modules and a PINE A64 single-board computer to create a distributed-memory supercomputer with up to 32 ARM cores, 16 vector/GPU cores and 16 GB of memory in a small, clean (and I think, beautiful), easy-to-use package.
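As a quick sanity check on those totals, here is a minimal sketch of the arithmetic. The per-node figures (4 ARM cores, 2 GPU cores and 2 GB of memory per node) are my assumption, inferred by dividing the totals above across the 8 nodes, and the names `NodeSpec` and `cluster_totals` are made up for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Resources of one node, or of the whole cluster once summed."""
    cpu_cores: int
    gpu_cores: int
    memory_gb: int


# Assumed per-node figures, inferred from the stated totals; not taken from a datasheet.
NODE = NodeSpec(cpu_cores=4, gpu_cores=2, memory_gb=2)
NODE_COUNT = 8  # stated above: Mark II is an 8-node cluster


def cluster_totals(node: NodeSpec, count: int) -> NodeSpec:
    """Multiply one node's resources by the number of identical nodes."""
    return NodeSpec(
        cpu_cores=node.cpu_cores * count,
        gpu_cores=node.gpu_cores * count,
        memory_gb=node.memory_gb * count,
    )


totals = cluster_totals(NODE, NODE_COUNT)
print(totals)
```

Since this is a distributed-memory machine, the 16 GB is the sum across nodes and not one shared pool; under the assumption above, each node only addresses its own 2 GB directly.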

In addition to the hardware, the RAIN project aims to make creating new high-performance applications approachable for people with little or no supercomputer experience. My goal is to do for supercomputers what the personal computer did for mainframes. This means more than putting the box on someone's desk; it also means giving them the tools to use it the way they want to.

The design has gone through many revisions, and I've recently abandoned some of my own work in favor of the PINE64 Clusterboard. I didn't take this change lightly, as my designs for assembling arrays of PINE A64 boards are more flexible and scalable than the Clusterboard. But the board suits the Mark II's design so well, and meets my goals for the machine with so little compromise, that it's the obvious choice.

This means I can get the machine operational faster and end up with a design that is much easier for others to reproduce (sticking to off-the-shelf components is one of the design goals for Mark II). It also means I can turn my attention to the software side of the system sooner, and I think that's where I have the most value to provide anyway.

jason.gullickson

cb22

Dave's Dev Lab

Mastro Gippo

Does this serve a different use case than the high-performance computing services that Amazon and Microsoft offer through AWS and Azure?